Page Summary

- The Recording and Playback API lets AR experiences be viewed anywhere, at any time, by recording AR sessions to an MP4 file.

- The feature benefits developers, by simplifying testing and reducing iteration time, and end users, by letting them interact with AR content outside of real-time constraints.

- Recorded AR sessions are saved as MP4 files that contain video streams and IMU data and can include custom metadata. The files can be viewed with compatible video players.

- ARCore is required to record sessions but not to view them: any MP4-compatible video player that can handle the additional data can play a recording.

- A recording captures a primary CPU image video, optionally a high-resolution CPU image video, and a camera depth map visualization, along with API call events that include sensor data and, in some cases, location information.

Platform-specific guides

Android (Kotlin/Java)

Android NDK (C)

Unity (AR Foundation)

Unreal Engine

The vast majority of Augmented Reality experiences are “real-time.” They require users to be at a certain place at a certain time, with their phone set in a special AR mode and opened to an AR app. For example, if a user wanted to see how an AR couch looks in their living room, they would have to “place” the couch in the on-screen environment while they are physically in the room.

The Recording and Playback API does away with this “real-time” requirement, enabling you to create AR experiences that can be viewed anywhere, at any time. The Recording API stores a camera’s video stream, IMU data, and any other custom metadata you choose to save in an MP4 file. You can then feed these recordings to ARCore through the Playback API, which treats the MP4 just like a live session feed. You can still use a live camera session, but with this API, your AR apps can opt to use a pre-recorded MP4 instead.

End users can also take advantage of this feature. Wherever they are, they can open a video recorded with the Recording and Playback API from their device’s gallery and add or play back AR objects, effects, and filters. With this feature, users can do their AR shopping while commuting to the office on a train, or while lounging in bed.

Use cases for developing with the Recording and Playback API

The Recording and Playback API removes the time and space constraints of building AR apps. Here are some ways you can use it in your own projects.

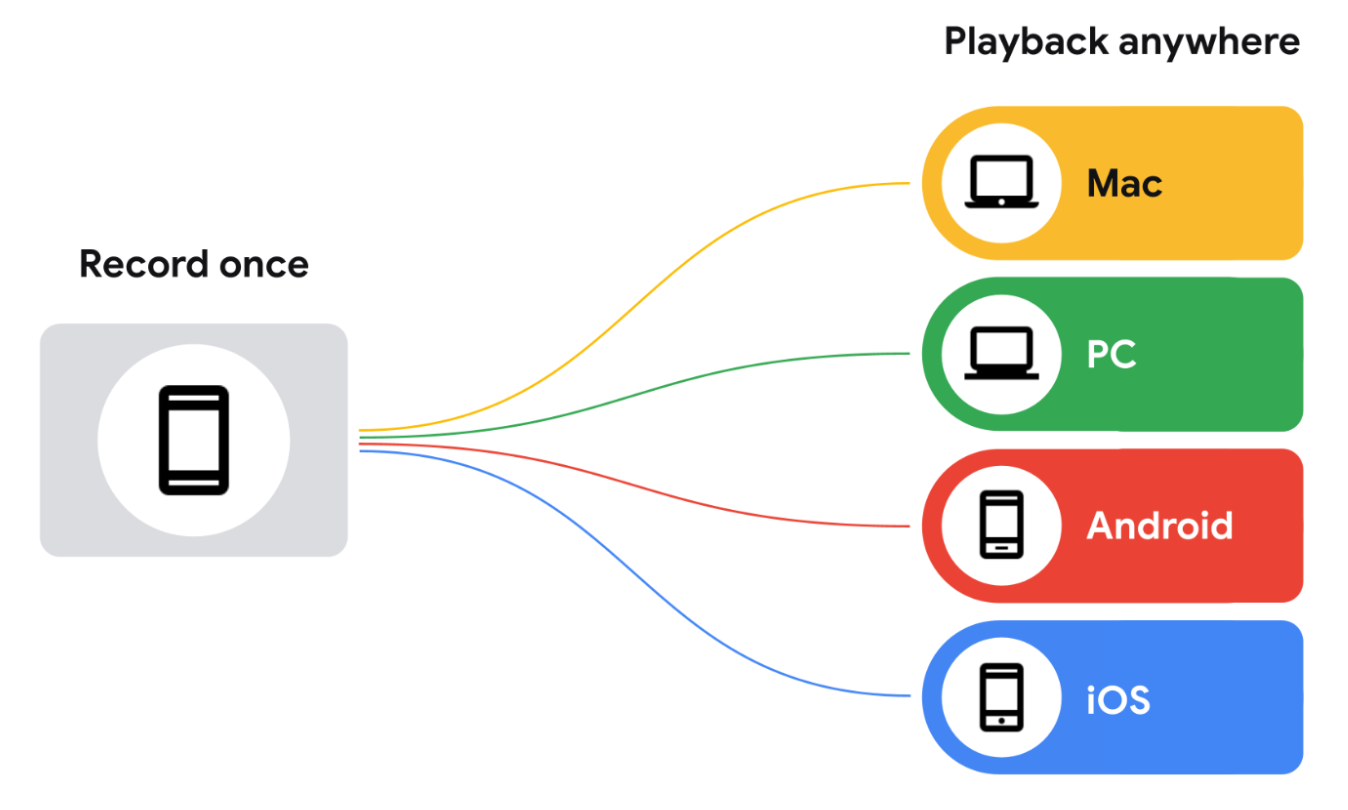

Record once, test anywhere

Instead of physically going to a location every time you need to test out an AR feature, you can record a video using the Recording API and then play it back using any compatible device. Building an experience in a shopping mall? There’s no need to go there every time you want to test a change. Simply record your visit once and then iterate and develop from the comfort of your own desk.

Reduce iteration time

Instead of recording a separate video on every Android device you want to support, for every scenario you want to test, you can record each scenario once and play it back on multiple devices during the iteration phase.

Reduce manual test burden across development teams

Instead of creating custom datasets for every new feature, leverage pre-recorded datasets while launching new features that incorporate depth or the latest tracking improvements from ARCore.

Device compatibility

You’ll need ARCore to record data with the Recording and Playback API, but you won’t need it to play recordings back. MP4s recorded using this feature are essentially video files with extra data, so they can be viewed in any MP4-compatible video player. You can inspect them with Android’s ExoPlayer, or with any compatible player that can both demux MP4s and manage the additional data added by ARCore.

How video and AR data are recorded for playback

ARCore saves recorded sessions to MP4 files on the target device. These files contain multiple video tracks and other miscellaneous data. Once these sessions are saved, you can point your app to use this data in place of a live camera session.

What’s in a recording?

ARCore captures the following data. The video streams are encoded as H.264, and you can view them in any MP4-compatible video player that can switch tracks. The highest-resolution track is first in the list, because some MP4-compatible video players automatically play the first track without letting you choose which video track to play.
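To see which tracks a recording contains, and in what order, you can walk the MP4’s box structure much as a demuxer does. The sketch below is a hypothetical `Mp4TrackLister` class, not part of ARCore or any player library. It assumes the standard ISO base media file format layout (`moov` → `trak` → `mdia` → `hdlr`) and reports each track’s four-character handler type (for example `vide` for video) in file order. It does not decode video or interpret ARCore’s additional data, and this page does not say which handler types ARCore uses for its non-video data.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

/** Hypothetical helper (not an ARCore API): lists MP4 track handler types in file order. */
public class Mp4TrackLister {
    // Container boxes we descend into on the way to each track's handler ("hdlr") box.
    private static final Set<String> CONTAINERS = Set.of("moov", "trak", "mdia");

    public static List<String> listTrackHandlers(ByteBuffer buf) {
        List<String> handlers = new ArrayList<>();
        walk(buf, 0, buf.limit(), handlers);
        return handlers;
    }

    private static void walk(ByteBuffer buf, int start, int end, List<String> out) {
        int pos = start;
        while (pos + 8 <= end) {
            // Box header: 32-bit big-endian size, then a four-character type.
            long size = Integer.toUnsignedLong(buf.getInt(pos));
            String type = fourCC(buf, pos + 4);
            int header = 8;
            if (size == 1) {                  // 64-bit "largesize" follows the type field
                if (pos + 16 > end) break;
                size = buf.getLong(pos + 8);
                header = 16;
            } else if (size == 0) {           // box extends to the end of the enclosing range
                size = end - pos;
            }
            if (size < header || pos + size > end) {
                break;                        // malformed or truncated box: stop at this level
            }
            int boxEnd = (int) (pos + size);
            if (CONTAINERS.contains(type)) {
                walk(buf, pos + header, boxEnd, out);
            } else if (type.equals("hdlr") && pos + header + 12 <= boxEnd) {
                // hdlr payload: version/flags (4 bytes), pre_defined (4), handler_type (4), ...
                out.add(fourCC(buf, pos + header + 8));
            }
            pos = boxEnd;
        }
    }

    private static String fourCC(ByteBuffer buf, int offset) {
        byte[] b = new byte[4];
        for (int i = 0; i < 4; i++) {
            b[i] = buf.get(offset + i);
        }
        return new String(b, StandardCharsets.ISO_8859_1);
    }

    public static void main(String[] args) throws Exception {
        ByteBuffer buf = ByteBuffer.wrap(Files.readAllBytes(Path.of(args[0])));
        List<String> handlers = listTrackHandlers(buf);
        for (int i = 0; i < handlers.size(); i++) {
            System.out.println("track " + i + ": " + handlers.get(i));
        }
    }
}
```

You could run it with `java Mp4TrackLister.java recording.mp4` (the file name is a placeholder). Reading the whole file into memory keeps the sketch short; a real tool would read box headers from a stream instead.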

Primary video track (CPU image track)

The primary video track records the environment or scene for later playback. By default, ARCore records the 640x480 (VGA) CPU image that's used for motion tracking as the primary video stream.

ARCore does not capture the (high-resolution) GPU texture that is rendered to the screen as the passthrough camera image.

If you want a high-resolution image stream to be available during playback, you must select a camera config that provides a CPU image at the desired resolution. In this case:

- ARCore will request both the 640x480 (VGA) CPU image that it requires for motion tracking and the high-resolution CPU image specified by the configured camera config.

- Capturing the second CPU image stream may affect app performance, and different devices may be affected differently.

- During playback, ARCore will use the high-resolution CPU image captured during recording as the GPU texture.

- The high-resolution CPU image will become the default video stream in the MP4 recording.

The camera config selected during recording determines the CPU image and the primary video stream in the recording. If you don't select a camera config with a high-resolution CPU image, the 640x480 (VGA) CPU image video will be the first track in the file and will play by default, regardless of which video player you use.
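The track-ordering rule above can be restated as a small model. The hypothetical `RecordedCpuStreams.recordedStreams` helper below is not an ARCore API; it only encodes what this page describes. It assumes that “high resolution” means a CPU image with more pixels than 640x480, and that both requested CPU image streams are written to the file. It returns the recorded CPU image streams with the one that becomes the first, default video track listed first.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of which CPU image streams a recording contains; not an ARCore API. */
public final class RecordedCpuStreams {
    // ARCore always needs the 640x480 (VGA) CPU image for motion tracking.
    public static final int VGA_WIDTH = 640;
    public static final int VGA_HEIGHT = 480;

    private RecordedCpuStreams() {}

    /**
     * Returns the recorded CPU image streams as "WIDTHxHEIGHT" strings, primary (default) track first.
     * Assumption: a camera config counts as high resolution when its CPU image has more pixels than VGA.
     */
    public static List<String> recordedStreams(int cpuWidth, int cpuHeight) {
        List<String> streams = new ArrayList<>();
        if ((long) cpuWidth * cpuHeight > (long) VGA_WIDTH * VGA_HEIGHT) {
            // The high-resolution CPU image is captured in addition to VGA and becomes the default stream.
            streams.add(cpuWidth + "x" + cpuHeight);
        }
        // The VGA stream is recorded either way; without a high-resolution config it is the first track.
        streams.add(VGA_WIDTH + "x" + VGA_HEIGHT);
        return streams;
    }

    public static void main(String[] args) {
        System.out.println(recordedStreams(640, 480));   // prints [640x480]
        System.out.println(recordedStreams(1920, 1080)); // prints [1920x1080, 640x480]
    }
}
```

The model deliberately ignores the depth visualization track and any custom data, since the rule on this page concerns only the CPU image streams.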

Camera depth map visualization

This is a video track representing the camera's depth map, recorded from the device's hardware depth sensor, such as a time-of-flight (ToF) sensor, and converted to RGB channel values. Use this video only for preview purposes.

API call events

ARCore records measurements from the device's gyroscope and accelerometer sensors. It also records other data, some of which may be sensitive:

- Dataset format versions

- ARCore SDK version

- Google Play Services for AR version

- Device fingerprint (the output of adb shell getprop ro.build.fingerprint)

- Additional information about sensors used for AR tracking

- When using the ARCore Geospatial API, the device's estimated location, magnetometer readings, and compass readings