Page Summary

- The Raw Depth API offers more accurate depth data than the full Depth API, though it doesn't cover every pixel and requires a matching confidence image for processing.

- Both the Raw Depth API and the full Depth API work on devices supporting the Depth API and utilize hardware depth sensors if available, but do not require them.

- While the Raw Depth API provides higher accuracy for some pixels and includes a confidence image, the full Depth API provides depth estimates for all pixels but may be less accurate due to smoothing and interpolation.

- Confidence images from the Raw Depth API indicate accuracy per pixel, with higher confidence in textured areas and potentially near the camera if a hardware sensor is present.

- The Raw Depth API has about half the compute cost of the full Depth API and is useful for AR experiences requiring high depth accuracy, such as 3D reconstruction or measurement.

The Raw Depth API provides depth data for a camera image that has higher accuracy than full Depth API data, but does not always cover every pixel. Raw depth images, along with their matching confidence images, can also be further processed, allowing apps to use only the depth data that has sufficient accuracy for their individual use case.

Device compatibility

Raw Depth is available on all devices that support the Depth API. The Raw Depth API, like the full Depth API, does not require a supported hardware depth sensor, such as a time-of-flight (ToF) sensor. However, both the Raw Depth API and the full Depth API make use of any supported hardware sensors that a device may have.

Raw Depth API vs full Depth API

The Raw Depth API provides depth estimates with higher accuracy, but raw depth images may not include depth estimates for all pixels in the camera image. In contrast, the full Depth API provides estimated depth for every pixel, but per-pixel depth data may be less accurate due to smoothing and interpolation of depth estimates. The format and size of depth images are the same across both APIs. Only the content differs.

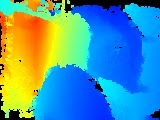

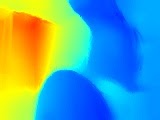

The following table illustrates the differences between the Raw Depth API and the full Depth API using an image of a chair and a table in a kitchen.

| API | Returns | Camera image | Depth image | Confidence image |
|---|---|---|---|---|
| Raw Depth API | | | | |
| Full Depth API | | | | N/A |

Confidence images

In confidence images returned by the Raw Depth API, lighter pixels have higher confidence values, with white pixels representing full confidence and black pixels representing no confidence. In general, regions in the camera image that have more texture, such as a tree, will have higher raw depth confidence than regions that don’t, such as a blank wall. Surfaces with no texture usually yield a confidence of zero.

If the target device has a supported hardware depth sensor, confidence in areas of the image close enough to the camera will likely be higher, even on textureless surfaces.
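As a minimal sketch of how an app might keep only depth values it trusts, the following Java helper masks out pixels whose confidence falls below a threshold. It works on plain arrays rather than ARCore image objects. The class name `ConfidenceFilter`, the `minConfidence` parameter, and the choice to write 0 for rejected pixels are assumptions made for illustration, as is the 8-bit confidence range of 0 (no confidence) to 255 (full confidence).

```java
/** Hypothetical helper for illustration; not part of ARCore. */
final class ConfidenceFilter {
  private ConfidenceFilter() {}

  /**
   * Returns a copy of the depth values in which every pixel whose confidence
   * is below minConfidence is replaced by 0 (used here to mean "no depth").
   * Both arrays are assumed to be tightly packed, one entry per pixel.
   */
  static short[] maskLowConfidence(short[] depth, byte[] confidence, int minConfidence) {
    if (depth.length != confidence.length) {
      throw new IllegalArgumentException("depth and confidence must have the same size");
    }
    short[] filtered = new short[depth.length];
    for (int i = 0; i < depth.length; i++) {
      // Java bytes are signed, so mask to read the value as 0..255.
      int pixelConfidence = confidence[i] & 0xFF;
      filtered[i] = pixelConfidence >= minConfidence ? depth[i] : 0;
    }
    return filtered;
  }
}
```

A suitable threshold depends on the use case: a measurement feature might accept only near-white confidence pixels, while a coarse occupancy map could tolerate lower values.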

Compute cost

The Raw Depth API has about half the compute cost of the full Depth API.

Use cases

With the Raw Depth API, you can obtain depth images that provide a more detailed representation of the geometry of the objects in the scene. Raw depth data can be useful when creating AR experiences where increased depth accuracy and detail are needed for geometry-understanding tasks. Some use cases include:

- 3D reconstruction

- Measurement

- Shape detection
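To make the measurement use case concrete, here is a rough Java sketch that turns two depth pixels into 3D points and computes the distance between them using a standard pinhole camera model. Everything in it is an assumption for illustration: the class name `DepthMeasurement`, the intrinsics parameters (`fx`, `fy`, `cx`, `cy`, which would need to be expressed at the depth image's resolution), and the interpretation of depth values as millimeters measured along the camera's optical axis.

```java
/** Hypothetical helper for illustration; not part of ARCore. */
final class DepthMeasurement {
  private DepthMeasurement() {}

  /**
   * Converts a depth pixel (u, v) with depth in millimeters into a
   * camera-space point {x, y, z} in meters, using a pinhole model.
   */
  static double[] unproject(
      double u, double v, int depthMm, double fx, double fy, double cx, double cy) {
    double z = depthMm / 1000.0; // millimeters to meters
    double x = (u - cx) * z / fx;
    double y = (v - cy) * z / fy;
    return new double[] {x, y, z};
  }

  /** Euclidean distance in meters between two camera-space points. */
  static double distanceMeters(double[] a, double[] b) {
    double dx = a[0] - b[0];
    double dy = a[1] - b[1];
    double dz = a[2] - b[2];
    return Math.sqrt(dx * dx + dy * dy + dz * dz);
  }
}
```

In practice, a measurement feature would likely skip pixels with a depth of 0 or low confidence before unprojecting them.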

Prerequisites

Make sure that you understand fundamental AR concepts and how to configure an ARCore session before proceeding.

Enable Depth

In a new ARCore session, check whether a user's device supports Depth. Not all ARCore-compatible devices support the Depth API due to processing power constraints. To save resources, depth is disabled by default on ARCore. Enable depth mode to have your app use the Depth API.

Java

```java
Config config = session.getConfig();

// Check whether the user's device supports Depth.
if (session.isDepthModeSupported(Config.DepthMode.AUTOMATIC)) {
  // Enable depth mode.
  config.setDepthMode(Config.DepthMode.AUTOMATIC);
}
session.configure(config);
```

Kotlin

```kotlin
if (session.isDepthModeSupported(Config.DepthMode.AUTOMATIC)) {
  session.configure(session.config.apply { depthMode = Config.DepthMode.AUTOMATIC })
}
```

Acquire the latest raw depth and confidence images

Call frame.acquireRawDepthImage16Bits() to acquire the latest raw depth image. Not all image pixels returned via the Raw Depth API will contain depth data, and not every ARCore frame will contain a new raw depth image. To determine whether the raw depth image for the current frame is new, compare its timestamp with the timestamp of the previous raw depth image. If the timestamps are different, the raw depth image is based on new depth data. Otherwise, the depth image is a reprojection of previous depth data.
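The comparison against the previous raw depth image's timestamp can be sketched with a small state holder. This is only an illustration: the class `RawDepthFreshness` and its method are hypothetical names, and the timestamp is assumed to be the `long` value returned by the raw depth image's `getTimestamp()`.

```java
/** Hypothetical helper for illustration; not part of ARCore. */
final class RawDepthFreshness {
  private boolean hasSeenImage = false;
  private long lastTimestamp = 0L;

  /**
   * Returns true if the given raw depth image timestamp differs from the
   * previously observed one (or if no image has been observed yet), then
   * remembers the timestamp for the next call.
   */
  boolean isNewDepthData(long rawDepthTimestamp) {
    boolean isNew = !hasSeenImage || rawDepthTimestamp != lastTimestamp;
    hasSeenImage = true;
    lastTimestamp = rawDepthTimestamp;
    return isNew;
  }
}
```

An app could keep one instance per session and call `isNewDepthData(rawDepth.getTimestamp())` once per frame, skipping expensive processing when it returns `false`.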

Call frame.acquireRawDepthConfidenceImage() to acquire the confidence image. You can use the confidence image to check the accuracy of each raw depth pixel. Confidence images are returned in Y8 format. Each pixel is an 8-bit unsigned integer. 0 indicates the least confidence, while 255 indicates the most.

Java

```java
// Use try-with-resources, so that images are released automatically.
try (
    // Depth image is in uint16, at GPU aspect ratio, in native orientation.
    Image rawDepth = frame.acquireRawDepthImage16Bits();
    // Confidence image is in uint8, matching the depth image size.
    Image rawDepthConfidence = frame.acquireRawDepthConfidenceImage();
) {
  // Compare timestamps to determine whether depth is based on new
  // depth data, or is a reprojection based on device movement.
  boolean thisFrameHasNewDepthData = frame.getTimestamp() == rawDepth.getTimestamp();
  if (thisFrameHasNewDepthData) {
    ByteBuffer depthData = rawDepth.getPlanes()[0].getBuffer();
    ByteBuffer confidenceData = rawDepthConfidence.getPlanes()[0].getBuffer();
    int width = rawDepth.getWidth();
    int height = rawDepth.getHeight();
    someReconstructionPipeline.integrateNewImage(depthData, confidenceData, width, height);
  }
} catch (NotYetAvailableException e) {
  // Depth image is not (yet) available.
}
```

Kotlin

```kotlin
try {
  // Depth image is in uint16, at GPU aspect ratio, in native orientation.
  frame.acquireRawDepthImage16Bits().use { rawDepth ->
    // Confidence image is in uint8, matching the depth image size.
    frame.acquireRawDepthConfidenceImage().use { rawDepthConfidence ->
      // Compare timestamps to determine whether depth is based on new
      // depth data, or is a reprojection based on device movement.
      val thisFrameHasNewDepthData = frame.timestamp == rawDepth.timestamp
      if (thisFrameHasNewDepthData) {
        val depthData = rawDepth.planes[0].buffer
        val confidenceData = rawDepthConfidence.planes[0].buffer
        val width = rawDepth.width
        val height = rawDepth.height
        someReconstructionPipeline.integrateNewImage(
          depthData,
          confidenceData,
          width = width,
          height = height
        )
      }
    }
  }
} catch (e: NotYetAvailableException) {
  // Depth image is not (yet) available.
}
```
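Once the two buffers are in hand, individual pixels can be read out of them. The following Java sketch shows one way to do that, taking row and pixel strides into account. The class `DepthPixels` and its methods are hypothetical, the use of the platform's native byte order is an assumption, and the unit of the returned depth value (ARCore documents 16-bit depth in millimeters) should be verified against the API reference.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Hypothetical helper for illustration; not part of ARCore. */
final class DepthPixels {
  private DepthPixels() {}

  /** Reads the unsigned 16-bit depth value at pixel (x, y). */
  static int depthAt(ByteBuffer depthData, int x, int y, int rowStride, int pixelStride) {
    int byteIndex = y * rowStride + x * pixelStride;
    // Work on a duplicate so the caller's buffer state is left untouched.
    // Assumption: the data is stored in the platform's native byte order.
    short raw = depthData.duplicate().order(ByteOrder.nativeOrder()).getShort(byteIndex);
    // Java shorts are signed, so mask to read the value as 0..65535.
    return raw & 0xFFFF;
  }

  /** Reads the unsigned 8-bit confidence value (0..255) at pixel (x, y). */
  static int confidenceAt(
      ByteBuffer confidenceData, int x, int y, int rowStride, int pixelStride) {
    return confidenceData.get(y * rowStride + x * pixelStride) & 0xFF;
  }
}
```

With an Android `Image`, the strides would come from the plane itself, for example `rawDepth.getPlanes()[0].getRowStride()` and `getPixelStride()`. Because rows may be padded, computing the index from the strides is safer than assuming `width * 2` bytes per row.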

What’s next

- Learn how to build your own app with Raw Depth by going through the Raw Depth codelab.