Page Summary

- Augmented Faces can be used in your apps by configuring the ARCore session and getting access to the detected face.
- To configure the ARCore session for Augmented Faces, select the front camera and enable AugmentedFaceMode.
- Access detected faces by getting a Trackable for each frame and checking its TrackingState.

Learn how to use the Augmented Faces feature in your own apps.

Prerequisites

Make sure that you understand fundamental AR concepts and how to configure an ARCore session before proceeding.

Using Augmented Faces in Android

Configure the ARCore session

Select the front camera in an existing ARCore session to start using Augmented Faces. Note that selecting the front camera will cause a number of changes in ARCore behavior.

Java

    // Set a camera configuration that uses the front-facing camera.
    CameraConfigFilter filter =
        new CameraConfigFilter(session).setFacingDirection(CameraConfig.FacingDirection.FRONT);
    CameraConfig cameraConfig = session.getSupportedCameraConfigs(filter).get(0);
    session.setCameraConfig(cameraConfig);

Kotlin

    // Set a camera configuration that uses the front-facing camera.
    val filter = CameraConfigFilter(session).setFacingDirection(CameraConfig.FacingDirection.FRONT)
    val cameraConfig = session.getSupportedCameraConfigs(filter)[0]
    session.cameraConfig = cameraConfig

Enable AugmentedFaceMode:

Java

    Config config = new Config(session);
    config.setAugmentedFaceMode(Config.AugmentedFaceMode.MESH3D);
    session.configure(config);

Kotlin

    val config = Config(session)
    config.augmentedFaceMode = Config.AugmentedFaceMode.MESH3D
    session.configure(config)

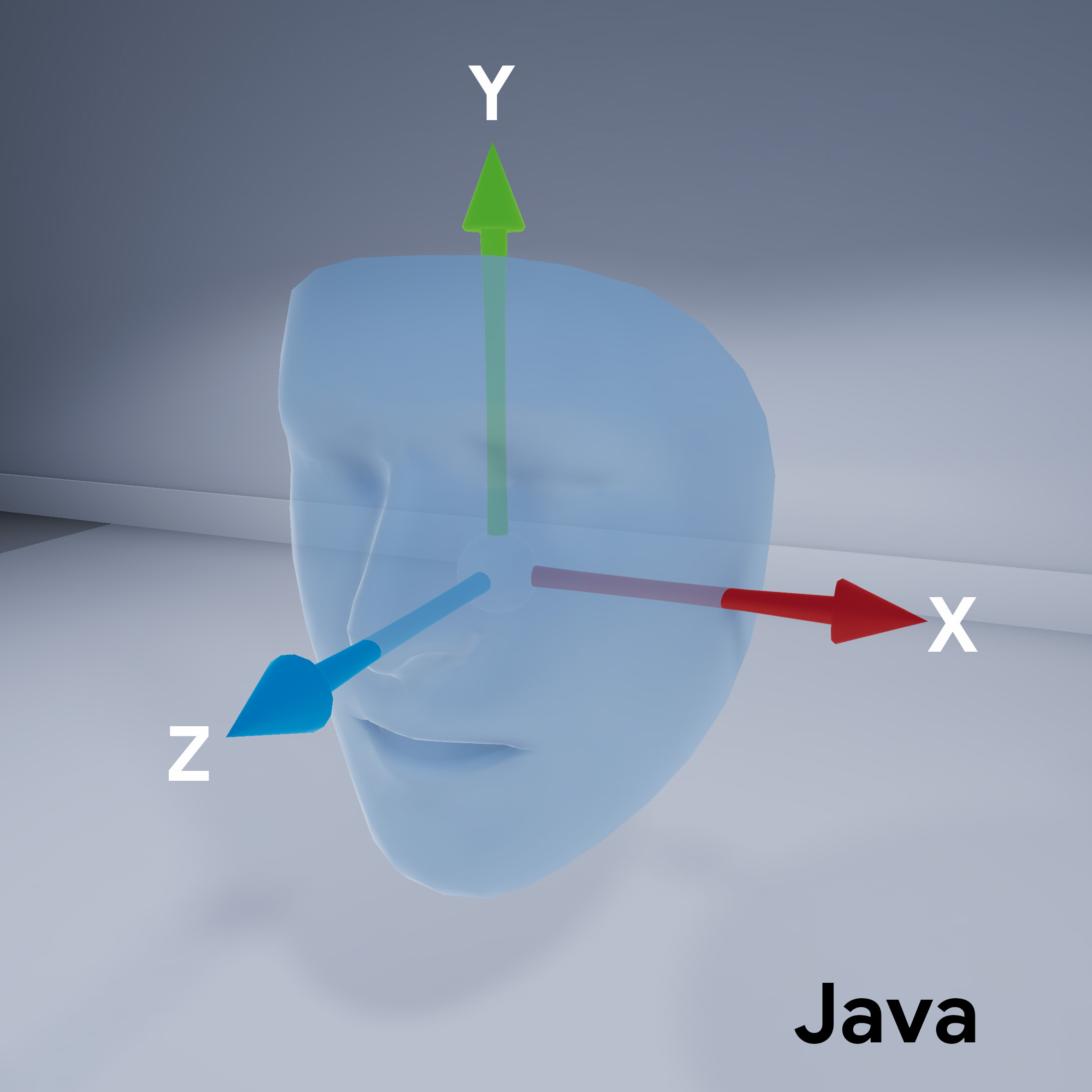

Face mesh orientation

Note the orientation of the face mesh:

Access the detected face

Get a Trackable for each frame. A Trackable is something that ARCore can track and that Anchors can be attached to.

Java

    // ARCore's face detection works best on upright faces, relative to gravity.
    Collection<AugmentedFace> faces = session.getAllTrackables(AugmentedFace.class);

Kotlin

    // ARCore's face detection works best on upright faces, relative to gravity.
    val faces = session.getAllTrackables(AugmentedFace::class.java)

Get the TrackingState for each Trackable. If it is TRACKING, then its pose is currently known by ARCore.

Java

    for (AugmentedFace face : faces) {
      if (face.getTrackingState() == TrackingState.TRACKING) {
        // UVs and indices can be cached as they do not change during the session.
        FloatBuffer uvs = face.getMeshTextureCoordinates();
        ShortBuffer indices = face.getMeshTriangleIndices();

        // Center and region poses, mesh vertices, and normals are updated each frame.
        Pose facePose = face.getCenterPose();
        FloatBuffer faceVertices = face.getMeshVertices();
        FloatBuffer faceNormals = face.getMeshNormals();

        // Render the face using these values with OpenGL.
      }
    }

Kotlin

    faces.forEach { face ->
      if (face.trackingState == TrackingState.TRACKING) {
        // UVs and indices can be cached as they do not change during the session.
        val uvs = face.meshTextureCoordinates
        val indices = face.meshTriangleIndices

        // Center and region poses, mesh vertices, and normals are updated each frame.
        val facePose = face.centerPose
        val faceVertices = face.meshVertices
        val faceNormals = face.meshNormals

        // Render the face using these values with OpenGL.
      }
    }
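The comments in the samples above note that UVs and indices can be cached because they do not change during the session. One possible way to act on that is sketched below. FaceMeshCache is a hypothetical helper, not part of ARCore, and it uses only java.nio so that it runs without the SDK. It assumes the UV buffer holds two floats per vertex and the index buffer holds three shorts per triangle. Copying the buffers, rather than holding on to the references the session returned, is a conservative choice made for this sketch and not a documented requirement.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;
import java.nio.ShortBuffer;

/** Hypothetical helper (not an ARCore class): keeps copies of the session-constant mesh data. */
final class FaceMeshCache {
  private FloatBuffer uvs;      // assumed layout: (u, v) per vertex
  private ShortBuffer indices;  // assumed layout: three indices per triangle

  /** Returns true once both static buffers have been copied. */
  boolean isPopulated() {
    return uvs != null && indices != null;
  }

  /** Copies the static buffers on the first call; later calls leave the cache unchanged. */
  void populateOnce(FloatBuffer sourceUvs, ShortBuffer sourceIndices) {
    if (isPopulated()) {
      return;
    }
    uvs = copy(sourceUvs);
    indices = copy(sourceIndices);
  }

  /** Returns a view with its own position, so callers cannot disturb the cached buffer's state. */
  FloatBuffer uvs() {
    return uvs.duplicate();
  }

  ShortBuffer indices() {
    return indices.duplicate();
  }

  int vertexCount() {
    return uvs.limit() / 2;
  }

  int triangleCount() {
    return indices.limit() / 3;
  }

  private static FloatBuffer copy(FloatBuffer src) {
    FloatBuffer view = src.duplicate();  // leave the caller's position untouched
    view.rewind();
    FloatBuffer dst = ByteBuffer.allocateDirect(view.remaining() * Float.BYTES)
        .order(ByteOrder.nativeOrder())
        .asFloatBuffer();
    dst.put(view);
    dst.rewind();
    return dst;
  }

  private static ShortBuffer copy(ShortBuffer src) {
    ShortBuffer view = src.duplicate();
    view.rewind();
    ShortBuffer dst = ByteBuffer.allocateDirect(view.remaining() * Short.BYTES)
        .order(ByteOrder.nativeOrder())
        .asShortBuffer();
    dst.put(view);
    dst.rewind();
    return dst;
  }
}
```

In the per-frame loop, a call such as cache.populateOnce(face.getMeshTextureCoordinates(), face.getMeshTriangleIndices()) inside the TRACKING branch would fill the cache on the first tracked frame. The center pose, vertices, and normals would still be read from the face on every frame, since those are updated each frame.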