Page Summary

- This guide explains how to build VR experiences for iOS using the Google VR SDK and Cardboard.

- The SDK offers tools for stereoscopic rendering, head tracking, spatial audio, and user interaction via the viewer button.

- The provided "Treasure Hunt" demo app showcases core SDK features like object selection, audio effects, and 3D navigation.

- Developers need an iPhone with iOS 8+, Cardboard viewer, Xcode 7.1+, and CocoaPods to get started.

- The guide includes detailed code walkthroughs for key functionalities, including UI setup, rendering, input handling, and audio integration.

This guide shows you how to use the Google VR SDK for iOS to create your own Virtual Reality (VR) experiences.

You can use Google Cardboard to turn your smartphone into a VR platform. Your phone can display 3D scenes with stereoscopic rendering, track and react to head movements, and let users interact with apps by detecting when they press the viewer button.

The Google VR SDK for iOS contains tools for spatial audio that go far beyond simple left/right audio cues to offer 360 degrees of sound. You can also control the tonal quality of the sound. For example, you can make a conversation in a small spaceship sound drastically different from one in a large, underground cave.

This tutorial uses "Treasure Hunt", a demo app that demonstrates the core features of the Google VR SDK. In the game, users look around a virtual world to find and collect objects. It shows you how to:

- Detect button presses

- Determine when the user is looking at something

- Set up spatial audio

- Render stereoscopic images by rendering a different view for each eye
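Rendering a different view for each eye starts by giving each eye its own region of the screen. The sketch below, in plain C (which is also valid Objective-C), assumes a simple side-by-side split of a landscape framebuffer. The `Eye` and `Viewport` types and the `viewport_for_eye` function are hypothetical; in the SDK, `viewportForEye:` on `GVRHeadTransform` supplies the actual rectangle, so prefer the values it returns.

```c
/* Hypothetical eye identifiers; not the SDK's GVREye values. */
typedef enum { EYE_LEFT, EYE_RIGHT, EYE_CENTER } Eye;

/* Viewport in pixels, origin at the bottom-left corner as glViewport expects. */
typedef struct {
  int x, y, width, height;
} Viewport;

/* Assumes a side-by-side split of a landscape framebuffer: the left eye gets
   the left half, the right eye the right half, and the center eye (used when
   VR mode is off) the whole surface. */
static Viewport viewport_for_eye(Eye eye, int fb_width, int fb_height) {
  const int half = fb_width / 2;
  Viewport v = {0, 0, fb_width, fb_height};
  switch (eye) {
    case EYE_LEFT:
      v.width = half;
      break;
    case EYE_RIGHT:
      v.x = half;
      v.width = fb_width - half; /* takes the extra pixel when the width is odd */
      break;
    case EYE_CENTER:
      break;
  }
  return v;
}
```

For a 1920-by-1080 framebuffer, this gives the left eye x = 0 and width 960, the right eye x = 960 and width 960, and the center eye the full surface.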

Set up your development environment

Hardware requirements:

- You'll need an iPhone running iOS 8 or higher and a Cardboard viewer.

Software requirements:

- You'll need Xcode 7.1 or higher and CocoaPods.

Download and build the demo app

Clone the Google VR SDK and the Treasure Hunt demo app from GitHub by running this command:

git clone https://github.com/googlevr/gvr-ios-sdk.git

In a Terminal window, navigate to the

Samples/TreasureHuntfolder, and update the CocoaPod dependencies by running this command:pod update

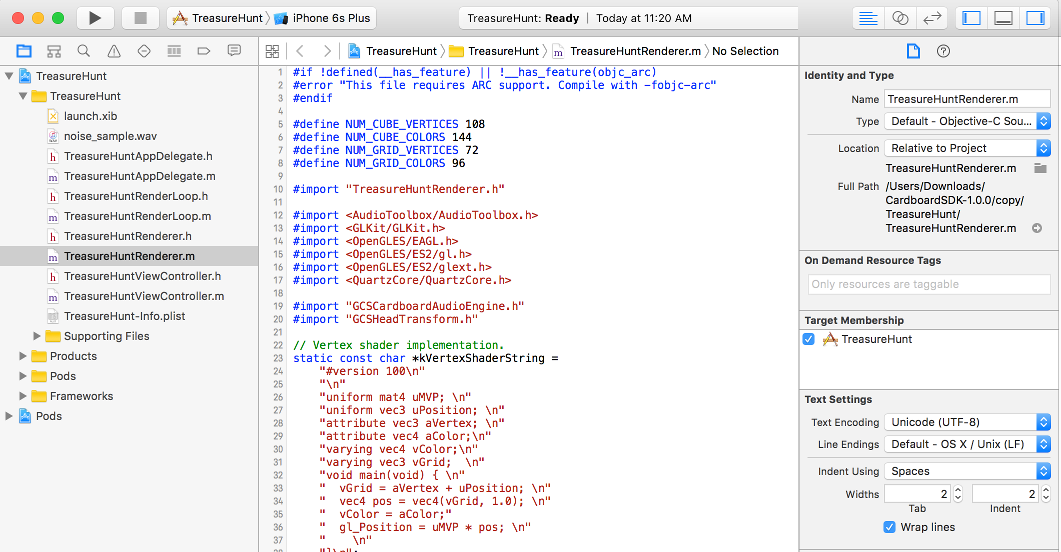

In Xcode, open the TreasureHunt workspace (

Samples/TreasureHunt/TreasureHunt.xcworkspace), and click Run.

Play the game

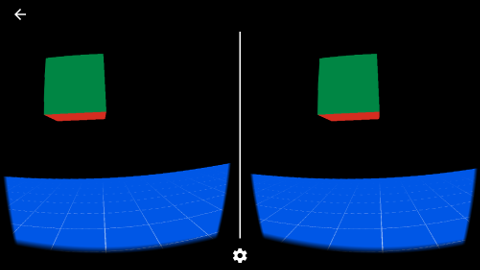

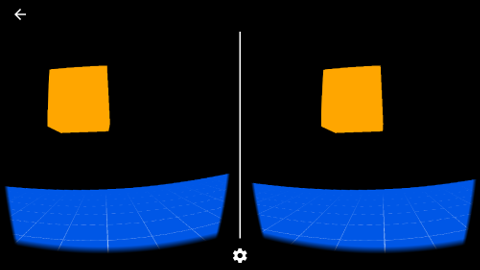

In "Treasure Hunt", you look for and collect cubes in 3D space.

Make sure to wear headphones to experience the game's spatial audio.

To find and collect a cube:

- Move your head in any direction until you see a cube.

- Look directly at the cube. This causes it to turn orange.

- Press the Cardboard viewer button to collect the cube.

Code walkthrough

This code walkthrough shows you how Treasure Hunt handles the following tasks:

- Implement a UIViewController to host GVRCardboardView.

- Define a renderer to implement the GVRCardboardViewDelegate protocol.

- Add a render loop using the CADisplayLink object.

- Handle inputs.

Implement a UIViewController to host GVRCardboardView

The TreasureHunt app implements a UIViewController subclass, TreasureHuntViewController, which owns an instance of the GVRCardboardView class. The view controller creates an instance of the TreasureHuntRenderer class and sets it as the GVRCardboardViewDelegate of the GVRCardboardView. In addition, the app provides a render loop, the TreasureHuntRenderLoop class, that drives the -render method of the GVRCardboardView.

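- (void)loadView {
  // Create the renderer and register this view controller as its delegate.
  _treasureHuntRenderer = [[TreasureHuntRenderer alloc] init];
  _treasureHuntRenderer.delegate = self;

  // Create the Cardboard view and let the renderer handle its drawing callbacks.
  _cardboardView = [[GVRCardboardView alloc] initWithFrame:CGRectZero];
  _cardboardView.delegate = _treasureHuntRenderer;
  ...
  // Render a separate view for each eye.
  _cardboardView.vrModeEnabled = YES;
  ...
  self.view = _cardboardView;
}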

Define a renderer to implement the GVRCardboardViewDelegate protocol

GVRCardboardView provides a drawing surface for your rendering. It coordinates the drawing with your rendering code through the GVRCardboardViewDelegate protocol, so the TreasureHuntRenderer class declares that it conforms to GVRCardboardViewDelegate:

#import "GVRCardboardView.h"

/** TreasureHunt renderer. */
@interface TreasureHuntRenderer : NSObject<GVRCardboardViewDelegate>
@end

Implement the GVRCardboardViewDelegate protocol

To draw the GL content onto GVRCardboardView, TreasureHuntRenderer implements the GVRCardboardViewDelegate protocol, which declares these methods:

@protocol GVRCardboardViewDelegate<NSObject>

- (void)cardboardView:(GVRCardboardView *)cardboardView
         didFireEvent:(GVRUserEvent)event;

- (void)cardboardView:(GVRCardboardView *)cardboardView
     willStartDrawing:(GVRHeadTransform *)headTransform;

- (void)cardboardView:(GVRCardboardView *)cardboardView
     prepareDrawFrame:(GVRHeadTransform *)headTransform;

- (void)cardboardView:(GVRCardboardView *)cardboardView
              drawEye:(GVREye)eye
    withHeadTransform:(GVRHeadTransform *)headTransform;

- (void)cardboardView:(GVRCardboardView *)cardboardView
   shouldPauseDrawing:(BOOL)pause;

@end

Implementations for the willStartDrawing, prepareDrawFrame, and drawEye methods are described below.
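To make the calling pattern concrete, the following plain-C sketch (also valid Objective-C) models the order in which this guide describes the drawing callbacks: `willStartDrawing` once for one-time setup, then on every frame `prepareDrawFrame` followed by `drawEye` for each eye, or for the center eye alone when VR mode is off. `FakeCardboardView`, `DrawDelegate`, `CallLog`, and the callbacks are hypothetical stand-ins, not SDK types, and drawing the left eye before the right is an assumption.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical eye identifiers; not the SDK's GVREye values. */
typedef enum { EYE_LEFT, EYE_RIGHT, EYE_CENTER } Eye;

/* Plain-C mirror of the drawing callbacks in GVRCardboardViewDelegate. */
typedef struct {
  void (*will_start_drawing)(void *ctx);
  void (*prepare_draw_frame)(void *ctx);
  void (*draw_eye)(void *ctx, Eye eye);
  void *ctx;
} DrawDelegate;

/* Hypothetical stand-in for the drawing state of GVRCardboardView. */
typedef struct {
  DrawDelegate delegate;
  bool vr_mode_enabled;
  bool started;
} FakeCardboardView;

/* One call to render: one-time setup on the first frame, then per-frame
   preparation, then one draw per eye (left before right is an assumption). */
static void fake_view_render(FakeCardboardView *view) {
  const DrawDelegate *d = &view->delegate;
  if (!view->started) {
    d->will_start_drawing(d->ctx);
    view->started = true;
  }
  d->prepare_draw_frame(d->ctx);
  if (view->vr_mode_enabled) {
    d->draw_eye(d->ctx, EYE_LEFT);
    d->draw_eye(d->ctx, EYE_RIGHT);
  } else {
    d->draw_eye(d->ctx, EYE_CENTER);
  }
}

/* Example delegate that records each callback as one letter. */
typedef struct {
  char log[64];
  size_t len;
} CallLog;

static void call_log_append(CallLog *calls, char c) {
  if (calls->len + 1 < sizeof calls->log) {
    calls->log[calls->len++] = c;
    calls->log[calls->len] = '\0';
  }
}

static void on_start(void *ctx) { call_log_append(ctx, 'S'); }
static void on_prepare(void *ctx) { call_log_append(ctx, 'P'); }
static void on_draw_eye(void *ctx, Eye eye) {
  call_log_append(ctx, eye == EYE_LEFT ? 'L' : eye == EYE_RIGHT ? 'R' : 'C');
}

static bool call_log_equals(const CallLog *calls, const char *expected) {
  return strcmp(calls->log, expected) == 0;
}
```

Rendering two frames in VR mode and then one with VR mode off records `SPLRPLRPC`: setup happens once, while preparation and per-eye drawing repeat every frame.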

Implement willStartDrawing

To perform one-time GL state initialization, implement -cardboardView:willStartDrawing:. Use this opportunity to load shaders, initialize scene geometry, and bind GL parameters. The app also initializes an instance of the GVRCardboardAudioEngine class here:

- (void)cardboardView:(GVRCardboardView *)cardboardView
     willStartDrawing:(GVRHeadTransform *)headTransform {
  // Load shaders and bind GL attributes.
  // Load mesh and model geometry.

  // Initialize the GVRCardboardAudioEngine.
  _cardboard_audio_engine =
      [[GVRCardboardAudioEngine alloc] initWithRenderingMode:
          kRenderingModeBinauralHighQuality];
  [_cardboard_audio_engine preloadSoundFile:kSampleFilename];
  [_cardboard_audio_engine start];
  ...
  [self spawnCube];
}

Implement prepareDrawFrame

To set up rendering logic before the individual eyes are rendered, implement -cardboardView:prepareDrawFrame:. Per-frame operations that apply to the whole frame rather than to a single eye belong here; it is a good place to update your model and clear the GL state for drawing. In the sample, this method computes the head orientation, passes it to the audio engine, and clears the color and depth buffers:

- (void)cardboardView:(GVRCardboardView *)cardboardView
     prepareDrawFrame:(GVRHeadTransform *)headTransform {
  const GLKMatrix4 head_from_start_matrix = [headTransform headPoseInStartSpace];

  // Update the audio listener's head rotation. Transposing inverts the
  // rotation part of the matrix.
  const GLKQuaternion head_rotation =
      GLKQuaternionMakeWithMatrix4(GLKMatrix4Transpose(head_from_start_matrix));
  [_cardboard_audio_engine setHeadRotation:head_rotation.q[0]
                                         y:head_rotation.q[1]
                                         z:head_rotation.q[2]
                                         w:head_rotation.q[3]];
  // Update the audio engine.
  [_cardboard_audio_engine update];

  // Clear the color and depth buffers. Disable the scissor test first so the
  // clear is not limited to a scissor rect left over from the previous frame.
  glClearColor(0.0f, 0.0f, 0.0f, 1.0f);
  glEnable(GL_DEPTH_TEST);
  glDisable(GL_SCISSOR_TEST);
  glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);
  // drawEye restricts drawing to each eye's region with glScissor.
  glEnable(GL_SCISSOR_TEST);
}

Implement drawEye

The drawEye delegate method provides the core of the rendering code, much like a regular OpenGL ES application.

The following snippet shows how to implement drawEye to get the view transformation matrix for each eye and the perspective transformation matrix. This method is called for each eye. If VR mode is not enabled on the GVRCardboardView, eye is set to the center eye instead, which is useful for monoscopic rendering: it provides a non-VR view of the 3D scene.

- (void)cardboardView:(GVRCardboardView *)cardboardView
              drawEye:(GVREye)eye
    withHeadTransform:(GVRHeadTransform *)headTransform {
  // Restrict the viewport and scissor rect to this eye's region of the screen.
  CGRect viewport = [headTransform viewportForEye:eye];
  glViewport(viewport.origin.x, viewport.origin.y, viewport.size.width,
             viewport.size.height);
  glScissor(viewport.origin.x, viewport.origin.y, viewport.size.width,
            viewport.size.height);

  // Get the head matrix.
  const GLKMatrix4 head_from_start_matrix =
      [headTransform headPoseInStartSpace];

  // Get this eye's matrices.
  GLKMatrix4 projection_matrix = [headTransform
      projectionMatrixForEye:eye near:0.1f far:100.0f];
  GLKMatrix4 eye_from_head_matrix =
      [headTransform eyeFromHeadMatrix:eye];

  // Compute the model view projection matrix.
  GLKMatrix4 model_view_projection_matrix =
      GLKMatrix4Multiply(projection_matrix,
          GLKMatrix4Multiply(eye_from_head_matrix, head_from_start_matrix));

  // Render from this eye.
  [self renderWithModelViewProjectionMatrix:model_view_projection_matrix.m];
}

After returning from this call, GVRCardboardView renders the scene to the display.
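The matrix chain in drawEye reads right to left: a point in start space is moved into head space, then into this eye's space, and is then projected to clip space. The plain-C sketch below (also valid Objective-C) reproduces that composition with column-major matrices. `Mat4`, `mat4_multiply`, `model_view_projection`, and the other helpers are hypothetical names; `mat4_multiply(a, b)` returns `a * b`, the same operand order `GLKMatrix4Multiply` uses.

```c
#include <string.h>

/* Column-major 4x4 matrix, same layout as GLKMatrix4.m. */
typedef struct {
  float m[16];
} Mat4;

static Mat4 mat4_identity(void) {
  Mat4 r;
  memset(r.m, 0, sizeof r.m);
  r.m[0] = r.m[5] = r.m[10] = r.m[15] = 1.0f;
  return r;
}

static Mat4 mat4_translation(float tx, float ty, float tz) {
  Mat4 r = mat4_identity();
  r.m[12] = tx; /* the translation lives in the fourth column */
  r.m[13] = ty;
  r.m[14] = tz;
  return r;
}

/* Returns a * b, so b is applied to a point first. */
static Mat4 mat4_multiply(Mat4 a, Mat4 b) {
  Mat4 c;
  for (int col = 0; col < 4; ++col) {
    for (int row = 0; row < 4; ++row) {
      float sum = 0.0f;
      for (int k = 0; k < 4; ++k) sum += a.m[k * 4 + row] * b.m[col * 4 + k];
      c.m[col * 4 + row] = sum;
    }
  }
  return c;
}

/* Same chain as drawEye: projection * (eye_from_head * head_from_start). */
static Mat4 model_view_projection(Mat4 projection, Mat4 eye_from_head,
                                  Mat4 head_from_start) {
  return mat4_multiply(projection, mat4_multiply(eye_from_head, head_from_start));
}

/* Transforms the point (p[0], p[1], p[2], 1); out receives the homogeneous result. */
static void mat4_transform_point(Mat4 a, const float p[3], float out[4]) {
  for (int row = 0; row < 4; ++row) {
    out[row] = a.m[0 * 4 + row] * p[0] + a.m[1 * 4 + row] * p[1] +
               a.m[2 * 4 + row] * p[2] + a.m[3 * 4 + row];
  }
}
```

As a usage example with a made-up offset (the real value comes from `eyeFromHeadMatrix:`): for a right eye sitting 0.032 units to the +x side of the head, eye_from_head is a translation by -0.032 along x. With identity projection and head matrices, `model_view_projection(mat4_identity(), mat4_translation(-0.032f, 0.0f, 0.0f), mat4_identity())` maps the point (0, 0, -1) to (-0.032, 0, -1, 1), so an object straight ahead appears slightly left of center to that eye.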

Add a render loop using CADisplayLink

The rendering needs to be driven by a render loop that uses CADisplayLink. The TreasureHunt app provides a sample render loop, TreasureHuntRenderLoop, which calls the -render method of the GVRCardboardView class. The TreasureHuntViewController class creates the loop in its -viewWillAppear: method and invalidates it in its -viewDidDisappear: method:

- (void)viewWillAppear:(BOOL)animated {
  [super viewWillAppear:animated];
  // Start the render loop, which repeatedly calls -render on the Cardboard view.
  _renderLoop = [[TreasureHuntRenderLoop alloc]
      initWithRenderTarget:_cardboardView selector:@selector(render)];
}

- (void)viewDidDisappear:(BOOL)animated {
  [super viewDidDisappear:animated];
  // Stop the render loop once the view is no longer visible.
  [_renderLoop invalidate];
  _renderLoop = nil;
}
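CADisplayLink itself is an iOS API, but the lifecycle above can be sketched in plain C (also valid Objective-C): a loop holds a render target and a callback, renders on every tick while it is valid, and does nothing once invalidated. `RenderLoop`, `render_loop_make`, `render_loop_tick`, `render_loop_invalidate`, and `count_frame` are hypothetical names; on iOS, a CADisplayLink callback would play the role of `render_loop_tick`, firing once per display refresh.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-in for TreasureHuntRenderLoop. */
typedef struct {
  void *target;            /* what gets rendered, e.g. the Cardboard view */
  void (*render)(void *);  /* plays the role of @selector(render) */
  bool valid;
} RenderLoop;

static RenderLoop render_loop_make(void *target, void (*render)(void *)) {
  RenderLoop loop = {target, render, true};
  return loop;
}

/* Called once per display refresh; renders only while the loop is valid. */
static void render_loop_tick(RenderLoop *loop) {
  if (loop->valid && loop->render != NULL) loop->render(loop->target);
}

/* Stops further rendering and drops the target, like -invalidate. */
static void render_loop_invalidate(RenderLoop *loop) {
  loop->valid = false;
  loop->target = NULL;
}

/* Example render callback that counts frames. */
static void count_frame(void *target) { ++*(int *)target; }
```

Invalidating in -viewDidDisappear: matters on iOS because a CADisplayLink retains its target until it is invalidated. If TreasureHuntRenderLoop wraps a CADisplayLink, as this section suggests, a loop that is never invalidated would keep the Cardboard view alive and rendering.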

Handle inputs

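The Google VR SDK fires events when the user interacts with the viewer, for example by pressing the viewer button. To provide custom behavior when these events occur, implement the -cardboardView:didFireEvent: delegate method. In the sample, the trigger event collects the cube if the user is looking at it.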

- (void)cardboardView:(GVRCardboardView *)cardboardView
         didFireEvent:(GVRUserEvent)event {
  switch (event) {
    case kGVRUserEventBackButton:
      // If the view controller is in a navigation stack or
      // over another view controller, pop or dismiss the
      // view controller here.
      break;
    case kGVRUserEventTrigger:
      NSLog(@"User performed trigger action");
      // Check whether the object is found.
      if (_is_cube_focused) {
        // Vibrate the device on success.
        AudioServicesPlaySystemSound(kSystemSoundID_Vibrate);
        // Generate the next cube.
        [self spawnCube];
      }
      break;
    default:
      // Ignore any other events.
      break;
  }
}