Page Summary

- This page demonstrates compiling and running a TensorFlow Lite ML model on Coral NPU's scalar core and vector engine using scripted examples.

- Intermediate layers of the MobileNet V1 model, a network used for computer vision tasks, are executed using reference kernels from TensorFlow Lite Micro.

- The process uses the CoreMini AXI high-memory simulator with real inputs and outputs and reports simulation metrics such as the execution cycle count.

- Prerequisites include working in the Google Coral NPU GitHub repository and completing specific software and programming tutorials.

- Running the model on the vector execution engine demonstrates optimization and allows a performance comparison with the scalar core example.

The scripted example given here lets you compile and run a LiteRT (TensorFlow Lite) ML model on Coral NPU's scalar core. The model is MobileNet V1, a convolutional neural network (CNN) architecture designed for image classification, object detection, and other computer vision tasks.

The example uses reference kernels from LiteRT Micro and executes intermediate layers of MobileNet.
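MobileNet V1 is built largely from depthwise separable convolutions. To give a sense of what a "reference kernel" is (a portable, unoptimized loop nest that favors clarity over speed), the sketch below implements a simplified float depthwise convolution. It is an illustration only, not TFLM's kernel: it assumes NHWC layout with batch size 1, stride 1, no padding, and a depth multiplier of 1, and the function name and signature are hypothetical. The real reference kernels also handle padding, strides, dilation, and quantized arithmetic.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Simplified float depthwise 2-D convolution (illustration only, not TFLM code).
// Layouts: input [in_h][in_w][channels] (NHWC, batch 1),
//          filter [k_h][k_w][channels], bias [channels].
// Assumes stride 1, no padding ("valid"), and depth multiplier 1.
std::vector<float> DepthwiseConvValid(const std::vector<float>& input,
                                      int in_h, int in_w, int channels,
                                      const std::vector<float>& filter,
                                      int k_h, int k_w,
                                      const std::vector<float>& bias) {
  assert(in_h >= k_h && in_w >= k_w && channels > 0);
  assert(input.size() == static_cast<std::size_t>(in_h * in_w * channels));
  assert(filter.size() == static_cast<std::size_t>(k_h * k_w * channels));
  assert(bias.size() == static_cast<std::size_t>(channels));

  const int out_h = in_h - k_h + 1;
  const int out_w = in_w - k_w + 1;
  std::vector<float> output(static_cast<std::size_t>(out_h * out_w * channels));

  for (int oy = 0; oy < out_h; ++oy) {
    for (int ox = 0; ox < out_w; ++ox) {
      for (int c = 0; c < channels; ++c) {
        // Each channel is filtered independently: no sum across channels.
        float acc = bias[c];
        for (int ky = 0; ky < k_h; ++ky) {
          for (int kx = 0; kx < k_w; ++kx) {
            const int iy = oy + ky;
            const int ix = ox + kx;
            acc += input[(iy * in_w + ix) * channels + c] *
                   filter[(ky * k_w + kx) * channels + c];
          }
        }
        output[(oy * out_w + ox) * channels + c] = acc;
      }
    }
  }
  return output;
}
```

Each output element costs k_h × k_w multiply-accumulates per channel, executed one at a time on a scalar core. Because the work is independent across channels, this loop nest is a natural candidate for vectorization.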

The model runs in the CoreMini AXI high-memory simulator with real inputs and real outputs. The simulator output reports simulation metrics, notably the execution cycle count, for performance assessment.
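The simulator reports cycles, not wall-clock time. To turn a cycle count into latency, or to compare the scalar-core run with the vector-engine run, the arithmetic is milliseconds = cycles × 1000 / f_clk and speedup = baseline cycles / optimized cycles. The sketch below assumes you supply the clock frequency yourself (this page does not state Coral NPU's clock rate), and the helper names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Converts a simulated cycle count to milliseconds at an assumed core clock.
// The simulator reports cycles only; clock_hz must come from elsewhere.
double CyclesToMilliseconds(std::uint64_t cycles, double clock_hz) {
  assert(clock_hz > 0.0);
  // Scale to milliseconds first, then divide by the clock rate.
  return static_cast<double>(cycles) * 1000.0 / clock_hz;
}

// Speedup of an optimized run over a baseline, e.g. the vector-engine build
// over the scalar-core build: baseline_cycles / optimized_cycles.
double Speedup(std::uint64_t baseline_cycles, std::uint64_t optimized_cycles) {
  assert(optimized_cycles > 0);
  return static_cast<double>(baseline_cycles) /
         static_cast<double>(optimized_cycles);
}
```

For example, with a hypothetical 100 MHz clock, `CyclesToMilliseconds(2000000, 100e6)` returns 20.0 (20 ms). These inputs are illustrative, not measured results.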

Prerequisites

This example assumes you are working in the Google Coral NPU repository on GitHub.

- Be sure to complete the preliminary steps described in Software prerequisites and system setup.

- Complete the Simple programming tutorial.

- Follow the edge AI tutorial here to learn about running an inference on microcontrollers.

Run MobileNet on the scalar core

To run the script, enter the following command:

```shell
bazel run -c opt tests/cocotb/tutorial/tfmicro:cocotb_run_mobilenet_v1
```

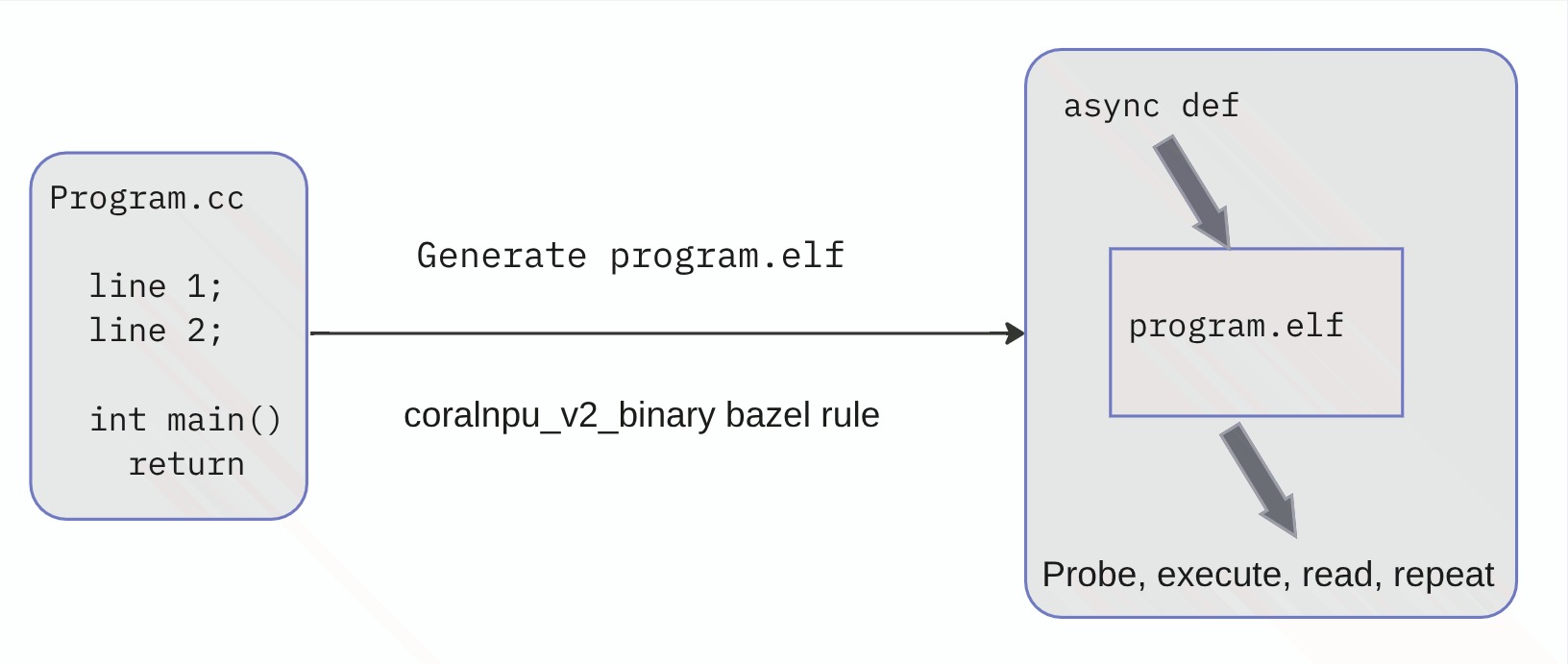

The script performs the following subtasks, as illustrated in the figure:

- Compiles `run_mobilenet.cc` using `coralnpu_v2_binary`.

  - `coralnpu_v2_binary` is a bazel rule that composes flags and settings to compile for the `coralnpu_v2` platform.

  - `run_mobilenet.cc` is a TFMicro inference program that uses reference kernels.

  - The example rule is `run_mobilenet_v1_025_partial_binary`.

- Uses the high-memory TCM (tightly-coupled memory) of Coral NPU to:

  - Run high-memory programs such as ML model inferencing. See this page for information about high-memory TCM.

  - Add a new data section, `.extdata`.

    - Memory buffers such as the tensor arena, inputs, and outputs can be stored in this data section: `uint8_t tensor_arena[kTensorArenaSize] __attribute__((section(".extdata"), aligned(16), used, retain));`

- Runs the cocotb test suite.

  - When the TFLite inference program is ready, the `cocotb_test_suite` bazel rule is used to simulate the program with `rvv_core_mini_highmem_axi_model`.

  - Runs the script `cocotb_run_mobilenet_v1.py`, which:

    - Loads `run_mobilenet_v1_025_partial_binary.elf`.

    - Invokes the program and executes it until it halts.

    - Reads the memory buffer `inference_status` in program memory.

cocotb is a coroutine-based, co-simulation test bench environment for verifying VHDL and SystemVerilog RTL using Python. cocotb is free, open-source, and hosted on GitHub.
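The `.extdata` placement and the `inference_status` handshake from the steps above can be sketched on the program side as follows. This is a host-compilable C++ sketch, assuming a GCC or Clang ELF toolchain; it is not the tutorial's `run_mobilenet.cc` and does not run TFLM. Only the attribute list and the names `tensor_arena`, `kTensorArenaSize`, and `inference_status` come from this page; the `EXTDATA` macro, the input/output buffer names and sizes, the status values, and the helper functions are hypothetical. On Coral NPU, the linker script decides where `.extdata` lands in high-memory TCM; on a host build it is just another named section.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Same attributes as the tutorial's tensor_arena declaration, wrapped in a
// hypothetical helper macro. Requires an ELF target (GCC/Clang).
#define EXTDATA __attribute__((section(".extdata"), aligned(16), used, retain))

// Hypothetical sizes for illustration only; real values depend on the model.
constexpr std::size_t kTensorArenaSize = 256 * 1024;
constexpr std::size_t kInputSize = 96 * 96 * 3;
constexpr std::size_t kOutputSize = 1024;

// Large buffers go into .extdata (high-memory TCM on the target).
std::uint8_t tensor_arena[kTensorArenaSize] EXTDATA;
std::uint8_t input_buffer[kInputSize] EXTDATA;
std::uint8_t output_buffer[kOutputSize] EXTDATA;

// Status word the test harness reads after the program halts.
// The name matches the tutorial; this encoding is an assumption.
constexpr std::int32_t kStatusNotRun = -1;
constexpr std::int32_t kStatusOk = 0;
constexpr std::int32_t kStatusError = 1;
volatile std::int32_t inference_status = kStatusNotRun;

// Returns true if p is 16-byte aligned, as requested by aligned(16).
bool IsAligned16(const void* p) {
  return reinterpret_cast<std::uintptr_t>(p) % 16 == 0;
}

// Checks the placement guarantees before inference runs.
void CheckBufferAlignment() {
  assert(IsAligned16(tensor_arena));
  assert(IsAligned16(input_buffer));
  assert(IsAligned16(output_buffer));
}

// Publishes the outcome where the harness can read it. In a real program this
// would follow the interpreter's invocation; here the result is passed in.
void RecordInferenceResult(bool ok) {
  inference_status = ok ? kStatusOk : kStatusError;
}
```

Writing a single status word just before the program halts gives the cocotb script one fixed symbol to check, independent of the model's output format.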