Page Summary

-

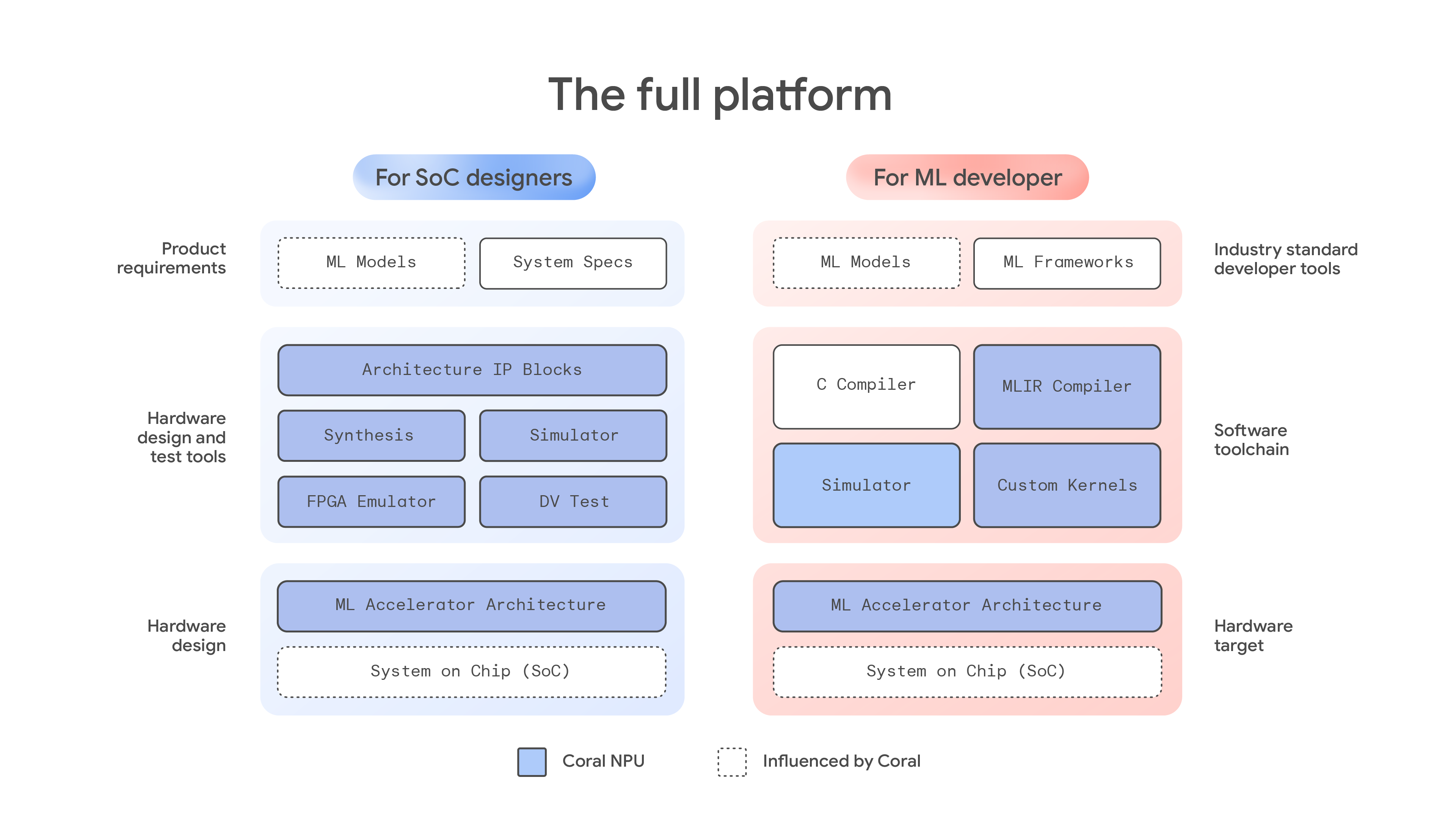

Coral NPU is a full-stack platform for creating efficient Edge AI experiences with an AI-first hardware architecture.

-

It targets ultra-low power, always-on edge AI applications for devices like wearables, prioritizing minimal battery usage.

-

Hardware developers can access architecture IP blocks, synthesis tools, simulators, FPGA emulators, and design verification tests.

-

Software developers are provided with a C compiler, an MLIR compiler, a simulator, and custom kernels for AI/ML model development.

Coral NPU is a full-stack platform designed to empower hardware designers and ML developers to build the next generation of private and efficient Edge AI experiences. By combining an AI-first hardware architecture with a unified developer experience, Coral NPU targets ultra-low power, always-on edge AI applications that use ambient sensing. It is designed to enable all-day AI experiences on wearable devices while minimizing battery usage.

For hardware developers who design SoCs or discrete AI accelerator chips, Coral NPU provides these resources:

- Architecture IP blocks: the core and the components described on the architecture overview page. They provide the foundation for the NPU design and are based on the RISC-V standard.

- Synthesis tool: converts hardware description language (HDL) code into a technology-specific, gate-level netlist. This is analogous to a software compiler, which translates human-readable source code into machine-executable binaries. The synthesis tool also performs complex optimizations to meet the designer's goals for performance, power consumption, and chip area, collectively known as PPA.

- Simulator: creates a virtual model of the register-transfer level (RTL) design to test, verify, and validate its functionality and performance. This allows rapid iteration and helps identify issues early in the development cycle, before committing to expensive physical prototypes.

- FPGA emulator: verifies that the design meets functional requirements by imitating hardware behavior. It provides a high-speed, high-capacity, hardware-accelerated platform for testing, validating, and debugging the design before committing to silicon.

- Design verification (DV) tests: confirm that the design functions exactly as its specification intends. This is essential for catching costly and time-consuming errors before committing to silicon.
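The simulator and DV test resources above both come down to driving a model of the design with stimulus and comparing its outputs against a specification. The following is a minimal Python sketch of that idea, not Coral NPU's actual test flow. `dut_sat_add8` is a hypothetical stand-in for a simulated hardware block (an 8-bit saturating adder modeled on raw bits), `ref_sat_add8` is an assumed golden reference, and `run_dv` applies directed corner cases plus seeded random vectors.

```python
import random

INT8_MIN, INT8_MAX = -128, 127


def ref_sat_add8(a: int, b: int) -> int:
    """Golden reference: signed 8-bit add that saturates instead of wrapping."""
    return max(INT8_MIN, min(INT8_MAX, a + b))


def dut_sat_add8(a: int, b: int) -> int:
    """Hypothetical stand-in for the simulated design, modeled on raw bits."""
    ua, ub = a & 0xFF, b & 0xFF               # two's-complement encodings
    raw = (ua + ub) & 0xFF                    # 8-bit wrapping adder
    sa, sb, sr = ua >> 7, ub >> 7, raw >> 7   # sign bits of inputs and result
    if sa == sb and sr != sa:                 # signed overflow detected
        return INT8_MIN if sa else INT8_MAX
    return raw - 256 if sr else raw           # decode back to a signed value


def run_dv(num_random: int = 1000, seed: int = 0) -> list:
    """Return (a, b, got, expected) mismatches; an empty list means pass."""
    rng = random.Random(seed)                 # seeded so failures reproduce
    corners = [INT8_MIN, -1, 0, 1, INT8_MAX]
    vectors = [(a, b) for a in corners for b in corners]
    vectors += [
        (rng.randint(INT8_MIN, INT8_MAX), rng.randint(INT8_MIN, INT8_MAX))
        for _ in range(num_random)
    ]
    failures = []
    for a, b in vectors:
        got, expected = dut_sat_add8(a, b), ref_sat_add8(a, b)
        if got != expected:
            failures.append((a, b, got, expected))
    return failures


if __name__ == "__main__":
    failures = run_dv()
    print("PASS" if not failures else f"FAIL: {failures[:5]}")
```

In a real flow the stand-in function would be replaced by calls into the RTL simulator or FPGA emulator, while the reference model and the vector generation could stay largely the same.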

See the hardware developer guide for more details.

For software developers who design AI/ML models, Coral NPU provides these resources:

- C language compiler: a standard compiler for programs written in C. It is not specific to Coral NPU; any standard open-source C compiler can be used.

- MLIR compiler: a compiler based on Multi-Level Intermediate Representation (MLIR), which acts as a flexible, modular "compiler toolkit" for bridging high-level software abstractions with diverse hardware targets. Instead of compiling directly from a high-level programming language like Python or C++ to low-level machine code, MLIR enables a series of intermediate steps that allow for domain-specific optimizations.

- Simulator: provides a controlled, virtual environment for training, testing, and validating AI models. It helps developers evaluate how a model will interact with and perform on the Coral NPU hardware components, and it allows rapid iteration for debugging and stress testing.

- Custom kernels: low-level, hardware-specific functions written to perform specialized and highly optimized computations. Standard machine learning frameworks offer a wide range of prebuilt operations, but custom kernels are essential for pushing performance beyond these generic libraries and for implementing novel AI functionality. Developers should consider custom kernels for maximum performance, for memory and power optimization, for specialized data types and sparsity, and for programming a Coral NPU based design to execute new and experimental operations.
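To make the MLIR compiler resource above more concrete, here is a toy Python sketch of progressive lowering. It does not use MLIR or any Coral NPU API. The op names (`nn.dense`, `nn.relu`, `nn.dense_relu`, `loops.nest`), the `Op` class, and both passes are hypothetical and exist only to show how an optimization can run at a high level of abstraction before the program is lowered toward loops.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Op:
    name: str          # e.g. "nn.dense"; every op name here is made up
    body: tuple = ()   # per-element work, filled in at the loop level


Pass = Callable[[List[Op]], List[Op]]


def fuse_dense_relu(ops: List[Op]) -> List[Op]:
    """High-level, domain-specific optimization: dense + relu -> one op."""
    out: List[Op] = []
    i = 0
    while i < len(ops):
        is_pair = (
            ops[i].name == "nn.dense"
            and i + 1 < len(ops)
            and ops[i + 1].name == "nn.relu"
        )
        if is_pair:
            out.append(Op("nn.dense_relu"))
            i += 2
        else:
            out.append(ops[i])
            i += 1
    return out


# How each high-level op is expressed as a loop nest (hypothetical mapping).
LOOP_BODIES = {
    "nn.dense": ("mac", "add_bias"),
    "nn.relu": ("max0",),
    "nn.dense_relu": ("mac", "add_bias", "max0"),
}


def lower_nn_to_loops(ops: List[Op]) -> List[Op]:
    """Lowering step: replace each nn.* op with an explicit loop nest."""
    return [Op("loops.nest", LOOP_BODIES[op.name]) for op in ops]


def run_pipeline(ops: List[Op], passes: List[Pass]) -> List[Op]:
    """Apply the passes in order, each consuming the previous pass's output."""
    for p in passes:
        ops = p(ops)
    return ops


if __name__ == "__main__":
    model = [Op("nn.dense"), Op("nn.relu")]
    print(run_pipeline(model, [lower_nn_to_loops]))
    print(run_pipeline(model, [fuse_dense_relu, lower_nn_to_loops]))
```

Lowering without the fusion pass produces two loop nests, while running the fusion first produces a single loop nest whose body does all three steps. The fusion is a simple pattern match on named high-level ops; recognizing the same opportunity after everything has become generic loops would be much harder, which is the motivation for keeping intermediate levels.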

See the software developer guide for more details.
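As an illustration of the custom kernels resource listed above, the sketch below is a pure-Python reference model of the kind of computation a custom kernel might implement: an int8 dot product with a wide accumulator, a fused bias and ReLU, and requantization back to the int8 range. A production kernel would be written in a low-level language against the target hardware. The function name, the integer multiplier-and-shift requantization scheme, and the parameter values are assumptions for illustration, not a Coral NPU API.

```python
from typing import Sequence

INT8_MIN, INT8_MAX = -128, 127


def fused_dot_bias_relu_int8(
    x: Sequence[int],
    w: Sequence[int],
    bias: int,
    multiplier: int,
    shift: int,
) -> int:
    """Reference semantics for a hypothetical fused int8 kernel.

    Computes relu(dot(x, w) + bias), rescales the wide accumulator by
    multiplier / 2**shift (rounding half up), and saturates to int8.
    """
    if len(x) != len(w):
        raise ValueError("x and w must have the same length")
    if shift < 1:
        raise ValueError("shift must be >= 1")
    for v in (*x, *w):
        if not INT8_MIN <= v <= INT8_MAX:
            raise ValueError(f"{v} is outside the int8 range")

    acc = bias                       # wide accumulator (typically 32-bit in hardware)
    for xi, wi in zip(x, w):
        acc += xi * wi               # multiply-accumulate
    acc = max(acc, 0)                # fused ReLU: no extra pass over memory
    scaled = (acc * multiplier + (1 << (shift - 1))) >> shift  # requantize
    return min(scaled, INT8_MAX)     # saturate; the lower bound is already 0


if __name__ == "__main__":
    print(fused_dot_bias_relu_int8([1, 2, 3, 4], [5, 6, 7, 8], bias=10, multiplier=3, shift=2))
```

A reference model like this is mainly useful as the expected-value source when testing an optimized kernel on the simulator: both should produce identical integers for the same inputs.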