Page Summary

- The Coral NPU is a low-power accelerator designed for power-constrained devices like wearables, targeting a 10 mW power envelope.
- AI/ML accelerator performance spans a wide range, from a few GMACs for low-power devices to over 10 million GMACs for high-performance computing.
- The Coral NPU's configurable architecture allows silicon partners to scale the design for diverse low-power AI accelerators.
- This flexibility enables vendors to create differentiated products by tailoring performance and power for various markets.

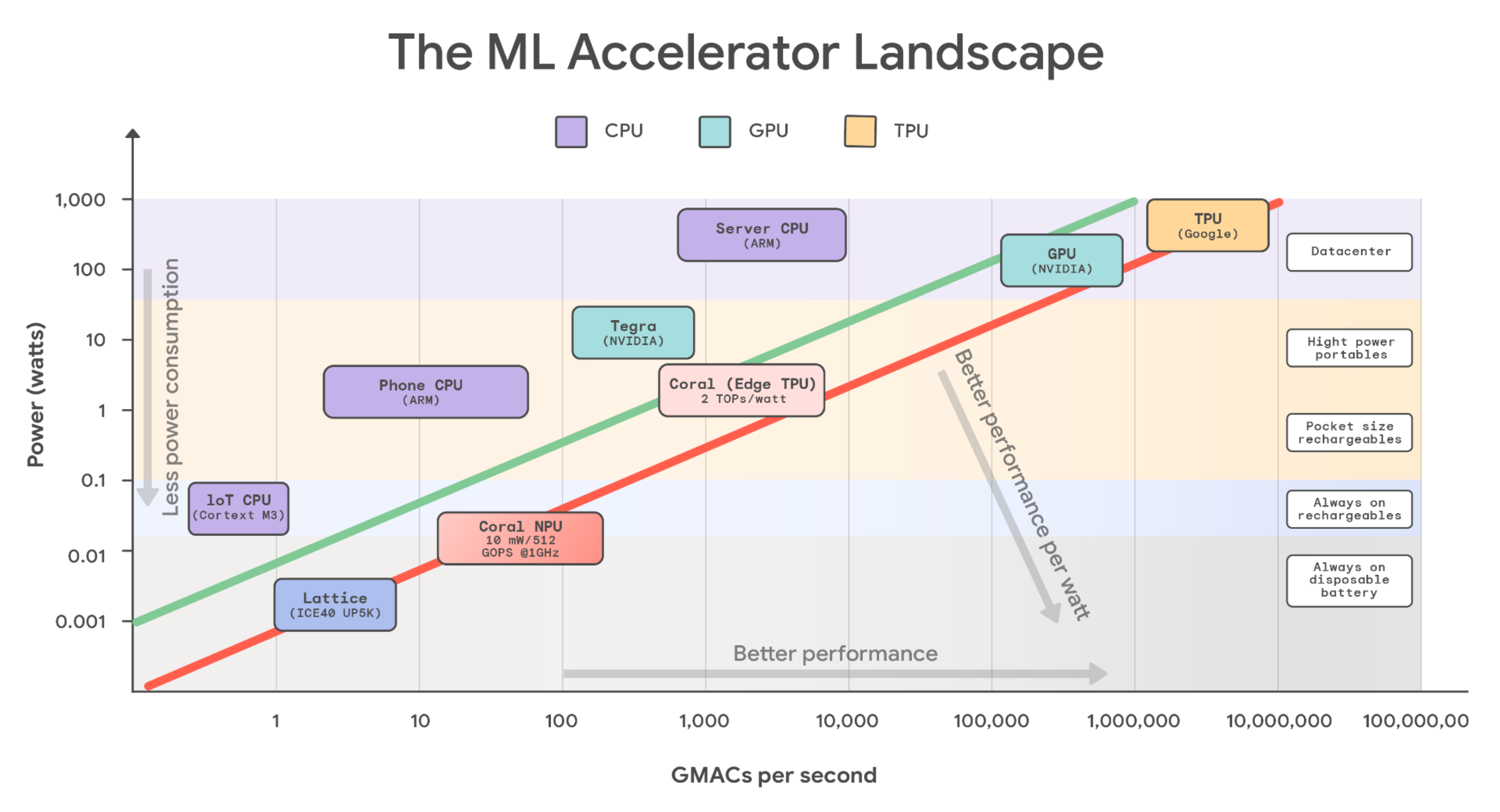

Google's original Coral platform, with its 2 TOPS-per-watt EdgeTPU, set a new standard for AI efficiency in IoT devices. However, the strict power and thermal limits of always-on wearables demanded an even more efficient class of accelerator.

The Coral NPU, built on insights from the original platform, is a low-power accelerator for power-constrained devices like wearables and ambient sensors. It targets an exceptionally low 10 mW power envelope while still delivering substantial performance.

The diagram illustrates that the performance of AI/ML accelerators, measured in giga multiply-accumulate operations per second (GMACs), spans a vast range: from a few GMACs at the low end to over 10 million GMACs (equivalent to 20,000 TOPS) at the high end. Here's a breakdown of the current landscape:

Low-power, battery-operated devices: At the lower end of the performance and power spectrum, ultra-low-power FPGAs like the Lattice iCE40UP5K and microcontrollers based on ARM Cortex-M3 offer performance in the range of a few GMACs and are ideal for battery-powered devices.

Mobile and rechargeable devices: Moving up the scale, mobile phone CPUs, often based on ARM architecture, deliver performance in the range of dozens of GMACs. These are commonly found in rechargeable mobile devices.

IoT and edge devices: Further up are System-on-Chips (SoCs) that operate in the thousands of GMACs (a few TOPS). This category includes Nvidia's Tegra SoC and Google's EdgeTPU ASIC, which offers 4 TOPS. These are typical in IoT devices that are either battery-powered or have a dedicated power supply.

High-performance computing: At the highest end, CPUs, GPUs, and TPUs are used for dedicated AI/ML acceleration in PCs, servers, and cloud infrastructure.
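The TOPS figures quoted above follow from the GMACs figures by counting each multiply-accumulate as two operations (one multiply and one add), which is the common convention and the one that makes 10 million GMACs equal 20,000 TOPS. The following is a minimal Python sketch of that conversion, assuming the 2-ops-per-MAC convention; the helper names are hypothetical and not part of any Coral tooling.

```python
# Assumption: one MAC is counted as two operations (a multiply and an add).
OPS_PER_MAC = 2


def gmacs_to_tops(gmac_per_s: float) -> float:
    """Convert giga-MACs per second to tera-operations per second."""
    # GMAC/s * 2 ops/MAC gives GOPS; divide by 1,000 to get TOPS.
    return gmac_per_s * OPS_PER_MAC / 1_000


def tops_to_gmacs(tops: float) -> float:
    """Convert tera-operations per second back to giga-MACs per second."""
    # TOPS * 1,000 gives GOPS; divide by 2 ops/MAC to get GMAC/s.
    return tops * 1_000 / OPS_PER_MAC


if __name__ == "__main__":
    print(gmacs_to_tops(10_000_000))  # high end of the range -> 20000.0
    print(tops_to_gmacs(4))           # EdgeTPU's quoted 4 TOPS -> 2000.0
```

Under this convention, the EdgeTPU's 4 TOPS corresponds to roughly 2,000 GMACs, which places it in the "thousands of GMACs" tier described above.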

Flexible and configurable

The Coral NPU's highly configurable architecture allows silicon partners to develop a diverse portfolio of low-power AI accelerators from a single IP. The baseline design targets 512 GOPS at 10 mW for wearables, but the design can be scaled up to create higher-performance NPUs for specific use cases.
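As a rough sanity check on the baseline target, dividing throughput by power gives energy efficiency, which can then be compared with the original EdgeTPU's 2 TOPS per watt. The sketch below assumes the 512 GOPS figure is sustained across the full 10 mW envelope; real efficiency depends on the workload and on how fully the hardware is utilized. The function name is hypothetical.

```python
def tops_per_watt(gops: float, milliwatts: float) -> float:
    """Energy efficiency in TOPS/W from throughput in GOPS and power in mW."""
    # 1 GOPS / 1 mW = 1e9 ops/s / 1e-3 W = 1e12 ops/s per W = 1 TOPS/W,
    # so the ratio of the raw numbers is already expressed in TOPS/W.
    return gops / milliwatts


# Assumption: 512 GOPS sustained at the full 10 mW baseline envelope.
coral_npu = tops_per_watt(512, 10)
# Original EdgeTPU efficiency as quoted on this page, in TOPS/W.
edge_tpu = 2.0

print(f"Coral NPU baseline: {coral_npu:.1f} TOPS/W")  # 51.2 TOPS/W
print(f"Ratio vs. EdgeTPU:  {coral_npu / edge_tpu:.1f}x")  # 25.6x
```

If the design draws less than 10 mW at that throughput, the efficiency figure would be correspondingly higher, so this is best read as a lower bound under the stated assumption.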

This flexibility lets vendors create differentiated products for various markets by tailoring performance and power. This strategy builds a robust hardware ecosystem and accelerates the next wave of innovation in edge AI.