Page Summary

- Coral NPU is an open-source, ultra-low-power neural processing unit designed for ML inferencing on wearable devices.

- Its domain-optimized instruction set and data paths make it more energy-efficient for AI tasks compared to traditional CPUs.

- Based on the 32-bit RISC-V ISA, Coral NPU is a C-programmable accelerator balancing efficiency and robustness, supporting custom computing solutions.

- The architecture includes distinct matrix, vector (SIMD), and scalar processor components that work together.

- Coral NPU supports various ML inferencing operations and is available as an open-source code base for integration and software development.

Overview

Coral NPU is an open-source IP designed by Google Research and is freely available for integration into ultra-low-power systems-on-chip (SoCs) targeting wearable devices such as hearables, augmented reality (AR) glasses, and smart watches. Coral NPU is a neural processing unit (NPU), also known as an AI accelerator or deep-learning processor.

Coral NPU is a highly efficient hardware accelerator for ML inferencing. It lowers SoC energy consumption by reducing both the control overhead of processor (ALU) operations and the distance data must travel within the memory hierarchy.

Coral NPU's instruction set is domain-optimized for ML, featuring application-specific arithmetic operations and data paths built to feed the compute engines efficiently.

This specialized design contrasts with traditional CPUs. CPUs are highly flexible, offering general-purpose instruction sets, an accessible memory model, and uniform software APIs. This allows them to use standard compilers and tools, leveraging broad ecosystem investments. However, this general-purpose programmability comes at a cost for AI workloads: lacking domain specialization, CPUs deliver much less compute per joule.

Older ML accelerators, on the other hand, are not robust. While often efficient, they are difficult to program, have a limited ability to reuse compute resources for general tasks, and have poor support for execution-as-function-call. The Coral NPU, in contrast, is designed to be both efficient and robust. It is an energy-efficient, C-programmable accelerator that resolves this tradeoff.

Coral NPU is based on the 32-bit RISC-V Instruction Set Architecture (ISA). The extensible industry-standard RISC-V ISA empowers developers to create optimized, customizable computing solutions, from custom processors to hardware/software co-designs. Extending the ISA with custom compute features makes Coral NPU the ideal hardware accelerator for running ML inferencing operations such as:

- Image classification

- Person detection

- Pose estimation

- Transformer neural networks

Coral NPU enables a software-focused approach to AI hardware, as well as a unified programming model across AI workloads running on CPUs, GPUs, and NPUs. RISC-V allows for domain-specific customization tailored to particular applications and workloads by enabling the selection of appropriate ratified extensions to the standard, for example vector and matrix operations. For the complete list, see the official RISC-V specifications.

This Coral NPU documentation provides:

- Hardware reference and IP integration guidelines for commercial silicon designers and academia

- Guidance for using the software toolchain to compile and run ML models on the NPU processor

The Coral NPU hardware design and software tools codebase is available on GitHub.

To help you understand the design, Google publishes a Gemini code wiki for Coral NPU. This structured wiki provides Gemini-generated documentation for the open-source repository. The information is refreshed automatically, so it stays current with the code base.

The MPACT-CoralNPU behavioral simulator is housed in a separate GitHub repository.

Coral NPU components

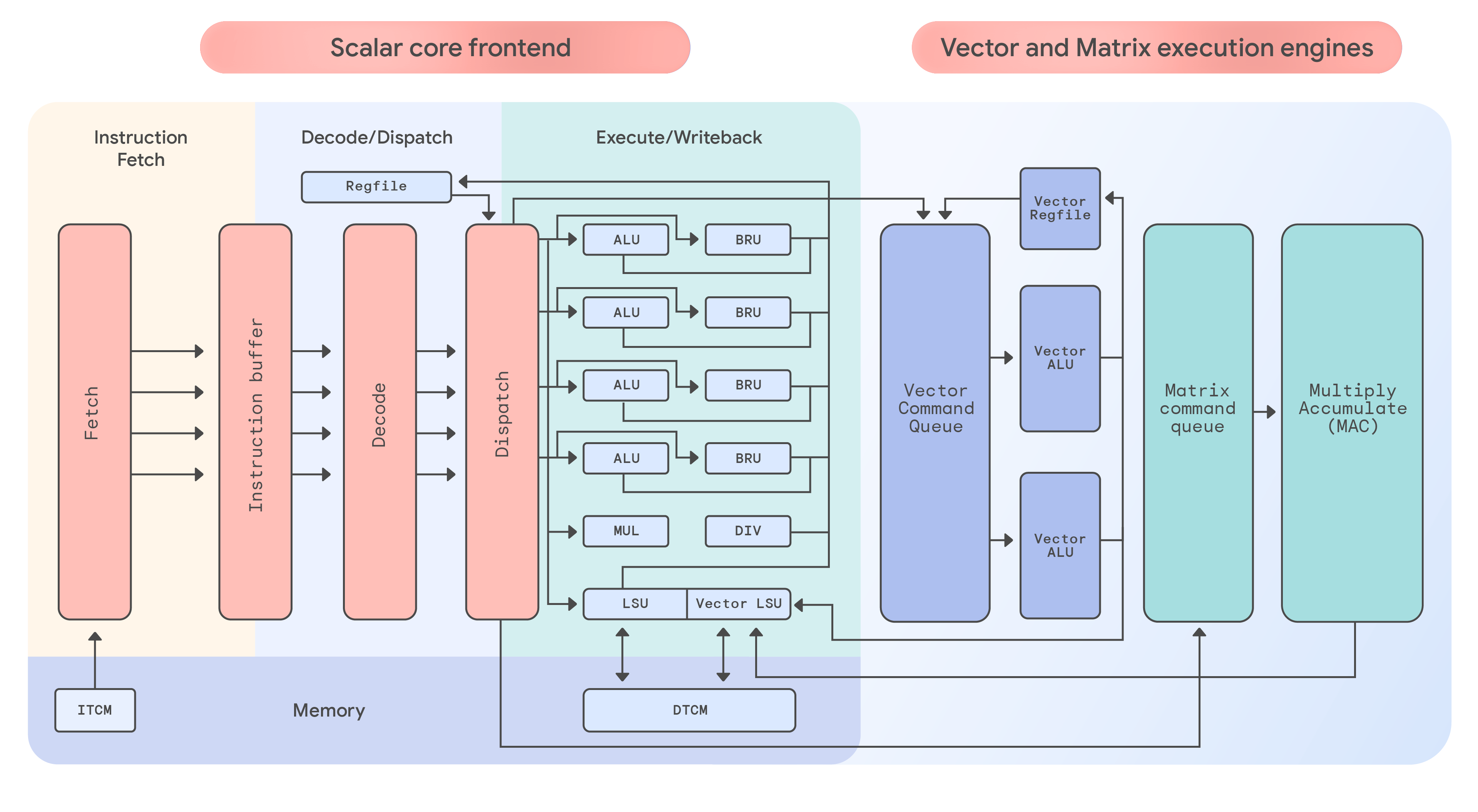

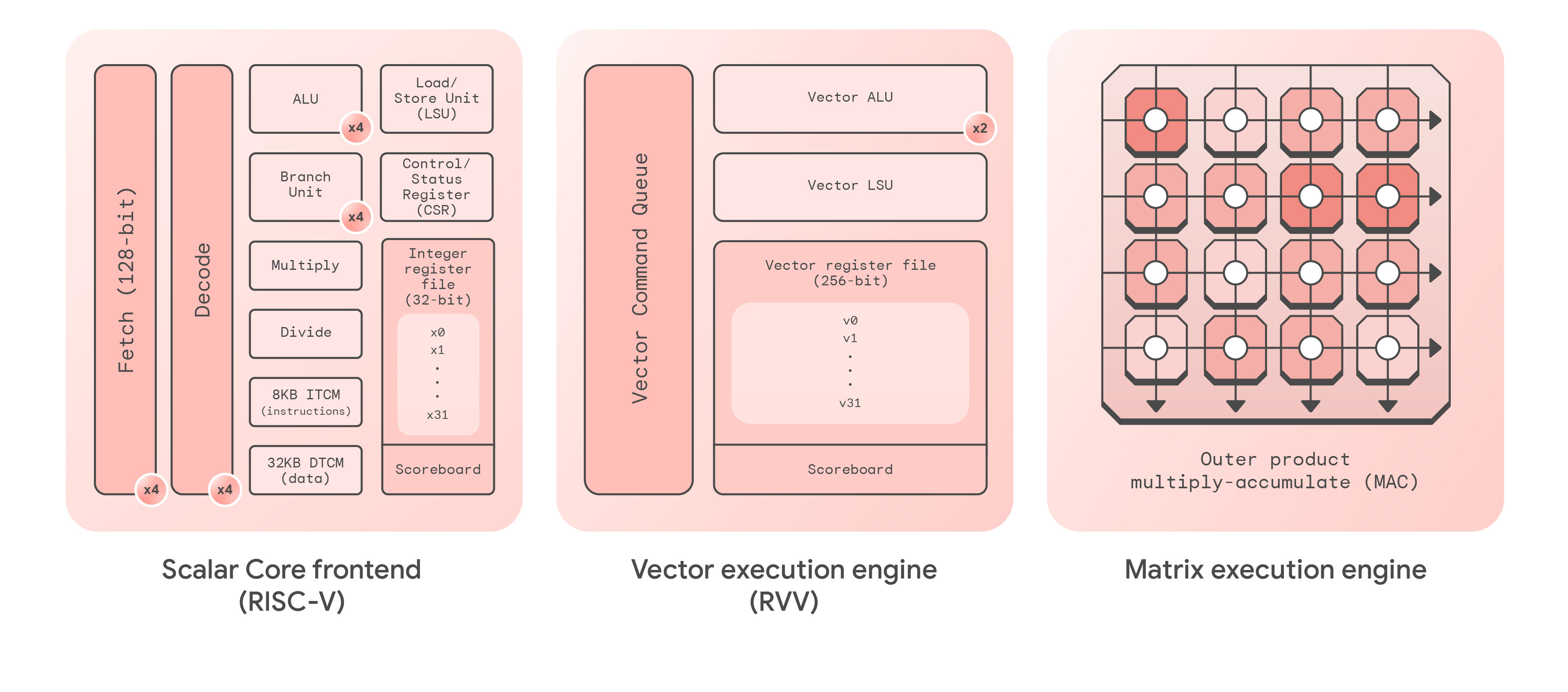

Coral NPU includes three distinct processor components that work together: matrix, vector (SIMD), and scalar. The terms SIMD (single-instruction, multiple data) and vector are used synonymously here.

| Subcomponent | Description |
|---|---|
| Fetch / decode | Retrieves and interprets program instructions |
| Scoreboard | Manages instruction dependencies to prevent conflicts |
| ALU | Arithmetic logic unit |
| BRU | Branch unit: controls the program's flow (loops, jumps) |
| LSU | Load/store unit: moves data between registers and memory |
| CSR | Control/status registers |
| ITCM | Instruction tightly-coupled SRAM memory |
| DTCM | Data tightly-coupled SRAM memory |
| SIMD | Single-instruction, multiple data |
| MAC | Multiply-accumulate execution engine |

Coral NPU features

Coral NPU offers the following top-level feature set:

- RV32IMF_Zve32x RISC-V instruction set

- 32-bit address space for applications and operating system kernels

- Four-stage processor, in-order dispatch, out-of-order retire

- Four-way scalar, two-way vector dispatch

- 128-bit SIMD, 256-bit (future) pipeline

- 8 KB ITCM memory (tightly-coupled memory for instructions)

- 32 KB DTCM memory (tightly-coupled memory for data)

- Both memories are single-cycle-latency SRAM, more efficient than cache memory

- AXI4 bus interfaces, functioning as both master and slave, to interact with external memory and allow external CPUs to configure the Coral NPU

- GNU Debugger (GDB) support
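The ITCM and DTCM sizes above set a hard budget for any program image placed in tightly-coupled memory. The following is a minimal sketch of that budget check, not part of the Coral NPU toolchain: the function name `fits_in_tcm`, the example section sizes, and the reading of "KB" as 1024 bytes are all assumptions made for illustration.

```python
# Capacities taken from the feature list; 1 KB = 1024 bytes is an assumption.
ITCM_BYTES = 8 * 1024    # instruction tightly-coupled memory
DTCM_BYTES = 32 * 1024   # data tightly-coupled memory


def fits_in_tcm(code_bytes: int, data_bytes: int) -> dict:
    """Report whether hypothetical code and data sizes fit in ITCM and DTCM."""
    return {
        "itcm_ok": code_bytes <= ITCM_BYTES,
        "dtcm_ok": data_bytes <= DTCM_BYTES,
        "itcm_free": ITCM_BYTES - code_bytes,   # negative means overflow
        "dtcm_free": DTCM_BYTES - data_bytes,   # negative means overflow
    }


# Hypothetical sizes: 6,000 bytes of code and 40,000 bytes of data.
report = fits_in_tcm(code_bytes=6_000, data_bytes=40_000)
print(report)
```

In this hypothetical case the code fits but the data does not, which is the situation where a program would need to reach external memory over the AXI4 interface instead.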

Coral NPU's RV32IMF_Zve32x specification includes the following extensions to the RISC-V ISA base:

- RV32IM – this label indicates the RISC-V 32-bit base instruction set with I (integer) and M (multiplication, division) extensions (operations).

- I – Base integer instruction set

- M – Standard Extension for Integer Multiplication and Division

- F – Standard Extension for Single-Precision Floating-Point

- V – Standard Extension for Vector Operations; see RISC-V vector specification PDF

- Zve32x – subset of V (vector operations), refer to "Zve* Vector Extensions for Embedded Processors" in the RISC-V vector specification

Note that this is a partial list, given here for quick reference; see the RISC-V specifications for the complete list of ratified and in-progress extensions.

The complete RISC-V label for Coral NPU is rv32imf_zve32x_zicsr_zifencei_zbb.
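To make the structure of this label concrete, the sketch below splits it into its base width, single-letter extensions, and multi-letter extensions. It is an illustrative helper only: `parse_isa_string` is a hypothetical name, and the parser assumes every multi-letter extension is separated by an underscore, as in the label above. It does not handle the full RISC-V naming grammar, such as version suffixes.

```python
def parse_isa_string(isa: str) -> dict:
    """Split a RISC-V ISA label into XLEN, single-letter and multi-letter parts.

    Assumes multi-letter extensions are underscore-separated, as in the
    Coral NPU label; this is a simplification of the full naming rules.
    """
    isa = isa.lower()
    if not isa.startswith("rv"):
        raise ValueError("ISA label must start with 'rv'")
    head, *multi = isa.split("_")
    # Collect the decimal digits after 'rv' to get the register width.
    i = 2
    while i < len(head) and head[i].isdigit():
        i += 1
    if i == 2:
        raise ValueError("ISA label is missing the register width")
    return {
        "xlen": int(head[2:i]),
        "single": list(head[i:]),   # e.g. ['i', 'm', 'f']
        "multi": multi,             # e.g. ['zve32x', 'zicsr', ...]
    }


parsed = parse_isa_string("rv32imf_zve32x_zicsr_zifencei_zbb")
print(parsed)
```

Beyond the extensions listed earlier, the label names Zicsr (control and status register instructions), Zifencei (instruction-fetch fence), and Zbb (basic bit manipulation).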

Scalar core features

- RV32IM frontend that drives command queues of the ML and SIMD backend

- Thirty-one 32-bit scalar registers (x1 – x31), with x0 hardwired to zero

- Various control and status registers (CSRs) per RV32_Zicsr

Vector execution engine features

- Supports data widths of 8, 16, and 32 bits

- Thirty-two 256-bit vector registers (v0 – v31)

- For example, int32 x 8-bit

- 8 x 8 x 32-bit accumulator (acc<8><8>)
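The relationship between register width and element width can be worked out with simple arithmetic. The sketch below assumes each 256-bit vector register is divided evenly into lanes of the selected element width; the helper name `lanes_per_register` is hypothetical and the code models only the arithmetic, not the hardware.

```python
VECTOR_REGISTER_BITS = 256       # from the feature list above
VECTOR_REGISTER_COUNT = 32       # v0 through v31
ELEMENT_WIDTHS = (8, 16, 32)     # supported data widths in bits


def lanes_per_register(register_bits: int, element_bits: int) -> int:
    """Number of elements of a given width that fit in one vector register."""
    if register_bits % element_bits != 0:
        raise ValueError("element width must divide the register width")
    return register_bits // element_bits


lanes = {w: lanes_per_register(VECTOR_REGISTER_BITS, w) for w in ELEMENT_WIDTHS}
register_file_bytes = VECTOR_REGISTER_COUNT * VECTOR_REGISTER_BITS // 8

print(lanes)                # lanes per register for each element width
print(register_file_bytes)  # total vector register file size in bytes
```

Under this assumption, one register holds 32 elements at 8 bits, 16 at 16 bits, or 8 at 32 bits, and the full vector register file is 1,024 bytes.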

Matrix execution engine features

- Quantized outer-product multiply-accumulate (MAC) engine

- 4 x 8-bit multiplies reduced into 32-bit accumulators

- 256 MACs per cycle
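One way to read these figures together is as an 8 x 8 grid of 32-bit accumulators, each receiving the sum of four 8-bit products per step, which gives 8 x 8 x 4 = 256 multiply-accumulates. The source does not state that the 256 figure decomposes this way, so the sketch below is a behavioral model under that assumption rather than a description of the actual datapath. The names `mac_step`, `ACC_ROWS`, `ACC_COLS`, and `DOT_LENGTH` are hypothetical.

```python
ACC_ROWS = 8      # assumed accumulator grid height
ACC_COLS = 8      # assumed accumulator grid width
DOT_LENGTH = 4    # four 8-bit multiplies reduced per accumulator
MACS_PER_STEP = ACC_ROWS * ACC_COLS * DOT_LENGTH


def check_int8(values):
    """Reject operands outside the signed 8-bit range."""
    for v in values:
        if not -128 <= v <= 127:
            raise ValueError(f"{v} is not a valid int8 value")


def mac_step(acc, a_rows, b_cols):
    """Accumulate acc[i][j] += dot(a_rows[i], b_cols[j]) for every grid cell.

    a_rows: ACC_ROWS lists of DOT_LENGTH int8 values.
    b_cols: ACC_COLS lists of DOT_LENGTH int8 values.
    Python integers do not overflow, so 32-bit wraparound is not modeled.
    """
    for row in a_rows:
        check_int8(row)
    for col in b_cols:
        check_int8(col)
    for i in range(ACC_ROWS):
        for j in range(ACC_COLS):
            acc[i][j] += sum(a * b for a, b in zip(a_rows[i], b_cols[j]))
    return acc


acc = [[0] * ACC_COLS for _ in range(ACC_ROWS)]
a_rows = [[1, 2, 3, 4] for _ in range(ACC_ROWS)]
b_cols = [[1, 1, 1, 1] for _ in range(ACC_COLS)]
mac_step(acc, a_rows, b_cols)
print(MACS_PER_STEP, acc[0][0])
```

Accumulating into a wider type than the operands is the usual reason for pairing 8-bit multiplies with 32-bit accumulators: many products can be summed before the result risks overflowing.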

Coral NPU supports the following data types: int8, int16. Support for floating-point data types is planned for the future.

The custom vector and matrix compute features of Coral NPU will be migrated to the corresponding RISC-V standards when those become available.

A more detailed view of the Coral NPU architecture is shown below.