Video360 is a hybrid Daydream app that includes a 2D (non-VR) Activity and a Daydream (VR) Activity capable of viewing 360 media.

The 2D (non-VR) Activity uses standard Android & OpenGL APIs to render 360 content and can be used as a standalone 360 media viewer.

The Daydream (VR) Activity uses the same rendering system as the 2D (non-VR) Activity but also uses the Daydream SDK to render the scene in VR. It renders a floating 2D (non-VR) UI in VR with a custom Android View and allows the user to control a running video with the Daydream Controller.

The Video360 sample app is included in the Google VR SDK for Android. If you have not yet downloaded the SDK, see Getting Started to set up and start navigating the SDK in Android Studio.

Video360 is more complex than the VrPanoramaView and VrVideoView VR View based sample apps also included in the SDK. These samples are lightweight widgets that can be added to existing Android apps to display 360 images or video. They have a simple API and limited room for customization.

Device video support and performance

All Daydream-ready devices support rendering video at 3840 x 2160 at 60 fps. Video playback capabilities and decoding support on other devices vary, so test on each target device to confirm that video renders correctly and that performance is acceptable.

Daydream-ready Android devices can render H264-encoded video at 3840 x 2160 and 60 fps. For top-bottom stereo, the video frame should contain a pair of 2880 x 1440 scenes stacked on top of each other, resulting in a 2880 x 2880 video stream.

Lower-end Android devices cannot render at this resolution, but most devices can render 1920 x 1080 at 60 fps.
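The top-bottom arithmetic above can be sketched in plain Java. This is only an illustration: the StereoFrameLayout class and its methods are hypothetical names and are not part of the Video360 sample. It computes the packed frame height, the region each scene occupies, and shows that a 2880 x 2880 frame carries the same number of pixels as a 3840 x 2160 frame.

```java
/** Hypothetical helper, not part of the Video360 sample. */
final class StereoFrameLayout {
    /** A pixel rectangle inside the packed video frame. */
    static final class Region {
        final int x, y, width, height;

        Region(int x, int y, int width, int height) {
            this.x = x;
            this.y = y;
            this.width = width;
            this.height = height;
        }
    }

    /** Height of a frame holding two per-eye scenes stacked vertically. */
    static int packedHeight(int sceneHeight) {
        return 2 * sceneHeight;
    }

    /** Region of one scene; index 0 is the top scene, 1 is the bottom scene. */
    static Region sceneRegion(int sceneWidth, int sceneHeight, int index) {
        if (index != 0 && index != 1) {
            throw new IllegalArgumentException("index must be 0 or 1");
        }
        return new Region(0, index * sceneHeight, sceneWidth, sceneHeight);
    }

    /** Total pixels per frame, computed as a long to avoid int overflow. */
    static long pixelCount(int width, int height) {
        return (long) width * height;
    }

    public static void main(String[] args) {
        int w = 2880, h = 1440;
        Region bottom = sceneRegion(w, h, 1);
        System.out.println("Packed frame: " + w + " x " + packedHeight(h));
        System.out.println("Bottom scene starts at row " + bottom.y);
        System.out.println("Same pixel count as 3840 x 2160: "
                + (pixelCount(w, packedHeight(h)) == pixelCount(3840, 2160)));
    }
}
```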

Overview of functionality

Embedding a 360 viewer in Android Activities

Video360 demonstrates how to create a 360 media viewer using standard Android & OpenGL APIs. The sample has an embedded GLSurfaceView that renders a 3D scene. This functionality doesn't use any Daydream-specific APIs and can be used to create a pure 2D (non-VR) app to view 360 media.

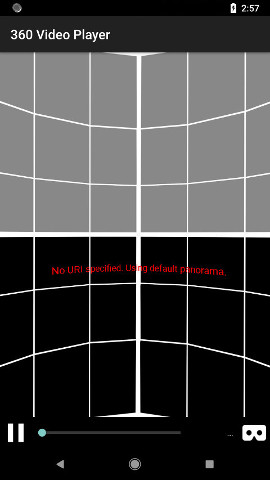

The 2D (non-VR) mode for the sample, showing the default panorama used if no media is specified. See UI and adb commands to load media for more information.

The core of the app involves rendering a video on a 3D sphere.

- The embedded MonoscopicView class uses Android's OpenGL APIs to render a 3D mesh. This is a standard GLSurfaceView implementation for OpenGL.

- The mesh has an OpenGL GL_TEXTURE_EXTERNAL_OES texture, which allows the system to render video textures.

- An Android SurfaceTexture is created using this OpenGL texture.

- This SurfaceTexture is wrapped in a Surface, which is a low-level Android object for quickly moving a stream of images through the system.

- This Surface is sent to a MediaPlayer, which renders video to it.

The sample also demonstrates how to render 360 images or draw arbitrary images using a Canvas onto this Surface.

See Android's Graphics Architecture for low-level details about how Surfaces and their related components work.

This Activity uses Android's Sensor API to reorient the scene based on the orientation of the phone. It also uses touch input to allow the user to manipulate the camera.

Creating a 360 video viewer for Daydream

Video360 demonstrates how to render 360 media inside a Daydream Activity. The same rendering system for the embedded view is used together with GvrActivity. However, instead of using MonoscopicView's sensor and rendering systems, the Daydream API's sensor and rendering systems are used.

The Daydream mode for the sample. The congo.mp4 video from the git repository is being played.

Rendering a 2D (non-VR) UI inside a VR scene

The Daydream Controller is used to interact with a 2D (non-VR) UI rendered inside the VR scene.

- Similar to the sphere used for the video above, an OpenGL mesh is used for the floating UI. This mesh has an OpenGL GL_TEXTURE_EXTERNAL_OES texture, which is wrapped in a SurfaceTexture and then wrapped in a Surface.

- Surface.lockCanvas() is used to acquire an Android Canvas.

- This Canvas is passed to the ViewGroup.dispatchDraw() of the VideoUiView class.

- The VideoUiView uses Android's standard 2D (non-VR) rendering system to draw on the given Canvas.

- Controller click events are translated into 2D (non-VR) events and dispatched to the underlying View. CanvasQuad.translateClick performs a simple hitbox calculation and generates an XY coordinate that the Android touch system uses to generate standard click events.
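The kind of hitbox calculation described above can be sketched in plain Java. This is not the sample's CanvasQuad.translateClick code, which is not reproduced here. The QuadHitTest class, its parameters, and the sign conventions are hypothetical. The sketch assumes the controller direction is already expressed as yaw and pitch offsets (in degrees) from the center of the quad, and it maps angles linearly to pixels, which is only an approximation for a flat quad.

```java
/** Hypothetical hit test, not the sample's CanvasQuad implementation. */
final class QuadHitTest {
    /**
     * Maps a pointing direction to View pixel coordinates.
     *
     * @param yawDeg        horizontal offset from the quad center, positive to the right
     * @param pitchDeg      vertical offset from the quad center, positive upward
     * @param quadWidthDeg  angular width of the quad as seen by the user
     * @param quadHeightDeg angular height of the quad as seen by the user
     * @return {x, y} in pixels with the origin at the top-left corner,
     *         or null when the direction misses the quad
     */
    static float[] toPixel(float yawDeg, float pitchDeg,
                           float quadWidthDeg, float quadHeightDeg,
                           int viewWidthPx, int viewHeightPx) {
        // Normalize to the 0..1 range across the quad.
        float u = yawDeg / quadWidthDeg + 0.5f;
        // Android View coordinates grow downward, so pitch is flipped.
        float v = 0.5f - pitchDeg / quadHeightDeg;
        if (u < 0f || u > 1f || v < 0f || v > 1f) {
            return null; // Outside the hitbox: no click is generated.
        }
        return new float[] {u * viewWidthPx, v * viewHeightPx};
    }

    public static void main(String[] args) {
        float[] hit = toPixel(0f, 0f, 40f, 20f, 800, 400);
        System.out.println("Center maps to " + hit[0] + ", " + hit[1]);
        System.out.println("Miss returns null: "
                + (toPixel(30f, 0f, 40f, 20f, 800, 400) == null));
    }
}
```

On Android, the resulting XY pair would then be used to build the touch events dispatched to the View; that step depends on framework classes and is left out of this sketch.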

Permissions and hybrid VR app flow

The Video360 sample can load images or videos from the device's external storage. This requires asking the user to grant the READ_EXTERNAL_STORAGE permission. Since the user can launch the VR Activity prior to granting this permission, the sample demonstrates how to use the DaydreamApi.exitFromVr() API to switch from the VR Activity to the 2D (non-VR) Activity, request a runtime permission, and then return to VR using DaydreamApi.launchInVr().

The sample also allows the user to toggle between 2D (non-VR) and VR modes using the UI.

UI and adb commands to load media

The Video360 sample is meant to be used as a basis for other apps rather than as a standalone video player, so it has a minimal UI. After the app is installed and the user accepts permissions, it renders a placeholder panorama and allows the user to switch in and out of VR. When in VR, the user can use the Daydream Controller to interact with a floating 2D (non-VR) UI.

Loading real media requires using adb to send the appropriate Intent to the app. Two example commands are given below. They assume you've used adb push to push IMAGE.JPG and VIDEO.MP4 to the device at /sdcard/.

Load a top-bottom stereo image in the VR Activity:

    adb shell am start \
      -a android.intent.action.VIEW \
      -n com.google.vr.sdk.samples.video360/.VrVideoActivity \
      --ei stereoFormat 2 \
      -d "file:///sdcard/IMAGE.JPG"

Load a monoscopic video in the 2D (non-VR) Activity:

    adb shell am start \
      -a android.intent.action.VIEW \
      -n com.google.vr.sdk.samples.video360/.VideoActivity \
      --ei stereoFormat 0 \
      -d "file:///sdcard/VIDEO.MP4"

See MediaLoader's Javadoc for more information about how to craft the appropriate intent.

The sample uses Android's built-in media decoding system so it is capable of rendering any supported media format.

Many devices support more formats. For images, most Android devices can render 4096 x 4096 bitmaps.

Core components

- VideoActivity - a standard Android Activity. This hosts the 2D (non-VR) UI for the 360 viewer. This also demonstrates how to request permissions and launch a Daydream Activity.

- MonoscopicView - a GLSurfaceView that renders the 3D scene inside VideoActivity. It uses the Sensor API to adjust the OpenGL camera's orientation based on the phone's orientation. It also has a TouchTracker component that allows the user to change the camera's pitch & yaw by dragging on the screen.

- SceneRenderer and Mesh - the core OpenGL rendering components. Mesh generates and renders an equirectangular sphere that displays the video. SceneRenderer coordinates the entire rendering process in 2D (non-VR) and VR.

- MediaLoader - this parses the Intent and loads the file. It uses MediaPlayer to render video and Canvas to render images.

- VrVideoActivity - a GvrActivity that handles the Daydream-specific functionality. It uses the Daydream Controller API to let the user interact with the 2D (non-VR) UI while they are in VR.

- CanvasQuad - a minimal system to render 2D (non-VR) UI inside OpenGL scenes. In combination with VideoUiView, this class can render arbitrary Android Views to an OpenGL texture and then render that texture in the 3D scene. It also has basic functionality to convert Daydream Controller events into 2D (non-VR) Android touch events.
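To make the Mesh description more concrete, here is a minimal sketch of generating vertex data for an equirectangular sphere. It is not the sample's Mesh code. The EquirectSphere class, the interleaved x, y, z, u, v layout, and the axis convention (y up) are assumptions made for illustration, and the index buffer and OpenGL upload are omitted.

```java
/** Hypothetical mesh builder, not the sample's Mesh class. */
final class EquirectSphere {
    static final int FLOATS_PER_VERTEX = 5; // x, y, z, u, v

    /**
     * Builds a (latBands + 1) x (lonBands + 1) grid of vertices on a sphere.
     * Texture coordinates map the grid directly onto an equirectangular image:
     * u follows longitude and v follows latitude.
     */
    static float[] build(float radius, int latBands, int lonBands) {
        float[] data = new float[(latBands + 1) * (lonBands + 1) * FLOATS_PER_VERTEX];
        int i = 0;
        for (int lat = 0; lat <= latBands; lat++) {
            float v = (float) lat / latBands;   // 0 at the top row, 1 at the bottom row
            double theta = Math.PI * v;         // polar angle: 0 at the north pole
            for (int lon = 0; lon <= lonBands; lon++) {
                float u = (float) lon / lonBands;
                double phi = 2 * Math.PI * u;   // azimuth around the y axis
                data[i++] = (float) (radius * Math.sin(theta) * Math.sin(phi)); // x
                data[i++] = (float) (radius * Math.cos(theta));                 // y (up)
                data[i++] = (float) (radius * Math.sin(theta) * Math.cos(phi)); // z
                data[i++] = u;
                data[i++] = v;
            }
        }
        return data;
    }

    public static void main(String[] args) {
        float[] mesh = build(50f, 12, 24);
        System.out.println("Vertices: " + mesh.length / FLOATS_PER_VERTEX);
    }
}
```

Every vertex in the first and last rows of this grid lands on a pole, so a full row of vertices collapses to a single point at the top and bottom of the sphere.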

Extending the Video360 sample

Video360 includes basic functionality for rendering 360 media. There are several ways to extend the sample:

- Improve the sensor system in MonoscopicView. The sample demonstrates basic sensor input. It doesn't have any sensor fusion beyond what Android provides. Depending on the use case, it may be useful to incorporate sensor fusion to combine multiple sensors and inputs when manipulating the camera.

- Extend the functionality of TouchTracker. TouchTracker only allows the user to manipulate the pitch & yaw of the camera. The design also uses Euler angles to manipulate the camera. This can lead to strange interactions when the touch input is mixed with the gyroscope input. A better system would be to use quaternions to process the rotation. The touch events can then be used to drag the camera using spherical linear interpolation, which allows for more complex motion. However, the exact UI depends on the needs of the app that will embed this input system. It is also possible to use multi-touch gestures to allow the user to zoom in. This involves detecting gestures and modifying the field of view of the OpenGL camera.

- Improve media support. MediaLoader only has support for basic video files using MediaPlayer. A real application would use a more complex media system such as ExoPlayer to render video. ExoPlayer can render to a Surface just like MediaPlayer, but it has support for many more formats, including streaming video. You can also use the Grafika samples if you want to build your own media decoding pipeline.

- Add more 2D (non-VR) UI in VR. VideoUiView shows a minimal UI for playing video. However, the general design behind it can be extended to create more complex UIs. CanvasQuad demonstrates how to render an arbitrary Android View inside an OpenGL scene and allow the user to interact with the View via a Daydream Controller. Almost any Android View can then be embedded inside this root View, which allows for complex 2D (non-VR) UIs inside VR. A complex app can use a WebView to embed arbitrary HTML & JavaScript inside a VR app.

- Support more 360 media formats. Only equirectangular media is supported in the sample. This is a very common format for 360 content, but it is inefficient. It wastes pixels at the poles of the scene and has low resolution at the equator. The spherical mesh used to render this content is also inefficient due to the high number of vertices used near the poles. A better format is the equal area projection, which improves the visual quality of the media for a given file size. However, most media processing systems do not yet have support for this format.
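The quaternion suggestion for TouchTracker can be sketched in plain Java. This is a minimal illustration of spherical linear interpolation under stated assumptions, not code from the sample: the Quat class and its methods are hypothetical, and a real app would more likely rely on an existing math library.

```java
/** Hypothetical quaternion type, not part of the Video360 sample. */
final class Quat {
    final double w, x, y, z;

    Quat(double w, double x, double y, double z) {
        this.w = w;
        this.x = x;
        this.y = y;
        this.z = z;
    }

    /** Unit quaternion for a rotation of angleRad about the given axis. */
    static Quat fromAxisAngle(double ax, double ay, double az, double angleRad) {
        double len = Math.sqrt(ax * ax + ay * ay + az * az);
        double s = Math.sin(angleRad / 2) / len;
        return new Quat(Math.cos(angleRad / 2), ax * s, ay * s, az * s);
    }

    Quat normalized() {
        double n = Math.sqrt(w * w + x * x + y * y + z * z);
        return new Quat(w / n, x / n, y / n, z / n);
    }

    /** Spherical linear interpolation from a (t = 0) to b (t = 1). */
    static Quat slerp(Quat a, Quat b, double t) {
        double dot = a.w * b.w + a.x * b.x + a.y * b.y + a.z * b.z;
        double sign = 1.0;
        if (dot < 0) {          // q and -q are the same rotation: take the shorter arc
            dot = -dot;
            sign = -1.0;
        }
        double wa, wb;
        if (dot > 0.9995) {     // nearly identical: fall back to linear weights
            wa = 1 - t;
            wb = t;
        } else {
            double theta = Math.acos(dot);
            double sinTheta = Math.sin(theta);
            wa = Math.sin((1 - t) * theta) / sinTheta;
            wb = Math.sin(t * theta) / sinTheta;
        }
        wb *= sign;
        return new Quat(wa * a.w + wb * b.w, wa * a.x + wb * b.x,
                wa * a.y + wb * b.y, wa * a.z + wb * b.z).normalized();
    }

    public static void main(String[] args) {
        Quat start = new Quat(1, 0, 0, 0);                   // no rotation
        Quat target = fromAxisAngle(0, 1, 0, Math.PI / 2);   // 90 degrees of yaw
        Quat mid = slerp(start, target, 0.5);
        System.out.printf("w=%.4f y=%.4f%n", mid.w, mid.y);
    }
}
```

In a touch handler, a drag could set the target orientation and the camera could move toward it by calling slerp with a small t each frame, which avoids mixing separate pitch and yaw angles with the gyroscope's rotation.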