Page Summary

- The "wisdom of the crowd" suggests that collective opinions can provide surprisingly accurate judgments, as demonstrated by a 1906 ox weight-guessing competition where the collective guess was remarkably close to the true weight.
- This phenomenon can be modeled with the central limit theorem: informally, the average of multiple independent, unbiased estimates tends to converge towards the true value.
- In machine learning, ensembles leverage this principle by combining predictions from multiple models, improving overall accuracy when individual models are sufficiently diverse and reasonably accurate.
- While ensembles require more computational resources, their enhanced predictive performance often outweighs the added cost, especially when individual models are carefully selected and combined.
- Achieving optimal ensemble performance involves striking a balance between ensuring model independence to avoid redundant predictions and maintaining the individual quality of sub-models for overall accuracy.

This is an ox.

Figure 19. An ox.

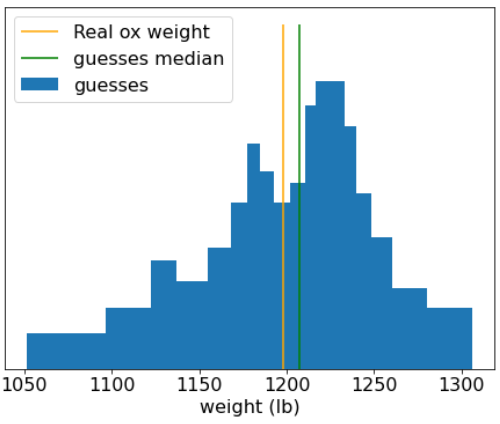

In 1906, a weight-judging competition was held in England, in which 787 participants guessed the weight of an ox. The median error of the individual guesses was 37 lb (an error of 3.1%). However, the median of all the guesses was only 9 lb away from the real weight of the ox (1198 lb), an error of only 0.7%.

Figure 20. Histogram of individual weight guesses.

This anecdote illustrates the wisdom of the crowd: in certain situations, collective opinion provides very good judgment.

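Mathematically, the wisdom of the crowd can be modeled with the central limit theorem: informally, the expected squared error between a value and the average of N independent, unbiased noisy estimates of this value tends to zero with a 1/N factor. However, if the estimates are not independent (for example, because their errors are positively correlated), the variance of their average is greater, so the error shrinks more slowly.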
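The 1/N behavior can be made concrete with a short derivation. This is a sketch under simplifying assumptions that are not stated in the text above: each estimate $x_i$ equals the true value $v$ plus zero-mean noise $\varepsilon_i$ with the same variance $\sigma^2$, and every pair of noise terms has the same correlation $\rho$. All of these symbols are introduced here for illustration only.

$$
x_i = v + \varepsilon_i, \qquad \mathbb{E}[\varepsilon_i] = 0, \qquad \mathrm{Var}(\varepsilon_i) = \sigma^2, \qquad \mathrm{Corr}(\varepsilon_i, \varepsilon_j) = \rho \quad (i \neq j)
$$

$$
\mathbb{E}\!\left[\left(\frac{1}{N}\sum_{i=1}^{N} x_i - v\right)^{2}\right]
= \frac{1}{N^{2}}\left(N\sigma^{2} + N(N-1)\,\rho\,\sigma^{2}\right)
= \frac{\sigma^{2}}{N} + \frac{N-1}{N}\,\rho\,\sigma^{2}
$$

With independent estimates ($\rho = 0$), the expected squared error is $\sigma^2 / N$. With positively correlated estimates ($\rho > 0$), the error approaches $\rho\,\sigma^2$ as N grows instead of zero, so adding more estimates eventually stops helping.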

In machine learning, an ensemble is a collection of models whose predictions are averaged (or aggregated in some way). If the ensemble models are different enough without being too bad individually, the quality of the ensemble is generally better than the quality of each of the individual models. An ensemble requires more training and inference time than a single model, because you have to train and run multiple models instead of one.
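As a minimal sketch of prediction averaging, the toy example below combines three hand-written "models" of the function y = 2x. The target function, the three models (`model_a`, `model_b`, `model_c`), and the evaluation points are all hypothetical and chosen only so that the models make different kinds of errors; they do not come from any library or dataset.

```python
from statistics import fmean


def true_fn(x):
    """Hypothetical ground truth used only for this illustration."""
    return 2.0 * x


# Three hypothetical models, each wrong in a different way.
def model_a(x):
    return 2.0 * x + 1.0  # consistently overestimates


def model_b(x):
    return 2.0 * x - 1.2  # consistently underestimates


def model_c(x):
    return 2.1 * x - 0.2  # slope slightly off


def ensemble_predict(models, x):
    """Aggregate by averaging the predictions of all sub-models."""
    return fmean([m(x) for m in models])


def mse(predict, xs):
    """Mean squared error of `predict` against the ground truth on `xs`."""
    return fmean([(predict(x) - true_fn(x)) ** 2 for x in xs])


models = [model_a, model_b, model_c]
xs = [0.0, 1.0, 2.0, 3.0, 4.0]

individual = {m.__name__: mse(m, xs) for m in models}
ensemble = mse(lambda x: ensemble_predict(models, x), xs)

print("individual MSE:", individual)
print("ensemble MSE:  ", ensemble)
```

In this toy setup the individual errors partly cancel, so the averaged prediction has a lower error than each sub-model. The inference cost is also visible: every call to `ensemble_predict` evaluates all three models. If all the models erred in the same direction, averaging would not help in the same way.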

Informally, for an ensemble to work best, the individual models should be independent. As an illustration, an ensemble composed of 10 copies of the exact same model (that is, not independent at all) won't be better than the individual model. On the other hand, forcing models to be independent could mean making them worse. Effective ensembling requires finding the balance between the independence of the sub-models and their individual quality.
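The effect of independence can be checked with a small simulation. The sketch below is an illustration under assumed conditions, not a model of any real ensemble: each of the 10 sub-models has a zero-mean Gaussian prediction error with the same standard deviation, and a hypothetical `correlation` parameter controls how much of that error is shared by all sub-models. A correlation of 1.0 corresponds to 10 copies of the same model; 0.0 corresponds to fully independent models.

```python
import random


def ensemble_mse(n_models, correlation, noise_std=1.0, trials=20000, seed=0):
    """Monte Carlo estimate of the squared error of an averaged prediction.

    Each sub-model's error is a mix of one shared noise term and one private
    noise term, so every error has variance noise_std**2 and every pair of
    errors has the given correlation (assumed to lie in [0, 1]).
    """
    rng = random.Random(seed)
    shared_weight = correlation ** 0.5
    private_weight = (1.0 - correlation) ** 0.5
    total = 0.0
    for _ in range(trials):
        shared = rng.gauss(0.0, noise_std)
        errors = [
            shared_weight * shared + private_weight * rng.gauss(0.0, noise_std)
            for _ in range(n_models)
        ]
        # Error of the ensemble is the average of the sub-model errors.
        total += (sum(errors) / n_models) ** 2
    return total / trials


single = ensemble_mse(1, 0.0)
for rho in (0.0, 0.5, 1.0):
    print(f"correlation={rho}: ensemble MSE ~ {ensemble_mse(10, rho):.3f} "
          f"(single model ~ {single:.3f})")
```

With identical copies (correlation 1.0) the ensemble error matches that of a single model, while independent sub-models reduce it by roughly a factor of 10. The simulation holds individual quality fixed; in practice, techniques that make models more independent can also raise each model's own error, which is the trade-off described above.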