Page Summary

-

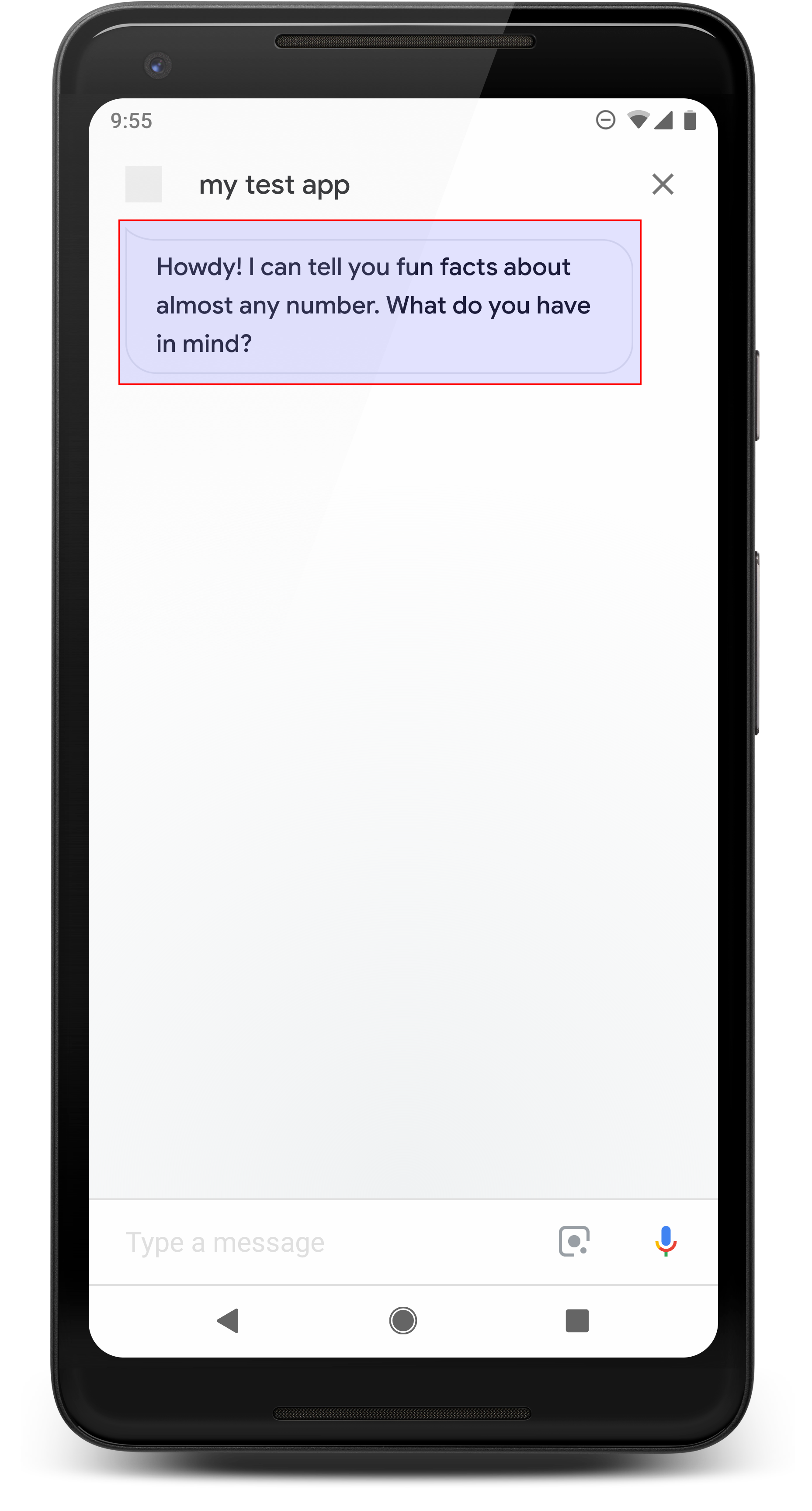

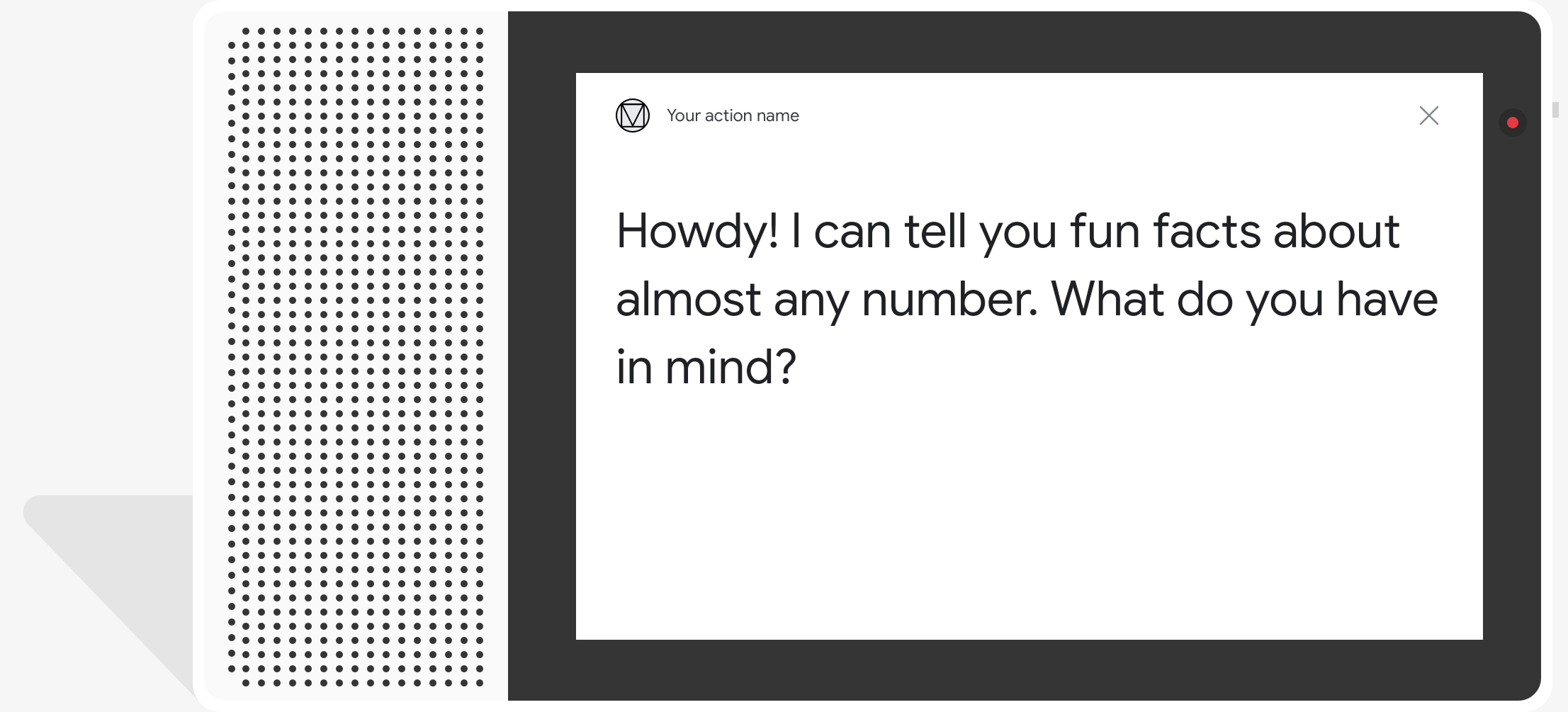

Simple responses appear as chat bubbles and use text-to-speech or SSML for audio output.

-

Chat bubble content should match or transcribe the audio output to improve user comprehension.

-

Simple responses defined in

first_simpleandlast_simpleobjects in a prompt are sent before any rich responses. -

The

speechproperty is for the audio output (SSML or text-to-speech), while thetextproperty is for the visual chat bubble content. -

Simple responses have optional

speechandtextproperties with character limits and recommended lengths for optimal display.

Visually, simple responses take the form of a chat bubble, and they use text-to-speech (TTS) or Speech Synthesis Markup Language (SSML) for sound. Short simple responses keep users engaged with a clear visual and audio interface that can be paired with other conversational elements.

Chat bubble content in a simple response must be a phonetic subset or a complete transcript of the TTS/SSML output. This helps users map out what your Action says and increases comprehension in various conditions.
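One way to keep the chat bubble a complete transcript is to derive the `text` value from the SSML you already wrote for `speech`. The sketch below is a naive, regex-based illustration, not an SSML parser: the helper name `ssmlToBubbleText` is hypothetical, and it assumes the words you want displayed are the plain text between tags. It does not account for elements whose spoken form differs from their text content (for example `<say-as>` or `<sub>`), or for fallback text inside `<audio>`, so review its output rather than trusting it blindly.

```javascript
// Hypothetical helper: derives plain chat-bubble text from an SSML string by
// removing markup. Naive regex-based sketch; not a full SSML/XML parser.
function ssmlToBubbleText(ssml) {
  return ssml
    .replace(/<[^>]*>/g, ' ')   // drop every tag, e.g. <speak>, <break time="1s"/>
    .replace(/&lt;/g, '<')      // decode the XML entities SSML text may contain
    .replace(/&gt;/g, '>')
    .replace(/&quot;/g, '"')
    .replace(/&apos;/g, "'")
    .replace(/&amp;/g, '&')     // decode &amp; last so "&amp;lt;" becomes "&lt;", not "<"
    .replace(/\s+/g, ' ')       // collapse the whitespace left behind by removed tags
    .trim();
}

const speech = '<speak>Hello! <break time="500ms"/> Welcome back.</speak>';
console.log(ssmlToBubbleText(speech)); // Hello! Welcome back.
```

Deriving `text` from `speech` this way guarantees the bubble never shows words the Action does not say; you can still shorten the result by hand, since a phonetic subset is also allowed.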

In a prompt, text you provide in the `first_simple` and `last_simple` objects uses the properties of a simple response. Google Assistant sends all simple responses in a prompt, then sends the final rich response in the prompt queue.

Properties

The simple response type has the following properties:

| Property | Type | Requirement | Description |
|---|---|---|---|
| `speech` | string | Optional | Represents the words to be spoken to the user in SSML or text-to-speech. If the `override` field in the containing prompt is "true", then speech defined in this field replaces the previous simple prompt's speech. |
| `text` | string | Optional | Text to display in the chat bubble. Strings longer than 640 characters are truncated at the first word break (or whitespace) before 640 characters. We recommend using fewer than 300 characters to prevent content from extending past the screen, especially when paired with a card or other visual element. If not provided, Assistant renders a display version of the |
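To see the `text` limits in practice, you can approximate the truncation rule before sending a response. This is a minimal sketch assuming the rule works exactly as the table describes (cut at the last whitespace before 640 characters); Assistant's actual rendering logic is not published here, and the helpers `truncateBubbleText` and `isWithinRecommendedLength` are hypothetical names, not part of any library. The hard cut used when no whitespace exists is also an assumption.

```javascript
// Limits from the `text` property description.
const MAX_BUBBLE_CHARS = 640;         // longer text is truncated
const RECOMMENDED_BUBBLE_CHARS = 300; // recommended upper bound

// Hypothetical helper approximating the documented rule: text longer than the
// limit is cut at the last word break (whitespace) before the limit.
function truncateBubbleText(text, limit = MAX_BUBBLE_CHARS) {
  if (text.length <= limit) {
    return text; // short enough to display unchanged
  }
  // If the character just past the limit is whitespace, the first `limit`
  // characters already end on a complete word.
  if (/\s/.test(text[limit])) {
    return text.slice(0, limit).trimEnd();
  }
  const head = text.slice(0, limit);
  // Position of the whitespace that starts the final, partial word.
  const breakIndex = head.search(/\s\S*$/);
  const cut = breakIndex > 0 ? head.slice(0, breakIndex).trimEnd() : '';
  // With no usable word break, fall back to a hard cut at the limit (an assumption).
  return cut.length > 0 ? cut : head;
}

// True when the text stays under the recommended length.
function isWithinRecommendedLength(text) {
  return text.length < RECOMMENDED_BUBBLE_CHARS;
}
```

Checking `isWithinRecommendedLength` during development is usually more useful than emulating truncation, because text that stays under 300 characters never reaches the 640-character cutoff.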

Sample code

YAML

```yaml
candidates:
  - first_simple:
      variants:
        - speech: This is the first simple response.
          text: This is the 1st simple response.
    last_simple:
      variants:
        - speech: This is the last simple response.
          text: This is the last simple response.
```

JSON

```json
{
  "candidates": [
    {
      "first_simple": {
        "variants": [
          {
            "speech": "This is the first simple response.",
            "text": "This is the 1st simple response."
          }
        ]
      },
      "last_simple": {
        "variants": [
          {
            "speech": "This is the last simple response.",
            "text": "This is the last simple response."
          }
        ]
      }
    }
  ]
}
```

Node.js

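```javascript
const {conversation, Simple} = require('@assistant/conversation');

const app = conversation();

// Handler that adds two simple responses to the prompt.
app.handle('Simple', conv => {
  conv.add(new Simple({
    speech: 'This is the first simple response.',
    text: 'This is the 1st simple response.'
  }));
  conv.add(new Simple({
    speech: 'This is the last simple response.',
    text: 'This is the last simple response.'
  }));
});
```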

JSON

```json
{
  "responseJson": {
    "session": {
      "id": "session_id",
      "params": {}
    },
    "prompt": {
      "override": false,
      "firstSimple": {
        "speech": "This is the first simple response.",
        "text": "This is the 1st simple response."
      },
      "lastSimple": {
        "speech": "This is the last simple response.",
        "text": "This is the last simple response."
      }
    }
  }
}
```

SSML and sounds

Use SSML and sounds in your responses to give them more polish and enhance the user experience. See the SSML documentation for more information.

Sound library

We provide a variety of free, short sounds in our sound library. These sounds are hosted for you, so all you need to do is include them in your SSML.
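As an illustration of including a sound in SSML, the sketch below builds a `speech` string that plays an audio clip before a sentence, and pairs it with a `text` value containing only the spoken words, so the chat bubble stays a transcript. The helper names `escapeXml` and `simpleWithSound` are hypothetical, and the URL in the usage line is a placeholder, not a real sound library clip; substitute the URL of the sound you choose. Escaping matters because characters such as `&` in ordinary text would otherwise make the SSML malformed.

```javascript
// Hypothetical helper: escapes characters that are special in XML/SSML.
function escapeXml(value) {
  return value
    .replace(/&/g, '&amp;')   // escape & first so later entities are not double-escaped
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&apos;');
}

// Hypothetical helper: plays a sound, then speaks the sentence. The chat
// bubble shows only the sentence, because the sound effect has no transcript.
function simpleWithSound(sentence, soundUrl) {
  const speech =
    `<speak><audio src="${escapeXml(soundUrl)}"></audio> ${escapeXml(sentence)}</speak>`;
  return { speech, text: sentence };
}

const response = simpleWithSound(
  'Time is up & the round is over.',
  'https://example.com/sounds/chime.ogg' // placeholder URL, not a real library clip
);
console.log(response.speech);
```

The returned object has the same `speech` and `text` fields used in the Node.js sample, so it could be passed to `new Simple(...)` inside a handler.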