Page Summary

- Fulfillment defines the conversational interface for your Action to obtain user input and the logic to process it.

- Intents in Dialogflow define the grammar for what users say to trigger an intent and the fulfillment to process it.

- You can create intents with specific names, contexts, events, training phrases, actions/parameters, responses, and fulfillment settings.

- For some intents, you can build responses directly within Dialogflow without calling fulfillment, which is useful for static responses.

- Your fulfillment code, hosted in a webhook, receives requests from Dialogflow when an intent is triggered and processes them to return a response.

- Fallback intents are triggered when Dialogflow cannot match user input to any training phrases, and should primarily be used to reprompt the user for valid input.

Fulfillment defines the conversational interface for your Action to obtain user input and the logic to process the input and eventually fulfill the Action.

Define your conversation

Now that you've defined Actions, you can build the corresponding conversation for those Actions. You do this by creating Dialogflow intents that define the grammar, or what users need to say to trigger the intent, and the corresponding fulfillment to process the intent when it's triggered.

You can create as many intents as you want to define your entire conversation's grammar.

Create intents

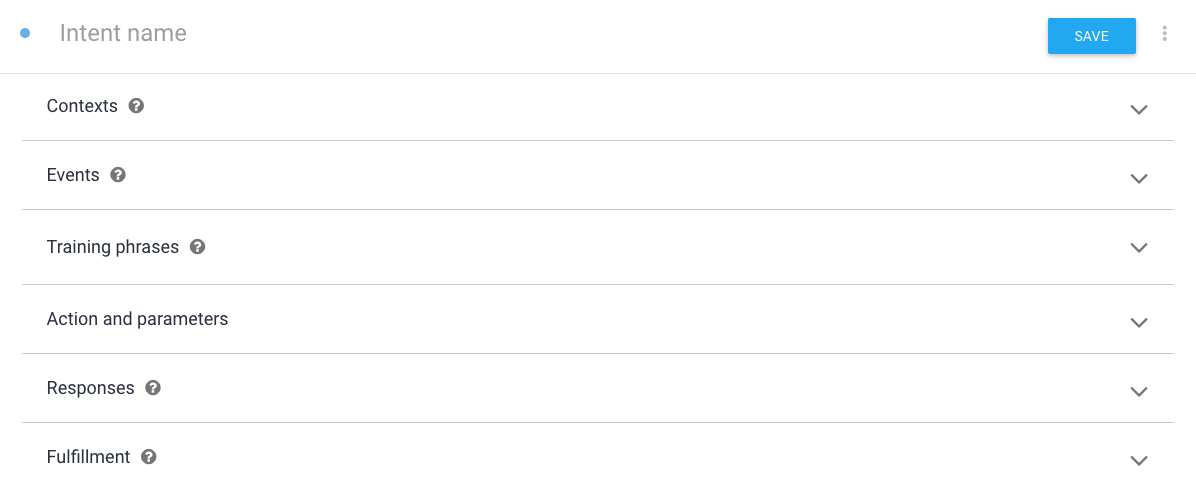

Click the + sign by the Intents menu item in Dialogflow's left navigation. The Intent Editor appears where you can enter the following information:

- Intent name is the name of the intent that's displayed in the IDE.

- Contexts let you scope the triggering of the intent to specific cases. Read the Dialogflow documentation on Contexts for more information.

- Events trigger intents without the need for users to say anything. One example event is the GOOGLE_ASSISTANT_WELCOME event, which allows the Google Assistant to invoke your Action. This event is used for your Action's default Action. See our documentation on built-in helper intents for more information.

- Training phrases define what users need to say (the grammar) to trigger the intent. Enter a few example phrases (5-10) of what users can say to trigger the intent. Dialogflow automatically handles natural variations of the example phrases you provide.

- Action and parameters define what data to pass to fulfillment, if fulfillment is enabled for this intent. This includes data parsed from the user input and the name that you can use in your fulfillment to detect which intent was triggered. You'll use this name later to map your intent to its corresponding fulfillment logic. See Actions and parameters in the Dialogflow documentation for more information about defining Actions.

- Responses is the Dialogflow Response Builder, where you can define the response to this intent directly within Dialogflow, without calling fulfillment. This feature is useful for static responses that don't require fulfillment, such as simple welcome or goodbye messages. However, you'll likely use fulfillment to respond to your users for most intents.

- Fulfillment specifies whether to call your fulfillment when this intent is triggered. You'll likely enable this for most intents in your Dialogflow agent. To see this item in the intent, you must have fulfillment enabled for the agent in the Fulfillment menu.
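When fulfillment is enabled, the values configured under Action and parameters reach your webhook inside the request. This sketch assumes the Dialogflow ES (v2) webhook format, where the action name is in queryResult.action, the extracted values are in queryResult.parameters, and the intent's display name is in queryResult.intent.displayName. The helper readIntentInfo and the sample request are hypothetical and trimmed to the fields being read.

```javascript
// Minimal sketch: read intent metadata from a Dialogflow ES (v2) webhook
// request body. readIntentInfo and sampleRequest are hypothetical.
function readIntentInfo(body) {
  const result = body.queryResult || {};
  return {
    intentName: result.intent ? result.intent.displayName : undefined,
    action: result.action,
    parameters: result.parameters || {},
  };
}

// Trimmed, hypothetical request for an intent named "Input Number".
const sampleRequest = {
  queryResult: {
    queryText: 'seven',
    action: 'input.number',
    parameters: {num: 7},
    intent: {displayName: 'Input Number'},
  },
};

const info = readIntentInfo(sampleRequest);
console.log(info.intentName, info.action, info.parameters.num);
// prints: Input Number input.number 7
```

Either the intent name or the action name can serve as the key that maps a request to its fulfillment logic; the client library examples on this page key on the intent name.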

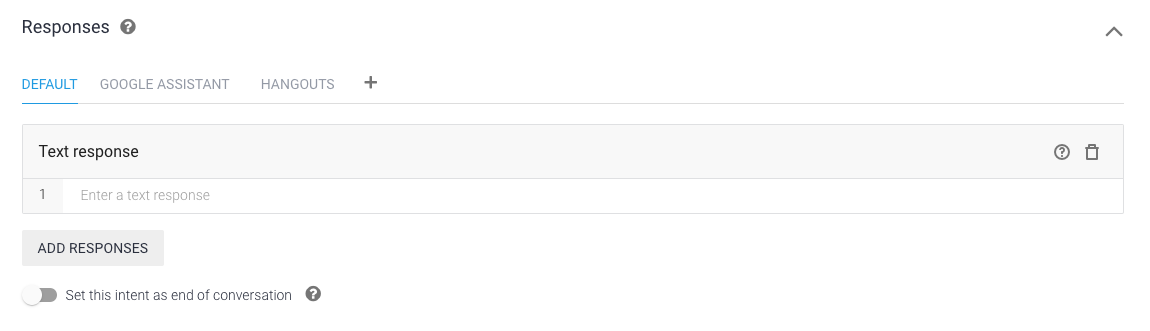

Building responses in Dialogflow

For some intents, you might not need to have your fulfillment return a response. In these cases, you can use the response builder in Dialogflow to create responses.

In the Responses area, provide the textual response you want to return to users. Default text responses are simple TTS text responses that can work across multiple Dialogflow integrations. Responses for Google Assistant are described on the Responses page.

Building fulfillment responses

Your Action's fulfillment logic is hosted in a webhook. For instance, in the Silly Name Maker sample, this logic is found in index.js for the Cloud Function for Firebase.

When an intent that uses fulfillment is triggered, you receive a request from Dialogflow that contains information about the intent. You then respond to the request by processing the intent and returning a response. The format of this request and response is defined by the Dialogflow webhook.
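To make the exchange concrete, here is a minimal sketch of what a handler does without the client library. It assumes the Dialogflow ES (v2) webhook format with an Actions on Google payload under payload.google; verify the field names against the webhook reference before relying on them. The expectUserResponse flag is what separates keeping the conversation open (what ask() does) from ending it (what close() does). The function names are hypothetical.

```javascript
// Minimal sketch of webhook fulfillment without the client library.
// Assumes the Dialogflow v2 webhook JSON format with an Actions on Google
// payload under payload.google. Function names are hypothetical.

// Build a response containing one simple text-to-speech message.
// expectUserResponse: true keeps the conversation open (like ask());
// false ends it (like close()).
function buildResponse(text, expectUserResponse) {
  return {
    fulfillmentText: text,
    payload: {
      google: {
        expectUserResponse,
        richResponse: {
          items: [{simpleResponse: {textToSpeech: text}}],
        },
      },
    },
  };
}

// Map a parsed request body to a response body by intent name.
function handleWebhook(body) {
  const intentName = body.queryResult.intent.displayName;
  const params = body.queryResult.parameters || {};
  switch (intentName) {
    case 'Default Welcome Intent':
      return buildResponse('Welcome to number echo! Say a number.', true);
    case 'Input Number':
      return buildResponse(`You said ${params.num}`, false);
    default:
      // Unknown intents get a reprompt instead of an empty response.
      return buildResponse("Sorry, I didn't get that. Say a number.", true);
  }
}

const reply = handleWebhook({
  queryResult: {intent: {displayName: 'Input Number'}, parameters: {num: 42}},
});
console.log(JSON.stringify(reply.payload.google));
```

The client library builds this JSON for you, which is one reason it is the recommended approach.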

We highly recommend that you use the Node.js client library to process requests and return responses. Here's the general process for using the client library:

- Initialize the Dialogflow object. This object automatically handles listening for requests and parsing them so that you can process them in your fulfillment.

- Create functions to handle requests. These functions process the user input and other components of the intent and build the response to return to Dialogflow.
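The two steps above follow a register-and-dispatch pattern: handlers are registered under intent names, and each incoming request is routed to the matching handler, with an optional catch-all. The following library-free sketch illustrates that pattern only; IntentRouter and its methods are hypothetical and are not part of the actions-on-google API.

```javascript
// Hypothetical sketch of intent-name dispatch, loosely mirroring the
// app.intent()/app.fallback() pattern. IntentRouter is illustrative only.
class IntentRouter {
  constructor() {
    this.handlers = new Map();
    this.fallbackHandler = null;
  }

  // Register a handler for one Dialogflow intent name.
  intent(name, handler) {
    this.handlers.set(name, handler);
    return this;
  }

  // Register a handler used when no intent-specific handler exists.
  fallback(handler) {
    this.fallbackHandler = handler;
    return this;
  }

  // Route an intent name plus its parameters to the right handler.
  handle(intentName, parameters = {}) {
    const handler = this.handlers.get(intentName) || this.fallbackHandler;
    if (!handler) {
      throw new Error(`No handler registered for intent: ${intentName}`);
    }
    return handler(parameters, intentName);
  }
}

const router = new IntentRouter()
  .intent('Default Welcome Intent', () => 'Welcome to number echo! Say a number.')
  .intent('Input Number', ({num}) => `You said ${num}`)
  .fallback((params, intentName) => `No handler for ${intentName}`);

console.log(router.handle('Input Number', {num: 3})); // prints: You said 3
```

Keeping one handler per intent keeps each intent's logic isolated; the catch-all handler corresponds to the single fallback-function alternative.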

Initialize the Dialogflow object

The following code instantiates Dialogflow and does some boilerplate Node.js setup for Google Cloud Functions:

Node.js

```javascript
'use strict';

const {dialogflow} = require('actions-on-google');
const functions = require('firebase-functions');

const app = dialogflow({debug: true});

app.intent('Default Welcome Intent', (conv) => {
  // Do things
});

exports.yourAction = functions.https.onRequest(app);
```

Java

```java
public class DfFulfillment extends DialogflowApp {
  private static final Logger LOGGER = LoggerFactory.getLogger(DfFulfillment.class);

  @ForIntent("Default Welcome Intent")
  public ActionResponse welcome(ActionRequest request) {
    ResponseBuilder rb = getResponseBuilder(request);
    // Do things
    // ...
    return rb.build();
  }
}
```

Create functions to handle requests

When users speak a phrase that triggers an intent, you receive a request from Dialogflow that you handle with a function in your fulfillment. In this function, you'll generally do the following things:

- Carry out any logic required to process the user input.

- Build your responses to respond to triggered intents. Take into account the surface that your users are using to construct appropriate responses. See surface capabilities for more information on how to tailor responses for different surfaces.

- Call the ask() function with your response, or close() if the response should end the conversation.
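As an illustration of tailoring a response to the user's surface, the following sketch checks a request's surface capabilities before choosing what to say. It assumes the Actions on Google conversation format, where the Dialogflow request carries a list at originalDetectIntentRequest.payload.surface.capabilities with entries like { name: 'actions.capability.SCREEN_OUTPUT' }. The helper names and response wording are hypothetical.

```javascript
// Sketch: choose response text based on surface capabilities. Assumes the
// list sits at originalDetectIntentRequest.payload.surface.capabilities.
// hasCapability and welcomeText are hypothetical helpers.
const SCREEN_OUTPUT = 'actions.capability.SCREEN_OUTPUT';

function hasCapability(body, capability) {
  const payload = (body.originalDetectIntentRequest || {}).payload || {};
  const capabilities = (payload.surface || {}).capabilities || [];
  return capabilities.some((c) => c.name === capability);
}

function welcomeText(body) {
  // Hypothetical wording: mention typing only when a screen is available.
  return hasCapability(body, SCREEN_OUTPUT)
    ? 'Welcome to number echo! Say or type a number.'
    : 'Welcome to number echo! Say a number.';
}

// A voice-only request, such as one from a smart speaker.
const speakerRequest = {
  originalDetectIntentRequest: {
    payload: {surface: {capabilities: [{name: 'actions.capability.AUDIO_OUTPUT'}]}},
  },
};
console.log(welcomeText(speakerRequest)); // prints: Welcome to number echo! Say a number.
```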

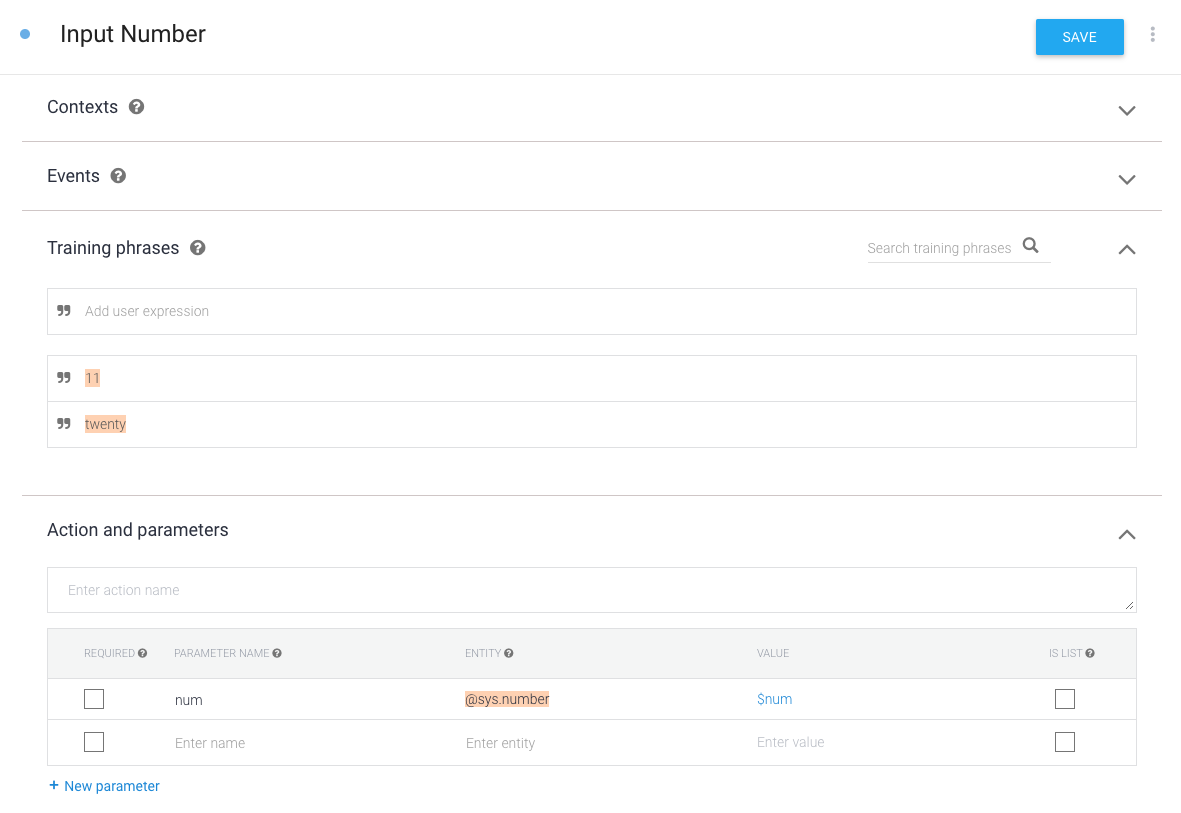

The following code shows how to build two TTS responses: one that handles an invocation intent (input.welcome) by welcoming the user to your Action, and one that handles a dialog intent (input.number) by echoing a number that the user has spoken. Each handler is registered under the name of its Dialogflow intent:

Node.js

```javascript
const app = dialogflow();

app.intent('Default Welcome Intent', (conv) => {
  conv.ask('Welcome to number echo! Say a number.');
});

app.intent('Input Number', (conv, {num}) => {
  // extract the num parameter as a local variable
  conv.close(`You said ${num}`);
});
```

Java

```java
@ForIntent("Default Welcome Intent")
public ActionResponse defaultWelcome(ActionRequest request) {
  ResponseBuilder rb = getResponseBuilder(request);
  rb.add("Welcome to number echo! Say a number.");
  return rb.build();
}

@ForIntent("Input Number")
public ActionResponse inputNumber(ActionRequest request) {
  ResponseBuilder rb = getResponseBuilder(request);
  Integer number = (Integer) request.getParameter("num");
  rb.add("You said " + number.toString());
  return rb.endConversation().build();
}
```

The custom intent Input Number, which accompanies the code above, uses the @sys.number entity to extract a number from user utterances. The intent then sends the num parameter, which contains the number from the user, to the corresponding function in your fulfillment.
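Because num comes from the user's utterance, it can help to validate it before echoing it back. This sketch assumes that @sys.number values arrive in the parameters object as JSON numbers and that an unfilled parameter arrives as an empty string; confirm both against the requests your agent actually sends. formatNumberReply is a hypothetical helper, not part of either client library.

```javascript
// Hypothetical helper: build the echo reply from Dialogflow parameters,
// assuming @sys.number values arrive as JSON numbers and an unfilled
// parameter arrives as an empty string.
function formatNumberReply(parameters) {
  const num = parameters.num;
  if (typeof num !== 'number' || !Number.isFinite(num)) {
    // Keep the conversation open and ask again instead of echoing an
    // empty or malformed value.
    return {text: "Sorry, I didn't catch a number. Say a number.", endConversation: false};
  }
  return {text: `You said ${num}`, endConversation: true};
}

console.log(formatNumberReply({num: 12}).text); // prints: You said 12
console.log(formatNumberReply({num: ''}).text);
```

In the Node.js handler, endConversation would decide between conv.close() and conv.ask().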

Instead of having individual handlers for each intent, you can alternatively add a fallback function. Inside the fallback function, check which intent triggered it and respond accordingly.

Node.js

```javascript
const WELCOME_INTENT = 'Default Welcome Intent';
const NUMBER_INTENT = 'Input Number';
const NUMBER_PARAMETER = 'num';

// you can add a fallback function instead of a function for individual intents
app.fallback((conv) => {
  // intent contains the name of the intent
  // you defined in the Intents area of Dialogflow
  const intent = conv.intent;
  switch (intent) {
    case WELCOME_INTENT:
      conv.ask('Welcome! Say a number.');
      break;
    case NUMBER_INTENT: {
      const num = conv.parameters[NUMBER_PARAMETER];
      conv.close(`You said ${num}`);
      break;
    }
    default:
      // Respond to any other intent so the request never goes unanswered
      conv.ask("Sorry, I didn't get that. Say a number.");
  }
});
```

Java

```java
// you can add a fallback function instead of a function for individual intents
@ForIntent("Default Fallback Intent")
public ActionResponse fallback(ActionRequest request) {
  final String WELCOME_INTENT = "Default Welcome Intent";
  final String NUMBER_INTENT = "Input Number";
  final String NUMBER_ARGUMENT = "num";
  // intent contains the name of the intent
  // you defined in the Intents area of Dialogflow
  ResponseBuilder rb = getResponseBuilder(request);
  String intent = request.getIntent();
  switch (intent) {
    case WELCOME_INTENT:
      rb.add("Welcome! Say a number.");
      break;
    case NUMBER_INTENT:
      Integer num = (Integer) request.getParameter(NUMBER_ARGUMENT);
      rb.add("You said " + num).endConversation();
      break;
    default:
      // Respond to any other intent so the response is never empty
      rb.add("Sorry, I didn't get that. Say a number.");
  }
  return rb.build();
}
```

No-match reprompting

When Dialogflow cannot match any of the input grammars defined in your intents' Training phrases, it triggers a fallback intent. Fallback intents typically reprompt the user to provide the necessary input for your Action. You can provide reprompt phrases by specifying them in the Response area of a fallback intent or you can use a webhook to provide responses.

When a user's response doesn't match your Action's training phrases, Google Assistant attempts to handle the input. This behavior lets users switch Actions in the middle of a conversation. For example, a user asks, "What films are playing this week?" and then changes context mid-conversation: "What is the weather tomorrow?" Because "What is the weather tomorrow?" isn't a valid response to the conversation triggered by the initial prompt, Assistant automatically attempts to handle the query and move the user into an appropriate conversation.

If Assistant can't find an appropriate Action that matches the user's input, the user is returned to the context of your Action.

Because Assistant may interrupt your Action to respond to a valid no-match scenario, do not use fallback intents as a way to fulfill user queries. You should only use fallback intents to reprompt your user for valid input.

To create a fallback intent:

- Click Intents in the navigation menu of Dialogflow.

- Click ⋮ next to Create Intent and select Create Fallback Intent. (Alternatively, click the Default Fallback Intent to edit it.)

Specify reprompt phrases to speak back to users. These phrases should be conversational and as relevant as possible to the user's current context.

To do this without fulfillment: Specify phrases in the Response area of the intent. Dialogflow randomly chooses phrases from this list to speak back to users until a more specific intent is triggered.

To do this with fulfillment:

- Toggle Enable webhook call for this intent in the intent's Fulfillment section.

- In your fulfillment logic, handle the fallback intent like any other intent, as described in the Create functions to handle requests section.

For example, the following function uses the conv.data object (an arbitrary data payload that you can use to maintain state) from the Node.js client library to store a counter that tracks how many times a fallback intent is triggered. If it's triggered more than once, the Action quits. While it's not shown in the code, you should reset this counter to 0 whenever a non-fallback intent is triggered. (See the Number Genie sample for details on how to implement this.)

Node.js

```javascript
const DONE_YES_NO_CONTEXT = 'done_yes_no_context';

app.intent('Default Fallback Intent', (conv) => {
  // conv.data starts empty, so initialize the counter before incrementing
  conv.data.fallbackCount = (conv.data.fallbackCount || 0) + 1;
  // Reprompt once before ending the game
  if (conv.data.fallbackCount === 1) {
    conv.contexts.set(DONE_YES_NO_CONTEXT, 5);
    conv.ask('Are you done playing Number Genie?');
  } else {
    conv.close(`Since I'm still having trouble, I'll stop here. ` +
        `Let's play again soon.`);
  }
});
```

Java

```java
@ForIntent("Default Fallback Intent")
public ActionResponse defaultFallback(ActionRequest request) {
  final String DONE_YES_NO_CONTEXT = "done_yes_no_context";
  ResponseBuilder rb = getResponseBuilder(request);
  Object stored = request.getConversationData().get("fallbackCount");
  // Read through Number to avoid assuming the exact boxed numeric type
  int fallbackCount = stored == null ? 0 : ((Number) stored).intValue();
  fallbackCount++;
  request.getConversationData().put("fallbackCount", fallbackCount);
  // Reprompt once before ending the game
  if (fallbackCount == 1) {
    rb.add(new ActionContext(DONE_YES_NO_CONTEXT, 5));
    rb.add("Are you done playing Number Genie?");
  } else {
    rb.add("Since I'm still having trouble, I'll stop here. Let's play again soon.")
        .endConversation();
  }
  return rb.build();
}
```
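The counter logic, including the reset on non-fallback intents that the example leaves out, can be written as a small pure function over the conversation data. This is a library-free sketch: conversationData stands in for conv.data, and trackFallback, MAX_FALLBACKS, and the returned labels are hypothetical names chosen for illustration.

```javascript
// Library-free sketch of a fallback counter. conversationData stands in
// for conv.data; trackFallback, MAX_FALLBACKS, and the labels are hypothetical.
const FALLBACK_INTENT = 'Default Fallback Intent';
const MAX_FALLBACKS = 2;

// Update the counter for one turn and decide what the Action should do.
function trackFallback(conversationData, intentName) {
  if (intentName !== FALLBACK_INTENT) {
    // Any matched, non-fallback intent resets the counter to 0.
    conversationData.fallbackCount = 0;
    return 'handle-intent';
  }
  conversationData.fallbackCount = (conversationData.fallbackCount || 0) + 1;
  return conversationData.fallbackCount < MAX_FALLBACKS ? 'reprompt' : 'close';
}

const data = {};
console.log(trackFallback(data, FALLBACK_INTENT)); // prints: reprompt
console.log(trackFallback(data, FALLBACK_INTENT)); // prints: close
```

With MAX_FALLBACKS set to 2, this matches the example's behavior: one reprompt, then the Action ends on the second consecutive fallback.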


Using contexts

Use contexts if you want Dialogflow to trigger fallback intents only in certain situations. This is helpful if you want to have different fallback intents for different no-match scenarios.

- If you don't set contexts on a fallback intent, it's considered to be a global fallback intent that Dialogflow triggers when no other intent is matched. If you choose to use one, define only one global fallback intent.

- If you set input contexts on a fallback intent, Dialogflow triggers this fallback intent when the following are true:

  - The user's current contexts are a superset of the contexts defined in the intent.

  - No other intent matches.

This lets you use multiple fallback intents with different input contexts to customize no-match reprompting to specific scenarios.

If you set an output context on a fallback intent, you keep the user in the same context after the fallback intent is triggered and processed.
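The input-context rule can be sketched as a selection function. This illustrates the rule as stated, not Dialogflow's actual implementation: a context-scoped fallback qualifies when all of its input contexts are active, and a global fallback (no input contexts) always qualifies. Preferring the candidate with the most input contexts when several qualify is an assumption made for this sketch, and the intent and context names are hypothetical.

```javascript
// Sketch of the fallback-selection rule (not Dialogflow's implementation).
// Preferring the most specific match is an assumption.
function selectFallback(fallbackIntents, activeContexts) {
  const active = new Set(activeContexts);
  // A fallback qualifies when the active contexts are a superset of its
  // input contexts; a global fallback (no input contexts) always qualifies.
  const candidates = fallbackIntents.filter((intent) =>
    intent.inputContexts.every((ctx) => active.has(ctx)));
  // Prefer the candidate with the most input contexts.
  candidates.sort((a, b) => b.inputContexts.length - a.inputContexts.length);
  return candidates.length > 0 ? candidates[0].name : null;
}

// Hypothetical fallback intents for a number-guessing Action.
const fallbacks = [
  {name: 'Default Fallback Intent', inputContexts: []},
  {name: 'Guess Fallback', inputContexts: ['guessing']},
  {name: 'Done Yes/No Fallback', inputContexts: ['done_yes_no_context']},
];

console.log(selectFallback(fallbacks, ['guessing'])); // prints: Guess Fallback
console.log(selectFallback(fallbacks, [])); // prints: Default Fallback Intent
```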

See Dialogflow Contexts for more information.

No-input reprompting

See the Reprompts page for details on how to handle cases where the user doesn't provide further input on a voice device, like a Google Home, that requires continued interaction.