Quickstart

This guide walks through the end-to-end workflow for evaluating an AI system in Stax, giving you the data to determine which AI models or prompts work best for your use case.

0. Add an API Key

To generate model outputs and run LLM-based evaluators, you'll need to add an API key. We recommend starting with a Gemini API key, as our evaluators use it by default, but you can configure them to use other models.

You can add your first API key on the onboarding screen. To add more keys later, go to Settings.

1. Create an Evaluation Project

Start by clicking Add Project to create a new evaluation project. Each project should correspond to a single evaluation, for example testing a new system prompt or comparing two models. The project houses the dataset, model outputs, and evaluation results for that specific experiment.

Choose a Single Model project to evaluate the output of a single model or system instruction, or a Side-by-Side project to directly compare two different AI systems.

2. Build Your Dataset

A robust evaluation starts with data that accurately reflects your real-world use cases. You have two primary methods for building a dataset:

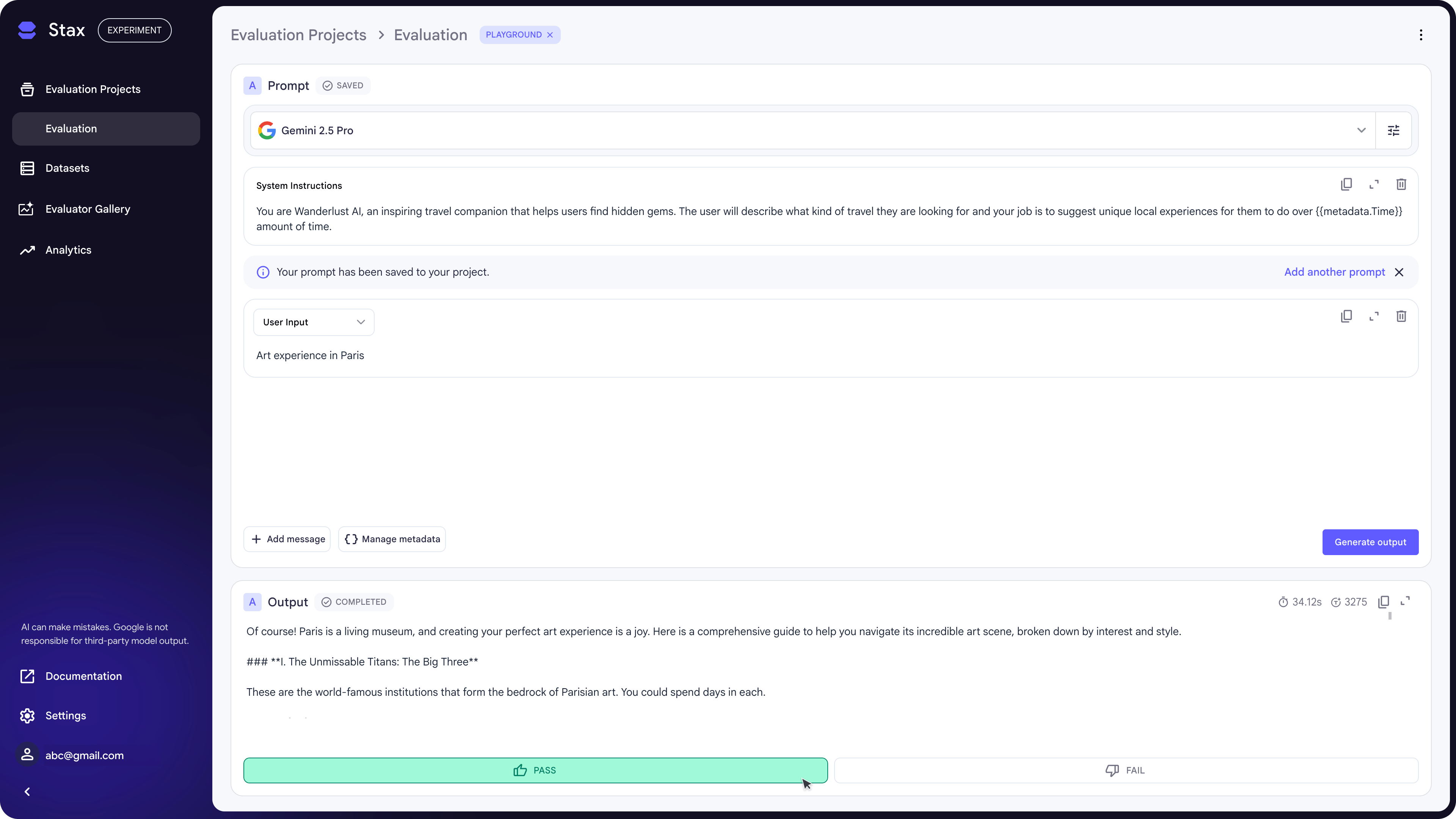

Option A: Add Data Manually in the Prompt Playground

If you don't have an existing dataset, you can build one from scratch in the playground. Start by adding the kinds of prompts you expect your users to try.

- Select Model(s): Choose the model(s) you want to test from any of the available providers or connect to your own custom models.

- Set the System Prompt (Optional): Instruct the model on its role, persona, or desired behavior (e.g., "act as a helpful customer support agent").

- Add User Inputs: Enter sample user prompts to test the AI's responses.

- Provide Human Ratings (Optional): As you test, you can provide a manual score on the quality of the output. Each input, output, and rating is automatically saved as a new test case in your project.

Option B: Upload an Existing Dataset

If you have existing production data, you can upload it directly in CSV format from Add Data > Import Dataset. If your dataset doesn't include model outputs, click Generate Outputs and choose a model to generate them with.
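If your production data lives in logs rather than a spreadsheet, a short script can convert it to CSV before you import it. The sketch below is illustrative only: the column names `input` and `output`, the sample rows, and the helper names `to_csv` and `save_dataset` are assumptions, not Stax's documented schema, so check the Import Dataset dialog for the headers it expects.

```python
import csv
import io

# Hypothetical rows; replace these with your own production prompts.
# The "output" column is left empty so outputs can be generated after import.
rows = [
    {"input": "How do I reset my password?", "output": ""},
    {"input": "My order, placed Monday, has not arrived.", "output": ""},
]


def to_csv(rows, fieldnames=("input", "output")):
    """Serialize rows to CSV text; fields containing commas or quotes are quoted."""
    buffer = io.StringIO(newline="")
    writer = csv.DictWriter(buffer, fieldnames=list(fieldnames))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def save_dataset(path, rows):
    """Write the CSV text to disk without altering its line endings."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        f.write(to_csv(rows))


csv_text = to_csv(rows)
```

Calling `save_dataset("dataset.csv", rows)` writes a file you can then select in the import dialog. Using the `csv` module rather than joining strings by hand keeps prompts that contain commas or quotation marks intact.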

See our guide to building effective datasets.

3. Evaluate AI Outputs

With your outputs generated, the next step is to evaluate their quality. You can do this using:

- Manual Human Evaluation: Provide human ratings on individual outputs in the prompt playground or directly on the project benchmark.

- Automated Evaluation: To score many outputs at once, click "Evaluate" and choose an LLM-based evaluator. The pre-loaded evaluators are a good starting point, but building custom evaluators is the best way to measure the specific criteria you care about.

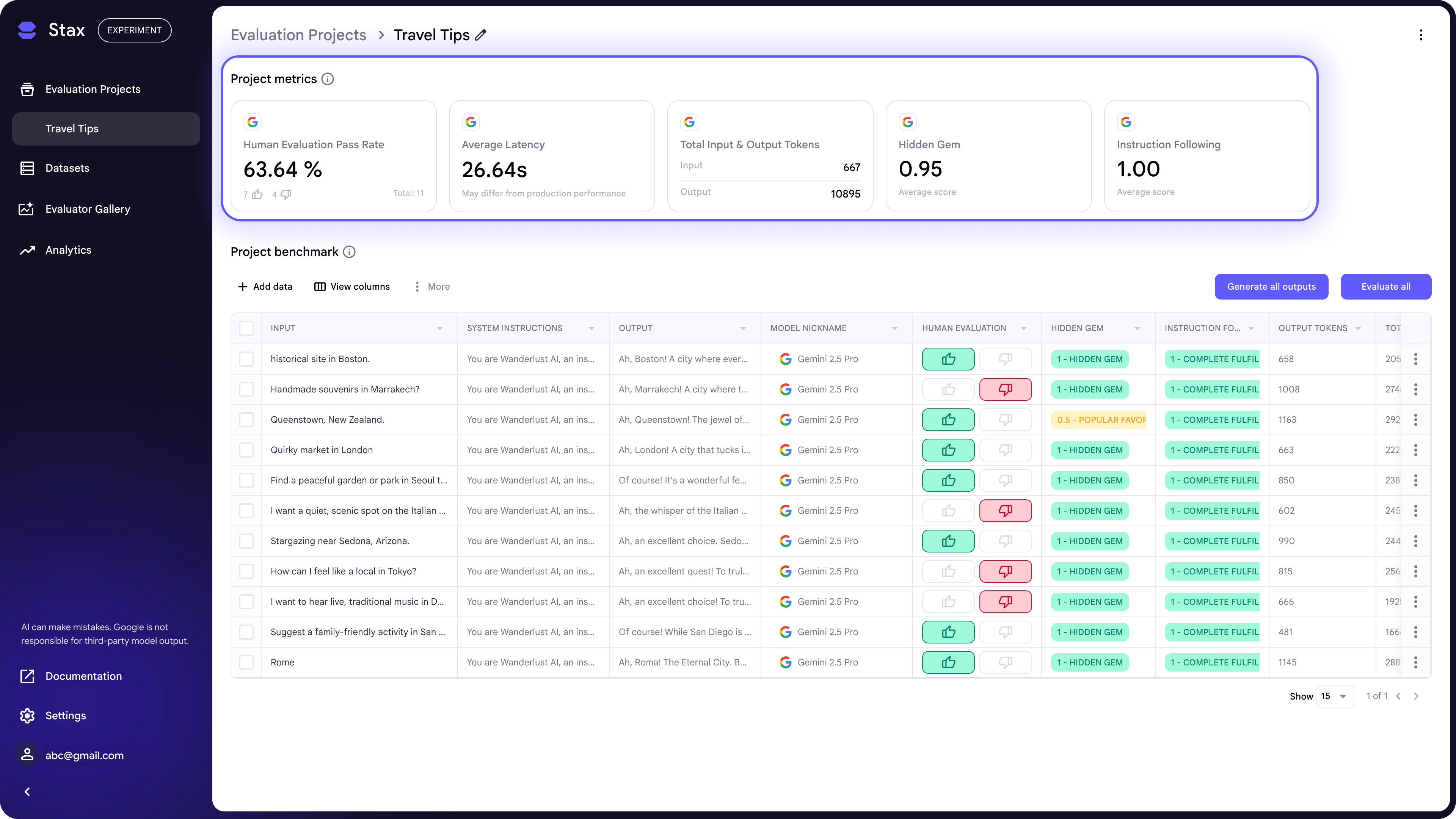
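To make the idea of a custom LLM-based evaluator more concrete, here is a minimal conceptual sketch of its two halves: a grading prompt built from a rubric, and a parser that extracts a numeric score from the judge model's reply. This is not Stax's implementation or configuration format. The rubric wording, the 1 to 5 scale, and the names `RUBRIC`, `build_grading_prompt`, and `parse_score` are all assumptions made for illustration.

```python
import re

# Hypothetical rubric template; the criterion and scale are illustrative.
RUBRIC = """You are grading a customer support reply.
Score it from 1 (poor) to 5 (excellent) on: {criterion}.

User input:
{user_input}

Model output:
{model_output}

Reply with a line of the form "Score: <number>" followed by a one-sentence reason."""


def build_grading_prompt(criterion, user_input, model_output):
    """Fill the rubric template for one test case."""
    return RUBRIC.format(
        criterion=criterion,
        user_input=user_input,
        model_output=model_output,
    )


def parse_score(judge_reply, low=1, high=5):
    """Return the integer score from the judge's reply, or None if it is missing or out of range."""
    match = re.search(r"Score:\s*(\d+)", judge_reply)
    if match is None:
        return None
    score = int(match.group(1))
    return score if low <= score <= high else None
```

Returning `None` for a malformed or out-of-range reply, rather than guessing, keeps bad judge responses from silently skewing the averages.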

4. Interpret the Results

The goal is to use your evaluation data to decide which AI iteration is most effective for your product. The Project Metrics section provides an aggregated view of human ratings, average evaluator scores, and inference latency. Use this quantitative data to compare iterations and decide which model or system prompt better fits your product's requirements for speed versus quality.
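As a rough illustration of the kind of aggregation this involves, the sketch below averages evaluator scores and latency for two iterations. The numbers are invented for the example, and the field names `evaluator_score` and `latency_ms`, the iteration names, and the `summarize` helper are assumptions rather than anything Stax exports.

```python
from statistics import mean

# Invented per-test-case results for two hypothetical iterations.
results = {
    "prompt_v1": [
        {"evaluator_score": 4.0, "latency_ms": 820},
        {"evaluator_score": 3.0, "latency_ms": 760},
    ],
    "prompt_v2": [
        {"evaluator_score": 5.0, "latency_ms": 1310},
        {"evaluator_score": 4.0, "latency_ms": 1190},
    ],
}


def summarize(cases):
    """Average the evaluator score and latency over a list of test cases."""
    return {
        "avg_score": mean(c["evaluator_score"] for c in cases),
        "avg_latency_ms": mean(c["latency_ms"] for c in cases),
    }


summary = {name: summarize(cases) for name, cases in results.items()}

for name, stats in summary.items():
    print(f"{name}: score={stats['avg_score']:.2f}, latency={stats['avg_latency_ms']:.0f} ms")
```

In this made-up data the second iteration scores higher but responds more slowly, which is the speed-versus-quality tradeoff the aggregated view is meant to surface.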

If you want to learn more about Stax's advanced features, dive into our best practices.