Make the world your canvas

Create world-scale immersive experiences in over 100 countries using the largest cross-device augmented reality platform. ARCore enables you to seamlessly blend the physical and digital worlds using easy-to-integrate workflows and our learned understanding of the world through Google Maps.

ARCore

Features

ARCore fundamentals

- Motion tracking, which tracks the device's position relative to the world

- Anchors, which ensure tracking of an object’s position over time

- Environmental understanding, which detects the size and location of all types of surfaces

- Depth understanding, which measures the distance from a given point to the surfaces in the scene

- Light estimation, which provides information about the average intensity and color correction of the environment
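
To make the light estimation bullet concrete, here is a minimal sketch of what "average intensity and color correction" can mean for a single camera image. It is not ARCore's algorithm or API: it swaps in a simple gray-world heuristic, and the function name `estimate_light` and the pixel format (RGB tuples with channels in the range 0.0 to 1.0) are assumptions made for illustration.

```python
from statistics import fmean


def estimate_light(pixels):
    """Illustrative only: a gray-world estimate, not ARCore's implementation.

    pixels: non-empty list of (r, g, b) tuples, channels in [0.0, 1.0].
    Returns (average_intensity, (r_scale, g_scale, b_scale)).
    """
    if not pixels:
        raise ValueError("need at least one pixel")

    # Average each color channel over the whole image.
    avg_r = fmean(p[0] for p in pixels)
    avg_g = fmean(p[1] for p in pixels)
    avg_b = fmean(p[2] for p in pixels)

    # Average intensity: the mean of the three channel averages.
    intensity = (avg_r + avg_g + avg_b) / 3.0

    # Gray-world assumption: the scene should average out to neutral gray,
    # so each channel is scaled toward the overall intensity.
    def scale(channel_avg):
        return intensity / channel_avg if channel_avg > 0 else 1.0

    return intensity, (scale(avg_r), scale(avg_g), scale(avg_b))
```

A renderer could multiply a virtual object's colors by the three scale factors, and its brightness by the intensity, so the object picks up the same tint and brightness as the camera image.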

Geospatial API

Scene Semantics

Recording and Playback API

Depth API

Persistent Cloud Anchors

Streetscape Geometry

Featured Partners

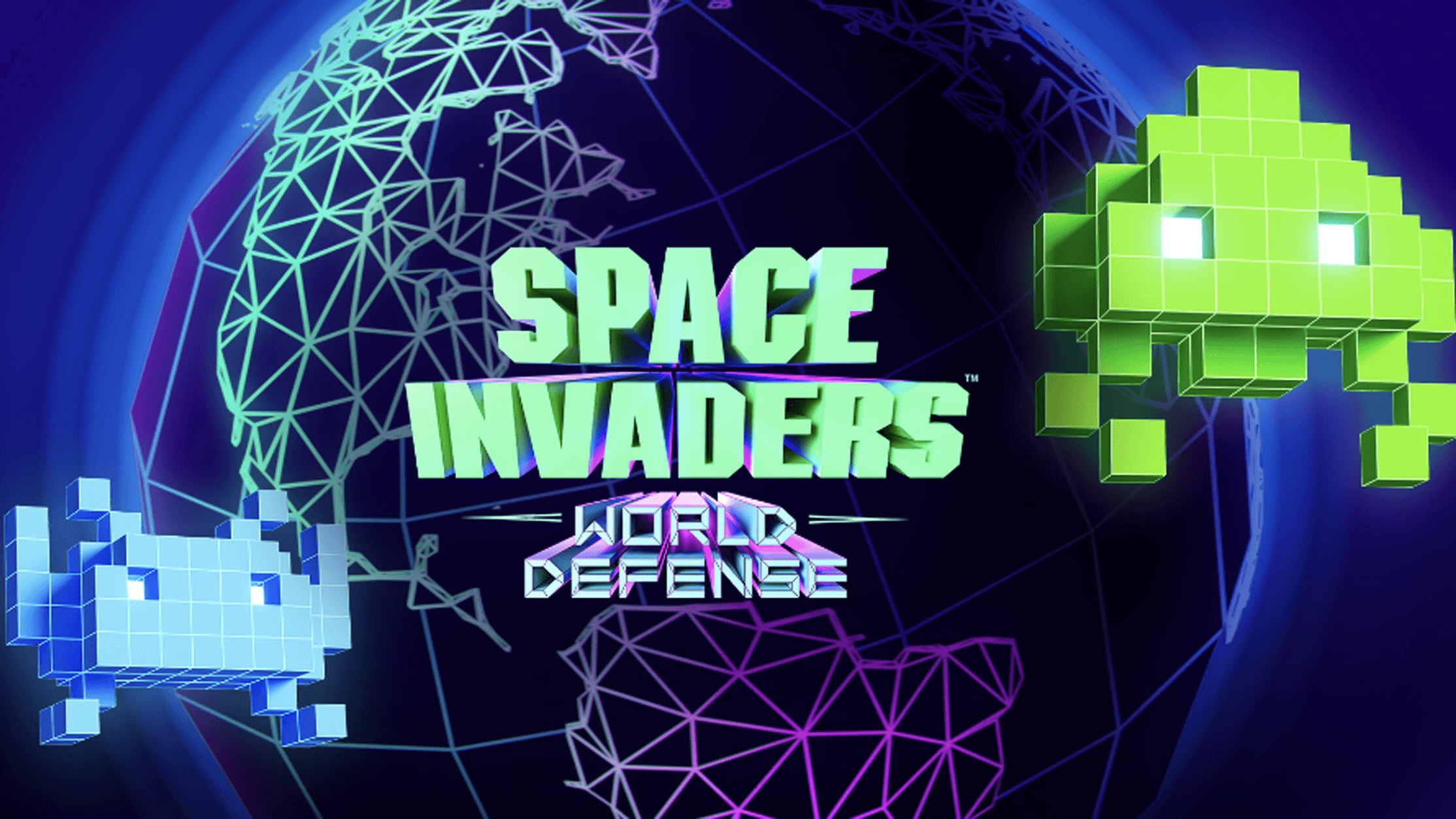

TAITO turns the world into a playground with an immersive SPACE INVADERS AR game

Scavengar highlights women pioneers through immersive monuments

Gap and Mattel transform the Times Square Gap Store into a Barbie experience

Our Community

“The whole team was incredibly enthusiastic about trying the Geospatial API. We [had] the idea for a world-scale alien invasion game for more than 3 years and we were waiting for the right tech to become available to finally start making it a reality… Augmented Reality. And when we got Geospatial and started checking it, the results exceeded our wildest expectations greatly!”

Featured Hackathon Submissions

World Ensemble

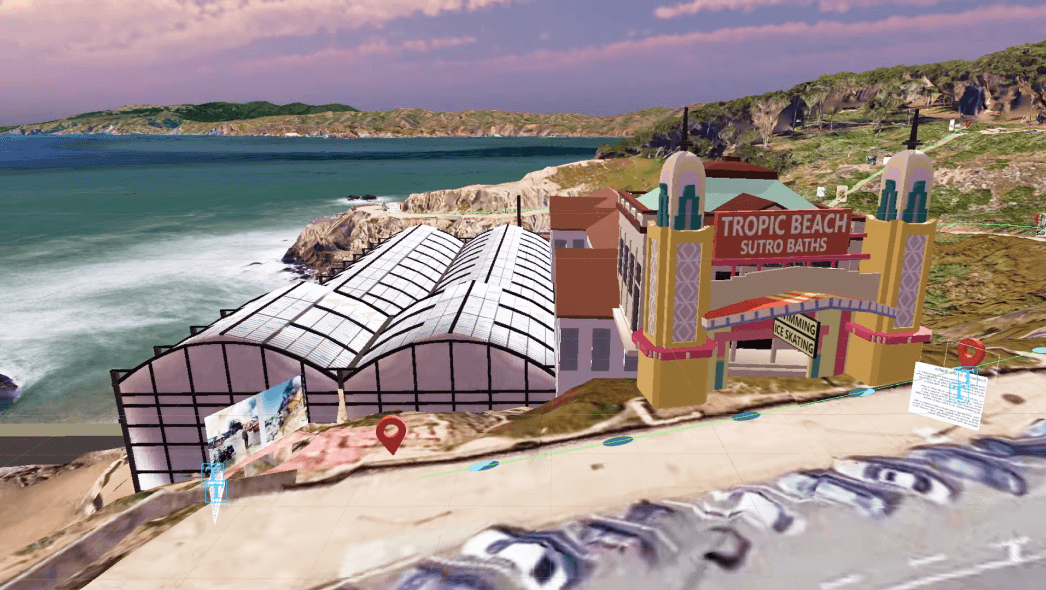

Sutro Baths AR Tour

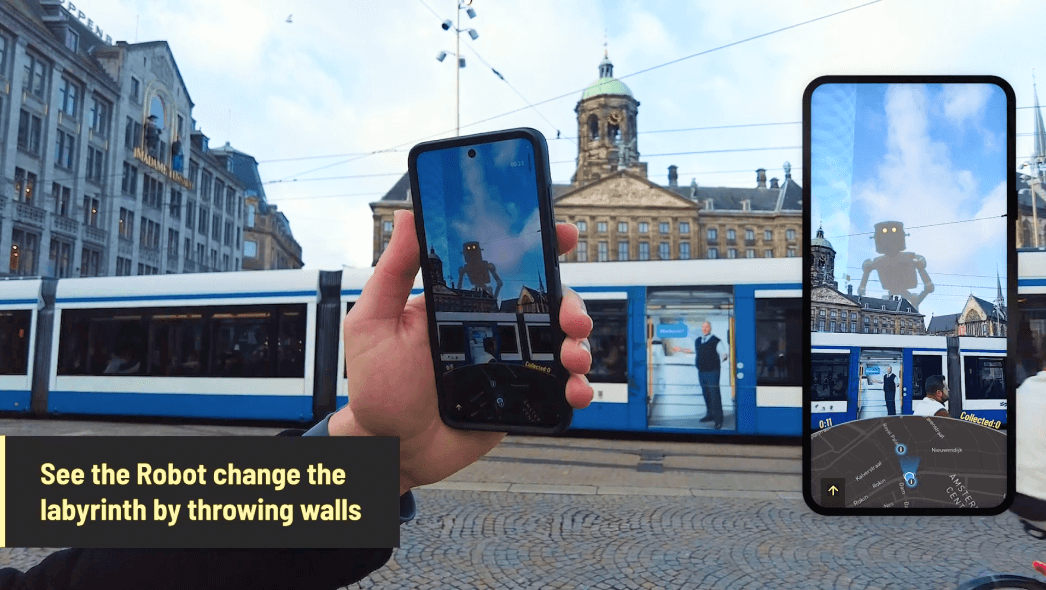

GEOMAZE - The Urban Quest

Simmy

Congratulations to the winners of Google’s Immersive Geospatial Challenge

Check out the winners from our latest hackathon featuring submissions using Geospatial Creator in five different categories, including Entertainment & Events and Commerce.

Congratulations to the winners of the ARCore Geospatial API Challenge

Check out the winners from our previous hackathon featuring submissions using the ARCore Geospatial API in five different categories, including Gaming and Navigation.