此词汇表定义了人工智能术语。

A

消融

一种用于评估特征或组件重要性的技术,方法是将相应特征或组件暂时从模型中移除。然后,您可以在不使用该特征或组件的情况下重新训练模型,如果重新训练后的模型性能明显下降,则表明移除的特征或组件可能很重要。

例如,假设您使用 10 个特征训练了一个分类模型,并在测试集上获得了 88% 的精确率。如需检查第一个特征的重要性,您可以仅使用其他 9 个特征重新训练模型。如果重新训练后的模型性能明显下降(例如,精确率为 55%),则表明移除的特征可能很重要。反之,如果重新训练后的模型表现同样出色,则表明该特征可能并不那么重要。

消融还可以帮助确定以下各项的重要性:

- 较大的组件,例如大型机器学习系统的整个子系统

- 流程或技术,例如数据预处理步骤

在这两种情况下,您都可以观察到在移除组件后,系统的性能会发生怎样的变化(或不发生变化)。

A/B 测试

一种比较两种(或更多种)技术(即 A 和 B)的统计方法。通常,A 是现有技术,而 B 是新技术。 A/B 测试不仅可以确定哪种技术的效果更好,还可以确定这种差异是否具有统计显著性。

A/B 测试通常会比较两种技术在单个指标上的表现;例如,两种技术在模型准确率方面的比较结果如何?不过,A/B 测试也可以比较任意有限数量的指标。

加速器条状标签

一类专门的硬件组件,旨在执行深度学习算法所需的主要计算。

与通用 CPU 相比,加速器芯片(简称加速器)可以显著提高训练和推理任务的速度和效率。它们非常适合训练神经网络和执行类似的计算密集型任务。

加速器芯片的示例包括:

- Google 的张量处理单元 (TPU),具有专用于深度学习的硬件。

- NVIDIA 的 GPU 虽然最初是为图形处理而设计的,但旨在实现并行处理,从而显著提高处理速度。

准确性

正确的分类预测数量除以预测总数。具体来说:

例如,如果某个模型做出了 40 次正确预测和 10 次错误预测,那么其准确率为:

二元分类为不同类别的正确预测和错误预测提供了具体名称。因此,二元分类的准确率公式如下:

其中:

如需了解详情,请参阅机器学习速成课程中的分类:准确率、召回率、精确率和相关指标。

action

在强化学习中,智能体在环境的状态之间转换的机制。智能体使用政策选择操作。

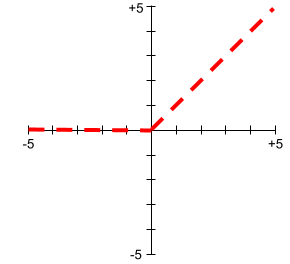

激活函数

一种使神经网络能够学习特征与标签之间非线性(复杂)关系的函数。

常用的激活函数包括:

激活函数的图从不是单条直线。 例如,ReLU 激活函数的图由两条直线组成:

Sigmoid 激活函数的图如下所示:

如需了解详情,请参阅机器学习速成课程中的神经网络:激活函数。

主动学习

一种训练方法,采用这种方法时,算法会选择从中学习规律的部分数据。当有标签样本稀缺或获取成本高昂时,主动学习尤其有用。主动学习算法会选择性地寻找学习所需的特定范围的样本,而不是盲目地寻找各种各样的有标签样本。

AdaGrad

一种先进的梯度下降法,用于重新调整每个形参的梯度,以便有效地为每个形参指定独立的学习速率。如需了解完整说明,请参阅用于在线学习和随机优化的自适应次梯度方法。

改编

与调优或微调的含义相同。

代理

能够对多模态用户输入进行推理,以便代表用户规划和执行操作的软件。

在强化学习中,智能体是使用策略来最大限度提高从环境的状态转换中获得的预期回报的实体。

代理型

agent 的形容词形式。智能体是指智能体所具备的特质(例如自主性)。

智能体工作流

一种动态过程,其中智能体自主规划和执行行动以实现目标。该过程可能涉及推理、调用外部工具和自行纠正方案。

凝聚式聚类

请参阅层次聚类。

AI 垃圾内容

生成式 AI 系统生成的输出,侧重于数量而非质量。例如,包含 AI 垃圾内容的网页充斥着低成本制作的 AI 生成的低质量内容。

异常值检测

识别离群点的过程。例如,如果某个特征的平均值为 100,标准差为 10,那么异常检测功能应将 200 的值标记为可疑。

AR

增强现实的缩写。

PR 曲线下的面积

请参阅 PR AUC(PR 曲线下面积)。

ROC 曲线下面积

请参阅 AUC(ROC 曲线下面积)。

人工通用智能

一种非人类机制,可展现广泛的问题解决能力、创造力和适应性。例如,展示通用人工智能的程序可以翻译文本、创作交响乐,并在尚未发明的游戏中表现出色。

人工智能

能够解决复杂任务的非人类程序或模型。 例如,翻译文本的程序或模型,以及根据放射影像识别疾病的程序或模型都展现出了人工智能。

从形式上讲,机器学习是人工智能的一个子领域。不过,近年来,一些组织开始交替使用人工智能和机器学习这两个术语。

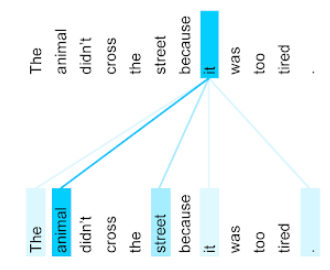

Attention

一种用于神经网络的机制,用于指示特定字词或部分字词的重要性。注意力机制可压缩模型预测下一个 token/字词所需的信息量。典型的注意力机制可能包含一组输入的加权和,其中每个输入的权重由神经网络的另一部分计算得出。

另请参阅 自注意力机制和多头自注意力机制,它们是 Transformer 的构建块。

如需详细了解自注意力机制,请参阅机器学习速成课程中的 LLM:什么是大语言模型?。

属性

与特征的含义相同。

在机器学习公平性方面,属性通常是指与个人相关的特征。

属性抽样

一种用于训练决策森林的策略,其中每个决策树在学习条件时仅考虑随机选择的可能特征子集。一般来说,每个节点都会对不同的特征子集进行抽样。相比之下,在训练不进行属性抽样的决策树时,系统会考虑每个节点的所有可能特征。

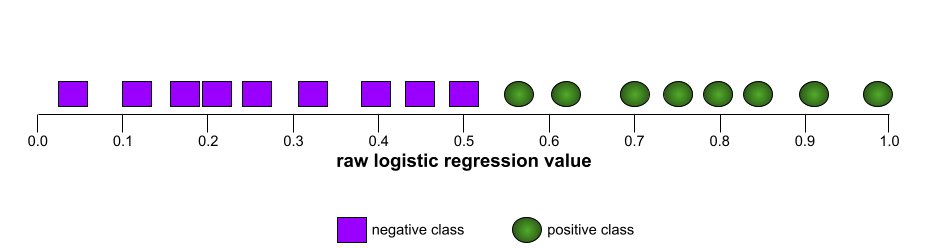

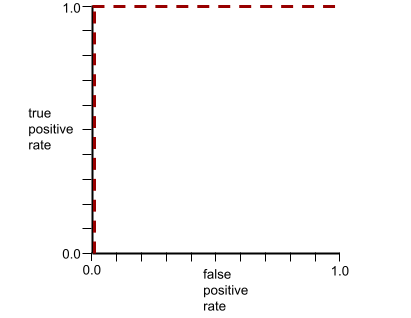

AUC(ROC 曲线下面积)

一个介于 0.0 和 1.0 之间的数字,表示二元分类模型区分正类别和负类别的能力。 AUC 越接近 1.0,模型区分不同类别的能力就越好。

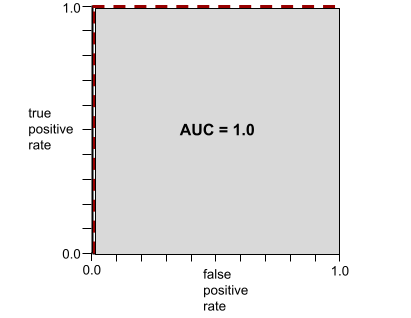

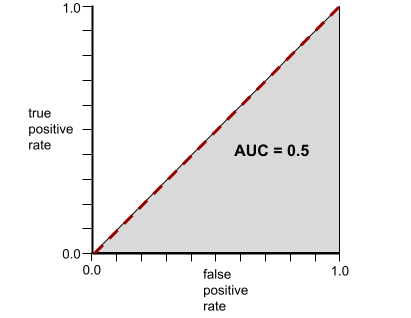

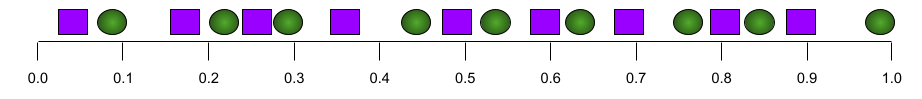

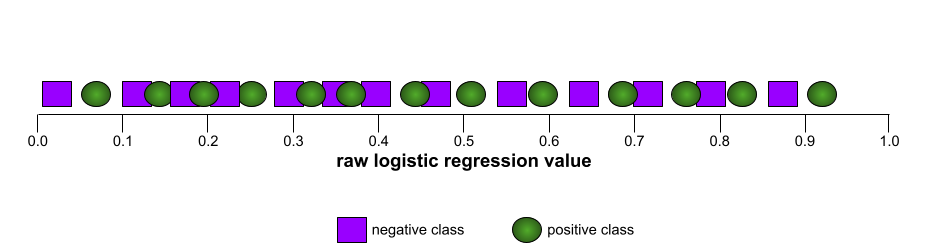

例如,下图显示了一个完美区分正类别(绿色椭圆)和负类别(紫色矩形)的分类模型。这个不切实际的完美模型的 AUC 为 1.0:

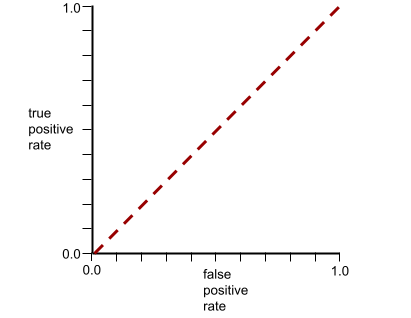

相反,下图显示了生成随机结果的分类模型的结果。此模型的 AUC 为 0.5:

是的,上述模型的 AUC 为 0.5,而不是 0.0。

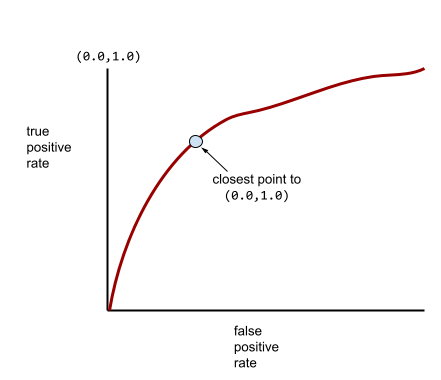

大多数模型都介于这两个极端之间。例如,以下模型在一定程度上区分了正例和负例,因此其 AUC 介于 0.5 和 1.0 之间:

AUC 会忽略您为分类阈值设置的任何值。相反,AUC 会考虑所有可能的分类阈值。

如需了解详情,请参阅机器学习速成课程中的分类:ROC 和 AUC。

增强现实

一种将计算机生成的图像叠加到用户看到的真实世界上的技术,从而提供合成视图。

自动编码器

一种可学习从输入中提取最重要信息的系统。自动编码器是编码器和解码器的组合。自动编码器依赖于以下两步流程:

- 编码器将输入映射到(通常)有损的低维(中间)格式。

- 解码器通过将低维格式映射到原始高维输入格式来构建原始输入的有损版本。

通过让解码器尝试尽可能准确地从编码器的中间格式重建原始输入,对自动编码器进行端到端训练。由于中间格式比原始格式小(维度更低),因此自动编码器必须学习输入中哪些信息是必不可少的,并且输出不会与输入完全相同。

例如:

- 如果输入数据是图形,则非精确复制的图形与原始图形类似,但会进行一些修改。可能非精确副本会去除原始图形中的噪声或填充一些缺失的像素。

- 如果输入数据是文本,自动编码器会生成模仿(但不等同于)原始文本的新文本。

另请参阅变分自编码器。

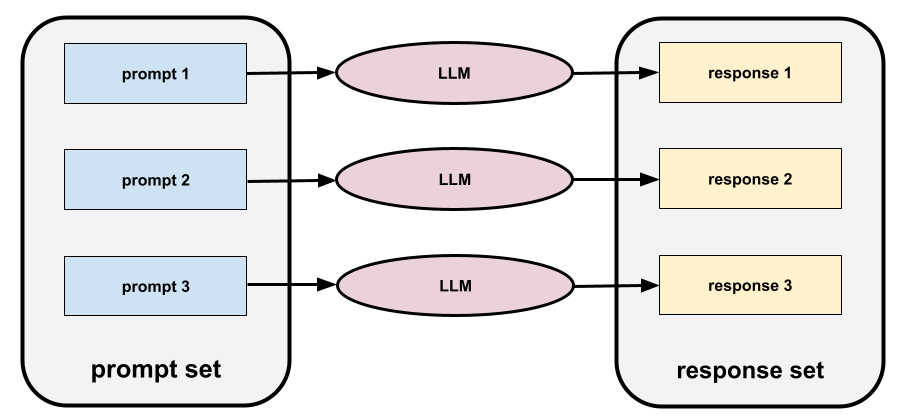

自动评估

使用软件来判断模型输出的质量。

如果模型输出相对简单,脚本或程序可以将模型输出与标准回答进行比较。这种类型的自动评估有时称为程序化评估。ROUGE 或 BLEU 等指标通常有助于进行程序化评估。

如果模型输出复杂或没有唯一正确的答案,则有时会由一个名为自动评分器的单独机器学习程序执行自动评估。

与人工评估相对。

自动化偏差

是指针对自动化决策系统所给出的建议的偏差,在此偏差范围内,即使系统出现错误,决策者也会优先考虑自动化决策系统给出的建议,而不是非自动化系统给出的建议。

如需了解详情,请参阅机器学习速成课程中的公平性:偏差类型。

AutoML

用于构建机器学习 模型的任何自动化流程。AutoML 可以自动执行以下任务:

AutoML 对数据科学家很有用,因为它可以节省他们开发机器学习流水线的时间和精力,并提高预测准确性。对于非专业人士,它也很有用,因为它可以让他们更轻松地完成复杂的机器学习任务。

如需了解详情,请参阅机器学习速成课程中的自动化机器学习 (AutoML)。

自动评估器评估

一种用于评判生成式 AI 模型输出质量的混合机制,它将人工评估与自动评估相结合。自动评估器是一种基于人工评估生成的数据训练的机器学习模型。理想情况下,自动评估器应学会模仿人类评估者。虽然有预建的自动评分器,但最好是专门针对您要评估的任务进行微调的自动评分器。

自回归模型

一种模型,可根据其自身的先前预测推断预测结果。例如,自回归语言模型会根据之前预测的 token 来预测下一个 token。所有基于 Transformer 的大语言模型都是自回归模型。

相比之下,基于 GAN 的图像模型通常不是自回归模型,因为它们通过一次前向传递生成图像,而不是以迭代方式逐步生成图像。不过,某些图片生成模型是自回归模型,因为它们会分步生成图片。

辅助损失

一种与神经网络 模型的主要损失函数结合使用的损失函数,有助于在权重随机初始化的早期迭代期间加速训练。

辅助损失函数会将有效梯度推送到较早的层。这有助于通过解决梯度消失问题,在训练期间实现收敛。

前 k 名的平均精确率

一种用于总结模型在生成排名结果(例如图书推荐的编号列表)的单个提示上的表现的指标。k 处的平均精确率是指每个相关结果的 k 处的精确率的平均值。 因此,前 k 个结果的平均精确率的公式为:

\[{\text{average precision at k}} = \frac{1}{n} \sum_{i=1}^n {\text{precision at k for each relevant item} } \]

其中:

- \(n\) 是列表中的相关商品数量。

与 k 处的召回率相对。

轴对齐条件

在决策树中,仅涉及单个特征的条件。例如,如果 area 是一个特征,则以下是轴对齐条件:

area > 200

与斜条件相对。

B

反向传播

训练神经网络需要多次迭代以下双向传递周期:

- 在前向传递期间,系统会处理一个包含 示例的批次,以生成预测结果。系统会将每个预测值与每个标签值进行比较。预测值与标签值之间的差值就是相应示例的损失。系统会汇总所有示例的损失,以计算当前批次的总损失。

- 在反向传递(反向传播)期间,系统会通过调整所有隐藏层中所有神经元的权重来减少损失。

神经网络通常包含多个隐藏层中的许多神经元。每个神经元以不同的方式影响总体损失。 反向传播会确定是增加还是减少应用于特定神经元的权重。

学习速率是一种乘数,用于控制每次向后传递时每个权重增加或减少的程度。与较小的学习速率相比,较大的学习速率会更大幅度地增加或减少每个权重。

从微积分的角度来看,反向传播实现了微积分中的链式法则。也就是说,反向传播会计算误差相对于每个形参的偏导数。

多年前,机器学习从业者必须编写代码才能实现反向传播。Keras 等现代机器学习 API 现在会为您实现反向传播。好,

如需了解详情,请参阅机器学习速成课程中的神经网络。

装袋

一种用于训练集成的方法,其中每个组成模型都基于有放回抽样的随机训练示例子集进行训练。例如,随机森林是使用 Bagging 训练的决策树的集合。

术语“bagging”是“bootstrap aggregating”(自助抽样集成)的简称。

如需了解详情,请参阅“决策森林”课程中的随机森林。

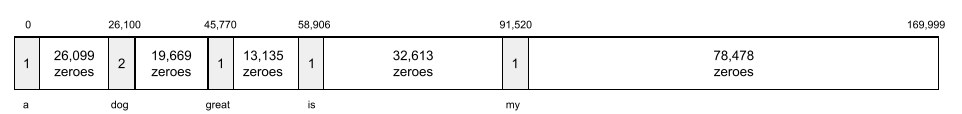

词袋

词组或段落中的字词的表示法,不考虑字词顺序。例如,以下三个词组的词袋完全一样:

- the dog jumps

- jumps the dog

- dog jumps the

每个字词都映射到稀疏向量中的一个索引,其中词汇表中的每个字词都在该向量中有一个索引。例如,词组“the dog jumps”会映射到一个特征向量,该特征向量在字词“the”“dog”和“jumps”对应的三个索引处包含非零值。非零值可以是以下任一值:

- 1,表示某个字词存在。

- 某个字词出现在词袋中的次数。例如,如果词组为“the maroon dog is a dog with maroon fur”,那么“maroon”和“dog”都会表示为 2,其他字词则表示为 1。

- 其他一些值,例如,某个字词出现在词袋中的次数的对数。

baseline

一种用作参考点的模型,用于比较另一模型(通常是更复杂的模型)的效果。例如,逻辑回归模型可以作为深度模型的良好基准。

对于特定问题,基准有助于模型开发者量化新模型必须达到的最低预期性能,以便新模型发挥作用。

基础模型

批处理

一次训练迭代中使用的示例集。批次大小决定了一个批次中的样本数量。

如需了解批次与周期之间的关系,请参阅周期。

如需了解详情,请参阅机器学习速成课程中的线性回归:超参数。

批量推理

对多个无标签样本(分为更小的子集 [“批次”])进行推理预测的过程。

批量推理可以利用加速器芯片的并行化功能。也就是说,多个加速器可以同时对不同批次的无标签示例进行推理预测,从而大幅提高每秒的推理次数。

如需了解详情,请参阅机器学习速成课程中的生产环境中的机器学习系统:静态推理与动态推理。

批次归一化

对隐藏层中激活函数的输入或输出进行归一化。批次归一化具有下列优势:

批次大小

一个批次中的样本数量。 例如,如果批次大小为 100,则模型在每次迭代中处理 100 个样本。

以下是常用的批次大小策略:

- 随机梯度下降法 (SGD),其中批次大小为 1。

- 完整批次,其中批次大小为整个训练集中的样本数量。例如,如果训练集包含 100 万个样本,则批次大小为 100 万个样本。完整批次通常是一种低效的策略。

- 小批次,其中批次大小通常介于 10 到 1000 之间。小批次通常是最有效的策略。

请参阅以下内容了解详细信息:

- 生产环境机器学习系统:静态推理与动态推理(机器学习速成课程)。

- 《深度学习调优指南》。

贝叶斯神经网络

一种概率神经网络,用于解释权重和输出的不确定性。标准神经网络回归模型通常会预测标量值;例如,某个标准模型预测房价为 853,000。相比之下,贝叶斯神经网络会预测值的分布情况;例如,某个贝叶斯模型预测房价为 853000,其中标准偏差为 67200。

贝叶斯神经网络根据 贝叶斯定理计算权重和预测的不确定性。如果需要量化不确定性,例如,在与医药相关的模型中,则贝叶斯神经网络非常有用。贝叶斯神经网络还有助于防止过拟合。

贝叶斯优化

一种概率回归模型技术,通过使用贝叶斯学习技术量化不确定性,优化计算成本高昂的目标函数,而不是直接优化目标函数。由于贝叶斯优化本身非常耗费资源,因此通常用于优化参数数量较少的评估成本较高的任务,例如选择超参数。

贝尔曼方程

在强化学习中,最优 Q 函数满足以下等式:

\[Q(s, a) = r(s, a) + \gamma \mathbb{E}_{s'|s,a} \max_{a'} Q(s', a')\]

强化学习算法应用此恒等式,使用以下更新规则创建 Q-learning:

\[Q(s,a) \gets Q(s,a) + \alpha \left[r(s,a) + \gamma \displaystyle\max_{\substack{a_1}} Q(s',a') - Q(s,a) \right] \]

除了强化学习之外,贝尔曼方程还可应用于动态规划。请参阅 维基百科中有关贝尔曼方程的条目。

BERT(基于 Transformer 的双向编码器表示法)

一种用于文本表示的模型架构。经过训练的 BERT 模型可以作为大型模型的一部分,用于文本分类或其他机器学习任务。

BERT 具有以下特征:

- 使用 Transformer 架构,因此依赖于自注意力。

- 使用 Transformer 的编码器部分。编码器的任务是生成良好的文本表示,而不是执行分类等特定任务。

- 是否为双向。

- 使用遮盖进行无监督训练。

BERT 的变体包括:

如需简要了解 BERT,请参阅开源 BERT:最先进的自然语言处理预训练。

偏差(道德/公平性)

1. 对某些事物、人或群体有刻板印象、偏见或偏袒。这些偏差会影响数据的收集和解读、系统设计以及用户与系统的互动方式。此类偏差的形式包括:

2. 采样或报告过程中引入的系统性误差。 此类偏差的形式包括:

如需了解详情,请参阅机器学习速成课程中的公平性:偏差类型。

偏差(数学概念)或偏差项

距离原点的截距或偏移。偏差是机器学习模型中的一个形参,可用以下任一符号表示:

- b

- w0

例如,在下面的公式中,偏差为 b:

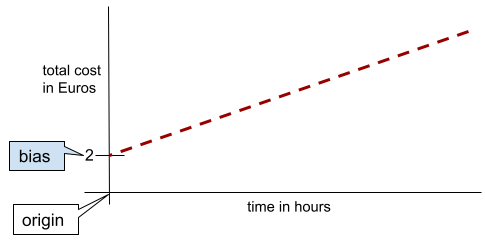

在简单的二维直线中,偏差只是指“y 轴截距”。 例如,下图中的直线的偏差为 2。

之所以存在偏差,是因为并非所有模型都从原点 (0,0) 开始。例如,假设某游乐园的门票为 2 欧元,客户每停留 1 小时需额外支付 0.5 欧元。因此,映射总费用的模型具有 2 的偏差,因为最低费用为 2 欧元。

如需了解详情,请参阅机器学习速成课程中的线性回归。

双向

一种用于描述评估目标文本部分之前和之后文本的系统的术语。相比之下,单向系统仅评估目标文本部分之前的文本。

例如,假设有一个遮盖式语言模型,它必须确定以下问题中带下划线的字词的概率:

你有什么_____?

单向语言模型必须仅根据“What”“is”和“the”这几个字词提供的上下文来确定概率。相比之下,双向语言模型还可以从“with”和“you”中获取上下文,这可能有助于模型生成更好的预测结果。

双向语言模型

一种语言模型,用于根据前文和后文确定给定 token 出现在文本摘录中给定位置的概率。

二元语法

一种 N 元语法,其中 N=2。

二元分类

一种分类任务,用于预测两个互斥的类别之一:

例如,以下两个机器学习模型都执行二元分类:

- 一种用于确定电子邮件是垃圾邮件(正类别)还是非垃圾邮件(负类别)的模型。

- 一种评估医疗症状以确定某人是否患有特定疾病(正类别)的模型。

与多类别分类相对。

如需了解详情,请参阅机器学习速成课程中的分类。

二元条件

在决策树中,条件只有两种可能的结果,通常是是或否。例如,以下是一个二元条件:

temperature >= 100

与非二元条件相对。

如需了解详情,请参阅决策森林课程中的条件类型。

分箱

与分桶的含义相同。

黑盒模型

一种模型,其“推理”过程难以理解或无法理解。也就是说,虽然人类可以看到提示如何影响回答,但人类无法确切地确定黑盒模型是如何确定回答的。换句话说,黑盒模型缺乏可解释性。

BLEU(双语替换评测)

一种介于 0.0 和 1.0 之间的指标,用于评估机器翻译的质量,例如从西班牙语到日语的翻译。

为了计算得分,BLEU 通常会将机器学习模型的翻译(生成的文本)与人类专家的翻译(参考文本)进行比较。生成文本和参考文本中 N 元语法的匹配程度决定了 BLEU 得分。

有关此指标的原始论文是《BLEU:一种用于自动评估机器翻译的方法》。

另请参阅 BLEURT。

BLEURT(基于 Transformer 的双语替换评测)

一种用于评估从一种语言到另一种语言(尤其是英语)的机器翻译的指标。

对于英语与另一种语言之间的翻译,BLEURT 与人工评分的契合度比 BLEU 更高。与 BLEU 不同,BLEURT 侧重于语义(含义)相似性,并且可以适应释义。

BLEURT 依赖于一个预训练的大语言模型(确切来说是 BERT),然后使用人工翻译人员提供的文本对该模型进行微调。

有关此指标的原始论文是 BLEURT: Learning Robust Metrics for Text Generation。

布尔值问题 (BoolQ)

用于评估 LLM 在回答是/否问题方面的熟练程度的数据集。 数据集中的每个挑战都包含三个组成部分:

- 查询

- 暗示查询答案的段落。

- 正确答案,即是或否。

例如:

- 问题:密歇根州有核电站吗?

- 原文:...three nuclear power plants supply Michigan with about 30% of its electricity.

- 正确答案:是

研究人员从匿名化的汇总 Google 搜索查询中收集问题,然后使用维基百科页面来确定信息。

如需了解详情,请参阅 BoolQ:探索自然语言是/否问题的惊人难度。

BoolQ 是 SuperGLUE 集成模型的一个组成部分。

BoolQ

布尔值问题的缩写。

增强学习

一种机器学习技术,以迭代方式将一组简单但不太准确的分类模型(也称为“弱分类器”)合成一个准确率高的分类模型(即“强分类器”),具体方法是对模型目前错误分类的样本进行权重上调。

如需了解详情,请参阅决策森林课程中的什么是梯度提升决策树?。

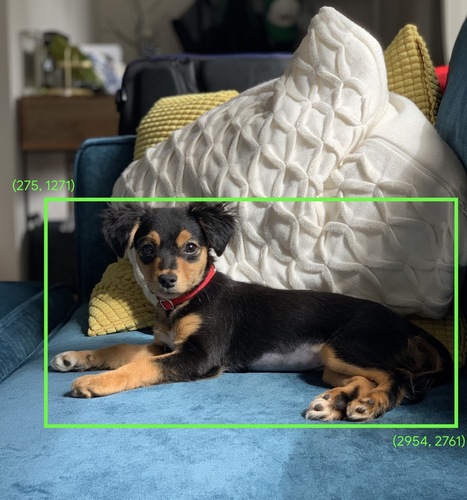

边界框

图片中感兴趣区域(例如下图中的狗)周围矩形的 (x, y) 坐标。

广播

将矩阵数学运算中某个运算数的形状扩展为与该运算兼容的维度。例如,线性代数要求矩阵加法运算中的两个运算数必须具有相同的维度。因此,您无法将形状为 (m, n) 的矩阵与长度为 n 的向量相加。为了使该运算有效,广播会在每列下复制相同的值,将长度为 n 的向量扩展成形状为 (m, n) 的矩阵。

如需了解详情,请参阅 NumPy 中的广播这篇文章的说明。

分桶

将单个特征转换为多个二元特征(称为桶或箱),通常根据值区间进行转换。截断的特征通常是连续特征。

例如,您可以将温度范围划分为离散的区间,而不是将温度表示为单个连续的浮点特征,例如:

- <= 10 摄氏度为“寒冷”区间。

- 11-24 摄氏度为“温带”区间。

- >= 25 摄氏度为“温暖”区间。

模型将以相同方式处理同一分桶中的每个值。例如,值 13 和 22 都位于温和型分桶中,因此模型会以相同的方式处理这两个值。

如需了解详情,请参阅机器学习速成课程中的数值数据:分箱。

C

校准层

一种预测后调整,通常是为了降低预测偏差的影响。调整后的预测和概率应与观察到的标签集的分布一致。

候选集生成

推荐系统选择的初始推荐集。例如,假设某家书店有 10 万本书。在候选集生成阶段,推荐系统会针对特定用户生成一个小得多的合适书籍列表,比如 500 本。但即使向用户推荐 500 本也太多了。推荐系统的后续阶段(例如评分和重排序)会进一步将这 500 本书列表缩小为一个小得多且更实用的推荐集。

如需了解详情,请参阅推荐系统课程中的候选生成概览。

候选采样

一种训练时进行的优化,会使用某种函数(例如 softmax)针对所有正类别标签计算概率,但仅随机抽取一部分负类别标签样本并计算概率。例如,如果某个样本的标签为“小猎犬”和“狗”,则候选采样将针对以下类别计算预测概率和相应的损失项:

- beagle

- dog

- 其余负类别的随机子集(例如,猫、棒棒糖、栅栏)。

这种方法的理念是,只要正类别始终得到适当的正增强,负类别就可以从不太频繁的负增强中学习,这确实符合实际观察情况。

与计算所有负类别的预测结果的训练算法相比,候选采样在计算方面更高效,尤其是在负类别的数量非常庞大时。

分类数据

特征,拥有一组特定的可能值。例如,假设有一个名为 traffic-light-state 的分类特征,该特征只能具有以下三个可能值之一:

redyellowgreen

通过将 traffic-light-state 表示为分类特征,模型可以了解 red、green 和 yellow 对驾驶员行为的不同影响。

分类特征有时称为离散特征。

与数值数据相对。

如需了解详情,请参阅机器学习速成课程中的处理分类数据。

因果语言模型

与单向语言模型的含义相同。

如需对比语言建模中的不同方向性方法,请参阅双向语言模型。

CB

CommitmentBank 的缩写。

形心

由 k-means 或 k-median 算法确定的聚类中心。例如,如果 k 为 3,则 k-means 或 k-median 算法会找出 3 个形心。

如需了解详情,请参阅聚类课程中的聚类算法。

形心聚类

一类聚类算法,用于将数据整理到非分层聚类中。k-means 是使用最广泛的基于形心的聚类算法。

与层次聚类算法相对。

如需了解详情,请参阅聚类课程中的聚类算法。

思维链提示

一种提示工程技术,可鼓励大语言模型 (LLM) 逐步说明其推理过程。例如,请考虑以下提示,并特别注意第二句话:

如果一辆汽车在 7 秒内从 0 加速到 60 英里/小时,驾驶员会感受到多少 g 的重力?在回答中,显示所有相关计算。

LLM 的回答可能会:

- 显示一系列物理公式,并在适当的位置代入值 0、60 和 7。

- 说明系统为何选择这些公式以及各种变量的含义。

思维链提示会强制 LLM 执行所有计算,这可能会得出更正确的答案。此外,通过思维链提示,用户可以检查 LLM 的步骤,以确定回答是否合理。

字符 N-gram F 得分 (ChrF)

用于评估机器翻译模型的指标。 字符 N 元语法 F 分数用于确定参考文本中的 N 元语法与机器学习模型生成的文本中的 N 元语法的重叠程度。

字符 N 元语法 F 分数与 ROUGE 和 BLEU 系列中的指标类似,但有以下区别:

- 字符 N 元语法 F 分数基于字符 N 元语法。

- ROUGE 和 BLEU 基于字词 N 元语法或标记进行操作。

聊天

与机器学习系统(通常是大语言模型)进行来回对话的内容。聊天中的上一次互动(您输入的内容以及大语言模型的回答)会成为聊天后续部分的上下文。

聊天机器人是大语言模型的一种应用。

检查点

用于捕获模型参数在训练期间或训练完成后的状态的数据。例如,在训练期间,您可以执行以下操作:

- 停止训练,可能是出于有意,也可能是由于某些错误。

- 捕获检查点。

- 稍后,重新加载检查点,可能是在不同的硬件上。

- 重新开始训练。

选择合理的替代方案 (COPA)

一个用于评估 LLM 在识别前提的两个备选答案中哪个更优方面的能力的数据集。数据集中的每个挑战都包含三个组成部分:

- 前提,通常是陈述后跟问题

- 前提中提出的问题的两种可能答案,其中一种正确,另一种不正确

- 正确答案

例如:

- 前提:该男子弄伤了脚趾。导致这种情况的原因是什么?

- 可能的回答:

- 他的袜子破了个洞。

- 他把锤子掉在了脚上。

- 正确答案:2

COPA 是 SuperGLUE 集成模型的一个组成部分。

类别

标签可以所属的类别。 例如:

分类模型可预测类别。 相比之下,回归模型预测的是数字,而不是类别。

如需了解详情,请参阅机器学习速成课程中的分类。

类别平衡的数据集

一个包含 分类 标签的数据集,其中每个类别的实例数量大致相等。例如,假设有一个植物学数据集,其二元标签可以是本地植物或非本地植物:

- 如果某个数据集包含 515 种本地植物和 485 种非本地植物,则该数据集属于类别平衡的数据集。

- 包含 875 种本地植物和 125 种非本地植物的数据集属于分类不平衡的数据集。

在类平衡数据集和类不平衡数据集之间没有明确的界限。只有当在高度类别不平衡的数据集上训练的模型无法收敛时,这种区别才变得重要。如需了解详情,请参阅机器学习速成课程中的数据集:不平衡的数据集。

分类模型

- 一个模型,用于预测输入句子的语言(法语?西班牙语? 意大利语?)。

- 一个模型,用于预测树种(枫树?橡树?猴面包树?)。

- 用于预测特定医疗状况是阳性还是阴性的模型。

相比之下,回归模型预测的是数字,而不是类别。

以下是两种常见的分类模型:

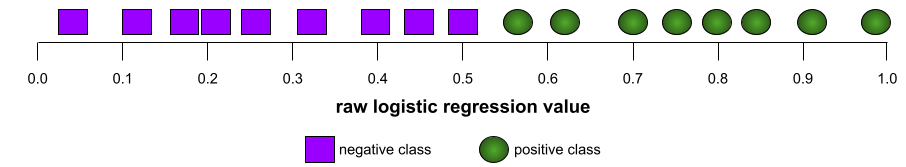

分类阈值

在二元分类中,一个介于 0 到 1 之间的数字,用于将逻辑回归模型的原始输出转换为正类别或负类别的预测结果。请注意,分类阈值是人为选择的值,而不是通过模型训练选择的值。

逻辑回归模型会输出一个介于 0 到 1 之间的原始值。然后,执行以下操作:

- 如果此原始值大于分类阈值,则预测为正类别。

- 如果此原始值小于分类阈值,则预测为负类。

例如,假设分类阈值为 0.8。如果原始值为 0.9,则模型预测为正类别。如果原始值为 0.7,则模型预测为负类别。

如需了解详情,请参阅机器学习速成课程中的阈值和混淆矩阵。

分类器

分类模型的非正式术语。

类别不平衡的数据集

一种分类的数据集,其中每个类的总标签数量差异很大。例如,假设有一个二元分类数据集,其两个标签的划分如下所示:

- 100 万个负值标签

- 10 个正值标签

负标签与正标签的比率为 100,000 比 1,因此这是一个分类不平衡的数据集。

相比之下,以下数据集是类别平衡的,因为负标签与正标签的比率相对接近 1:

- 517 个负值标签

- 483 个正值标签

多类别数据集也可能存在类别不平衡问题。例如,以下多类别分类数据集也存在类别不平衡问题,因为一个标签的示例数量远多于其他两个标签:

- 1,000,000 个标签,类别为“绿色”

- 200 个标签,类别为“紫色”

- 350 个带有“橙色”类别的标签

训练类别不平衡的数据集可能会带来特殊挑战。如需了解详情,请参阅机器学习速成课程中的不平衡的数据集。

裁剪

一种处理离群值的方法,通过执行以下一项或两项操作来实现:

- 将大于最大阈值的特征值减小到该最大阈值。

- 将小于最小阈值的特征值增加到该最小阈值。

例如,假设某个特定特征的值中只有不到 0.5% 不在 40-60 的范围内。在这种情况下,您可以执行以下操作:

- 将超过 60(最大阈值)的所有值裁剪到正好 60。

- 将小于 40(最低阈值)的所有值裁剪到正好 40。

离群值可能会损坏模型,有时会导致训练期间权重溢出。某些离群值也可能会严重影响准确率等指标。剪切是一种限制损坏的常用技术。

如需了解详情,请参阅机器学习速成课程中的数值数据:归一化。

Cloud TPU

一种专用硬件加速器,旨在加快 Google Cloud 上的机器学习工作负载的处理速度。

聚类

对相关的示例进行分组,尤其是在非监督式学习期间。在所有示例均分组完毕后,相关人员便可选择性地为每个聚类赋予含义。

聚类算法有很多。例如,k-means 算法会根据样本与形心的接近程度对样本进行聚类,如下图所示:

之后,研究人员便可查看这些聚类并进行其他操作,例如,将聚类 1 标记为“矮型树”,将聚类 2 标记为“全尺寸树”。

再举一个例子,例如基于样本与中心点距离的聚类算法,如下所示:

如需了解详情,请参阅聚类分析课程。

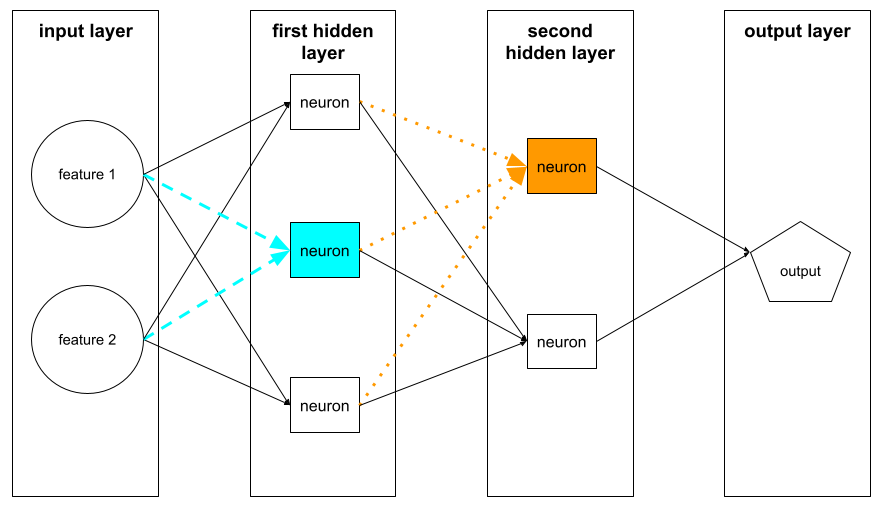

协同适应

一种不良行为,是指神经元几乎完全依赖其他特定神经元的输出(而不是依赖该网络的整体行为)来预测训练数据中的模式。如果验证数据中未呈现会导致协同适应的模式,则协同适应会导致过拟合。Dropout 正规化可减少协同适应,因为 dropout 可确保神经元不会完全依赖其他特定神经元。

协同过滤

根据许多其他用户的兴趣,对一位用户的兴趣做出预测。协同过滤通常用在推荐系统中。

如需了解详情,请参阅推荐系统课程中的协同过滤。

承诺银行 (CB)

一个用于评估 LLM 在确定段落作者是否相信该段落中的目标子句方面的熟练程度的数据集。数据集中的每个条目都包含:

- 一段文字

- 相应段落中的目标子句

- 一个布尔值,用于指示相应段落的作者是否认为目标子句

例如:

- 原文:听到阿耳特弥斯大笑,真是开心。她是个很严肃的孩子。 我不知道她还挺幽默的。

- 目标子句:她很有幽默感

- 布尔值:True,表示作者认为目标子句

CommitmentBank 是 SuperGLUE 集成模型的一个组成部分。

紧凑型模型

任何旨在在计算资源有限的小型设备上运行的小型模型。例如,紧凑型模型可以在手机、平板电脑或嵌入式系统上运行。

计算

(名词)模型或系统使用的计算资源,例如处理能力、内存和存储空间。

请参阅加速器芯片。

概念漂移

特征与标签之间的关系发生变化。 随着时间的推移,概念漂移会降低模型的质量。

在训练期间,模型会学习训练集中特征与其标签之间的关系。如果训练集中的标签是现实世界的良好代理,那么模型应该能够做出良好的现实世界预测。不过,由于概念漂移,模型的预测往往会随着时间的推移而退化。

例如,假设有一个二元分类模型,用于预测特定汽车型号是否“省油”。也就是说,这些特征可以是:

- 车辆重量

- 发动机压缩

- 传输类型

而标签为以下任一值:

- 省油

- 不省油

不过,“省油型汽车”的概念一直在变化。1994 年被标记为省油的汽车型号在 2024 年几乎肯定会被标记为不省油。出现概念漂移的模型往往会随着时间的推移,做出越来越无用的预测。

与非平稳性进行比较和对比。

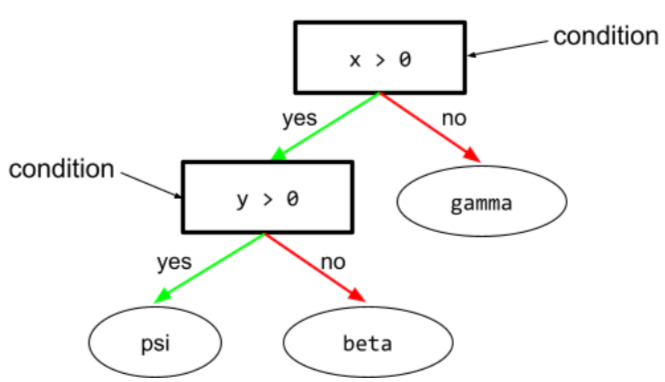

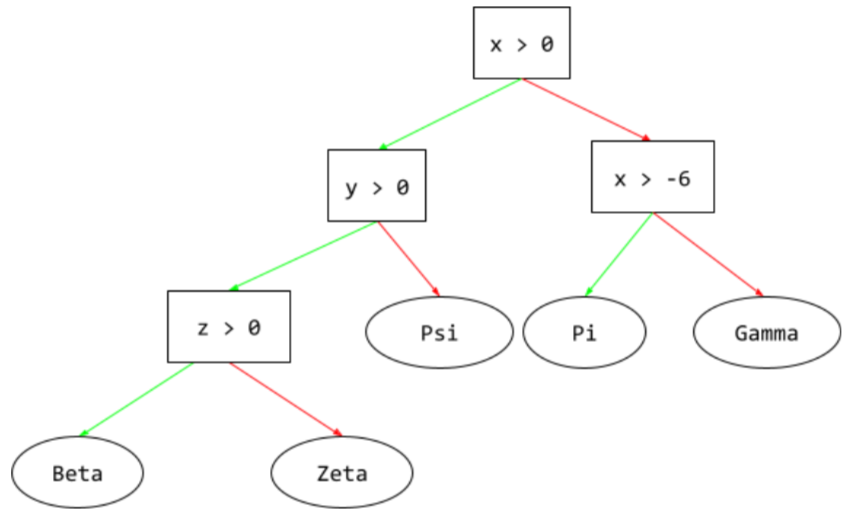

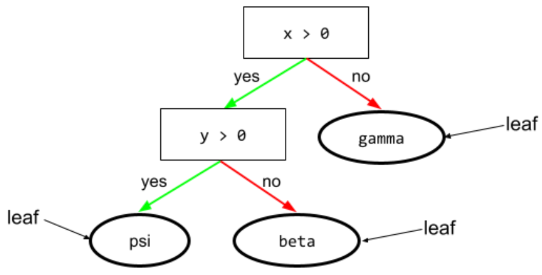

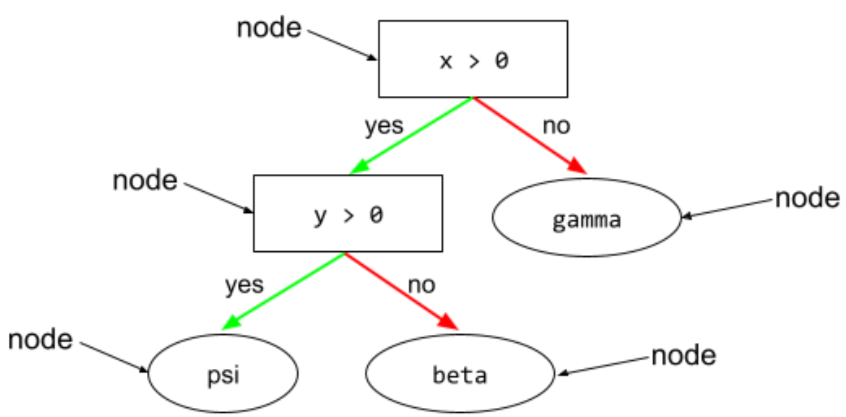

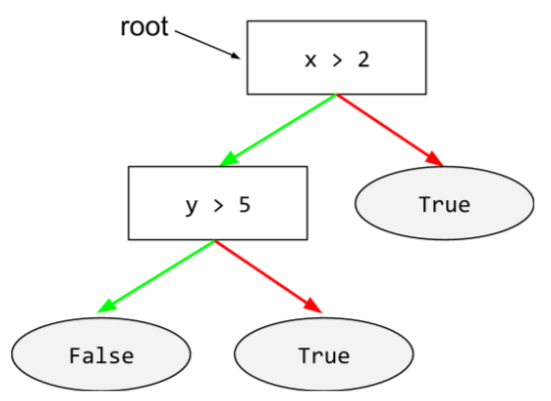

condition

在决策树中,执行测试的任何节点。例如,以下决策树包含两个条件:

条件也称为拆分或测试。

与叶的对比条件。

另请参阅:

如需了解详情,请参阅决策森林课程中的条件类型。

虚构

与幻觉的含义相同。

与“幻觉”相比,“虚构”可能是一个更准确的技术术语。 不过,幻觉一词先流行起来。

配置

分配用于训练模型的初始属性值的过程,包括:

在机器学习项目中,可以通过特殊的配置文件或使用以下配置库来完成配置:

确认偏差

一种以认可已有观念和假设的方式寻找、解读、支持和召回信息的倾向。 机器学习开发者可能会无意中以影响到支撑其现有观念的结果的方式收集或标记数据。确认偏差是一种隐性偏差。

实验者偏差是一种确认偏差,实验者会不断地训练模型,直到模型的预测结果能证实他们先前的假设为止。

混淆矩阵

一种 NxN 表格,用于总结分类模型做出的正确和错误预测的数量。例如,假设某个二元分类模型的混淆矩阵如下所示:

| 肿瘤(预测) | 非肿瘤(预测) | |

|---|---|---|

| 肿瘤(标准答案) | 18 (TP) | 1 (FN) |

| 非肿瘤(标准答案) | 6(FP) | 452(突尼斯) |

上述混淆矩阵显示了以下内容:

- 在 19 个标准答案为“肿瘤”的预测中,模型正确分类了 18 个,错误分类了 1 个。

- 在标准答案为“非肿瘤”的 458 次预测中,模型正确分类了 452 次,错误分类了 6 次。

多类别分类问题的混淆矩阵可帮助您发现错误模式。例如,假设有一个 3 类多类分类模型,用于对三种不同的鸢尾花类型(维吉尼亚鸢尾、变色鸢尾和山鸢尾)进行分类,那么该模型的混淆矩阵如下所示。当标准答案为 Virginica 时,混淆矩阵显示,模型更有可能错误地预测为 Versicolor,而不是 Setosa:

| Setosa(预测) | Versicolor(预测) | Virginica(预测) | |

|---|---|---|---|

| Setosa(标准答案) | 88 | 12 | 0 |

| Versicolor(标准答案) | 6 | 141 | 7 |

| Virginica(标准答案) | 2 | 27 | 109 |

再举一个例子,某个混淆矩阵可以揭示,经过训练以识别手写数字的模型往往会将 4 错误地预测为 9,或者将 7 错误地预测为 1。

混淆矩阵包含足够的信息来计算各种效果指标,包括精确率和召回率。

成分句法分析

将句子划分为更小的语法结构(“成分”)。 机器学习系统的后续部分(例如自然语言理解模型)可以比原始句子更轻松地解析这些成分。例如,请看以下句子:

我的朋友收养了两只猫。

成分句法分析器可以将此句子划分为以下两个成分:

- 我的朋友是一个名词短语。

- 领养了两只猫是一个动词短语。

这些成分可以进一步细分为更小的成分。 例如,动词短语

领养了两只猫

可进一步细分为:

- adopted 是一个动词。

- 两只猫是另一个名词短语。

情境化语言嵌入

一种嵌入,能够以流利的人类说话者的方式“理解”字词和短语。情境化语言嵌入可以理解复杂的语法、语义和上下文。

例如,考虑英文单词 cow 的嵌入。word2vec 等旧版嵌入可以表示英语单词,使得嵌入空间中从 cow 到 bull 的距离与从 ewe(母羊)到 ram(公羊)或从 female 到 male 的距离相似。情境化语言嵌入可以更进一步,识别出英语使用者有时会随意使用 cow 一词来表示母牛或公牛。

上下文窗口

模型可在给定提示中处理的 token 数量。上下文窗口越大,模型可用于提供连贯一致的回答的信息就越多。

连续特征

一种浮点特征,具有无限范围的可能值,例如温度或体重。

与离散特征相对。

便利抽样

使用未以科学方法收集的数据集,以便快速运行实验。之后,务必改为使用以科学方法收集的数据集。

收敛

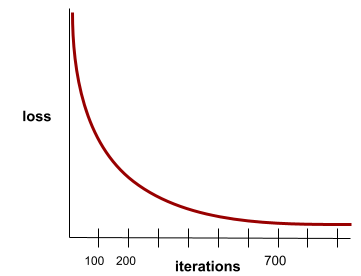

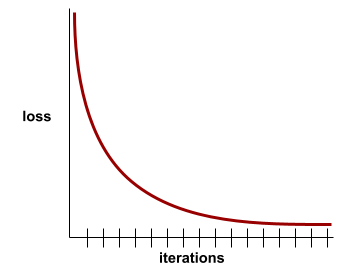

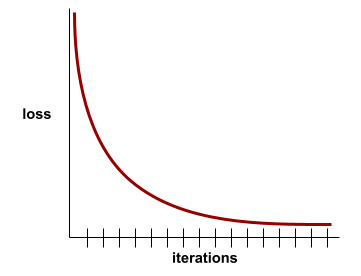

当损失值在每次迭代中的变化非常小或根本没有变化时,即达到收敛状态。例如,以下损失曲线表明模型在大约 700 次迭代时收敛:

如果继续训练无法改进模型,则表示模型已收敛。

在深度学习中,损失值有时会在许多次迭代中保持不变或几乎不变,然后才会最终下降。在损失值长期保持不变的情况下,您可能会暂时产生错误的收敛感。

另请参阅早停法。

如需了解详情,请参阅机器学习速成课程中的模型收敛和损失曲线。

对话式编码

您与生成式 AI 模型之间为创建软件而进行的迭代对话。您发出一个描述某软件的提示。然后,模型会使用该说明生成代码。然后,您发出新的提示,以解决之前提示或生成的代码中的缺陷,模型会生成更新后的代码。您和 AI 会不断来回沟通,直到生成的软件足够好为止。

对话编码本质上是氛围编码的原始含义。

与规范化编码相对。

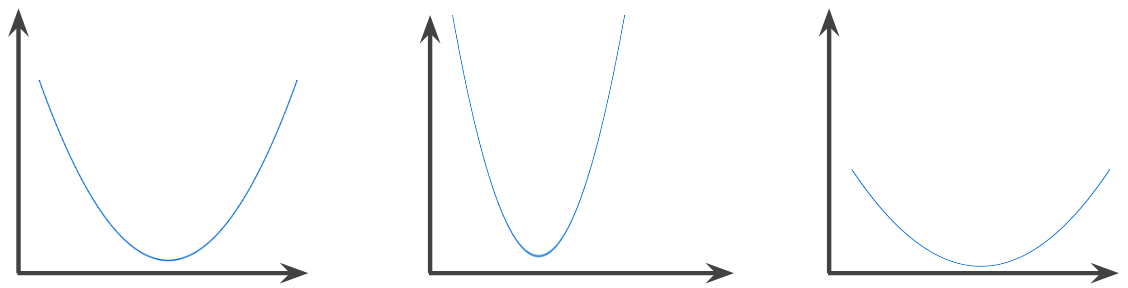

凸函数

一种函数,函数图像以上的区域为凸集。典型的凸函数形状类似于字母 U。例如,以下函数均为凸函数:

相比之下,以下函数不是凸函数。请注意,图表上方的区域不是凸集:

严格凸函数只有一个局部最小值点,该点也是全局最小值点。经典的 U 形函数是严格凸函数。不过,有些凸函数(例如直线)则不是 U 形函数。

如需了解详情,请参阅机器学习速成课程中的收敛和凸函数。

凸优化

使用梯度下降等数学技巧来寻找凸函数的最小值。机器学习方面的大量研究都是专注于如何通过公式将各种问题表示成凸优化问题,以及如何更高效地解决这些问题。

如需完整的详细信息,请参阅 Boyd 和 Vandenberghe 合著的 Convex Optimization(《凸优化》)。

凸集

欧氏空间的一个子集,该子集中任意两点之间的连线完全位于该子集中。例如,以下两种形状是凸集:

相比之下,以下两种形状不是凸集:

卷积

在数学中,简单来说,是指两个函数的混合。在机器学习中,卷积结合使用卷积过滤器和输入矩阵来训练权重。

如果没有卷积,机器学习算法就需要学习大张量中每个单元各自的权重。例如,如果机器学习算法在 2K x 2K 的图片上进行训练,则必须找到 400 万个单独的权重。而使用卷积,机器学习算法只需算出卷积过滤器中每个单元的权重,大大减少了训练模型所需的内存。应用卷积滤波器时,只需在各个细胞中复制该滤波器,使每个细胞都乘以该滤波器。

卷积滤波器

卷积运算中的两个参与方之一。(另一个 actor 是输入矩阵的一个切片。)卷积过滤器是一种矩阵,其秩与输入矩阵相同,但形状小一些。 例如,对于 28x28 的输入矩阵,滤波器可以是任何小于 28x28 的二维矩阵。

在照片处理中,卷积滤波器中的所有单元格通常都设置为由 1 和 0 组成的恒定模式。在机器学习中,卷积过滤器通常以随机数作为初始值,然后网络会训练理想值。

卷积层

深度神经网络的一个层,卷积过滤器会在其中传递输入矩阵。以下面的 3x3 卷积过滤器为例:

![一个 3x3 矩阵,包含以下值:[[0,1,0], [1,0,1], [0,1,0]]](https://developers.google.com/static/machine-learning/glossary/images/ConvolutionalFilter33.svg?hl=zh-cn)

以下动画展示了一个卷积层,其中包含 9 个涉及 5x5 输入矩阵的卷积运算。请注意,每个卷积运算都针对输入矩阵的不同 3x3 切片进行运算。生成的 3x3 矩阵(右侧)包含 9 次卷积运算的结果:

![动画:显示两个矩阵。第一个矩阵是 5x5 矩阵:[[128,97,53,201,198], [35,22,25,200,195],

[37,24,28,197,182], [33,28,92,195,179], [31,40,100,192,177]]。

第二个矩阵是 3x3 矩阵:

[[181,303,618], [115,338,605], [169,351,560]]。

第二个矩阵是通过对 5x5 矩阵的不同 3x3 子集应用卷积滤波器 [[0, 1, 0], [1, 0, 1], [0, 1, 0]] 计算得出的。](https://developers.google.com/static/machine-learning/glossary/images/AnimatedConvolution.gif?hl=zh-cn)

卷积神经网络

一种神经网络,其中至少有一层为卷积层。典型的卷积神经网络由以下层的某种组合构成:

卷积神经网络在某些类型的问题(例如图像识别)中取得了巨大成功。

卷积运算

如下所示的两步数学运算:

- 对卷积过滤器和输入矩阵切片执行元素级乘法。(输入矩阵切片与卷积过滤器具有相同的秩和大小。)

- 对生成的积矩阵中的所有值求和。

例如,考虑以下 5x5 输入矩阵:

![5x5 矩阵:[[128,97,53,201,198], [35,22,25,200,195],

[37,24,28,197,182], [33,28,92,195,179], [31,40,100,192,177]]。](https://developers.google.com/static/machine-learning/glossary/images/ConvolutionalLayerInputMatrix.svg?hl=zh-cn)

现在,假设有以下 2x2 卷积过滤器:

![2x2 矩阵:[[1, 0], [0, 1]]](https://developers.google.com/static/machine-learning/glossary/images/ConvolutionalLayerFilter.svg?hl=zh-cn)

每个卷积运算都涉及输入矩阵的单个 2x2 切片。例如,假设我们使用输入矩阵左上角的 2x2 切片。因此,此切片的卷积运算如下所示:

![将卷积滤波器 [[1, 0], [0, 1]] 应用于输入矩阵的左上角 2x2 部分,即 [[128,97], [35,22]]。

卷积滤波器会保留 128 和 22,但会将 97 和 35 归零。因此,卷积运算会产生值 150 (128+22)。](https://developers.google.com/static/machine-learning/glossary/images/ConvolutionalLayerOperation.svg?hl=zh-cn)

卷积层由一系列卷积运算组成,每个卷积运算都针对不同的输入矩阵切片。

COPA

选择合理替代方案的缩写。

费用

与损失的含义相同。

共同训练

一种半监督式学习方法,在满足以下所有条件时特别有用:

共同训练实际上是将独立信号放大为更强的信号。例如,假设有一个分类模型,用于将每辆二手车归类为好或坏。一组预测性特征可能侧重于汽车的年份、品牌和型号等汇总特征;另一组预测性特征可能侧重于前车主的驾驶记录和汽车的保养历史记录。

关于协同训练的开创性论文是 Blum 和 Mitchell 撰写的使用协同训练组合带标签和不带标签的数据。

反事实公平性

一种公平性指标,用于检查分类模型是否会针对以下两种个体生成相同的结果:一种个体与另一种个体完全相同,只是在一种或多种敏感属性方面有所不同。评估分类模型的反事实公平性是发现模型中潜在偏差来源的一种方法。

如需了解详情,请参阅以下任一内容:

- 公平性:反事实公平性,机器学习速成课程。

- 当世界相撞时:在公平性中整合不同的反事实假设

覆盖偏差

请参阅选择性偏差。

歧义

含义不明确的句子或词组。 歧义是自然语言理解的一个重大问题。 例如,标题“Red Tape Holds Up Skyscraper”存在歧义,因为 NLU 模型可能会从字面解读该标题,也可能会从象征角度进行解读。

评论者

与 Deep Q-Network 的含义相同。

交叉熵

将对数损失推广到多类别分类问题。交叉熵可以量化两种概率分布之间的差异。另请参阅困惑度。

交叉验证

一种机制,使用从训练集中保留的一个或多个不重叠的数据子集测试模型,以估计该模型泛化到新数据的效果。

累积分布函数 (CDF)

一种用于定义小于或等于目标值的样本频率的函数。例如,假设存在一个连续值的正态分布。CDF 会告诉您,大约 50% 的样本应小于或等于平均值,大约 84% 的样本应小于或等于平均值加一个标准差。

D

数据分析

根据样本、测量结果和可视化内容理解数据。数据分析在首次收到数据集时且构建第一个模型之前特别有用。此外,数据分析在理解实验和调试系统问题方面也至关重要。

数据增强

通过转换现有样本来创建其他样本,人为地增加训练样本的范围和数量。例如,假设图像是其中一个特征,但数据集包含的图像样本不足以供模型学习有用的关联。 理想情况下,您需要向数据集添加足够的有标签图像,才能使模型正常训练。如果不可行,则可以通过数据增强旋转、拉伸和翻转每张图像,以生成原始照片的多个变体,这样可能会生成足够的有标签数据来实现很好的训练效果。

DataFrame

一种热门的 pandas 数据类型,用于表示内存中的数据集。

DataFrame 类似于表格或电子表格。DataFrame 的每一列都有一个名称(标题),每一行都由一个唯一编号标识。

DataFrame 中的每一列都以二维数组的形式构建,但每一列都可以分配自己的数据类型。

另请参阅官方 pandas.DataFrame 参考页面。

数据并行处理

一种可扩展训练或推理的方式,可将整个模型复制到多个设备上,然后将输入数据的一个子集传递给每个设备。数据并行处理可支持针对非常大的批次大小进行训练和推理;不过,数据并行处理要求模型足够小,以适应所有设备。

数据并行处理通常可加快训练和推理速度。

另请参阅模型并行性。

Dataset API (tf.data)

一种高阶 TensorFlow API,用于读取数据并将其转换为机器学习算法所需的格式。tf.data.Dataset 对象表示一系列元素,其中每个元素都包含一个或多个张量。tf.data.Iterator 对象可用于访问 Dataset 的元素。

数据集(data set 或 dataset)

原始数据的集合,通常(但不一定)以以下格式之一进行整理:

- 电子表格

- 采用 CSV(逗号分隔值)格式的文件

决策边界

模型在二元分类或多类别分类问题中学习到的类别之间的分隔符。例如,在以下表示某个二元分类问题的图片中,决策边界是橙色类别和蓝色类别之间的分界线:

决策森林

一种由多个决策树创建的模型。决策森林通过汇总其决策树的预测结果来进行预测。常见的决策森林类型包括随机森林和梯度提升树。

如需了解详情,请参阅决策森林课程中的决策森林部分。

决策阈值

与分类阈值的含义相同。

决策树

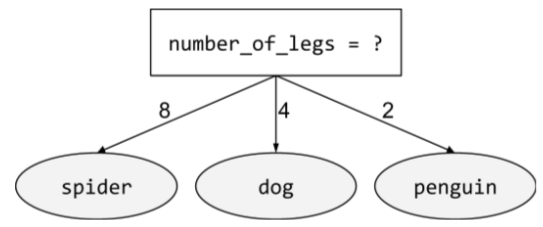

一种监督式学习模型,由一组按层次结构组织的条件和叶组成。例如,以下是一个决策树:

解码器

一般来说,任何将经过处理的密集型内部表示形式转换为更原始的稀疏型外部表示形式的机器学习系统。

解码器通常是较大模型的组成部分,并且经常与编码器配对使用。

在序列到序列任务中,解码器从编码器生成的内部状态开始预测下一个序列。

如需了解 Transformer 架构中解码器的定义,请参阅 Transformer。

如需了解详情,请参阅机器学习速成课程中的大型语言模型。

深度模型

深度模型也称为深度神经网络。

与宽度模型相对。

一种非常流行的深度神经网络

与深度模型的含义相同。

深度 Q 网络 (DQN)

在 Q-learning 中,深度神经网络会预测 Q 函数。

评价者是深度 Q 网络的同义词。

人口统计均等

一种公平性指标,如果模型分类的结果不依赖于给定的敏感属性,则满足该指标。

例如,如果小人国人和巨人国人都申请了 Glubbdubdrib 大学,那么如果录取的小人国人百分比与录取的大人国人百分比相同,则实现了人口统计学上的平等,无论一个群体是否比另一个群体平均而言更符合条件。

与均衡赔率和机会均等形成对比,后者允许分类结果总体上取决于敏感属性,但不允许某些指定标准答案标签的分类结果取决于敏感属性。如需查看直观图表,了解在优化人口统计学均等性时需要做出的权衡,请参阅“通过更智能的机器学习避免歧视”。

如需了解详情,请参阅机器学习速成课程中的公平性:人口统计学奇偶性。

降噪

一种常见的自监督式学习方法,其中:

去噪功能可实现从无标签示例中学习。 原始数据集用作目标或标签,而含噪数据用作输入。

部分掩码语言模型使用以下去噪方式:

- 通过屏蔽部分令牌,人为地向无标签句子添加噪声。

- 模型会尝试预测原始 token。

密集特征

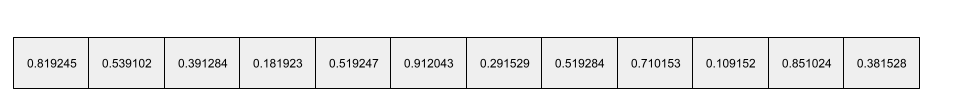

一种特征,其中大多数或所有值都不为零,通常是浮点值的 Tensor。例如,以下 10 元素张量是密集张量,因为其中 9 个值不为零:

| 8 | 3 | 7 | 5 | 2 | 4 | 0 | 4 | 9 | 6 |

与稀疏特征相对。

密集层

与全连接层的含义相同。

深度

神经网络中以下各项的总和:

例如,具有 5 个隐藏层和 1 个输出层的神经网络的深度为 6。

请注意,输入层不会影响深度。

深度可分离卷积神经网络 (sepCNN)

基于 Inception 的卷积神经网络架构,但其中的 Inception 模块被替换为深度可分离卷积。也称为 Xception。

深度可分离卷积(也简称为可分离卷积)将标准 3D 卷积分解为两个单独的卷积运算,这两个运算在计算上更高效:首先是深度为 1 (n ✕ n ✕ 1) 的深度卷积,然后是长度和宽度为 1 (1 ✕ 1 ✕ n) 的点状卷积。

如需了解详情,请参阅 Xception:使用深度可分离卷积的深度学习。

派生标签

与代理标签的含义相同。

设备

一个多含义术语,具有以下两种可能的定义:

- 一类可运行 TensorFlow 会话的硬件,包括 CPU、GPU 和 TPU。

- 在 加速器芯片(GPU 或 TPU)上训练机器学习模型时,实际操控张量和嵌入的系统部分。设备在加速器芯片上运行。相比之下,宿主通常在 CPU 上运行。

差分隐私

在机器学习中,一种用于保护模型训练集中包含的任何敏感数据(例如个人信息)免遭泄露的匿名化方法。这种方法可确保模型不会学习或记住有关特定个人的太多信息。为此,我们在模型训练期间进行抽样并添加噪声,以模糊单个数据点,从而降低泄露敏感训练数据的风险。

差分隐私也用于机器学习之外的领域。例如,数据科学家有时会在计算不同人口统计特征的产品使用情况统计信息时使用差分隐私来保护个人隐私。

降维

减少用于表示特征向量中特定特征的维度的数量,通常通过转换为嵌入向量来实现此操作。

维度

一个具有多重含义的术语,包括以下含义:

Tensor中的坐标级别数量。例如:

- 标量有零个维度,例如

["Hello"]。 - 向量有一个维度,例如

[3, 5, 7, 11]。 - 矩阵有两个维度,例如

[[2, 4, 18], [5, 7, 14]]。 您可以使用一个坐标唯一指定一维向量中的特定单元;您需要使用两个坐标唯一指定二维矩阵中的特定单元。

- 标量有零个维度,例如

特征向量中的条目数。

嵌入层中的元素数。

直接提示

与零样本提示的含义相同。

离散特征

一种特征,包含有限个可能值。例如,值可能仅为 animal、vegetable 或 mineral 的特征是离散(或分类)特征。

与连续特征相对。

判别模型

一种通过一个或多个特征组成的集合预测标签的模型。更正式地讲,判别模型会根据特征和权重定义输出的条件概率;即:

p(output | features, weights)

例如,如果一个模型要通过特征和权重预测某封电子邮件是否是垃圾邮件,那么该模型为判别模型。

绝大多数监督式学习模型(包括分类模型和回归模型)都是判别模型。

与生成模型相对。

判别器

一种确定示例是真实还是虚假的系统。

或者,生成对抗网络中的子系统,用于确定生成器创建的样本是真实的还是虚假的。

如需了解详情,请参阅 GAN 课程中的判别器。

不同影响

做出有关人员的决策,但这些决策对不同的人口子群组的影响不成比例。这通常是指算法决策过程对某些子群体的伤害或益处大于其他子群体的情况。

例如,假设某个算法用于确定小人国居民是否符合微型住宅贷款的申请条件,如果申请人的邮寄地址包含某个邮政编码,该算法更有可能将申请人归类为“不符合条件”。如果大端序小人国居民比小端序小人国居民更可能拥有此邮政编码的邮寄地址,那么此算法可能会造成差别影响。

与差别对待形成对比,后者侧重于当子群组特征是算法决策过程的显式输入时导致的不公平现象。

差别待遇

在算法决策过程中纳入受试者的敏感属性,以便区别对待不同的人群子群组。

例如,假设有一种算法,可根据小人国居民在贷款申请中提供的数据来确定他们是否符合微型住宅贷款的条件。如果算法使用 Lilliputian 的派别(大端或小端)作为输入,则会在该维度上实施差别对待。

与差异化影响(侧重于算法决策对子群组的社会影响方面的差异,无论这些子群组是否是模型的输入)形成对比。

蒸馏

将一个模型(称为教师)的大小缩减为较小的模型(称为学生),并尽可能忠实地模拟原始模型的预测结果。知识蒸馏之所以有用,是因为较小的模型(学生)与较大的模型(教师)相比,具有以下两个主要优势:

- 推理时间更短

- 减少了内存和能耗用量

不过,学生的预测结果通常不如教师的预测结果。

蒸馏训练学生模型,以最大限度地减少基于学生模型和教师模型预测输出之间差异的损失函数。

比较和对比蒸馏与以下术语:

如需了解详情,请参阅机器学习速成课程中的 LLM:微调、蒸馏和提示工程。

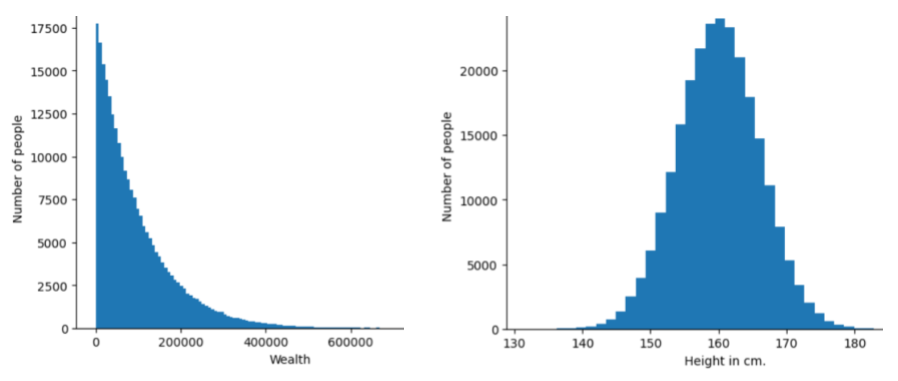

分布式训练

给定特征或标签的不同值的频次和范围。分布可反映特定值的可能性。

下图显示了两种不同分布的直方图:

- 左侧:财富与拥有相应财富的人数之间的幂律分布。

- 右侧是身高与拥有相应身高的人数之间的正态分布。

了解每个特征和标签的分布情况有助于您确定如何归一化值和检测离群值。

分布外是指未出现在数据集中的值或非常罕见的值。例如,对于由猫图片组成的数据集,土星的图片会被视为分布外数据。

分裂式层次聚类

请参阅层次聚类。

降采样

一个多含义术语,可以理解为下列两种含义之一:

- 减少特征中的信息量,以便更有效地训练模型。例如,在训练图像识别模型之前,将高分辨率图像降采样为分辨率较低的格式。

- 使用占比异常低、得到过度代表的类别样本训练模型,以改进未得到充分代表的类别的模型训练效果。 例如,在类别不平衡的数据集中,模型往往会学习到很多关于多数类的知识,但关于少数类的知识却不够。降采样有助于平衡多数类和少数类的训练量。

如需了解详情,请参阅机器学习速成课程中的数据集:不平衡的数据集。

DQN

深度 Q 网络的缩写。

dropout 正规化

一种在训练神经网络时很有用的正则化形式。Dropout 正规化的运作机制是,在一个梯度步中移除从神经网络层中随机选择的固定数量的单元。丢弃的单元越多,正则化就越强。这类似于训练神经网络以模拟较小网络的指数级规模集成。如需完整的详细信息,请参阅 Dropout: A Simple Way to Prevent Neural Networks from Overfitting(《Dropout:一种防止神经网络过拟合的简单方法》)。

动态

经常或持续做的事情。 在机器学习中,“动态”和“在线”是同义词。以下是机器学习中动态和在线的常见用途:

- 动态模型(或在线模型)是一种会频繁或持续重新训练的模型。

- 动态训练(或在线训练)是指频繁或持续的训练过程。

- 动态推理(或在线推理)是指根据需要生成预测的过程。

动态模型

一种经常(甚至持续)重新训练的模型。动态模型是“终身学习者”,会不断适应不断变化的数据。动态模型也称为在线模型。

与静态模型相对。

E

即刻执行

一种 TensorFlow 编程环境,操作可在其中立即运行。相比之下,在图执行中调用的操作在得到明确评估之前不会运行。即刻执行是一种命令式接口,就像大多数编程语言中的代码一样。相比图执行程序,调试即刻执行程序通常要容易得多。

早停法

一种正则化方法,涉及在训练损失停止下降之前结束训练。在早停法中,当验证数据集的损失开始增加时(即泛化性能变差时),您会故意停止训练模型。

与提前退出相对。

推土机距离 (EMD)

一种衡量两种分布相对相似度的指标。 推土机距离越小,分布越相似。

修改距离

衡量两个文本字符串彼此之间的相似程度。 在机器学习中,编辑距离非常有用,原因如下:

- 编辑距离很容易计算。

- 编辑距离可以比较已知彼此相似的两个字符串。

- 编辑距离可以确定不同字符串与给定字符串的相似程度。

编辑距离有多种定义,每种定义都使用不同的字符串操作。如需查看示例,请参阅 Levenshtein 距离。

Einsum 表示法

一种用于描述如何组合两个张量的有效表示法。张量组合方式为:将一个张量的元素与另一个张量的元素相乘,然后将乘积相加。 Einsum 表示法使用符号来标识每个张量的轴,并重新排列这些相同的符号来指定新结果张量的形状。

NumPy 提供了一个通用的 Einsum 实现。

嵌入层

一种特殊的隐藏层,可针对高维分类特征进行训练,以逐步学习低维嵌入向量。与仅基于高维分类特征进行训练相比,嵌入层可让神经网络的训练效率大幅提高。

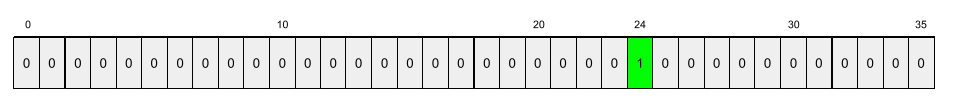

例如,地球目前支持约 73,000 种树。假设树种是模型中的一个特征,那么模型的输入层将包含一个长度为 73,000 的独热向量。

例如,baobab 可能会以如下方式表示:

包含 73,000 个元素的数组非常长。如果您不向模型添加嵌入层,则由于要乘以 72,999 个零,训练将非常耗时。假设您选择的嵌入层包含 12 个维度。因此,嵌入层将逐渐学习每种树木的新嵌入向量。

在某些情况下,哈希处理是嵌入层的合理替代方案。

如需了解详情,请参阅机器学习速成课程中的嵌入。

嵌入空间

更高维度的向量空间中的特征所映射到的 d 维向量空间。嵌入空间经过训练,可捕获对预期应用有意义的结构。

嵌入向量

从广义上讲,是指来自任何 隐藏层的浮点数数组,用于描述该隐藏层的输入。 通常,嵌入向量是在嵌入层中训练的浮点数数组。例如,假设嵌入层必须学习地球上 73,000 种树木中每种树木的嵌入向量。以下数组可能就是一棵猴面包树的嵌入向量:

嵌入向量不是一堆随机数字。嵌入层通过训练来确定这些值,类似于神经网络在训练期间学习其他权重的方式。数组的每个元素都是树种在某个特征方面的评级。哪个元素代表哪种树种的特征?人类很难确定这一点。

嵌入向量在数学上令人称奇之处在于,相似的内容具有相似的浮点数组。例如,相似的树种比不相似的树种具有更相似的浮点数集。红杉和巨杉是相关的树种,因此它们将具有比红杉和椰子树更相似的一组浮点数。即使您使用相同的输入重新训练模型,嵌入向量中的数字也会在每次重新训练模型时发生变化。

经验累积分布函数(eCDF 或 EDF)

基于真实数据集的实证测量结果的累积分布函数。函数在 x 轴上任意点的值是数据集中小于或等于指定值的观测值的比例。

经验风险最小化 (ERM)

选择可最大限度减少训练集损失的函数。与结构风险最小化相对。

编码器

一般来说,任何将原始、稀疏或外部表示形式转换为经过更多处理、更密集或更内部的表示形式的机器学习系统。

编码器通常是较大模型的组成部分,并且经常与解码器配对使用。有些 Transformer 会将编码器与解码器配对,不过其他 Transformer 只使用编码器或只使用解码器。

有些系统使用编码器的输出作为分类或回归网络的输入。

在序列到序列任务中,编码器会接收输入序列并返回内部状态(一个向量)。然后,解码器使用该内部状态来预测下一个序列。

如需了解 Transformer 架构中编码器的定义,请参阅 Transformer。

如需了解详情,请参阅机器学习速成课程中的大语言模型:什么是大语言模型。

endpoints

可通过网络寻址的位置(通常为网址),服务可通过该位置访问。

集成学习

一组独立训练的模型,其预测结果经过平均处理或汇总。在许多情况下,集成模型比单个模型能做出更好的预测。例如,随机森林是由多个决策树构建的集成学习模型。请注意,并非所有决策森林都是集成学习模型。

如需了解详情,请参阅机器学习速成课程中的随机森林。

熵

在 信息论中,用于描述概率分布的不可预测程度。或者,熵也可以定义为每个示例包含的信息量。当随机变量的所有值具有相同的可能性时,分布的熵最大。

具有两个可能值“0”和“1”(例如,二元分类问题中的标签)的集合的熵具有以下公式:

H = -p log p - q log q = -p log p - (1-p) * log (1-p)

其中:

- H 是熵。

- p 是“1”示例的比例。

- q 是“0”示例的比例。请注意,q = (1 - p)

- 对数通常为以 2 为底的对数。在本例中,熵单位为比特。

例如,假设情况如下:

- 100 个示例包含值“1”

- 300 个示例包含值“0”

因此,熵值为:

- p = 0.25

- q = 0.75

- H = (-0.25)log2(0.25) - (0.75)log2(0.75) = 每个示例 0.81 位

完全平衡的集合(例如,200 个“0”和 200 个“1”)的每个示例的熵为 1.0 位。随着集合变得越来越不平衡,其熵会趋向于 0.0。

在决策树中,熵有助于制定信息增益,从而帮助分裂器在分类决策树的增长过程中选择条件。

将熵与以下对象进行比较:

熵通常称为香农熵。

如需了解详情,请参阅决策森林课程中的使用数值特征进行二元分类的精确分裂器。

环境

在强化学习中,包含智能体并允许智能体观察世界状态的世界。例如,所表示的世界可以是国际象棋等游戏,也可以是迷宫等现实世界。当智能体对环境应用某项操作时,环境会在状态之间转换。

分集

周期数

在训练时,对整个训练集的一次完整遍历,不会漏掉任何一个样本。

一个周期表示 N/批次大小次训练迭代,其中 N 是样本总数。

例如,假设存在以下情况:

- 该数据集包含 1,000 个示例。

- 批次大小为 50 个样本。

因此,一个周期需要 20 次迭代:

1 epoch = (N/batch size) = (1,000 / 50) = 20 iterations

如需了解详情,请参阅机器学习速成课程中的线性回归:超参数。

epsilon-greedy 策略

在强化学习中,一种政策,以 epsilon 概率遵循随机政策,否则遵循贪婪政策。例如,如果 epsilon 为 0.9,则政策有 90% 的时间遵循随机政策,有 10% 的时间遵循贪婪政策。

在连续的剧集中,算法会减小 epsilon 的值,以便从遵循随机政策转为遵循贪婪政策。通过调整政策,智能体首先会随机探索环境,然后贪婪地利用随机探索的结果。

机会均等

一种公平性指标,用于评估模型是否能针对敏感属性的所有值同样准确地预测出理想结果。换句话说,如果模型的理想结果是正类别,那么目标就是让所有组的真正例率保持一致。

机会平等与赔率均衡有关,后者要求所有群组的真正例率和假正例率都相同。

假设 Glubbdubdrib 大学允许小人国人和巨人国人参加严格的数学课程。Lilliputians 的中学提供完善的数学课程,绝大多数学生都符合大学课程的入学条件。Brobdingnagians 的中学根本不提供数学课程,因此,他们的学生中只有极少数人符合条件。如果合格学生被录取的机会均等,无论他们是小人国人还是巨人国人,那么对于“已录取”这一首选标签,就满足了在国籍(小人国或巨人国)方面的机会均等。

例如,假设有 100 名小人国人和 100 名巨人国人申请了 Glubbdubdrib 大学,录取决定如下:

表 1. Lilliputian 申请者(90% 符合条件)

| 符合资格 | 不合格 | |

|---|---|---|

| 已录取 | 45 | 3 |

| 已拒绝 | 45 | 7 |

| 总计 | 90 | 10 |

|

被录取的合格学生所占百分比:45/90 = 50% 被拒的不合格学生所占百分比:7/10 = 70% 被录取的小人国学生所占总百分比:(45+3)/100 = 48% |

||

表 2. Brobdingnagian 申请者(10% 符合条件):

| 符合资格 | 不合格 | |

|---|---|---|

| 已录取 | 5 | 9 |

| 已拒绝 | 5 | 81 |

| 总计 | 10 | 90 |

|

符合条件的学生录取百分比:5/10 = 50% 不符合条件的学生拒绝百分比:81/90 = 90% Brobdingnagian 学生总录取百分比:(5+9)/100 = 14% |

||

上述示例满足了合格学生在入学方面的机会平等,因为合格的利立浦特人和布罗卜丁奈格人都只有 50% 的入学机会。

虽然满足了机会均等,但未满足以下两个公平性指标:

- 人口统计均等:小人国人和巨人国人被大学录取的比例不同;48% 的小人国学生被录取,但只有 14% 的巨人国学生被录取。

- 机会均等:虽然符合条件的小人国学生和巨人国学生被录取的几率相同,但不符合条件的小人国学生和巨人国学生被拒的几率相同这一额外限制条件并未得到满足。不合格的 Lilliputian 的拒绝率为 70%,而不合格的 Brobdingnagian 的拒绝率为 90%。

如需了解详情,请参阅机器学习速成课程中的公平性:机会平等。

均衡赔率

一种公平性指标,用于评估模型是否能针对敏感属性的所有值,同样准确地预测正类别和负类别的结果,而不仅仅是其中一个类别。换句话说,所有组的真正例率和假负例率都应相同。

均衡赔率与机会均等相关,后者仅关注单个类别(正类别或负类别)的错误率。

例如,假设 Glubbdubdrib 大学允许小人国人和巨人国人同时参加一项严格的数学课程。Lilliputians 的中学提供完善的数学课程,绝大多数学生都符合大学课程的入学条件。Brobdingnagians 的中学根本不提供数学课程,因此,他们的学生中只有极少数人符合条件。只要满足以下条件,即可实现均衡赔率:无论申请者是小人国人还是巨人国人,如果他们符合条件,被该计划录取的可能性都相同;如果他们不符合条件,被拒绝的可能性也相同。

假设有 100 名小人国人和 100 名巨人国人申请了 Glubbdubdrib 大学,并且录取决定如下:

表 3. Lilliputian 申请者(90% 符合条件)

| 符合资格 | 不合格 | |

|---|---|---|

| 已录取 | 45 | 2 |

| 已拒绝 | 45 | 8 |

| 总计 | 90 | 10 |

|

被录取的合格学生所占百分比:45/90 = 50% 被拒绝的不合格学生所占百分比:8/10 = 80% 被录取的 Lilliputian 学生总数所占百分比:(45+2)/100 = 47% |

||

表 4. Brobdingnagian 申请者(10% 符合条件):

| 符合资格 | 不合格 | |

|---|---|---|

| 已录取 | 5 | 18 |

| 已拒绝 | 5 | 72 |

| 总计 | 10 | 90 |

|

符合条件的学生被录取百分比:5/10 = 50% 不符合条件的学生被拒绝百分比:72/90 = 80% Brobdingnagian 学生被录取总百分比:(5+18)/100 = 23% |

||

由于符合条件的小人国学生和巨人国学生被录取的概率均为 50%,而不符合条件的小人国学生和巨人国学生被拒绝的概率均为 80%,因此满足了赔率均等条件。

“监督学习中的机会平等”中对均衡赔率的正式定义如下:“如果预测变量 Ŷ 和受保护属性 A 在以结果 Y 为条件的情况下相互独立,则预测变量 Ŷ 满足关于受保护属性 A 和结果 Y 的均衡赔率。”

Estimator

已弃用的 TensorFlow API。使用 tf.keras 而不是 Estimator。

evals

主要用作 LLM 评估的缩写。 更广泛地说,evals 是任何形式的评估的缩写。

评估

衡量模型质量或比较不同模型的过程。

若要评估监督式机器学习模型,您通常需要根据验证集和测试集来判断模型的效果。评估 LLM 通常涉及更广泛的质量和安全性评估。

完全匹配

一种非此即彼的指标,即模型的输出要么与标准答案或参考文本完全一致,要么完全不一致。例如,如果标准答案是 orange,则只有模型输出 orange 满足完全匹配条件。

完全匹配还可以评估输出为序列(已排名商品列表)的模型。一般来说,完全匹配要求生成的排名列表与实际情况完全一致;也就是说,两个列表中的每个项目都必须按相同的顺序排列。不过,如果评估依据包含多个正确序列,那么完全匹配仅要求模型的输出与一个正确序列匹配。

示例

一行特征的值,可能还包含一个标签。监督式学习中的示例大致分为两类:

例如,假设您正在训练一个模型,以确定天气条件对学生考试成绩的影响。以下是三个带标签的示例:

| 功能 | 标签 | ||

|---|---|---|---|

| 温度 | 湿度 | 压力 | 测试分数 |

| 15 | 47 | 998 | 良好 |

| 19 | 34 | 1020 | 极佳 |

| 18 | 92 | 1012 | 差 |

以下是三个未标记的示例:

| 温度 | 湿度 | 压力 | |

|---|---|---|---|

| 12 | 62 | 1014 | |

| 21 | 47 | 1017 | |

| 19 | 41 | 1021 |

数据集的行通常是示例的原始来源。 也就是说,一个示例通常由数据集中的一部分列组成。此外,示例中的特征还可以包括合成特征,例如特征交叉。

如需了解详情,请参阅“机器学习简介”课程中的监督式学习。

经验回放

在强化学习中,一种用于减少训练数据中时间相关性的 DQN 技术。代理将状态转换存储在回放缓冲区中,然后从回放缓冲区中抽样转换以创建训练数据。

实验者偏差

请参阅确认偏差。

梯度爆炸问题

深度神经网络(尤其是循环神经网络)中的梯度出人意料地变得陡峭(高)的趋势。陡峭的梯度通常会导致深度神经网络中每个节点的权重发生非常大的更新。

如果模型出现梯度爆炸问题,则很难或无法进行训练。梯度裁剪可以缓解此问题。

与梯度消失问题相对。

极简摘要 (xsum)

用于评估 LLM 总结单个文档的能力的数据集。数据集中的每个条目都包含以下内容:

- 由英国广播公司 (BBC) 撰写的一份文档。

- 相应文档的单句摘要。

如需了解详情,请参阅不要给我详细信息,只要摘要!Topic-Aware Convolutional Neural Networks for Extreme Summarization。

F

F1

一种“汇总”二元分类指标,同时依赖于精确率和召回率。公式如下:

真实性

在机器学习领域中,一种描述模型(其输出基于现实)的属性。事实性是一个概念,而不是一个指标。 例如,假设您向大型语言模型发送以下提示:

食盐的化学式是什么?

如果模型侧重于事实性,则会回答:

NaCl

人们很容易认为所有模型都应基于事实。不过,某些提示(例如以下提示)应促使生成式 AI 模型优化创意性,而不是事实性。

给我讲一个关于宇航员和毛毛虫的五行打油诗。

这样创作出的五行打油诗不太可能基于现实。

与事实依据相对。

公平性约束

对算法应用限制条件,以确保满足一项或多项公平性定义。公平性限制的示例包括:- 对模型输出进行后处理。

- 更改损失函数,以纳入对违反公平性指标的行为的惩罚。

- 直接向优化问题添加数学约束。

公平性指标

可衡量的“公平性”的数学定义。 一些常用的公平性指标包括:

许多公平性指标是互斥的;请参阅公平性指标互不相容。

假负例 (FN)

被模型错误地预测为负类别的样本。例如,模型预测某封电子邮件不是垃圾邮件(负类别),但该电子邮件实际上是垃圾邮件。

假负例率

模型错误地将实际正例预测为负例的比例。以下公式用于计算假负率:

如需了解详情,请参阅机器学习速成课程中的阈值和混淆矩阵。

假正例 (FP)

被模型错误地预测为正类别的样本。例如,模型预测某封电子邮件是垃圾邮件(正类别),但该电子邮件实际上不是垃圾邮件。

如需了解详情,请参阅机器学习速成课程中的阈值和混淆矩阵。

假正例率 (FPR)

模型错误地将实际负例预测为正例的比例。以下公式用于计算假正例率:

假正例率是 ROC 曲线的 x 轴。

如需了解详情,请参阅机器学习速成课程中的分类:ROC 和 AUC。

快速衰减

一种用于提升 LLM 性能的训练技术。快速衰减是指在训练期间快速降低学习速率。此策略有助于防止模型对训练数据出现过拟合,并提高泛化能力。

功能

机器学习模型的输入变量。一个示例包含一个或多个特征。例如,假设您正在训练一个模型,以确定天气条件对学生考试成绩的影响。下表显示了三个示例,每个示例都包含三个特征和一个标签:

| 功能 | 标签 | ||

|---|---|---|---|

| 温度 | 湿度 | 压力 | 测试分数 |

| 15 | 47 | 998 | 92 |

| 19 | 34 | 1020 | 84 |

| 18 | 92 | 1012 | 87 |

与标签相对。

如需了解详情,请参阅“机器学习简介”课程中的监督式学习。

特征组合

例如,假设有一个“情绪预测”模型,它使用以下四个区间之一来表示温度:

freezingchillytemperatewarm

并以以下三个区间之一表示风速:

stilllightwindy

如果不进行特征交叉,线性模型会针对上述七个不同的分桶分别进行独立训练。因此,模型会基于 freezing 进行训练,而不会基于 windy 进行训练。

或者,您也可以创建温度和风速的特征交叉。此合成特征将具有以下 12 个可能的值:

freezing-stillfreezing-lightfreezing-windychilly-stillchilly-lightchilly-windytemperate-stilltemperate-lighttemperate-windywarm-stillwarm-lightwarm-windy

借助特征交叉,模型可以学习freezing-windy天和freezing-still天之间的情绪差异。

如果您根据两个各自具有许多不同分桶的特征创建合成特征,则生成的特征交叉将具有大量可能的组合。例如,如果一个特征有 1,000 个分桶,另一个特征有 2,000 个分桶,那么生成的特征交叉将有 2,000,000 个分桶。

从形式上讲,交叉联接是笛卡尔积。

特征交叉主要用于线性模型,很少用于神经网络。

如需了解详情,请参阅机器学习速成课程中的类别数据:特征交叉。

特征工程

一种包含以下步骤的流程:

- 确定哪些特征可能在训练模型方面非常有用。

- 将数据集中的原始数据转换为这些特征的高效版本。

例如,您可能会认为 temperature 是一项有用的功能。然后,您可以尝试使用分桶来优化模型可从不同 temperature 范围中学习的内容。

如需了解详情,请参阅机器学习速成课程中的数值数据:模型如何使用特征向量提取数据。

特征提取

一个多含义术语,具有下列两种含义之一:

特征重要性

与变量重要性的含义相同。

功能集

机器学习模型训练时采用的一组特征。 例如,用于预测房价的模型的简单特征集可能包含邮政编码、房产面积和房产状况。

特征规范

介绍从 tf.Example 协议缓冲区提取特征数据所需的信息。由于 tf.Example 协议缓冲区只是一个数据容器,因此您必须指定以下内容:

- 要提取的数据(即特征的键)

- 数据类型(例如,float 或 int)

- 长度(固定或可变)

特征向量

构成示例的特征值数组。特征向量在训练和推理期间作为输入。例如,具有两个离散特征的模型的特征向量可能如下所示:

[0.92, 0.56]

每个示例都为特征向量提供不同的值,因此下一个示例的特征向量可能如下所示:

[0.73, 0.49]

特征工程决定了如何在特征向量中表示特征。例如,具有 5 个可能值的二元分类特征可以使用独热编码来表示。在这种情况下,特定示例的特征向量部分将包含四个零和一个位于第三位置的 1.0,如下所示:

[0.0, 0.0, 1.0, 0.0, 0.0]

再举一个例子,假设您的模型包含三个特征:

- 采用独热编码表示的具有五个可能值的二元分类特征;例如:

[0.0, 1.0, 0.0, 0.0, 0.0] - 另一个具有三个可能值的二元分类特征,以独热编码表示;例如:

[0.0, 0.0, 1.0] - 浮点特征;例如:

8.3。

在这种情况下,每个示例的特征向量将由 9 个值表示。根据上一个列表中的示例值,特征向量将为:

0.0 1.0 0.0 0.0 0.0 0.0 0.0 1.0 8.3

如需了解详情,请参阅机器学习速成课程中的数值数据:模型如何使用特征向量提取数据。

特征化

从文档或视频等输入源中提取特征并将这些特征映射到特征向量的过程。

一些机器学习专家将“featurization”用作特征工程或特征提取的同义词。

联合学习

是一种分布式机器学习方法,使用驻留在智能手机等设备上的分散样本来训练机器学习模型。在联邦学习中,一部分设备会从中央协调服务器下载当前模型。设备会使用存储在设备上的示例来改进模型。然后,设备会将模型改进(但不会上传训练示例)上传到协调服务器,在服务器上,这些改进会与其他更新聚合,从而生成改进的全局模型。聚合完成后,设备计算出的模型更新不再需要,可以舍弃。

由于训练示例永远不会上传,因此联邦学习遵循聚焦数据收集和数据最小化的隐私原则。

如需了解详情,请参阅联邦学习漫画(没错,就是漫画)。

反馈环

在机器学习中,一种模型预测会影响同一模型或其他模型的训练数据的情况。例如,推荐电影的模型会影响用户看到的电影,进而影响后续的电影推荐模型。

如需了解详情,请参阅机器学习速成课程中的生产环境机器学习系统:需要提出的问题。

前馈神经网络 (FFN)

没有循环或递归连接的神经网络。例如,传统深度神经网络属于前馈神经网络。与循环的循环神经网络相对。

少量样本学习

一种机器学习方法,通常用于对象分类,旨在仅通过少量训练示例来训练有效的分类模型。

少样本提示

包含多个(“少量”)示例的提示,用于演示大语言模型应如何回答。例如,以下冗长的问题包含两个示例,向大型语言模型展示了如何回答查询。

| 一个提示的组成部分 | 备注 |

|---|---|

| 指定国家/地区的官方货币是什么? | 您希望 LLM 回答的问题。 |

| 法国:欧元 | 举个例子。 |

| 英国:英镑 | 再举一个例子。 |

| 印度: | 实际查询。 |

与零样本提示和单样本提示相比,少样本提示通常会产生更理想的结果。不过,少样本提示需要更长的提示。

如需了解详情,请参阅机器学习速成课程中的提示工程。

小提琴

一个以 Python 为先的配置库,用于设置函数和类的值,而无需侵入式代码或基础设施。对于 Pax(以及其他机器学习代码库),这些函数和类表示模型和训练 超参数。

Fiddle 假设机器学习代码库通常分为以下几部分:

- 库代码,用于定义层和优化器。

- 数据集“粘合”代码,用于调用库并将所有内容连接在一起。

Fiddle 以未评估且可变的形式捕获粘合代码的调用结构。

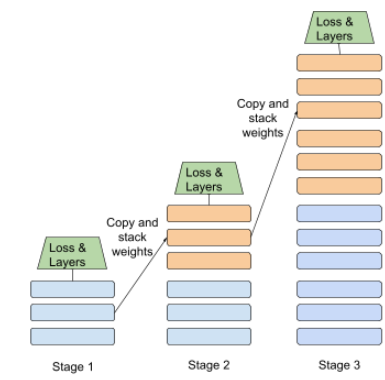

微调

对预训练模型执行的第二次特定任务训练,以针对特定应用场景优化其参数。例如,某些大型语言模型的完整训练序列如下所示:

- 预训练:在海量通用数据集(例如所有英文版维基百科页面)上训练大语言模型。

- 微调:训练预训练模型以执行特定任务,例如回答医疗查询。微调通常涉及数百或数千个专注于特定任务的示例。

再举一例,大型图片模型的完整训练序列如下所示:

- 预训练:在庞大的通用图片数据集(例如 Wikimedia Commons 中的所有图片)上训练大型图片模型。

- 微调:训练预训练模型以执行特定任务,例如生成虎鲸图片。

微调可能需要采用以下策略的任意组合:

- 修改预训练模型的所有现有参数。这有时称为“完全微调”。

- 仅修改预训练模型的部分现有参数(通常是离输出层最近的层),同时保持其他现有参数不变(通常是离输入层最近的层)。请参阅参数高效微调。

- 添加更多层,通常是在最接近输出层的现有层之上添加。

微调是一种迁移学习。因此,微调可能会使用与训练预训练模型时不同的损失函数或模型类型。例如,您可以对预训练的大型图像模型进行微调,以生成一个回归模型,该模型可返回输入图像中鸟的数量。

比较和对比微调与以下术语:

如需了解详情,请参阅机器学习速成课程中的微调。

Flash 模型

一系列相对较小的 Gemini 模型,经过优化,可实现快速响应和低延迟。Flash 模型专为需要快速响应和高吞吐量的各种应用而设计。

Flax

一个基于 JAX 构建的用于深度学习的高性能开源 库。Flax 提供用于训练 神经网络的函数,以及用于评估其性能的方法。

Flaxformer

一个基于 Flax 构建的开源 Transformer 库,主要用于自然语言处理和多模态研究。

忘记门控

长短期记忆细胞中用于调节信息流经细胞的部分。遗忘门通过决定从细胞状态中舍弃哪些信息来保持上下文。

基础模型

一种非常大的预训练模型,使用庞大而多样的训练集进行训练。基础模型可以执行以下两项操作:

换句话说,基础模型在一般意义上已经非常强大,但可以进一步自定义,以便在特定任务中发挥更大的作用。

成功次数所占的比例

用于评估机器学习模型生成的文本的指标。 成功率是指“成功”生成的文本输出数量除以生成的文本输出总数量。例如,如果某个大型语言模型生成了 10 个代码块,其中 5 个成功生成,那么成功率就是 50%。

虽然成功率在整个统计学中都非常有用,但在机器学习中,此指标主要用于衡量可验证的任务,例如代码生成或数学问题。

完整 softmax

与 softmax 的含义相同。

与候选采样相对。

如需了解详情,请参阅机器学习速成课程中的神经网络:多类别分类。

全连接层

全连接层又称为密集层。

函数转换

一种以函数为输入并返回转换后的函数作为输出的函数。JAX 使用函数转换。

G

GAN

生成对抗网络的缩写。

Gemini

由 Google 最先进的 AI 组成的生态系统。此生态系统的要素包括:

- 各种 Gemini 模型。

- 与 Gemini 模型进行交互的对话式界面。 用户输入提示,Gemini 会针对这些提示给出回答。

- 各种 Gemini API。

- 基于 Gemini 模型的各种商业产品;例如 Gemini for Google Cloud。

Gemini 模型

Google 基于先进的 Transformer 的多模态模型。Gemini 模型专为与智能体集成而设计。

用户可以通过多种方式与 Gemini 模型互动,包括通过交互式对话界面和 SDK。

Gemma

一系列轻量级开放模型,采用与 Gemini 模型相同的研究成果和技术构建而成。有多种不同的 Gemma 模型可供选择,每种模型都提供不同的功能,例如视觉、代码和指令遵循。如需了解详情,请参阅 Gemma。

GenAI 或 genAI

生成式 AI 的缩写。

泛化

模型针对以前未见过的新数据做出正确预测的能力。能够泛化的模型与过拟合模型正好相反。

如需了解详情,请参阅机器学习速成课程中的泛化。

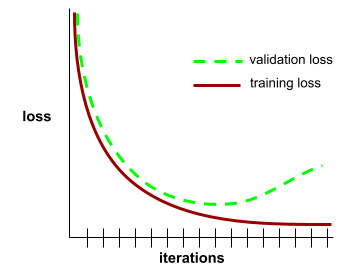

泛化曲线

泛化曲线可以帮助您检测可能出现的过拟合。例如,以下泛化曲线表明出现过拟合,因为验证损失最终明显高于训练损失。

如需了解详情,请参阅机器学习速成课程中的泛化。

广义线性模型

一种基于其他类型噪声(例如 Poisson 噪声或类别噪声)的其他类型模型,是基于 Gaussian 噪声的最小二乘回归模型的泛化。广义线性模型的示例包括:

- 逻辑回归

- 多类别回归

- 最小二乘回归

可以通过凸优化找到广义线性模型的参数。

广义线性模型具有以下特性:

- 最优的最小二乘回归模型的平均预测结果等于训练数据的平均标签。

- 最优的逻辑回归模型预测的平均概率等于训练数据的平均标签。

广义线性模型的能力受其特征的限制。与深度模型不同,广义线性模型无法“学习新特征”。

生成的文本

一般来说,指机器学习模型输出的文本。在评估大型语言模型时,某些指标会将生成的文本与参考文本进行比较。例如,假设您要确定某个机器学习模型从法语翻译为荷兰语的有效性。在此示例中:

- 生成的文本是机器学习模型输出的荷兰语翻译。

- 参考文本是人工翻译人员(或软件)创建的荷兰语译文。

请注意,某些评估策略不涉及参考文本。

生成对抗网络 (GAN)

一种用于创建新数据的系统,其中生成器负责创建数据,而判别器负责确定创建的数据是否有效。

如需了解详情,请参阅生成对抗网络课程。

生成式 AI

一个新兴的变革性领域,没有正式定义。 不过,大多数专家都认为,生成式 AI 模型可以创建(“生成”)以下类型的内容:

- 复杂

- 连贯

- 原图

生成式 AI 的示例包括:

- 大语言模型,可生成复杂的原创文本并回答问题。

- 图片生成模型,可生成独一无二的图片。

- 音频和音乐生成模型,可以创作原创音乐或生成逼真的语音。

- 视频生成模型,可生成原创视频。

一些较早的技术(包括 LSTM 和 RNN)也可以生成原创且连贯的内容。一些专家认为这些早期技术属于生成式 AI,而另一些专家则认为,真正的生成式 AI 需要能够生成比这些早期技术更复杂的输出。

与预测性机器学习相对。

生成模型

实际上是指执行以下任一操作的模型:

- 从训练数据集创建(生成)新样本。 例如,用诗歌数据集进行训练后,生成模型可以创作诗歌。生成对抗网络的生成器部分属于此类别。

- 确定新样本来自训练集或通过创建训练集的机制创建的概率。例如,用包含英文句子的数据集进行训练后,生成模型可确定新输入是有效英文句子的概率。

从理论上讲,生成模型可以辨别数据集中样本或特定特征的分布情况。具体来说:

p(examples)

非监督式学习模型属于生成模型。

与判别模型相对。

生成器

与判别模型相对。

Gini 不纯度

与 entropy 类似的指标。拆分器使用从 Gini 不纯度或熵派生的值来为分类决策树组成条件。 信息增益源自熵。没有普遍接受的源自 Gini 不纯度的指标等效术语;不过,此未命名的指标与信息增益同样重要。

Gini 不纯度也称为 Gini 指数,或简称为 Gini。

黄金数据集

一组人工整理的数据,用于捕获标准答案。团队可以使用一个或多个黄金数据集来评估模型的质量。

有些黄金数据集捕获了标准答案的不同子网域。例如,用于图片分类的黄金数据集可能会捕获光照条件和图片分辨率。

黄金回答

2 + 2

黄金响应应为:

4

Google AI Studio

Google 提供的一款工具,可提供简单易用的界面,用于测试和构建使用 Google 大语言模型的应用。 如需了解详情,请参阅 Google AI Studio 首页。

GPT(生成式预训练转换器)

由 OpenAI 开发的一系列基于 Transformer 的大语言模型。

GPT 变体可应用于多种模态,包括:

- 图片生成(例如 ImageGPT)

- 文本到图像生成(例如 DALL-E)。

渐变色

相对于所有自变量的偏导数向量。在机器学习中,梯度是模型函数偏导数的向量。梯度指向最高速上升的方向。

梯度累积

一种反向传播技术,它仅在每个周期更新一次参数,而不是在每次迭代时更新。在处理每个 mini-batch 后,梯度累积只会更新梯度总和。然后,在处理完周期中的最后一个小批次后,系统最终会根据所有梯度变化的总和来更新参数。

当批次大小与可用于训练的内存量相比非常大时,梯度累积非常有用。当内存出现问题时,人们自然会倾向于减小批次大小。不过,在正常反向传播中,减小批次大小会增加参数更新次数。梯度累积使模型能够避免内存问题,同时仍能高效训练。

梯度提升(决策)树 (GBT)

一种决策森林,其中:

如需了解详情,请参阅决策森林课程中的梯度提升决策树。

梯度提升

一种训练算法,其中训练弱模型以迭代方式提高强模型的质量(减少损失)。例如,弱模型可以是线性模型或小型决策树模型。强模型成为之前训练的所有弱模型的总和。

在最简单的梯度提升形式中,每次迭代都会训练一个弱模型来预测强模型的损失梯度。然后,通过减去预测梯度来更新强模型的输出,类似于 梯度下降。

其中:

- $F_{0}$ 是初始强模型。

- $F_{i+1}$ 是下一个强模型。

- $F_{i}$ 是当前的强模型。

- $\xi$ 是一个介于 0.0 和 1.0 之间的值,称为收缩率,类似于梯度下降中的学习率。

- $f_{i}$ 是经过训练的弱模型,用于预测 $F_{i}$ 的损失梯度。

梯度提升的现代变体还在计算中纳入了损失的二阶导数(Hessian)。

决策树通常用作梯度提升中的弱模型。请参阅梯度提升(决策)树。

梯度裁剪

一种常用机制,用于在通过梯度下降来训练模型时,通过人为限制(剪裁)梯度的最大值来缓解梯度爆炸问题。

梯度下降法

一种可最大限度减少损失的数学技术。 梯度下降法以迭代方式调整权重和偏差,逐渐找到可将损失降至最低的最佳组合。

梯度下降比机器学习早得多。

如需了解详情,请参阅机器学习速成课程中的线性回归:梯度下降。

图表

TensorFlow 中的一种计算规范。图中的节点表示操作。边缘具有方向,表示将某项操作的结果(一个Tensor)作为一个操作数传递给另一项操作。可以使用 TensorBoard 可视化图。

图执行

一种 TensorFlow 编程环境,在该环境中,图执行程序会先构造一个图,然后执行该图的所有部分或某些部分。图执行是 TensorFlow 1.x 中的默认执行模式。

与即刻执行相对。

贪婪策略

标准答案关联性

一种模型的属性,其输出基于(“依据”)特定的源材料。例如,假设您将一本完整的物理教科书作为输入内容(“上下文”)提供给大语言模型。然后,您向该大语言模型提出一个物理学问题。 如果模型的回答反映了该教科书中的信息,则表示该模型以该教科书为依据。请注意,接地模型并不总是事实模型。例如,输入的物理教科书可能包含错误。

标准答案

现实。

实际发生的事情。

例如,假设有一个二元分类模型,用于预测大学一年级学生是否会在六年内毕业。此模型的标准答案是相应学生是否在 6 年内实际毕业。

群体归因偏差

假设某个人的真实情况适用于相应群体中的每个人。如果使用便利抽样收集数据,群体归因偏差的影响会加剧。在非代表性样本中,归因可能不会反映现实。

另请参阅群外同质性偏差和群内偏差。另请参阅机器学习速成课程中的公平性:偏差类型,了解详情。

H

幻觉

生成式 AI 模型生成看似合理但实际上不正确的输出,并声称自己正在对现实世界做出断言。例如,如果生成式 AI 模型声称巴拉克·奥巴马于 1865 年去世,则表示该模型出现了幻觉。

哈希技术

机器学习中对分类数据进行分桶的机制,尤其适合以下情形:类别数量庞大,但实际出现在数据集中的类别数量相对较小。

例如,地球上约有 7.3 万种树。您可以用 7.3 万个单独的分类桶表示所有 7.3 万种树中的每一种。或者,如果实际出现在数据集中的树只有 200 种,您可以进行哈希处理,将这些树种划分到约 500 个桶中。

一个桶可能包含多个树种。例如,哈希可能会将“猴面包树”和“红枫”这两个基因相异的树种放入同一个桶中。无论如何,哈希仍然是将大型分类集合映射到所选数量的桶的好方法。通过以确定的方式对值进行分组,哈希将具有大量可能值的分类特征变为更少数量的值。

如需了解详情,请参阅机器学习速成课程中的类别型数据:词汇和独热编码。

启发法

一种简单且可快速实施的问题解决方案。例如,“采用启发法,我们实现了 86% 准确率。当我们改为使用深度神经网络时,准确率上升到 98%。”

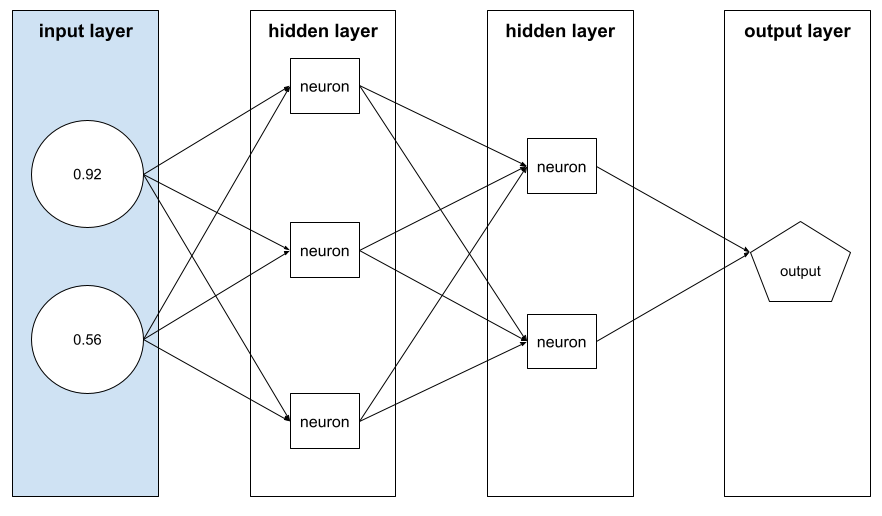

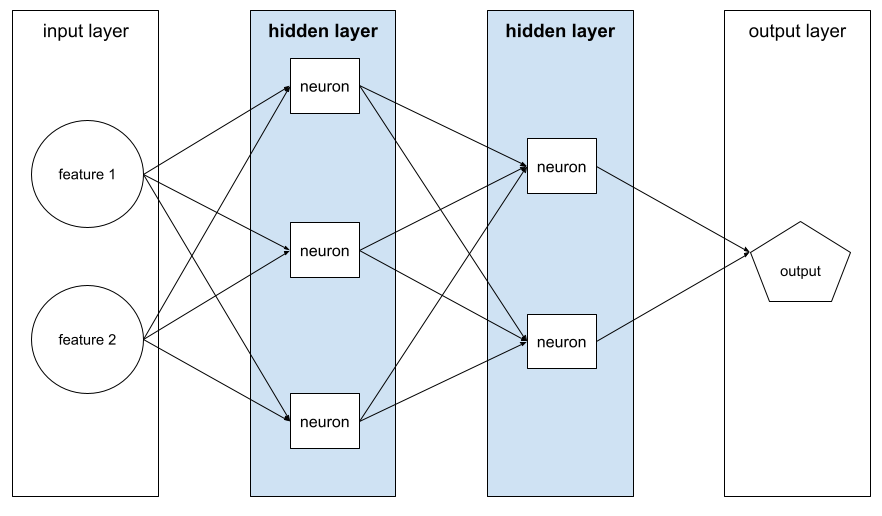

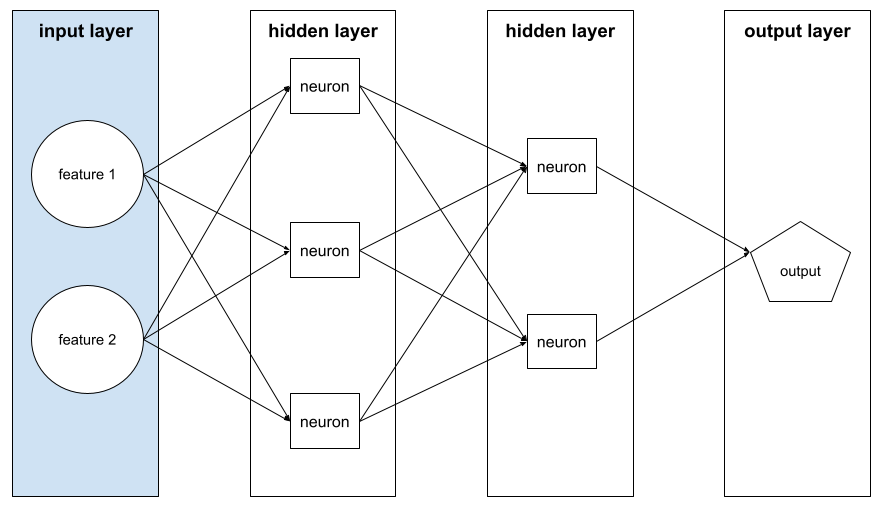

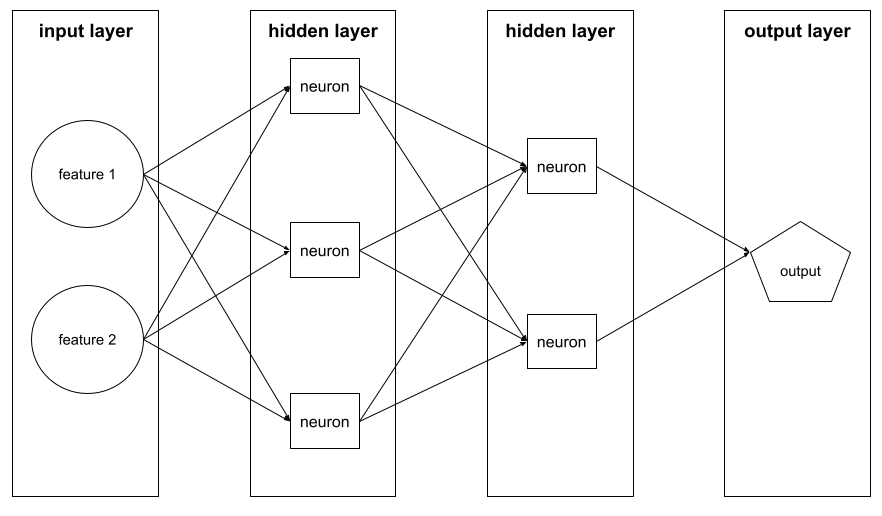

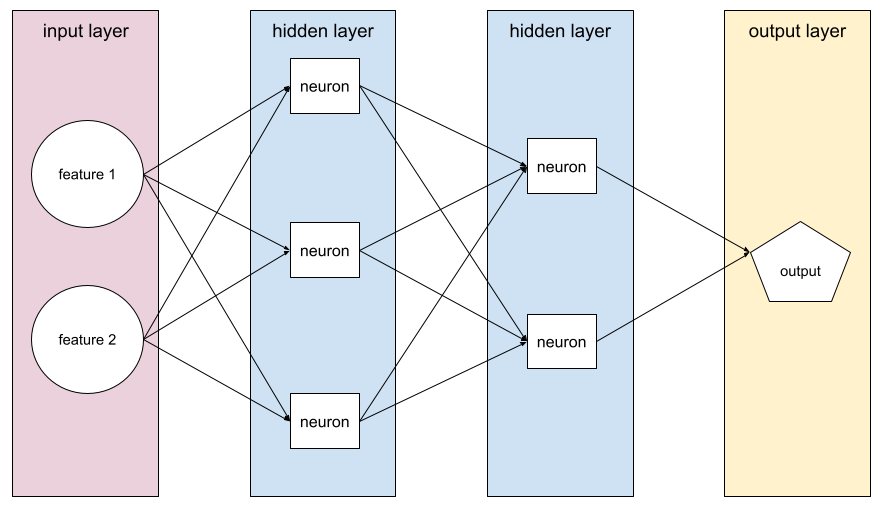

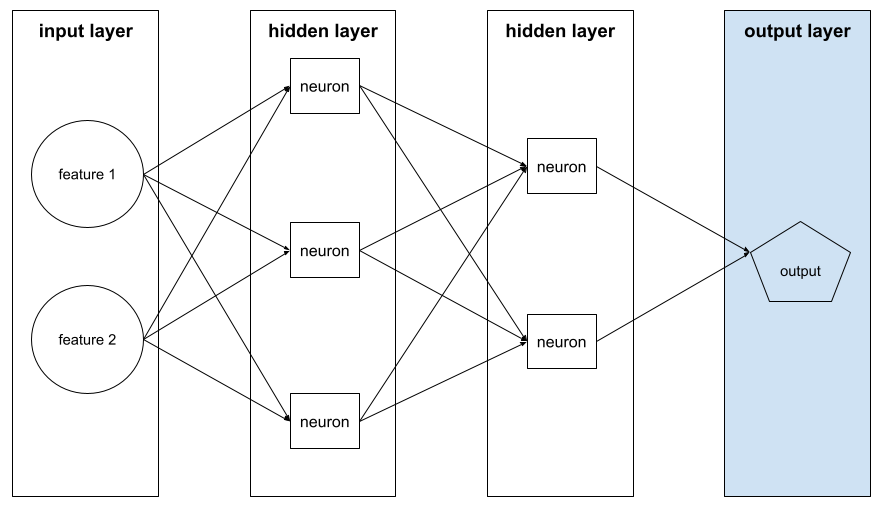

隐藏层

神经网络中介于输入层(特征)和输出层(预测)之间的层。每个隐藏层都包含一个或多个神经元。例如,以下神经网络包含两个隐藏层,第一个隐藏层有 3 个神经元,第二个隐藏层有 2 个神经元:

深度神经网络包含多个隐藏层。例如,上图所示的是一个深度神经网络,因为该模型包含两个隐藏层。

如需了解详情,请参阅机器学习速成课程中的神经网络:节点和隐藏层。

层次聚类

一类聚类算法,用于创建聚类树。层次聚类非常适合分层数据,例如植物分类。层次聚类算法有两种类型:

- 凝聚式层次聚类首先将每个样本分配到其自己的聚类,然后以迭代方式合并最近的聚类,以创建层次树。

- 分裂式层次聚类首先将所有样本分组到一个聚类,然后以迭代方式将该聚类划分为一个层次树。

与形心聚类相对。

如需了解详情,请参阅聚类课程中的聚类算法。

爬坡

一种用于以迭代方式改进(“爬山”)机器学习模型的算法,直到模型不再改进(“到达山顶”)为止。该算法的一般形式如下:

- 构建初始模型。

- 通过对训练或微调方式进行小幅调整,创建新的候选模型。这可能需要使用略有不同的训练集或不同的超参数。

- 评估新的候选模型,并采取以下某项操作:

- 如果候选模型的表现优于初始模型,则该候选模型会成为新的初始模型。在这种情况下,请重复第 1 步、第 2 步和第 3 步。

- 如果没有模型优于初始模型,则说明您已到达山顶,应停止迭代。

如需有关超参数调节的指导,请参阅深度学习调优实战手册。如需有关特征工程的指导,请参阅机器学习速成课程的数据模块。

合页损失函数

一类用于分类的损失函数,旨在找到尽可能远离每个训练示例的决策边界,从而使示例和边界之间的边距最大化。核支持向量机使用合页损失函数(或相关函数,例如平方合页损失函数)。对于二元分类,铰链损失函数的定义如下:

其中,y 是真实标签(-1 或 +1),y' 是分类模型的原始输出:

因此,铰链损失与 (y * y') 的对比图如下所示:

历史偏差

一种已经存在于现实世界中并已进入数据集的偏见。这些偏见往往会反映出既有的文化刻板印象、人口统计学不平等现象以及对某些社会群体的偏见。

例如,假设有一个分类模型,用于预测贷款申请人是否会拖欠贷款,该模型是根据 20 世纪 80 年代来自两个不同社区的本地银行的历史贷款违约数据训练的。如果社区 A 的过往申请人拖欠贷款的可能性是社区 B 的申请人的 6 倍,模型可能会学习到历史偏差,导致模型不太可能批准社区 A 的贷款,即使导致该社区拖欠率较高的历史条件已不再相关。

如需了解详情,请参阅机器学习速成课程中的公平性:偏差类型。

留出数据

训练期间故意不使用(“留出”)的样本。验证数据集和测试数据集都属于留出数据。留出数据有助于评估模型向训练时所用数据之外的数据进行泛化的能力。与基于训练数据集的损失相比,基于留出数据集的损失有助于更好地估算基于未见过的数据集的损失。

主机

在加速器芯片(GPU 或 TPU)上训练机器学习模型时,系统中负责控制以下两方面的部分:

- 代码的整体流程。

- 输入流水线的提取和转换。

主机通常在 CPU 上运行,而不是在加速器芯片上运行;设备在加速器芯片上处理张量。

人工评估

一种由人来评判机器学习模型输出质量的过程;例如,让双语人士评判机器学习翻译模型的质量。人工评估对于评判没有唯一正确答案的模型特别有用。

人机协同 (HITL)

一种定义宽泛的表达方式,可能表示以下任一含义:

- 以批判性或怀疑性态度看待生成式 AI 输出的政策。

- 一种策略或系统,用于确保人们帮助塑造、评估和改进模型的行为。通过人机协同,AI 可以同时受益于机器智能和人类智能。例如,在某个系统中,AI 生成代码,然后由软件工程师审核,该系统就是人机循环系统。

超参数

在模型训练的连续运行期间,您或超参数调节服务(例如 Vizier)调整的变量。例如,学习速率就是一种超参数。您可以在一次训练会话之前将学习率设置为 0.01。如果您认为 0.01 过高,或许可以在下一次训练会话中将学习率设置为 0.003。

如需了解详情,请参阅机器学习速成课程中的线性回归:超参数。

超平面

将空间划分为两个子空间的边界。例如,直线是二维空间中的超平面,平面是三维空间中的超平面。 在机器学习中,超平面通常是分隔高维空间的边界。核支持向量机利用超平面将正类别和负类别区分开来(通常是在极高维度空间中)。

I

i.i.d.

独立同分布的缩写。

图像识别

对图像中的物体、图案或概念进行分类的过程。 图像识别也称为图像分类。

不平衡的数据集

与分类不平衡的数据集的含义相同。

隐性偏差

根据一个人的心智模式和记忆自动建立关联或做出假设。隐性偏差会影响以下方面:

- 数据的收集和分类方式。

- 机器学习系统的设计和开发方式。

例如,在构建用于识别婚礼照片的分类模型时,工程师可能会将照片中的白色裙子用作一个特征。不过,白色裙子只在某些时代和某些文化中是一种婚礼习俗。

另请参阅确认偏差。

插补

值插补的简短形式。

公平性指标互不相容

某些公平性概念互不相容,无法同时满足。因此,没有一种通用的指标可用于量化公平性,并适用于所有机器学习问题。

虽然这可能令人沮丧,但公平性指标互不相容并不意味着公平性方面的努力是徒劳的。相反,它表明必须根据特定机器学习问题的具体情况来定义公平性,目的是防止出现特定于其应用场景的危害。

如需更详细地了解公平性指标的不兼容性,请参阅“公平性的(不)可能性”。

上下文学习

与少样本提示的含义相同。

独立同分布 (i.i.d)

从不发生变化且每次抽取的值不依赖于之前抽取的值的分布中抽取的数据。i.i.d. 是机器学习的理想情况 - 一种实用的数学结构,但在现实世界中几乎从未发现过。例如,某个网页的访问者在短时间内的分布可能为 i.i.d.,即分布在该短时间内没有变化,且一位用户的访问行为通常与另一位用户的访问行为无关。不过,如果您扩大时间范围,网页访问者的季节性差异可能会显现出来。

另请参阅非平稳性。

个体公平性

一种公平性指标,用于检查相似的个体是否被归为相似的类别。例如,Brobdingnagian Academy 可能希望通过确保成绩和标准化考试分数完全相同的两名学生获得入学的可能性相同,来满足个人公平性。

请注意,个体公平性完全取决于您如何定义“相似性”(在本例中为成绩和考试分数),如果相似性指标遗漏了重要信息(例如学生课程的严格程度),您可能会引入新的公平性问题。

如需详细了解个体公平性,请参阅“通过感知实现公平”。

推理

在传统机器学习中,推断是指以下过程:通过将训练过的模型应用于无标签样本做出预测。如需了解详情,请参阅“机器学习简介”课程中的监督式学习。

在大语言模型中,推理是指使用训练好的模型针对输入提示生成回答的过程。

推理在统计学中具有略有不同的含义。如需了解详情,请参阅 维基百科中有关统计学推断的文章。

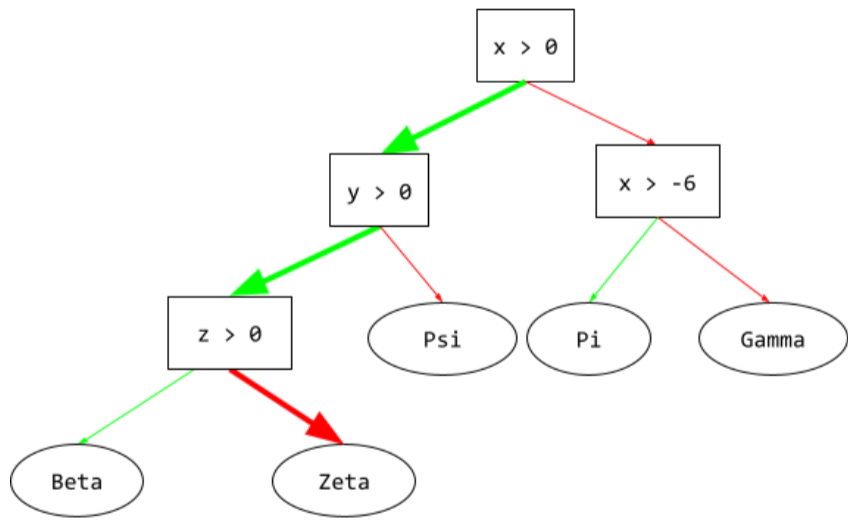

推理路径

在决策树中,在推理过程中,特定示例从根到其他条件所采用的路线,最终以叶结束。例如,在以下决策树中,较粗的箭头显示了具有以下特征值的示例的推理路径:

- x = 7

- y = 12

- z = -3

下图中的推理路径在到达叶节点 (Zeta) 之前会经历三种情况。

三条粗箭头显示了推理路径。

如需了解详情,请参阅“决策森林”课程中的决策树。

信息增益

在决策森林中,节点熵与其子节点熵的加权(按示例数量)和之间的差。节点的熵是指该节点中示例的熵。

例如,请考虑以下熵值:

- 父节点的熵 = 0.6

- 一个子节点的熵(包含 16 个相关示例)= 0.2

- 另一个子节点的熵(有 24 个相关示例)= 0.1

因此,40% 的示例位于一个子节点中,而 60% 的示例位于另一个子节点中。因此:

- 子节点的加权熵之和 = (0.4 * 0.2) + (0.6 * 0.1) = 0.14

因此,信息增益为:

- 信息增益 = 父节点的熵 - 子节点的加权熵之和

- 信息增益 = 0.6 - 0.14 = 0.46

群内偏差

对自身所属的群组或自身特征表现出偏向。 如果测试人员或评分者由机器学习开发者的好友、家人或同事组成,那么群内偏差可能会导致产品测试或数据集无效。

如需了解详情,请参阅机器学习速成课程中的公平性:偏差类型。

输入生成器

一种将数据加载到神经网络中的机制。

输入生成器可以看作是一个组件,负责将原始数据处理为张量,然后对这些张量进行迭代以生成用于训练、评估和推理的批次。

输入层

神经网络中用于存储特征向量的层。也就是说,输入层为训练或推理提供示例。例如,以下神经网络中的输入层包含两个特征:

在集合条件中

在决策树中,一种用于测试一组项中是否存在某个项的条件。 例如,以下是一个集合内条件:

house-style in [tudor, colonial, cape]

在推理期间,如果房屋风格 特征的值为 tudor、colonial 或 cape,则此条件的评估结果为“是”。如果房屋风格特征的值为其他值(例如 ranch),则此条件的计算结果为“否”。

与测试独热编码特征的条件相比,集合内条件通常会生成更高效的决策树。

实例

与示例的含义相同。

指令调优

一种微调形式,可提高生成式 AI 模型遵循指令的能力。指令调优是指使用一系列指令提示训练模型,这些指令提示通常涵盖各种各样的任务。经过指令调优的模型往往能够针对各种任务的零样本提示生成实用的回答。

比较和对比:

可解释性

能够以人类可理解的方式解释或呈现机器学习模型的推理过程。

例如,大多数线性回归模型都具有很高的可解释性。(您只需查看每个特征的训练权重。)决策森林也具有很高的可解释性。不过,某些模型仍需进行复杂的可视化处理,才能变得可解释。

您可以使用 Learning Interpretability Tool (LIT) 来解读机器学习模型。

评分者间一致性信度

衡量人工标注者在执行任务时达成一致的频率。 如果评分者意见不一致,可能需要改进任务说明。 有时也称为注释者间一致性信度或评分者间可靠性信度。另请参阅 Cohen's kappa(最热门的评分者间一致性信度衡量指标之一)。

如需了解详情,请参阅机器学习速成课程中的类别数据:常见问题。

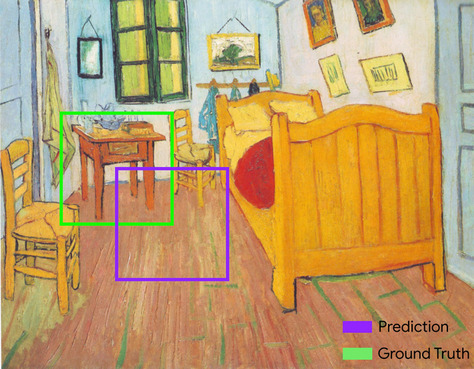

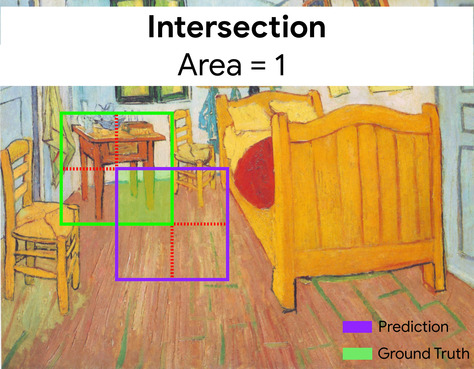

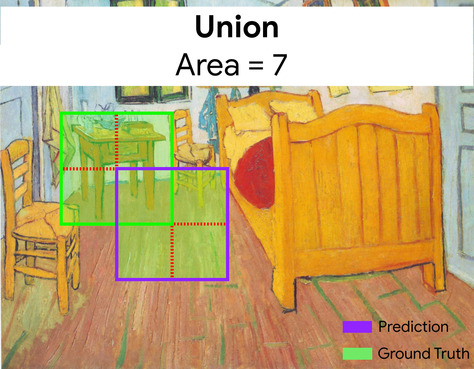

交并比 (IoU)

两个集合的交集除以并集。在机器学习图像检测任务中,IoU 用于衡量模型预测的边界框相对于标准答案边界框的准确性。在这种情况下,两个框的 IoU 是重叠面积与总面积的比值,其值范围为 0(预测的边界框与标准答案边界框不重叠)到 1(预测的边界框与标准答案边界框的坐标完全相同)。

例如,在下图中:

- 预测的边界框(用于界定模型预测的画作中床头柜所在位置的坐标)以紫色轮廓显示。

- 标准答案边界框(用于界定画作中床头柜实际所在位置的坐标)以绿色轮廓显示。

在此示例中,预测和标准答案的边界框的交集(左下图)为 1,预测和标准答案的边界框的并集(右下图)为 7,因此 IoU 为 \(\frac{1}{7}\)。

IoU

交并比的缩写。

商品矩阵

在推荐系统中,由矩阵分解生成的嵌入向量矩阵,其中包含有关每个商品的潜在信号。项矩阵的每一行都包含所有项的单个潜在特征的值。 以电影推荐系统为例。项矩阵中的每一列表示一部电影。潜在信号可能表示类型,也可能是更难以解读的信号,其中涉及类型、明星、影片年代或其他因素之间的复杂互动关系。

项矩阵与要进行分解的目标矩阵具有相同的列数。例如,假设某个影片推荐系统要评估 10000 部影片,则项矩阵会有 10000 个列。

项目

在推荐系统中,系统推荐的实体。例如,视频是音像店推荐的推荐项,而书籍是书店推荐的推荐项。

迭代

在训练期间,对模型的参数(即模型的权重和偏差)进行一次更新。批次大小决定了模型在一次迭代中处理的样本数量。例如,如果批次大小为 20,则模型会在调整参数之前处理 20 个样本。

在训练神经网络时,单次迭代涉及以下两个传递:

- 一次前向传递,用于评估单个批次的损失。

- 一次反向传递(反向传播),用于根据损失和学习速率调整模型参数。

如需了解详情,请参阅机器学习速成课程中的梯度下降。

J

JAX

一个数组计算库,将 XLA(加速线性代数)和自动微分功能结合在一起,可实现高性能的数值计算。JAX 提供了一个简单而强大的 API,用于编写具有可组合转换的加速数值代码。JAX 提供以下功能:

grad(自动微分)jit(即时编译)vmap(自动矢量化或批处理)pmap(并行化)

JAX 是一种用于表达和组合数值代码转换的语言,类似于 Python 的 NumPy 库,但范围要大得多。(事实上,JAX 下的 .numpy 库是 Python NumPy 库的功能等效但完全重写的版本。)

JAX 通过将模型和数据转换为适合在 GPU 和 TPU 加速器芯片上并行处理的形式,特别适合加速许多机器学习任务。

Flax、Optax、Pax 和许多其他库都是基于 JAX 基础架构构建的。

K

Keras

一种热门的 Python 机器学习 API。Keras 能够在多种深度学习框架上运行,其中包括 TensorFlow(在该框架上,Keras 作为 tf.keras 提供)。

核支持向量机 (KSVM)

一种分类算法,旨在通过将输入数据向量映射到更高维度的空间,最大限度地扩大正类别和负类别之间的边际。以某个输入数据集包含一百个特征的分类问题为例。为了最大化正类别和负类别之间的边际,核支持向量机可以在内部将这些特征映射到百万维度的空间。核支持向量机使用合页损失函数。

关键点

图片中特定特征的坐标。例如,对于区分花卉种类的图像识别模型,关键点可能是每个花瓣的中心、花茎、雄蕊等。

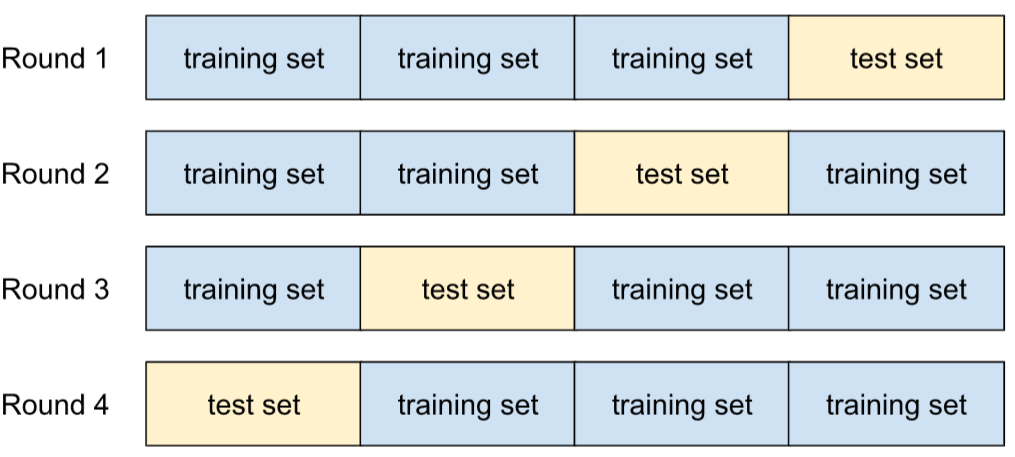

k 折叠交叉验证

一种用于预测模型泛化到新数据的能力的算法。k 折交叉验证中的 k 是指您将数据集的示例划分成的相等组数;也就是说,您将训练和测试模型 k 次。在每轮训练和测试中,一个不同的组是测试集,所有剩余的组都成为训练集。经过 k 轮训练和测试后,计算所选测试指标的平均值和标准差。

例如,假设您的数据集包含 120 个示例。进一步假设,您决定将 k 设置为 4。因此,在对示例进行随机排序后,您将数据集划分为四个包含 30 个示例的相等组,并进行四轮训练和测试:

例如,均方误差 (MSE) 可能是线性回归模型中最有意义的指标。因此,您需要计算所有四轮的 MSE 的平均值和标准差。

k-means

一种热门的聚类算法,用于对非监督式学习中的样本进行分组。k-means 算法基本上会执行以下操作:

- 以迭代方式确定最佳的 k 中心点(称为形心)。

- 将每个样本分配到最近的形心。与同一个形心距离最近的样本属于同一个组。

k-means 算法会挑选形心位置,以最大限度地减小每个样本与其最接近形心之间的距离的累积平方。

例如,请看以下狗身高与狗宽度的散点图:

如果 k=3,k-means 算法将确定三个形心。每个样本都会分配到离它最近的形心,从而产生三个组:

假设某制造商想要确定适合小型犬、中型犬和大型犬的毛衣的理想尺寸。这三个形心分别表示相应聚类中每只狗的平均身高和平均宽度。因此,制造商可能应根据这三个形心来确定毛衣尺码。请注意,聚类的质心通常不是聚类中的示例。

上图显示了仅包含两个特征(身高和体重)的示例的 k-means。请注意,K-means 可以跨多个特征对示例进行分组。

如需了解详情,请参阅聚类课程中的什么是 K-means 聚类?

k-median

与 k-means 紧密相关的聚类算法。两者的实际区别如下:

- 对于 k-means,确定形心的方法是,最大限度地减小候选形心与它的每个样本之间的距离平方和。

- 对于 k-median,确定形心的方法是,最大限度地减小候选形心与它的每个样本之间的距离总和。

请注意,距离的定义也有所不同:

- k-means 采用从形心到样本的欧几里得距离。(在二维空间中,欧几里得距离即使用勾股定理计算斜边。)例如,(2,2) 与 (5,-2) 之间的 k-means 距离为:

- k-median 采用从形心到样本的 曼哈顿距离。这个距离是每个维度中绝对差值的总和。例如,(2,2) 与 (5,-2) 之间的 k-median 距离为:

L

L0 正则化

一种正则化,用于惩罚模型中非零权重的总数。例如,具有 11 个非零权重的模型会比具有 10 个非零权重的类似模型受到更严重的惩罚。

L0 正则化有时称为 L0 范数正则化。

L1 损失

一种损失函数,用于计算实际标签值与模型预测的值之间的差的绝对值。例如,以下是针对包含 5 个示例的批次计算 L1 损失的示例:

| 示例的实际值 | 模型的预测值 | 增量的绝对值 |

|---|---|---|

| 7 | 6 | 1 |

| 5 | 4 | 1 |

| 8 | 11 | 3 |

| 4 | 6 | 2 |

| 9 | 8 | 1 |

| 8 = L1 损失 | ||

平均绝对误差是每个样本的平均 L1 损失。

如需了解详情,请参阅机器学习速成课程中的线性回归:损失。

L1 正则化

一种正则化,根据权重的绝对值总和按比例惩罚权重。L1 正则化有助于使不相关或几乎不相关的特征的权重正好为 0。权重为 0 的特征实际上已从模型中移除。

与 L2 正则化相对。

L2 损失

一种损失函数,用于计算实际标签值与模型预测的值之间的平方差。例如,以下是针对包含 5 个示例的批次计算 L2 损失的示例:

| 示例的实际值 | 模型的预测值 | 增量的平方 |

|---|---|---|

| 7 | 6 | 1 |

| 5 | 4 | 1 |

| 8 | 11 | 9 |

| 4 | 6 | 4 |

| 9 | 8 | 1 |

| 16 = L2 损失 | ||

由于取平方值,因此 L2 损失会放大离群值的影响。也就是说,与 L1 损失相比,L2 损失对不良预测的反应更强烈。例如,前一个批次的 L1 损失将为 8 而不是 16。请注意,一个离群值就占了 16 个中的 9 个。

回归模型通常使用 L2 损失作为损失函数。

均方误差是指每个样本的平均 L2 损失。 平方损失是 L2 损失的另一种叫法。

如需了解详情,请参阅机器学习速成课程中的逻辑回归:损失和正规化。

L2 正则化

一种正则化,根据权重的平方和按比例惩罚权重。L2 正则化有助于使离群值(具有较大正值或较小负值)权重接近 0,但又不正好为 0。值非常接近 0 的特征会保留在模型中,但对模型的预测影响不大。

L2 正则化始终可以提高线性模型的泛化能力。

与 L1 正则化相对。

如需了解详情,请参阅机器学习速成课程中的过拟合:L2 正则化。

标签

每个有标签样本都包含一个或多个特征和一个标签。例如,在垃圾邮件检测数据集中,标签可能是“垃圾邮件”或“非垃圾邮件”。在降雨量数据集中,标签可能是特定时间段内的降雨量。

如需了解详情,请参阅《机器学习简介》中的监督式学习。

有标签示例

包含一个或多个特征和一个标签的示例。例如,下表显示了房屋估值模型中的三个带标签的示例,每个示例都包含三个特征和一个标签:

| 卧室数量 | 浴室数量 | 房屋年龄 | 房价(标签) |

|---|---|---|---|

| 3 | 2 | 15 | $345,000 |

| 2 | 1 | 72 | 17.9 万美元 |

| 4 | 2 | 34 | 39.2 万美元 |

在监督式机器学习中,模型基于带标签的样本进行训练,并基于无标签的样本进行预测。

将有标签样本与无标签样本进行对比。

如需了解详情,请参阅《机器学习简介》中的监督式学习。

标签泄露

一种模型设计缺陷,其中特征是标签的代理。例如,假设有一个二元分类模型,用于预测潜在客户是否会购买特定产品。假设模型的某个特征是一个名为 SpokeToCustomerAgent 的布尔值。进一步假设,只有在潜在客户实际购买产品后,才会为其分配客户服务人员。在训练期间,模型将快速学习 SpokeToCustomerAgent 与标签之间的关联。

如需了解详情,请参阅机器学习速成课程中的监控流水线。

lambda

与正则化率的含义相同。

Lambda 是一个过载的术语。我们在此关注的是该术语在正则化中的定义。

LaMDA(对话应用语言模型)

Google 开发的一种基于 Transformer 的大语言模型,经过大量对话数据集训练,可以生成逼真的对话回答。

LaMDA:我们富有突破性的对话技术提供了概览。

landmarks

与关键点的含义相同。

语言模型

一种用于估计较长的 token 序列中出现某个 token 或 token 序列的概率的模型。

如需了解详情,请参阅机器学习速成课程中的什么是语言模型?。

大语言模型

至少是一个具有极高数量参数的语言模型。更通俗地说,任何基于 Transformer 的语言模型,例如 Gemini 或 GPT。

如需了解详情,请参阅机器学习速成课程中的大语言模型 (LLM)。

延迟时间

模型处理输入并生成回答所需的时间。 高延迟响应的生成时间比低延迟响应的生成时间长。

影响大语言模型延迟时间的因素包括:

- 输入和输出 token 长度

- 模型的复杂程度

- 模型运行的基础设施

优化延迟时间对于打造响应迅速且人性化的应用至关重要。

潜在空间

与嵌入空间的含义相同。

图层

例如,下图展示了一个包含 1 个输入层、2 个隐藏层和 1 个输出层的神经网络:

在 TensorFlow 中,层也是 Python 函数,以张量和配置选项作为输入,然后生成其他张量作为输出。

Layers API (tf.layers)

一种 TensorFlow API,用于以层组合的方式构建深度神经网络。通过 Layers API,您可以构建不同类型的层,例如:

tf.layers.Dense用于全连接层。tf.layers.Conv2D,表示卷积层。

Layers API 遵循 Keras Layers API 规范。 也就是说,除了前缀不同之外,Layers API 中的所有函数都具有与 Keras layers API 中对应的函数相同的名称和签名。

leaf

决策树中的任何端点。与条件不同,叶不执行测试。相反,叶节点是一种可能的预测。叶也是推理路径的终端节点。

例如,以下决策树包含三个叶节点:

如需了解详情,请参阅“决策森林”课程中的决策树。

Learning Interpretability Tool (LIT)

一种直观的交互式模型理解和数据可视化工具。

您可以使用开源 LIT 来解读模型,或直观呈现文本、图片和表格数据。

学习速率

一个浮点数,用于告知梯度下降算法在每次迭代时调整权重和偏差的幅度。例如,0.3 的学习速率调整权重和偏差的力度是 0.1 的学习速率的三倍。

学习速率是一个重要的超参数。如果您将学习速率设置得过低,训练将耗时过长。如果您将学习速率设置得过高,梯度下降法通常难以实现收敛。

如需了解详情,请参阅机器学习速成课程中的线性回归:超参数。

最小二乘回归

Levenshtein 距离

一种编辑距离指标,用于计算将一个字词更改为另一个字词所需的最少删除、插入和替换操作次数。例如,“heart”和“darts”这两个字之间的 Levenshtein 距离为 3,因为以下 3 次编辑是将一个字转换为另一个字所需的最少更改次数:

- heart → deart(将“h”替换为“d”)

- deart → dart(删除“e”)

- dart → darts(插入“s”)

请注意,上述序列并非唯一包含 3 次编辑的路径。

线性

一种仅通过加法和乘法即可表示的两个或多个变量之间的关系。

线性关系的图是一条直线。

与非线性相对。

线性模型

一种为每个特征分配一个权重以进行预测的模型。(线性模型还包含偏差。)相比之下,深度模型中特征与预测之间的关系通常是非线性的。

与深度模型相比,线性模型通常更易于训练,且可解释性更强。不过,深度模型可以学习特征之间的复杂关系。

线性回归

一种机器学习模型,同时满足以下两个条件:

将线性回归与逻辑回归进行对比。 此外,还要将回归与分类进行对比。

如需了解详情,请参阅机器学习速成课程中的线性回归。

LIT

Learning Interpretability Tool (LIT) 的缩写,之前称为 Language Interpretability Tool。

LLM

大语言模型的缩写。

大语言模型评估

用于评估大型语言模型 (LLM) 性能的一组指标和基准。概括来讲,大语言模型评估:

- 帮助研究人员确定 LLM 需要改进的方面。

- 有助于比较不同的 LLM,并确定最适合特定任务的 LLM。

- 帮助确保 LLM 的使用安全且合乎道德。

如需了解详情,请参阅机器学习速成课程中的大型语言模型 (LLM)。

逻辑回归

一种可预测概率的回归模型。逻辑回归模型具有以下特征:

- 标签为分类。逻辑回归一词通常是指二元逻辑回归,即计算具有两个可能值的标签的概率的模型。一种不太常见的变体是多项式逻辑回归,它会计算具有两个以上可能值的标签的概率。

- 训练期间的损失函数为对数损失函数。(对于具有两个以上可能值的标签,可以并行放置多个 Log Loss 单位。)

- 该模型采用线性架构,而非深度神经网络。不过,此定义的其余部分也适用于预测类别标签概率的深度模型。

例如,假设有一个逻辑回归模型,用于计算输入电子邮件是垃圾邮件或非垃圾邮件的概率。 在推理过程中,假设模型预测值为 0.72。因此,模型会估计:

- 电子邮件有 72% 的可能性是垃圾邮件。

- 电子邮件不是垃圾邮件的概率为 28%。

逻辑回归模型采用以下两步架构:

- 模型通过应用输入特征的线性函数来生成原始预测结果 (y')。

- 该模型使用原始预测结果作为 sigmoid 函数的输入,该函数会将原始预测结果转换为介于 0 和 1 之间的值(不包括 0 和 1)。

与任何回归模型一样,逻辑回归模型也会预测一个数值。不过,此数字通常会成为二元分类模型的一部分,如下所示:

- 如果预测的数值大于分类阈值,则二元分类模型会预测为正类别。

- 如果预测的数字小于分类阈值,二元分类模型会预测负类别。

如需了解详情,请参阅机器学习速成课程中的逻辑回归。

logits

分类模型生成的原始(未归一化)预测向量,通常随后会传递给归一化函数。如果模型要解决的是多类别分类问题,那么 logits 通常会成为 softmax 函数的输入。 然后,softmax 函数会生成一个(归一化)概率向量,其中每个可能类别对应一个值。

对数损失

如需了解详情,请参阅机器学习速成课程中的逻辑回归:损失和正规化。

对数几率

某个事件的对数几率。

长短期记忆 (LSTM)

循环神经网络中的一种细胞,用于在手写识别、机器翻译和图片说明等应用中处理数据序列。LSTM 通过在内部记忆状态中基于新输入和 RNN 中之前单元的上下文来维护历史记录,从而解决训练 RNN 时因数据序列过长而出现的梯度消失问题。

LoRA

低秩自适应性的缩写。

损失

在监督式模型的训练期间,用于衡量模型的预测与标签之间的差距。

损失函数用于计算损失。

如需了解详情,请参阅机器学习速成课程中的线性回归:损失。

损失聚合器

一种机器学习算法,通过合并多个模型的预测结果并使用这些预测结果进行单个预测,来提高模型的性能。因此,损失聚合器可以减少预测的方差,并提高预测的准确性。

损失曲线

以训练迭代次数为自变量的损失函数图。下图显示了典型的损失曲线:

损失曲线可以绘制以下所有类型的损失:

另请参阅泛化曲线。

如需了解详情,请参阅机器学习速成课程中的过拟合:解读损失曲线。

损失函数

在训练或测试期间,用于计算一批示例的损失的数学函数。对于做出良好预测的模型,损失函数会返回较低的损失;对于做出不良预测的模型,损失函数会返回较高的损失。

训练的目标通常是尽量减少损失函数返回的损失。

损失函数有很多不同的种类。根据您要构建的模型类型选择合适的损失函数。例如:

损失曲面

权重与损失的图表。梯度下降法旨在找到损失曲面在局部最低点时的权重。

“迷失在中间”效应

LLM 更倾向于有效使用长上下文窗口开头和结尾的信息,而不是中间的信息。也就是说,在给定长上下文的情况下,中间丢失效应会导致准确率:

- 当形成回答的相关信息位于上下文的开头或结尾附近时,相对较高。

- 当形成回答的相关信息位于上下文的中间时,为相对较低。

该术语源自 Lost in the Middle: How Language Models Use Long Contexts。

低秩自适应 (LoRA)

一种参数高效的微调技术,用于“冻结”模型的预训练权重(使其无法再被修改),然后在模型中插入一小部分可训练的权重。这组可训练的权重(也称为“更新矩阵”)比基础模型小得多,因此训练速度也快得多。

LoRA 具有以下优势:

- 提高模型在应用微调的网域中的预测质量。

- 比需要微调模型所有参数的技术更快。

- 通过支持同时部署多个共享同一基础模型的专业模型,降低推理的计算成本。

LSTM

长短期记忆的缩写。

M

机器学习

一种通过输入数据训练模型的程序或系统。经过训练的模型可以根据从与训练该模型时使用的数据集具有相同分布的新(从未见过)数据集中提取的数据做出有用的预测。

机器学习还指与这些程序或系统相关的研究领域。

如需了解详情,请参阅机器学习简介课程。

机器翻译

使用软件(通常是机器学习模型)将文本从一种人类语言转换为另一种人类语言,例如从英语转换为日语。

多数类

分类不平衡的数据集内更为常见的标签。例如,假设一个数据集内包含 99% 的负标签和 1% 的正标签,那么负标签为多数类。

与少数类相对。

如需了解详情,请参阅机器学习速成课程中的数据集:不平衡的数据集。

马尔可夫决策过程 (MDP)

一种表示决策模型的图,其中做出决策(或采取行动)以在假设马尔可夫性质成立的情况下,浏览一系列状态。在强化学习中,这些状态之间的转换会返回一个数值奖励。

马尔可夫性质

某些环境的属性,其中状态转换完全由当前状态和智能体的动作中隐含的信息决定。

遮盖语言模型

一种语言模型,用于预测候选令牌填入序列中空白处的概率。例如,遮盖语言模型可以计算候选字词的概率,以替换以下句子中的下划线:

帽子里的____回来了。

文献通常使用字符串“MASK”而不是下划线。 例如:

帽子上的“MASK”又回来了。

大多数现代掩码语言模型都是双向的。

math-pass@k

一种用于确定 LLM 在 K 次尝试内解决数学问题的准确性的指标。例如,math-pass@2 衡量的是 LLM 在两次尝试内解决数学问题的能力。在 math-pass@2 上达到 0.85 的准确率表明,LLM 在两次尝试中能够解决 85% 的数学问题。

math-pass@k 与 pass@k 指标相同,只不过 math-pass@k 专门用于数学评估。

matplotlib

一个开源 Python 2D 绘制库。matplotlib 可以帮助您可视化机器学习的各个不同方面。

矩阵分解

在数学中,矩阵分解是一种寻找其点积近似目标矩阵的矩阵的机制。

在推荐系统中,目标矩阵通常包含用户对商品的评分。例如,电影推荐系统的目标矩阵可能如下所示,其中正整数表示用户评分,0 表示用户未对该电影进行评分:

| 卡萨布兰卡 | 《费城故事》 | Black Panther | 神奇女侠 | 《低俗小说》 | |

|---|---|---|---|---|---|

| 用户 1 | 5.0 | 3.0 | 0.0 | 2.0 | 0.0 |

| 用户 2 | 4.0 | 0.0 | 0.0 | 1.0 | 5.0 |

| 用户 3 | 3.0 | 1.0 | 4.0 | 5.0 | 0.0 |

电影推荐系统旨在预测无评分电影的用户评分。例如,用户 1 会喜欢《黑豹》吗?

推荐系统采用的一种方法是,使用矩阵分解生成以下两个矩阵:

例如,对我们的三名用户和五个推荐项进行矩阵分解,会得到以下用户矩阵和项矩阵:

User Matrix Item Matrix 1.1 2.3 0.9 0.2 1.4 2.0 1.2 0.6 2.0 1.7 1.2 1.2 -0.1 2.1 2.5 0.5

用户矩阵和项矩阵的点积会得到一个推荐矩阵,其中不仅包含原始用户评分,还包含对每位用户未观看影片的预测。 例如,假设用户 1 对《卡萨布兰卡》的评分为 5.0。对应于推荐矩阵中该单元格的点积应该在 5.0 左右,计算方式如下:

(1.1 * 0.9) + (2.3 * 1.7) = 4.9更重要的是,用户 1 会喜欢《黑豹》吗?计算第一行和第三列所对应的点积,得到的预测评分为 4.3:

(1.1 * 1.4) + (2.3 * 1.2) = 4.3矩阵分解通常会生成用户矩阵和项矩阵,这两个矩阵合在一起明显比目标矩阵更为紧凑。

MBPP

Mostly Basic Python Problems 的缩写。

平均绝对误差 (MAE)

使用 L1 损失时,每个样本的平均损失。按如下方式计算平均绝对误差:

- 计算批次的 L1 损失。

- 将 L1 损失除以批次中的样本数。

例如,假设有以下一批包含 5 个示例的数据,请考虑计算 L1 损失:

| 示例的实际值 | 模型的预测值 | 损失(实际值与预测值之间的差值) |

|---|---|---|

| 7 | 6 | 1 |

| 5 | 4 | 1 |

| 8 | 11 | 3 |

| 4 | 6 | 2 |

| 9 | 8 | 1 |

| 8 = L1 损失 | ||

因此,L1 损失为 8,示例数量为 5。因此,平均绝对误差为:

Mean Absolute Error = L1 loss / Number of Examples Mean Absolute Error = 8/5 = 1.6

前 k 名的平均精确率均值 (mAP@k)

验证数据集中所有 k 处的平均精确率得分的统计平均值。平均精确率(取前 k 个结果)的一个用途是判断推荐系统生成的推荐的质量。

虽然“平均平均值”一词听起来有些冗余,但该指标的名称是合适的。毕竟,此指标会计算多个 k 值处的平均精确率的平均值。

均方误差 (MSE)

使用 L2 损失时,每个样本的平均损失。按如下方式计算均方误差:

- 计算批次的 L2 损失。

- 将 L2 损失除以批次中的样本数。

例如,假设以下一批包含 5 个示例,损失如下:

| 实际值 | 模型预测 | 损失 | 平方损失 |

|---|---|---|---|

| 7 | 6 | 1 | 1 |

| 5 | 4 | 1 | 1 |

| 8 | 11 | 3 | 9 |

| 4 | 6 | 2 | 4 |

| 9 | 8 | 1 | 1 |

| 16 = L2 损失 | |||

因此,均方误差为:

Mean Squared Error = L2 loss / Number of Examples Mean Squared Error = 16/5 = 3.2

TensorFlow Playground 使用均方差来计算损失值。

网格

在机器学习并行编程中,一个与将数据和模型分配给 TPU 芯片以及定义这些值将如何分片或复制相关的术语。

网格是一个多含义术语,可以理解为下列两种含义之一:

- TPU 芯片的物理布局。

- 一种用于将数据和模型映射到 TPU 芯片的抽象逻辑构造。

无论哪种情况,网格都被指定为形状。

元学习

一种发现或改进学习算法的机器学习子集。 元学习系统还可以旨在训练模型,使其能够从少量数据或从之前任务中获得的经验中快速学习新任务。元学习算法通常会尝试实现以下目标:

- 改进或学习人工设计的特征(例如初始化程序或优化器)。

- 提高数据效率和计算效率。

- 改善泛化效果。

元学习与少量样本学习有关。

指标

您关心的一项统计数据。

目标是机器学习系统尝试优化的指标。

Metrics API (tf.metrics)

用于评估模型的 TensorFlow API。例如,tf.metrics.accuracy 用于确定模型的预测与标签的匹配频率。

小批次

在一次迭代中处理的批次的一小部分随机选择的子集。 小批次的批次大小通常介于 10 到 1,000 个样本之间。

例如,假设整个训练集(完整批次)包含 1,000 个样本。进一步假设您将每个小批次的批次大小设置为 20。因此,每次迭代都会确定 1,000 个示例中随机 20 个示例的损失,然后相应地调整权重和偏差。

计算小批次的损失比计算完整批次中所有示例的损失要高效得多。

如需了解详情,请参阅机器学习速成课程中的线性回归:超参数。

小批次随机梯度下降法

一种使用小批次的梯度下降算法。也就是说,小批次随机梯度下降法会根据一小部分训练数据估算梯度。常规随机梯度下降法使用的小批次的大小为 1。

minimax 损失

一种基于生成数据与真实数据分布之间的交叉熵的生成对抗网络的损失函数。

第一篇论文中使用了 minimax 损失来描述生成对抗网络。

如需了解详情,请参阅生成对抗网络课程中的损失函数。

少数类

分类不平衡的数据集内不常见的标签。例如,假设一个数据集内包含 99% 的负标签和 1% 的正标签,那么正标签为少数类。

与多数类相对。

如需了解详情,请参阅机器学习速成课程中的数据集:不平衡的数据集。

混合专家

一种通过仅使用一部分参数(称为“专家”)来处理给定输入令牌或示例来提高神经网络效率的方案。门控网络会将每个输入 token 或示例路由到合适的专家。

如需了解详情,请参阅以下任一论文:

机器学习

机器学习的缩写。

MMIT

多模态指令调优的缩写。

MNIST

由 LeCun、Cortes 和 Burges 编译的公用数据集,其中包含 60,000 张图像,每张图像显示人类如何手动写下从 0 到 9 的特定数字。每张图像存储为 28x28 的整数数组,其中每个整数是 0 到 255(含边界值)之间的灰度值。

MNIST 是机器学习的标准数据集,通常用于测试新的机器学习方法。如需了解详情,请参阅 MNIST 手写数字数据库。

modality

高级别数据类别。例如,数字、文字、图片、视频和音频是五种不同的模态。

模型

一般来说,任何处理输入数据并返回输出的数学结构。换句话说,模型是系统进行预测所需的一组形参和结构。 在监督式机器学习中,模型将示例作为输入,并推断出预测结果作为输出。在监督式机器学习中,模型略有不同。例如:

您可以保存、恢复或复制模型。

非监督式机器学习也会生成模型,通常是一个可以将输入示例映射到最合适的聚类的函数。

模型容量

模型可以学习的问题的复杂性。模型可以学习的问题越复杂,模型的能力就越高。模型能力通常会随着模型参数数量的增加而增强。如需了解分类模型容量的正式定义,请参阅 VC 维度。

模型级联

一种可为特定推理查询选择理想模型的系统。

假设有一组模型,从非常大(大量形参)到小得多(形参少得多)。与较小的模型相比,超大型模型在推理时会消耗更多计算资源。不过,与较小的模型相比,非常大的模型通常可以推理出更复杂的请求。模型级联会确定推理查询的复杂程度,然后选择合适的模型来执行推理。 模型级联的主要目的是通过通常选择较小的模型来降低推理成本,并且仅针对更复杂的查询选择较大的模型。

假设有一个小型模型在手机上运行,而该模型的较大版本在远程服务器上运行。良好的模型级联可让较小的模型处理简单请求,仅在处理复杂请求时调用远程模型,从而降低成本和延迟时间。

另请参阅模型路由器。

模型并行处理

一种扩展训练或推理的方式,可将一个模型的不同部分放置在不同的设备上。模型并行化可实现无法在单个设备上运行的大型模型。

为了实现模型并行性,系统通常会执行以下操作:

- 将模型分片(划分)为更小的部分。

- 将这些较小部分的训练分配到多个处理器中。 每个处理器都会训练模型的一部分。

- 合并结果以创建单个模型。

模型并行会减慢训练速度。

另请参阅数据并行。

模型路由器

用于确定模型级联中推理的理想模型的算法。模型路由器本身通常是一个机器学习模型,它会逐渐学习如何为给定的输入选择最佳模型。不过,模型路由器有时可能是一种更简单的非机器学习算法。

模型训练

确定最佳模型的过程。

MOE

专家混合的缩写。

造势

一种复杂的梯度下降算法,其中学习步长不仅取决于当前步长的导数,还取决于紧邻的前一步长(或多个步长)的导数。动量涉及计算梯度随时间的指数加权移动平均值,类似于物理学中的动量。动量有时可以防止学习陷入局部最小值。

Mostly Basic Python Problems (MBPP)

一个用于评估 LLM 在生成 Python 代码方面的熟练程度的数据集。 Mostly Basic Python Problems 提供了大约 1,000 个众包编程问题。 数据集中的每个问题都包含:

- 任务说明

- 解决方案代码

- 三个自动化测试用例

MT

机器翻译的缩写。

多类别分类

在监督式学习中,一种分类问题,其中数据集包含两个以上的标签类别。例如,Iris 数据集中的标签必须是以下三个类别之一:

- setosa 鸢尾花

- 弗吉尼亚鸢尾

- 杂色鸢尾

如果模型是使用 Iris 数据集训练的,并且可以根据新示例预测 Iris 类型,则该模型执行的是多类别分类。

相比之下,如果分类问题要区分的类别正好是两个,则属于二元分类模型。例如,预测电子邮件是垃圾邮件还是非垃圾邮件的电子邮件模型就是二元分类模型。

在聚类问题中,多类别分类是指两个以上的聚类。

如需了解详情,请参阅机器学习速成课程中的神经网络:多类别分类。

多类别逻辑回归

多头自注意力

自注意力的一种扩展,可针对输入序列中的每个位置多次应用自注意力机制。

Transformer 引入了多头自注意力机制。

多模态指令调优

一种经过指令调优的模型,可以处理文本以外的输入,例如图片、视频和音频。

多模态模型

输入、输出或两者都包含多种模态的模型。例如,假设有一个模型将图片和文本说明(两种模态)作为特征,并输出一个分数,用于指示文本说明与图片的匹配程度。因此,此模型的输入是多模态的,而输出是单模态的。

多项分类

与多类别分类的含义相同。

多项回归

与多类别逻辑回归的含义相同。

多句阅读理解 (MultiRC)

用于评估 LLM 回答多项选择题能力的数据集。 数据集中的每个示例都包含:

- 上下文段落

- 关于该段落的问题

- 问题的多个答案。每个答案都标有“正确”或“错误”。 多个答案可能为 True。

例如:

上下文段落:

苏珊想举办一个生日派对。她给所有朋友打了电话。她有 5 个朋友。苏珊的妈妈说,苏珊可以邀请他们所有人参加派对。她的第一个朋友因生病而无法参加派对。她的第二个朋友要出差。她的第三个朋友不太确定父母是否会同意。第四位朋友回复了“不确定”。第五位朋友肯定会去参加聚会。苏珊有点难过。派对当天,这五位朋友都来了。每个朋友都给苏珊带了礼物。苏珊很高兴,并在下周给每位朋友寄了一张感谢卡。

问题:苏珊生病的朋友康复了吗?

多个答案:

- 是的,她已康复。(正确)

- 否。(错误)

- 可以。(正确)

- 没有,她没有康复。(错误)

- 是的,她参加了苏珊的派对。(正确)

MultiRC 是 SuperGLUE 集成模型的一个组成部分。

如需了解详情,请参阅Looking Beyond the Surface: A Challenge Set for Reading Comprehension over Multiple Sentences。

多任务处理

多任务模型是通过训练适合每项不同任务的数据来创建的。这样一来,模型便可学习在不同任务之间共享信息,从而更有效地学习。

针对多项任务训练的模型通常具有更强的泛化能力,并且在处理不同类型的数据时更加稳健。

否

Nano

一款相对较小的 Gemini 模型,专为在设备上使用而设计。如需了解详情,请参阅 Gemini Nano。

NaN 陷阱

模型中的一个数字在训练期间变成 NaN,这会导致模型中的很多或所有其他数字最终也会变成 NaN。

NaN 是 Not a Number 的缩写。

自然语言处理

一个领域,旨在教导计算机使用语言规则来处理用户说出或输入的内容。几乎所有现代自然语言处理都依赖于机器学习。自然语言理解

一种自然语言处理的子集,用于确定用户说出或输入的内容的意图。自然语言理解可以超越自然语言处理,考虑语言的复杂方面,例如上下文、讽刺和情感。

负类别

在二元分类中,一种类别称为正类别,另一种类别称为负类别。正类别是模型正在测试的事物或事件,负类别则是另一种可能性。例如:

- 在医学检查中,负类别可以是“非肿瘤”。

- 在电子邮件分类模型中,负类别可以是“非垃圾邮件”。

与正类别相对。

负采样

与候选采样的含义相同。

神经架构搜索 (NAS)

一种用于自动设计神经网络架构的技术。NAS 算法可以减少训练神经网络所需的时间和资源。

NAS 通常使用:

- 搜索空间,即一组可能的架构。

- 适应度函数,用于衡量特定架构在给定任务上的表现。

NAS 算法通常从一小部分可能的架构开始,随着算法对有效架构的了解不断深入,逐渐扩大搜索空间。适应度函数通常基于架构在训练集上的性能,而算法通常使用强化学习技术进行训练。

事实证明,NAS 算法能够有效地为各种任务(包括图像分类、文本分类和机器翻译)找到高性能的架构。

输出表示

包含至少一个隐藏层的模型。深度神经网络是一种包含多个隐藏层的神经网络。例如,下图显示了一个包含两个隐藏层的深度神经网络。

神经网络中的每个神经元都连接到下一层中的所有节点。例如,在上图中,请注意第一个隐藏层中的每个神经元都分别连接到第二个隐藏层中的两个神经元。

在计算机上实现的神经网络有时称为人工神经网络,以区别于大脑和其他神经系统中的神经网络。

某些神经网络可以模拟不同特征与标签之间极其复杂的非线性关系。

如需了解详情,请参阅机器学习速成课程中的神经网络。

神经元

在机器学习中,指神经网络的隐藏层中的一个独立单元。每个神经元都会执行以下两步操作:

第一个隐藏层中的神经元接受来自输入层中特征值的输入。任何隐藏层(第一个隐藏层除外)中的神经元都会接受来自前一个隐藏层中神经元的输入。例如,第二个隐藏层中的神经元接受来自第一个隐藏层中神经元的输入。

下图突出显示了两个神经元及其输入。

神经网络中的神经元会模拟大脑和神经系统其他部位的神经元行为。

N-gram

N 个字词的有序序列。例如,“truly madly”属于二元语法。由于顺序很重要,因此“madly truly”和“truly madly”是不同的二元语法。

| 否 | 此类 N 元语法的名称 | 示例 |

|---|---|---|

| 2 | 二元语法 | to go、go to、eat lunch、eat dinner |

| 3 | 三元语法 | ate too much、happily ever after、the bell tolls |

| 4 | 四元语法 | walk in the park、dust in the wind、the boy ate lentils |

很多自然语言理解模型依赖 N 元语法来预测用户将输入或说出的下一个字词。例如,假设用户输入了“happily ever”。 基于三元语法的 NLU 模型可能会预测该用户接下来将输入“after”一词。

N 元语法与词袋(无序字词集)相对。

如需了解详情,请参阅机器学习速成课程中的大型语言模型。

NLP

自然语言处理的缩写。

NLU

自然语言理解的缩写。

节点(决策树)

如需了解详情,请参阅决策森林课程中的决策树。

节点(神经网络)

如需了解详情,请参阅机器学习速成课程中的神经网络。

节点(TensorFlow 图)

TensorFlow 图中的操作。

噪声

一般来说,噪声是指数据集中掩盖信号的所有内容。将噪声引入数据中的方式各种各样。例如:

- 人工评分者在添加标签时出错。

- 人类和仪器错误记录或忽略特征值。

非二元性别条件

包含两种以上可能结果的 条件。例如,以下非二元条件包含三种可能的结果:

如需了解详情,请参阅决策森林课程中的条件类型。

非线性

一种无法仅通过加法和乘法表示的两个或多个变量之间的关系。线性关系可以用直线表示,而非线性关系则不能用直线表示。例如,假设有两个模型,每个模型都将单个特征与单个标签相关联。左侧的模型是线性模型,右侧的模型是非线性模型:

如需尝试不同类型的非线性函数,请参阅机器学习速成课程中的神经网络:节点和隐藏层。

无回答偏差

请参阅选择性偏差。

非平稳性

一种值会随一个或多个维度(通常是时间)而变化的特征。 例如,请考虑以下非平稳性示例:

- 特定商店的泳衣销量会随季节而变化。

- 特定地区中特定水果的收获量在一年中的大部分时间为零,但在短时间内会很大。

- 由于气候变化,年平均气温正在发生变化。

与平稳性相对。

没有唯一正确答案 (NORA)

具有多个正确回答的提示。 例如,以下提示没有唯一正确的答案:

给我讲个关于大象的有趣笑话。

评估“没有唯一正确答案”的提示的回答通常比评估有唯一正确答案的提示的回答更具主观性。例如,评估一个大象笑话需要一种系统性的方法来确定该笑话有多好笑。

NORA

没有唯一正确答案的缩写。

归一化

从广义上讲,是将变量的实际值范围转换为标准值范围的过程,例如:

- -1 至 +1

- 0 至 1

- Z 得分(大致介于 -3 到 +3 之间)

例如,假设某个特征的实际值范围为 800 到 2,400。作为特征工程的一部分,您可以将实际值归一化到标准范围内,例如 -1 到 +1。

归一化是特征工程中的一项常见任务。如果特征向量中的每个数值特征都具有大致相同的范围,模型通常会更快地完成训练(并生成更好的预测结果)。

另请参阅 Z 得分归一化。

如需了解详情,请参阅机器学习速成课程中的数值数据:归一化。

笔记本 LM

一款基于 Gemini 的工具,可让用户上传文档,然后使用提示来提问、总结或整理这些文档。例如,作者可以上传几篇短篇小说,然后让 NotebookLM 找出它们的共同主题,或者确定哪篇小说最适合改编成电影。

新颖点检测

确定新(新颖)样本是否与训练集来自同一分布的过程。换句话说,在训练集上训练后,新颖性检测会确定新示例(在推理期间或在额外训练期间)是否为离群点。

与离群值检测相对。

数值数据

用整数或实数表示的特征。 例如,房屋估值模型可能会将房屋面积(以平方英尺或平方米为单位)表示为数值数据。将特征表示为数值数据表明,特征的值与标签之间存在数学关系。也就是说,房屋的平方米数可能与房屋的价值存在某种数学关系。

并非所有整数数据都应表示为数值数据。例如,世界某些地区的邮政编码是整数;不过,整数邮政编码不应在模型中表示为数值数据。这是因为邮政编码 20000 的效果并不是邮政编码 10000 的两倍(或一半)。此外,虽然不同的邮政编码确实与不同的房地产价值相关联,但我们不能假设邮政编码为 20000 的房地产价值是邮政编码为 10000 的房地产价值的两倍。邮政编码应表示成分类数据。

数值特征有时称为连续特征。

如需了解详情,请参阅机器学习速成课程中的处理数值数据。

NumPy

一个 开源数学库,在 Python 中提供高效的数组操作。pandas 基于 NumPy 构建。

O

目标

算法尝试优化的指标。

目标函数

模型旨在优化的数学公式或指标。 例如,线性回归的目标函数通常是均方损失。因此,在训练线性回归模型时,训练旨在尽量减少均方损失。

在某些情况下,目标是最大化目标函数。 例如,如果目标函数是准确率,则目标是最大限度地提高准确率。

另请参阅损失。

斜向条件

在决策树中,涉及多个特征的条件。例如,如果高度和宽度都是特征,则以下是倾斜条件:

height > width

与轴对齐条件相对。

如需了解详情,请参阅决策森林课程中的条件类型。

离线

与 static 的含义相同。

离线推理

模型生成一批预测,然后缓存(保存)这些预测的过程。然后,应用可以从缓存中访问推理出的预测结果,而无需重新运行模型。

例如,假设有一个模型每 4 小时生成一次本地天气预报(预测)。每次运行模型后,系统都会缓存所有本地天气预报。天气应用从缓存中检索预报。

离线推理也称为静态推理。

与在线推理相对。 如需了解详情,请参阅机器学习速成课程中的生产环境中的机器学习系统:静态推理与动态推理。

独热编码

将分类数据表示为一个向量,其中:

- 一个元素设置为 1。

- 所有其他元素均设置为 0。

独热编码常用于表示拥有有限个可能值的字符串或标识符。例如,假设某个名为 Scandinavia 的分类特征有五个可能的值:

- "丹麦"

- “瑞典”

- “挪威”

- “芬兰”

- “冰岛”

独热编码可以将这五个值分别表示为:

| 国家/地区 | 向量 | ||||

|---|---|---|---|---|---|

| "丹麦" | 1 | 0 | 0 | 0 | 0 |

| “瑞典” | 0 | 1 | 0 | 0 | 0 |

| “挪威” | 0 | 0 | 1 | 0 | 0 |

| “芬兰” | 0 | 0 | 0 | 1 | 0 |

| “冰岛” | 0 | 0 | 0 | 0 | 1 |

借助独热编码,模型可以根据这五个国家/地区中的每一个来学习不同的关联。

将特征表示为数值数据是独热编码的替代方案。遗憾的是,以数字形式表示斯堪的纳维亚国家/地区并不是一个好的选择。例如,请考虑以下数字表示法:

- “丹麦”为 0

- “瑞典”为 1

- “挪威”为 2

- “芬兰”为 3

- “冰岛”是 4

借助数值编码,模型将以数学方式解读原始数字,并尝试基于这些数字进行训练。不过,冰岛的某项指标实际上并非挪威的两倍(或一半),因此模型会得出一些奇怪的结论。

如需了解详情,请参阅机器学习速成课程中的类别型数据:词汇和独热编码。

一个正确答案 (ORA)

判断正误:土星比火星大。

唯一正确的回答是正确。

与没有唯一正确答案相对。

单样本学习

一种机器学习方法,通常用于对象分类,旨在从单个训练示例中学习有效的分类模型。

单样本提示

包含一个示例的提示,用于演示大型语言模型应如何回答。例如,以下提示包含一个示例,用于向大语言模型展示应如何回答查询。

| 一个提示的组成部分 | 备注 |

|---|---|

| 指定国家/地区的官方货币是什么? | 您希望 LLM 回答的问题。 |

| 法国:欧元 | 举个例子。 |

| 印度: | 实际查询。 |

比较并对比一次性提示与以下术语:

一对多

假设某个分类问题有 N 个类别,一种解决方案包含 N 个单独的二元分类模型 - 一个二元分类模型对应一种可能的结果。例如,假设有一个模型可将示例分类为动物、植物或矿物,那么一对多解决方案将提供以下三个单独的二元分类模型:

- 动物与非动物

- 蔬菜与非蔬菜

- 矿物质与非矿物质

在线

与动态的含义相同。

在线推理

根据需求生成预测。例如,假设某个应用将输入内容传递给模型,并发出预测请求。使用在线推理的系统会通过运行模型来响应请求(并将预测结果返回给应用)。

与离线推理相对。

如需了解详情,请参阅机器学习速成课程中的生产环境中的机器学习系统:静态推理与动态推理。

操作 (op)

在 TensorFlow 中,任何创建、操纵或销毁Tensor的过程都属于操作。例如,矩阵相乘运算会接受两个张量作为输入,并生成一个张量作为输出。

Optax

一个适用于 JAX 的梯度处理和优化库。Optax 通过提供可按自定义方式重新组合的构建块来促进研究,以优化深度神经网络等参数化模型。其他目标包括:

- 提供可读、经过充分测试且高效的核心组件实现。

- 通过将低级成分组合成自定义优化器(或其他梯度处理组件)来提高效率。

- 让任何人都能轻松贡献想法,从而加快新想法的采用速度。

optimizer

梯度下降法的一种具体实现。热门优化器包括:

- AdaGrad,即 ADAptive GRADient descent。

- Adam,表示“ADAptive with Momentum”(自适应动量)。

ORA

一个正确答案的缩写。

群外同质性偏差

在比较态度、价值观、性格特质和其他特征时,倾向于认为群外成员之间比群内成员更为相似。群内成员是指您经常与之互动的人员;群外成员是指您不经常与之互动的人员。如果您通过让参与者提供有关群外成员的特性来创建数据集,相比参与者列出的群内成员的特性,群外成员的这些特性可能不太细微且更加刻板。

例如,小人国居民可以详细描述其他小人国居民的房屋,指出建筑风格、窗户、门和大小之间的细微差异。但是,同样的小人国居民可能直接声称大人国居民住的房屋完全一样。

群外同质性偏差是一种群体归因偏差。

另请参阅群内偏差。

离群值检测

与新颖点检测相对。

离群数据

与大多数其他值相差甚远的值。在机器学习中,以下任何一项都属于离群值:

- 值比平均值高大约 3 个标准偏差的输入数据。

- 绝对值很高的权重。

- 与实际值相差很大的预测值。

例如,假设 widget-price 是某个模型的特征。

假设平均值 widget-price 为 7 欧元,标准差为 1 欧元。因此,包含 12 欧元或 2 欧元的示例会被视为离群值,因为这两个价格与平均值的差值均为 5 个标准差。widget-price

离群值通常是由拼写错误或其他输入错误造成的。在其他情况下,离群值并非错误;毕竟,距离平均值五个标准差的值很少见,但并非不可能。

离群值常常会导致模型训练出现问题。裁剪是管理离群值的一种方法。

如需了解详情,请参阅机器学习速成课程中的处理数值数据。

袋外评估(OOB 评估)

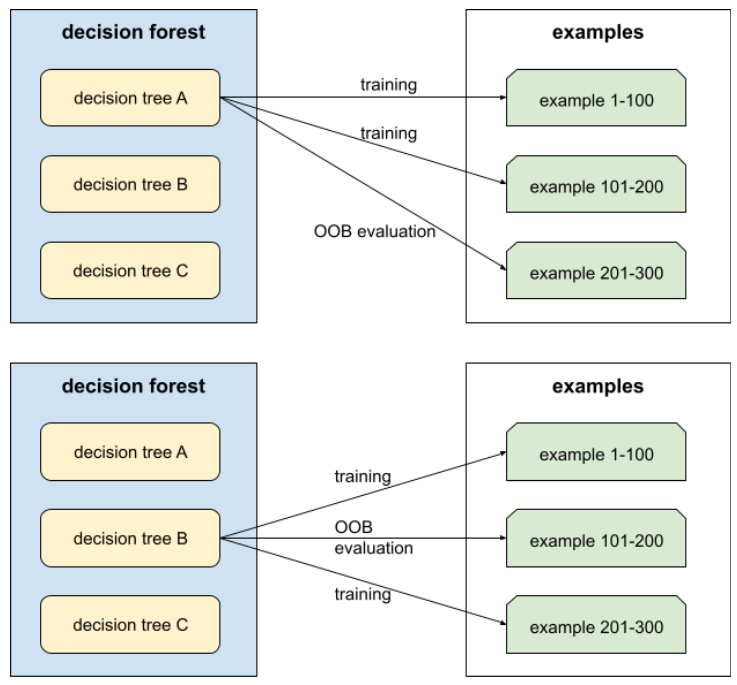

一种用于评估决策森林质量的机制,通过针对决策树训练期间未使用的示例来测试每棵决策树。例如,在下图中,请注意,系统会使用大约三分之二的示例来训练每个决策树,然后使用剩余的三分之一示例进行评估。

袋外评估是一种计算效率高且保守的交叉验证机制近似值。在交叉验证中,每个交叉验证轮次都会训练一个模型(例如,在 10 折交叉验证中会训练 10 个模型)。使用 OOB 评估时,系统会训练单个模型。由于 bagging 在训练期间会从每棵树中留出一些数据,因此 OOB 评估可以使用这些数据来近似交叉验证。

如需了解详情,请参阅决策森林课程中的袋外评估。

输出层

神经网络的“最终”层。输出层包含预测结果。

下图展示了一个小型深度神经网络,其中包含一个输入层、两个隐藏层和一个输出层:

过拟合

创建的模型与训练数据过于匹配,以致于模型无法根据新数据做出正确的预测。

正则化可以减少过拟合。 在庞大而多样的训练集上进行训练也有助于减少过拟合。

如需了解详情,请参阅机器学习速成课程中的过拟合。

过采样

在分类不平衡的数据集中重复使用少数类的示例,以创建更平衡的训练集。

例如,假设有一个二元分类问题,其中多数类与少数类的比率为 5,000:1。如果数据集包含 100 万个示例,那么少数类只包含大约 200 个示例,这可能不足以进行有效训练。为了克服这一不足,您可以多次对这 200 个示例进行过采样(重复使用),从而可能获得足够的示例来进行有效训练。

在过采样时,您需要注意避免过拟合。

与欠采样相对。

P

打包数据

一种更高效的数据存储方法。

打包数据是指以压缩格式或以其他方式存储数据,以便更高效地访问数据。打包数据可最大限度地减少访问所需内存和计算量,从而加快训练速度并提高模型推理效率。

打包数据通常与其他技术(例如数据增强和正则化)搭配使用,以进一步提高模型的性能。

PaLM

Pathways 语言模型的缩写。

pandas

基于 numpy 构建的面向列的数据分析 API。 许多机器学习框架(包括 TensorFlow)都支持将 Pandas 数据结构作为输入。如需了解详情,请参阅 Pandas 文档。

参数

模型在训练期间学习的权重和偏差。例如,在线性回归模型中,参数包括以下公式中的偏差 (b) 和所有权重(w1、w2 等):

相比之下,超参数是您(或超参数调节服务)提供给模型的值。例如,学习速率就是一种超参数。

参数高效调优

一组用于比完全微调更高效地微调大型预训练语言模型 (PLM)的技术。与完全微调相比,参数高效调优通常会微调少得多的参数,但通常会生成一个大语言模型,其性能与通过完全微调构建的大语言模型相当(或几乎相当)。

比较参数高效调优与以下方法的异同:

参数高效调优也称为参数高效微调。

参数服务器 (PS)

一种作业,负责在分布式环境中跟踪模型参数。

参数更新

在训练期间调整模型参数的操作,通常在单次梯度下降迭代中进行。

偏导数

一种导数,其中除一个变量之外的所有变量都被视为常量。 例如,f(x, y) 相对于 x 的偏导数是将 f 视为仅以 x 为变量的函数的导数(即保持 y 不变)。f 对 x 的偏导数仅关注 x 如何变化,而忽略公式中的所有其他变量。

参与偏差

与无回答偏差的含义相同。请参阅选择性偏差。

划分策略

在参数服务器间分割变量的算法。

前 k 名中的通过率 (pass@k)

一种用于确定大语言模型生成的代码(例如 Python)质量的指标。 更具体地说,pass@k 表示在生成的 k 个代码块中,至少有一个代码块通过所有单元测试的可能性。

大语言模型通常难以针对复杂的编程问题生成优质代码。软件工程师通过提示大语言模型为同一问题生成多个 (k) 解决方案来应对这一问题。然后,软件工程师会针对单元测试对每个解决方案进行测试。通过率(在 k 处)的计算取决于单元测试的结果:

- 如果这些解决方案中的一个或多个通过了单元测试,则 LLM 通过了该代码生成挑战。

- 如果没有任何解决方案通过单元测试,则 LLM 未能通过该代码生成挑战。

通过率(在 k 处)的公式如下:

\[\text{pass at k} = \frac{\text{total number of passes}} {\text{total number of challenges}}\]

一般来说,k 值越高,pass@k 得分就越高;不过,k 值越高,所需的大型语言模型和单元测试资源就越多。

Pathways Language Model (PaLM)

一种旧版模型,是 Gemini 模型的前身。

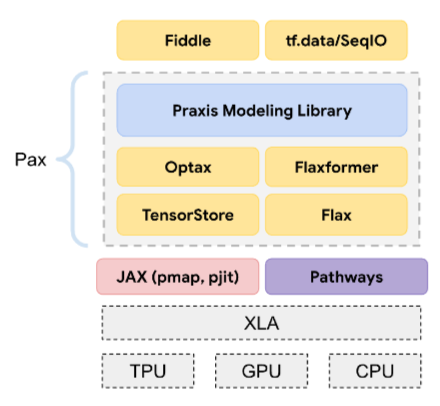

Pax

一种编程框架,旨在训练大规模神经网络 模型,这些模型非常庞大,以至于需要跨多个 TPU 加速器芯片 切片或 Pod。

Pax 基于 Flax 构建,而 Flax 基于 JAX 构建。

感知机

一种系统(硬件或软件),该系统先获取一个或多个输入值,接着对这些输入的加权和运行函数,然后计算单个输出值。在机器学习中,该函数通常是非线性函数,例如 ReLU、sigmoid 或 tanh。 例如,以下感知机依赖 S 型函数来处理三个输入值:

在下图中,感知机先获取三个输入,每个输入本身会经过权重的修改,然后才进入感知机:

性能

一个多含义术语,具有以下含义:

- 在软件工程中的标准含义。即:软件的运行速度有多快(或有多高效)?

- 在机器学习中的含义。在机器学习领域,性能旨在回答以下问题:模型的准确度有多高?即模型在预测方面的表现有多好?

排列变量重要性

一种变量重要性,用于评估在对特征值进行置换后模型预测误差的增加情况。排列变量重要性是一种与模型无关的指标。

困惑度

一种衡量指标,用于衡量模型能够多好地完成任务。 例如,假设您的任务是读取用户在手机键盘上输入的前几个字母,并提供可能的补全字词列表。此任务的困惑度 P 大致是指您需要提供多少个猜测,才能使您的列表包含用户尝试输入的实际字词。

困惑度与交叉熵的关系如下:

流水线

围绕机器学习算法的基础设施。流水线包括收集数据、将数据放入训练数据文件、训练一个或多个模型,以及将模型导出到生产环境。

如需了解详情,请参阅“管理机器学习项目”课程中的 ML 流水线。

流水线

一种模型并行处理形式,其中模型的处理分为连续的阶段,每个阶段在不同的设备上执行。当某个阶段处理一个批次时,前一个阶段可以处理下一个批次。

另请参阅分阶段训练。

pjit

一种 JAX 函数,用于拆分代码以在多个加速器芯片上运行。用户将一个函数传递给 pjit,该函数会返回一个具有等效语义但已编译为在多个设备(例如 GPU 或 TPU 核心)上运行的 XLA 计算的函数。

pjit 使用户能够通过 SPMD 分区器对计算进行分片,而无需重写计算。

自 2023 年 3 月起,pjit 已与 jit 合并。如需了解详情,请参阅分布式数组和自动并行化。

PLM

预训练语言模型的缩写。

pmap

一种 JAX 函数,可在多个底层硬件设备(CPU、GPU 或 TPU)上执行输入函数的副本,并使用不同的输入值。pmap 依赖于 SPMD。

政策

池化

将一个或多个由前面的卷积层创建的矩阵压缩为较小的矩阵。 池化通常涉及取池化区域中的最大值或平均值。例如,假设我们有以下 3x3 矩阵:

![3x3 矩阵 [[5,3,1], [8,2,5], [9,4,3]]。](https://developers.google.com/static/machine-learning/glossary/images/PoolingStart.svg?hl=zh-cn)

池化运算与卷积运算类似:将矩阵分割为多个切片,然后按步长逐个运行卷积运算。例如,假设池化运算以 1x1 的步长将卷积矩阵分割为 2x2 的切片。如下图所示,系统会执行四次池化操作。假设每个池化操作都会选择相应切片中的最大值:

![输入矩阵为 3x3,值如下:[[5,3,1], [8,2,5], [9,4,3]]。

输入矩阵的左上角 2x2 子矩阵为 [[5,3], [8,2]],因此左上角池化操作会生成值 8(即 5、3、8 和 2 中的最大值)。输入矩阵的右上角 2x2 子矩阵为 [[3,1], [2,5]],因此右上角池化运算会产生值 5。输入矩阵的左下角 2x2 子矩阵为 [[8,2], [9,4]],因此左下角池化操作会产生值 9。输入矩阵的右下角 2x2 子矩阵为 [[2,5], [4,3]],因此右下角池化操作会生成值 5。总而言之,池化操作会生成 2x2 矩阵 [[8,5], [9,5]]。](https://developers.google.com/static/machine-learning/glossary/images/PoolingConvolution.svg?hl=zh-cn)

池化有助于在输入矩阵中实现平移不变性。

用于视觉应用的池化更正式的名称是空间池化。时序应用通常将池化称为时序池化。在不太正式的场合,池化通常称为子采样或下采样。

位置编码

一种将序列中词法单元的位置信息添加到词法单元嵌入中的技术。Transformer 模型使用位置编码来更好地了解序列不同部分之间的关系。

位置编码的常见实现方式是使用正弦函数。(具体而言,正弦函数的频率和振幅由序列中令牌的位置决定。)这种技术使 Transformer 模型能够学习根据序列中不同部分的位置来关注它们。

正类别

您要测试的类。

例如,在癌症模型中,正类别可以是“肿瘤”。 在电子邮件分类模型中,正类别可以是“垃圾邮件”。

与负类别相对。

后处理

在模型运行后调整模型输出。 后期处理可用于强制执行公平性限制,而无需修改模型本身。

例如,可以对二元分类模型应用后处理,方法是设置分类阈值,以通过检查真正例率对于某个属性的所有值是否相同,来确保该属性的机会均等。

经过后训练的模型

一个宽泛定义的术语,通常是指经过一些后处理的预训练模型,例如经过以下一项或多项处理:

PR AUC(PR 曲线下的面积)

通过绘制不同分类阈值的(召回率、精确率)点而获得的插值精确率-召回率曲线下的面积。

Praxis

Pax 的核心高性能机器学习库。Praxis 通常称为“Layer 库”。

Praxis 不仅包含 Layer 类的定义,还包含其大部分支持组件,包括:

Praxis 为 Model 类提供定义。

精确度

一种分类模型指标,可为您提供以下信息:

当模型预测为正类别时,预测正确的百分比是多少?

公式如下:

其中: