1. Knowledge acquisition

Themes naturally arise as you explore your typology of stakeholders, capture their unique information needs, and apply different granularities to frame your questions. To help you sort and structure your theme of questions, we created a knowledge-acquisition framework that provides you with a robust, deliberate, and repeatable approach to produce transparency documentation.

Knowledge acquisition is the extraction, structuring, and organization of knowledge from one source—usually human experts—so that it can be used in, for example, the product or technology on which you work.

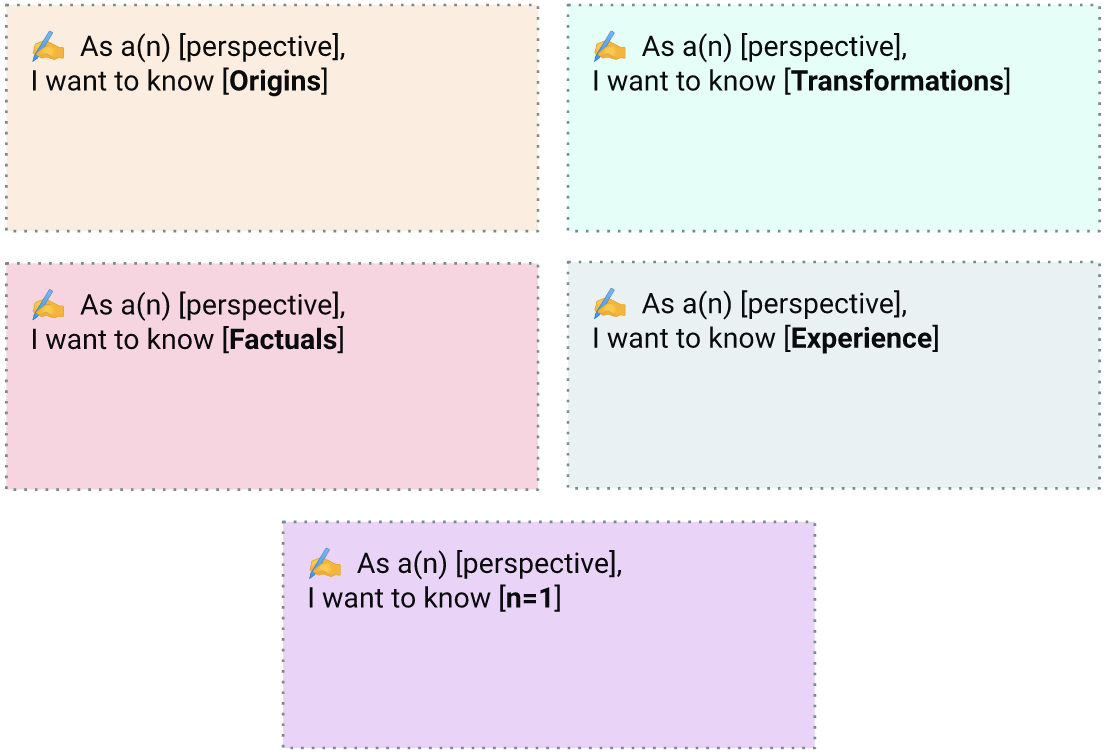

Our framework is called OFTEn, a conceptual tool for systematic consideration of how topics promulgate across all parts of a Data Card. We created it through detailed inductive and deductive dataset-transparency investigations.

OFTEn

OFTEn is an abbreviation for the general stages in the dataset life cycle: Origins, Factuals, Transformations, Experience, and n = 1 (Samples).

Origins

The Origins stage involves the various planning activities that dictate final outcome, such as the definition of requirements, collection or sourcing methods, and design and policy decisions.

Themes that emerge from origin-type questions include the following:

- Authors and owners

- Motivations

- Intended applications

- Collection methods

- Licenses

- Versions

- Sources

- Errata

- Accountable parties

Factuals

The Factuals stage represents the statistical and other factual attributes that describe the dataset, deviations from the original plan, and any pre-wrangling analysis.

Themes that emerge from factual-type questions include the following:

- Number of instances

- Number of features

- Number of labels

- Source of labels

- Source of data

- Breakdown of subgroups

- Shape of features

- Description of features

- Missing or duplicates

- Inclusion criterion

Transformations

The Transformations stage includes summaries of labeling, annotation, or validation tasks. Depending on the dataset, inter-rater adjudication processes might arise here. Also, feature engineering and modifications made to handle privacy, security, or personal identifiable information (PII) counts as transformations.

Themes that capture transformation-type questions include the following:

- Rating or annotation

- Filtering

- Processing

- Validation

- Statistical properties

- Synthetic features

- Handling PII

- Sensitive variables

- Impact on fairness

- Skews or biases

Experience

The Experience stage involves using the data for specific tasks, undergoing access training, making modifications to suit the task, acquiring results and comparing to other similar datasets, and noting any expected or unexpected behaviors.

Themes that illustrate experience-type questions include the following:

- Intended performance

- Unintended application

- Unexpected performance

- Caveats

- Insights

- Experiences

- Stories

- Use

- Use-case evaluation

n = 1 (Samples)

The n = 1 (Samples) stage involves the ins and outs of distribution data points, the demonstration of noteworthy data points with specific attributes and, where applicable, the modeling of outcomes on them.

Themes that sample-type questions demonstrate include the following:

- Examples or links to typical examples and outliers.

- Examples that yield false positives or false negatives.

- Examples that demonstrate handling of null or zero feature values.

Example

For an example, the following set of questions were arranged with OFTEn:

Who | What | When | Where | Why | How | |

Origins | Who publishes the dataset? Are they different from dataset owners? | What are the incentives for data labellers, providers, and experts employed for this dataset? | When was this dataset created? Launched? | Where did the funding come from? | Why was this dataset created? What was the process prior? | How were the methods decided and how many parties were involved? |

Factuals | Who is the data about? Are labelers representative of people in the data? | What are the subgroups in the data that can affect outcomes in machine learning? | What time period does the data represent? When data expires or runs abnormally? | Where can the dataset be accessed? Where was the data collected or created? | Why were the reported metrics chosen? Why were the specific labels chosen? | How many unique labels exist in the dataset? How were these generated? |

Transformations | How was PII handled in this dataset? Can outcomes from this dataset be used to identify individuals? | What methods were used to clean or verify this dataset? | When should features be engineered and how? Do these need to be updated? | Do location features correlate with other sensitive features? | Why were the chosen transformations applied to the dataset? | How are biases or PII handled in the data? |

Experience | Who can use this dataset, and for what tasks? Are there any trainings required? | What were the methods, results, or errors discovered when the dataset was used? | Under what circumstances and when should this dataset not be used? | Where in the world is this dataset accessible? Where has it been used? | Why is the expected representation of the dataset different from the observed representation? | How expensive is data in different parts of the world? |

n = 1 (Samples) | Is the datapoint typical or atypical? How do models behave here? | What is the size of the datapoint? What's the consent, redaction, and withdrawal process to intervene in a datapoint? | When does the outcome on a datapoint change? Show examples through counterfactuals? | What factors are baked into the datapoint? What are the risks involved if things go wrong with predictions? | Why is this image datapoint cropped a certain way? Why are certain categories not populated in this datapoint? | How does this datapoint relate to a real-world input?How does the outcome relate to a real-world output? |

We found that Data Cards with a clear underlying OFTEn structure are easy to expand and update. With OFTEn, Data Cards can grow over time to include topics that are typically excluded from documentation, such as feedback from downstream agents, notable differences across versions, and ad hoc audits or investigations from producers or agents.

Summary

The following table summarizes the OFTEn framework and describes the general stages in a dataset's lifecycle:

Stage | Description |

Origins | Early stages of a dataset's lifecycle when decisions to create a dataset are made. |

Factuals | Actual data collection processes and raw outputs. |

Transformations | Raw data is transformed into a usable form through operations like filtering, validating, parsing, formatting, and cleaning. |

Experience | Dataset is tested, benchmarked, or deployed in practice (experimental, production, or research). |

n = 1 (Samples) | Actual samples from the dataset—or vignettes— that represent normal data points and outliers. |

There are two ways that you can use OFTEn when you create a Data Card:

- Inductively, OFTEn supports activities with agents to formulate questions about datasets and related models that are critical for decision-making. We find that when many agents come together to brainstorm questions with an OFTEn structure, it reveals information that's necessary for targeted decision making.

- Deductively, OFTEn can be used to assess if a Data Card accurately represents the dataset, which results in formative effects on documentation and dataset. For example, early-stage datasets are more skewed towards Origins and Factuals, whereas mature datasets are expected to be skewed toward Experience.

With OFTEn, you can brainstorm and check how well your questions cover the lifecycle of your dataset, which ensures that your content will eventually be comprehensive and streamlined. It not only helps you find redundancies in the types of questions that you create, but it also addresses any gaps that you might find along the way.

2. Frame questions with OFTEn

- Think about some of your stakeholders and agent information journeys (AIJs) that you formulated in the previous module, and then use the following prompts to help structure your thoughts.

- If some of your questions already fall nicely into one of the OFTEn categories, label them as such.

- If your questions don't fall into one of the OFTEn categories, choose one of your agents from the previous module and then create at least one question per OFTEn category for the agent.

- Create additional questions based on the five Ws (who, what, where, when, and why) and one H (how) to expand the depth of your OFTEn category.

- If applicable, repeat these steps for the next agent.

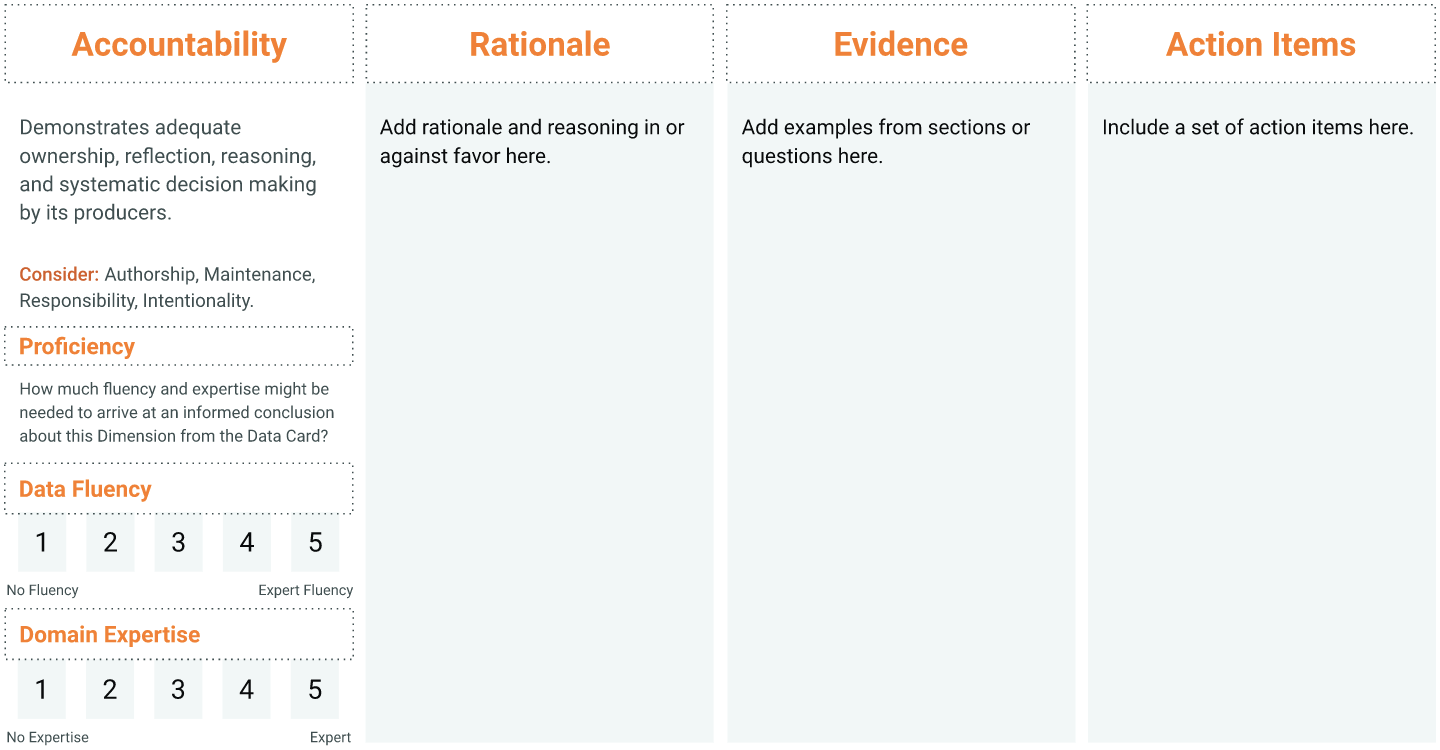

3. Dimensions

Now that you understand OFTEn and created questions to include in your Data Card, you're ready to uncover insights about your questions by doing a first pass of your Data Card. To do so, we're introducing dimensions, which are high-level descriptions of the different types of judgments that readers make, which provide directional insights into the usefulness and readability of the Data Card. In other words, can your Data Card help readers arrive at an informed conclusion about your dataset?

Accountable

A Data Card that's accountable is owned and maintained by people who demonstrate adequate ownership, reflection, reasoning, and systematic decision-making regarding the dataset and its use.

Example areas | Example questions |

Authorship, responsibility, maintenance, intentions | As a [perspective], I want to know... |

Utility or use

A Data Card that's useful provides details that satisfy the information needs of the readers, which leads to a responsible decision-making process that establishes the suitability of the dataset for their tasks and goals.

Example areas | Example questions |

Producer needs,agent needs, user needs, societal needs | As a [perspective], I want to know... |

Quality

A Data Card that's of high quality summarizes the rigor, integrity, and completeness of the dataset, often communicated in a manner that's accessible and understandable to readers of different backgrounds.

Example areas | Example questions |

Validity,reliability, integrity, reproducibility | As a [perspective], I want to know... |

Impact or consequences of use

A Data Card that adequately details the impact of dataset usage sets expectations for outcomes when using and managing the dataset, and acknowledges any first- or second-order consequences that could negatively impact the readers goals.

Example areas | Example questions |

Efficacious, relevance, group benefit,implications of deviations | As a [perspective], I want to know... |

Risks and recommendations

A Data Card that offers good recommendations makes readers aware of known and potential risks and limitations that stem from the provenance, representation, use, or context of use, and provides enough information and alternatives to help readers make responsible trade-offs.

Example areas | Example questions |

Risk magnitude, mitigations, recommendations, group harm | As a [perspective], I want to know... |

Summary

With dimensions, you can evaluate your set of questions to ensure they align with your goals and desirable outcomes. Even though you haven't quite answered a question in your Data Card yet, it's best to catch any mistakes before you get too deep into the dataset-documentation process.

The following table summarizes the five dimensions:

Stage | Description |

Accountability | Statements that express different stakeholders' reflective, reasonable, and systematic decisions regarding the trust in the dataset. |

Utility | Provides details that satisfy readers' responsible decision-making process needs and establishes use-cases suitability as it pertains to their goals. |

Quality | Summarizes the rigor, integrity, and completeness of the dataset that's communicated in a manner that's accessible to many readers. |

Impact and consequences | Information that helps readers achieve their desirable outcomes when they use and manage the dataset, and acknowledges consequences that could negatively impact their goals. |

Risks and recommendations | Makes readers aware of known and potential risks associated with the dataset that stem from representation, use, or context of use. |

With these different types of dimensions, you can uncover insights about the content quality, readability, and utility of your Data Card even before you begin to complete it. They help you identify action items that contribute to a more robust and refined Data Card template.

4. Evaluate your questions with dimensions

- Begin with a single dimension, and then determine how much fluency and expertise is necessary to arrive at an informed conclusion based on the complexity of your set of questions.

- Provide a rationale and reasoning for how well that dimension is currently supported by your set of questions.

- Provide evidence that supports your rationale through an example question or two from your set of questions.

- If your dimension seems undesirable, note the steps that must be taken to refine or address shortcomings. If you work with a team of stakeholders, assign responsibility should some stakeholders be better equipped at addressing certain questions.

- Repeat these steps for the next dimension.

The following is an example template that you can use to capture your dimensions evaluation:

This evaluation process can take anywhere from 15 minutes to an hour, depending on the amount of questions that you create and the variety of stakeholders that you need to consider for your Data Card.

5. Congratulations

Congratulations! You have a way to inspect the questions that you created for your Data Card. Now you're ready to answer them.