Page Summary

-

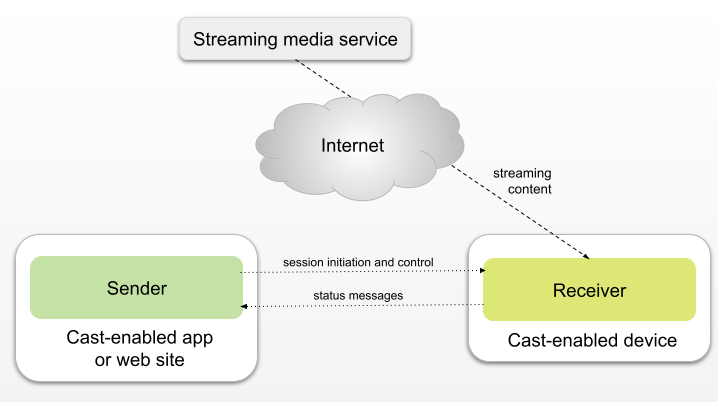

- The Cast SDK enables a user to select and play streaming content from a Sender device to a Receiver device while controlling playback with the Sender.

- A Sender is an app or device that initiates and controls a Cast session, while a Receiver is an app on a Cast-enabled device that responds to Sender commands and handles content streaming.

- The Google Cast SDK supports developing Sender apps for Android, iOS, and Web platforms, and Receiver apps for Web and Android TV.

- Developers building a Cast-based solution need to create both Sender and Receiver apps, while those using Google's Default Media Receiver only need to build a Sender app.

- Receiver apps can be Web Receivers (Custom, Styled Media, Default) or Android TV Receivers, each offering different levels of customization and capabilities.

The Cast SDK allows a user to select streaming audio-visual content using a Sender, and play it on (or cast it to) another device known as the Receiver, while controlling playback using the Sender.

The term Sender refers to an app that plays the role of controller for the Cast session. A Sender initiates the Cast session and manages the user's interaction with the content.

There are many kinds of Senders, including mobile apps and Google Chrome web apps, as well as virtual control surfaces on touch-enabled Google Home devices. The media controls in the Chrome web browser function as a Sender, as does Google Assistant itself.

The term Receiver refers to an app running on a Cast-enabled device that is responsible for responding to Sender commands and for conveying streaming content from an online streaming service to the Cast-enabled device. Examples of Cast-enabled Receiver devices include Chromecasts, smart televisions, smart screens, and smart speakers.

The Cast SDK also supports multiple Senders connecting to a Cast session. For instance, one Sender could start a session on a Receiver and another Sender could join the same session to control playback, load new content, or queue more content for later.

App components and architecture

Google Cast supports Sender app development for Android, iOS, and Cast-supported web platforms, such as Google Chrome.

A Receiver app runs on a Cast-enabled device, examples of which include:

- A Chromecast connected to a high-definition television or sound system.

- A Cast-enabled television.

- A Cast-enabled smart screen.

- A Cast-enabled Android device, such as Android TV.

A Sender controls media playback on a Receiver using Media Playback Messages defined by the SDK. If your app's use case requires sending data that is not formally defined in the standard Media Playback Messages, you can use the `customData` field provided by several message types to pass ancillary data.
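To make the Media Playback Message and `customData` mechanism concrete, here is a minimal TypeScript sketch of the JSON shape of a `LOAD` request that carries app-specific data. It assumes only a small subset of the `LOAD` fields (`type`, `requestId`, `media`, `autoplay`, `customData`); the `buildLoadRequest` helper, the content URL, and the `playlistPosition` key are hypothetical. In a real Web Sender you would normally let the SDK build this message, for example by setting `customData` on a `chrome.cast.media.LoadRequest` and passing it to `CastSession.loadMedia()`, rather than serializing it by hand.

```typescript
// Hypothetical sketch of a Media Playback "LOAD" request. Only a subset of
// fields is modeled, and buildLoadRequest is illustrative, not an SDK API.
type StreamType = "BUFFERED" | "LIVE" | "NONE";

interface MediaInformation {
  contentId: string;   // URL or ID the Receiver resolves to a stream
  contentType: string; // MIME type of the content
  streamType: StreamType;
}

interface LoadRequest {
  type: "LOAD";
  requestId: number;
  media: MediaInformation;
  autoplay: boolean;
  // Ancillary, app-defined data not covered by the standard message fields.
  customData?: Record<string, unknown>;
}

function buildLoadRequest(
  requestId: number,
  media: MediaInformation,
  customData?: Record<string, unknown>,
): LoadRequest {
  const request: LoadRequest = { type: "LOAD", requestId, media, autoplay: true };
  // Only attach customData when the app actually has something to send.
  if (customData !== undefined) {
    request.customData = customData;
  }
  return request;
}

const media: MediaInformation = {
  contentId: "https://example.com/stream/master.m3u8", // placeholder URL
  contentType: "application/x-mpegurl",
  streamType: "BUFFERED",
};

// "playlistPosition" is a hypothetical app-specific key.
const withExtras = buildLoadRequest(1, media, { playlistPosition: 3 });
const plain = buildLoadRequest(2, media);

console.log(JSON.stringify(withExtras));
```

Because the SDK treats `customData` as an opaque object, the Sender and Receiver you build must agree on its keys between themselves.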

There are two basic Cast app development scenarios:

- An end-to-end Cast-based solution. In this scenario, the developer must build the Sender app as well as two Receiver apps: an Android TV Receiver and a Web Receiver (more on this below).

- A Cast-enabled Sender app that can cast content (a screen or a multimedia stream) to Google’s default Cast Receiver, which is called the Default Media Receiver. In this scenario, the developer only needs to build one app, the Sender. The Default Media Receiver is useful for learning and very limited playback scenarios. It is not practical for receivers that require custom business logic, credentials, rights management, or analytics.
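In practice, the difference between the two scenarios shows up in which Receiver app the Sender asks the Cast device to launch. The TypeScript sketch below models that choice; `CastScenario` and `receiverApplicationId` are hypothetical names, and `"A1B2C3D4"` is a placeholder for the ID you would obtain by registering your own Receiver. The returned string is the value a Web Sender would pass as `receiverApplicationId` to `cast.framework.CastContext.getInstance().setOptions()`.

```typescript
// The Default Media Receiver's app ID. The Web Sender SDK exposes the same
// value as chrome.cast.media.DEFAULT_MEDIA_RECEIVER_APP_ID.
const DEFAULT_MEDIA_RECEIVER_APP_ID = "CC1AD845";

// Hypothetical type for the two development scenarios described above.
type CastScenario =
  | { kind: "senderOnly" } // cast to the Default Media Receiver
  | { kind: "endToEnd"; registeredAppId: string }; // your own registered Web Receiver

function receiverApplicationId(scenario: CastScenario): string {
  if (scenario.kind === "senderOnly") {
    return DEFAULT_MEDIA_RECEIVER_APP_ID;
  }
  // End-to-end solutions point the Sender at the ID issued when the
  // custom Web Receiver was registered.
  if (scenario.registeredAppId.trim() === "") {
    throw new Error("An end-to-end solution needs a registered Receiver app ID");
  }
  return scenario.registeredAppId;
}

console.log(receiverApplicationId({ kind: "senderOnly" }));
console.log(receiverApplicationId({ kind: "endToEnd", registeredAppId: "A1B2C3D4" })); // placeholder ID
```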

The Google Cast SDK

The Google Cast SDK is made up of several Cast API libraries. Along with the APIs, there are two kinds of documentation:

- API guides that show how to use the APIs, with sample code demonstrating Cast features, and

- Codelab tutorials that instruct you in the process of developing specific kinds of Cast apps.

The Cast APIs are divided according to platform and Cast app type. Table 1 contains links to the guides for the various Cast APIs.

| Platform | Sender | Receiver |
|---|---|---|
| Android | Android Sender apps | Android TV Receiver Overview |
| Web | Web Sender apps | Web Receiver Overview |
| iOS | iOS Sender apps | n/a |

Types of Sender apps

Sender apps can run on three platforms:

- Web

- Android

- iOS

Although your choice of Sender type will largely be driven by the platforms you intend to support, it's important to know the capabilities and limitations of each type of Sender (see Table 3).

| Capability | Android Sender | iOS Sender | Web Sender |
|---|---|---|---|
| Ad breaks and companion ads within a media stream | | | |
| Styled text tracks | | | |
| Group, style and activate media tracks | | | |
| Autoplay and Queueing (edit, reorder, update) | | | |
| Custom channels | | | |
| Custom actions | | | |
| Full Cast UI, including controller and minicontroller | | | |
| Intent to Join | | | |

Types of Receiver apps

Receiver apps handle communication between the Sender app and the Cast device. There are two main types of Receiver: the Web Receiver and the Android TV Receiver. You are expected to provide a Web Receiver at a minimum, and encouraged to provide an Android TV Receiver to maximize the user's experience with your application.

There are three types of Web Receiver, each with a distinct set of qualities and capabilities:

- The Custom Receiver, which allows for custom logic, branding and modification of controls.

- The Styled Media Receiver, which allows for customized branding.

- The Default Media Receiver, which is the most basic type. It does not allow for any customization and is not suitable for production apps.

In addition to the capabilities provided by the Custom Receiver, the Android TV Receiver offers Cast Connect, a set of capabilities that gives your users a native experience by seamlessly combining Cast with Android TV.

Table 2 highlights the capabilities of the different types of Receivers.

| | Android TV Receiver | Custom Receiver | Styled Media Receiver (SMR) | Default Media Receiver |
|---|---|---|---|---|
| Platform | | | | |
| Android-based (Java/Kotlin) | | | | |
| Web-based (HTML5) | | | | |
| Requirements | | | | |
| Must be registered | | | | |
| Capabilities | | | | |
| HLS and DASH media playback | | | | |
| Support for touch controls | | | | |
| Handles voice commands from Assistant-enabled devices | | | | |
| Customizable visual style and branding | | | | |
| Handles custom messages | | | | |
| Cast Connect | | | | |

The choice between the three Web Receiver options depends on which media types the app needs to support, the degree of UI customization required, and any custom logic requirements.

Determine which type of Web Receiver to build

Provided your app can integrate with Cast, use the following prompts to determine which type of Web Receiver you should build:

Create a Custom Receiver if:

The app requires one or more of the following special capabilities:

OR

Create a Styled Media Receiver if:

Use the Default Media Receiver if:
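One way to make the trade-offs among the three Web Receiver types concrete is to encode them as a small decision function. The TypeScript sketch below uses only the criteria stated in the Web Receiver descriptions earlier on this page: custom logic and modified controls call for a Custom Receiver, customized branding is covered by the Styled Media Receiver, and the Default Media Receiver is not suitable for production. The flag names and `chooseWebReceiver` are hypothetical, media-type support (the other factor named above) is not modeled, and treating the Styled Media Receiver as the fallback for production apps without custom logic is an assumption rather than official guidance.

```typescript
// Hypothetical requirement flags distilled from the Web Receiver descriptions.
interface ReceiverRequirements {
  customLogic: boolean;    // custom business logic, e.g. handling custom messages
  customControls: boolean; // modified playback controls
  customBranding: boolean; // logos, colors, and other visual styling
  production: boolean;     // shipping to real users rather than learning/testing
}

type WebReceiverType =
  | "Custom Receiver"
  | "Styled Media Receiver"
  | "Default Media Receiver";

function chooseWebReceiver(req: ReceiverRequirements): WebReceiverType {
  // Only the Custom Receiver allows custom logic and modified controls.
  if (req.customLogic || req.customControls) {
    return "Custom Receiver";
  }
  // Branding alone is covered by the Styled Media Receiver. Assumption: it is
  // also the least-effort choice for production, because the Default Media
  // Receiver is not suitable for production apps.
  if (req.customBranding || req.production) {
    return "Styled Media Receiver";
  }
  // Learning or very limited playback with no customization at all.
  return "Default Media Receiver";
}

console.log(
  chooseWebReceiver({ customLogic: false, customControls: false, customBranding: true, production: true }),
);
```

An Android TV Receiver is a separate decision layered on top of this one: the page encourages it in addition to a Web Receiver, so it is not one of the outcomes here.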