Page Summary

- Gemini supports multimodal prompts, accepting text and image inputs while providing text-only responses.

- Multimodal prompts allow for a variety of use cases like image classification, object recognition, and creative text generation based on images.

- Gemini can interpret and reason about images, enabling tasks like counting objects, understanding handwriting, and inferring temporal information from scenes.

- Advanced multimodal prompts can combine multiple skills like handwriting recognition, logical reasoning, and world knowledge for creative and practical applications.

- Experimentation with different multimodal prompts is encouraged to explore the full potential of Gemini's capabilities.

Multimodal prompts are a type of prompt for large language models (LLMs) that combine multiple input formats, such as text and images. Multimodal prompts, and the LLMs that support them, enable a variety of use cases including image classification, handwriting recognition, translation, and other creative scenarios.

In this document, we'll explore 8 examples of the prompts that become possible when you provide text and an image to the Gemini model and receive a text-only response.

What is a multimodal prompt?

Gemini can be prompted with a mixture of text and image input and return a text-only response. The text can provide context for the image, or it can ask the model to perform a task on the image or generate a response about it.

For example, the following is a multimodal prompt:

"What color is the cat?"

The text in this prompt provides context for the image by clarifying that there is a cat in the image and that we are interested only in the cat's color.
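As a rough sketch of how such a prompt might be packaged for an API call, the following builds a request body that pairs the text with an image. The field names (`contents`, `parts`, `inline_data`, `mime_type`, `data`) are an assumption modelled on the general shape of the Gemini REST API and should be checked against the current API reference. `build_multimodal_request` is a hypothetical helper, and the image bytes are a placeholder.

```python
import base64
import json


def build_multimodal_request(prompt_text: str, image_bytes: bytes,
                             mime_type: str = "image/jpeg") -> dict:
    """Pair a text prompt with one image in a single request body.

    The field layout is an assumption modelled on the Gemini REST API;
    verify it against the current API reference before use.
    """
    return {
        "contents": [
            {
                "parts": [
                    {"text": prompt_text},
                    {
                        "inline_data": {
                            "mime_type": mime_type,
                            # Binary image data is base64-encoded so it can travel as JSON.
                            "data": base64.b64encode(image_bytes).decode("ascii"),
                        }
                    },
                ]
            }
        ]
    }


# Placeholder bytes stand in for a real image file read from disk.
request_body = build_multimodal_request("What color is the cat?", b"<image bytes>")
print(json.dumps(request_body, indent=2))
```

Keeping the text and the image as separate parts of the same message mirrors the idea above: the text supplies the context, and the image supplies the content being asked about.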

3 simple multimodal prompts

The following are three simple examples of providing an image along with text to an LLM and receiving a text-only response. We will explore detecting a type of entity in an image, identifying entities in an image without predetermined knowledge, and finally combining recognition with counting.

Classification

"Does this image contain a cat? Respond with either true or false."

Response from Gemini:

True

This simple example highlights the LLM's ability to recognise whether something is present in an image and to respond to the developer in a boolean manner. This approach can be useful for detecting specific content for filtering or other purposes.

Dedicated image recognition models can be more effective at very specific tasks. However, creating custom ML models takes time and expertise, whereas solutions using Gemini can be built more rapidly. It is therefore important to compare the quality of responses from different models and decide based on these trade-offs.
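If the reply is going to drive filtering logic, it helps to convert it into a real boolean and to reject anything unexpected. The following is a minimal sketch; `parse_boolean_response` is a hypothetical helper, not part of any Gemini library, and it assumes the model's reply has already been extracted as a plain string.

```python
def parse_boolean_response(response_text: str) -> bool:
    """Convert a 'true'/'false' model reply into a Python bool.

    Raises ValueError for anything else, so an unexpected reply is not
    silently treated as False.
    """
    # Tolerate surrounding whitespace, a trailing period, and any casing.
    normalized = response_text.strip().strip(".").lower()
    if normalized == "true":
        return True
    if normalized == "false":
        return False
    raise ValueError(f"Expected 'true' or 'false', got: {response_text!r}")


contains_cat = parse_boolean_response("True")
print(contains_cat)  # True
```

Failing loudly on an unrecognised reply is a deliberate choice here: a model may occasionally answer with a full sentence, and that case is better handled explicitly than guessed at.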

Recognition

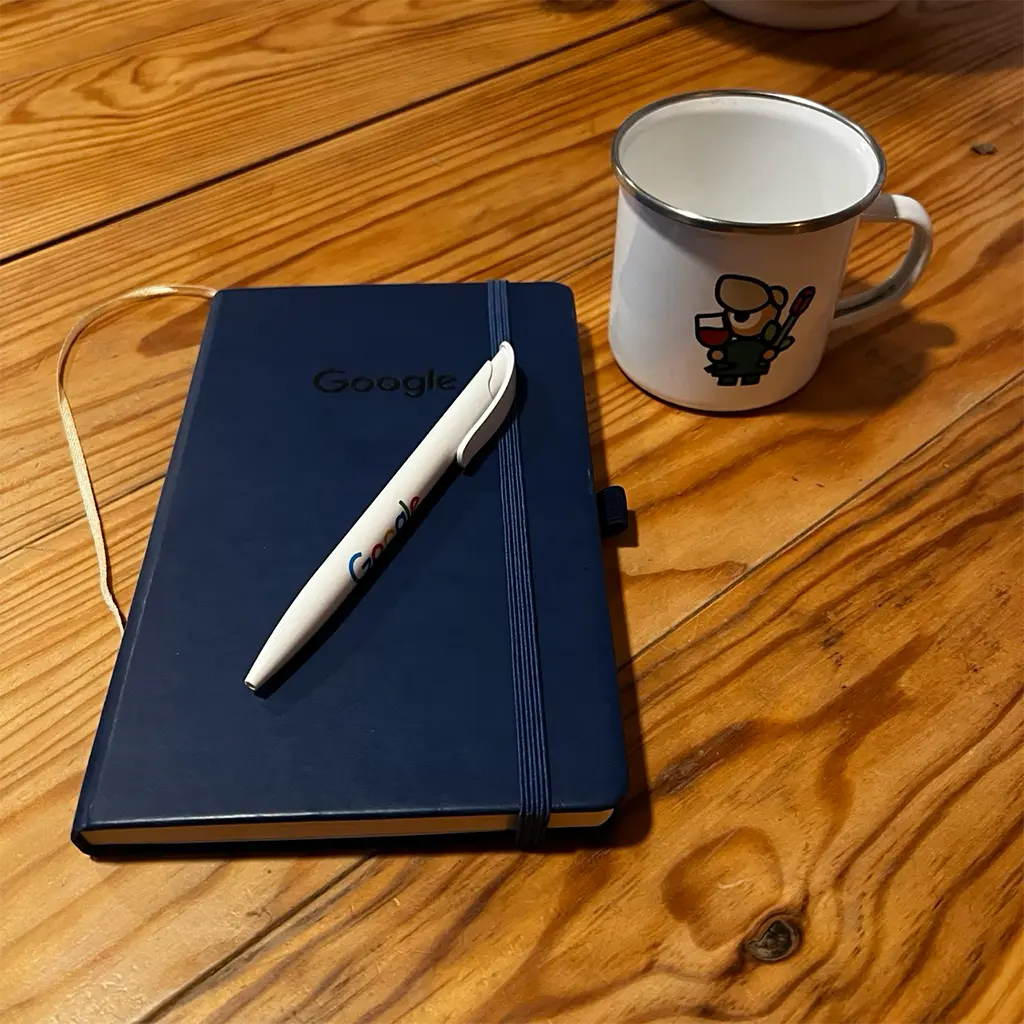

"Give me a list of all the important things in this picture."

Response from Gemini:

- A Google notebook

- A Google pen

- A mug

The above example highlights that we can ask the LLM an open question about the content of the image. Using Gemini, the image classification process does not require different models for different types of objects (e.g. books or mugs). Instead, a single model has identified all the significant elements in a single pass.
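A list-style reply like the one above is easy to turn into structured data. This is a small sketch assuming the reply arrives as plain text with one bulleted item per line; `parse_bulleted_list` is a hypothetical helper, and the accepted bullet characters are an assumption about how the model formats lists.

```python
def parse_bulleted_list(response_text: str) -> list[str]:
    """Extract the items from a bulleted plain-text reply."""
    items = []
    for line in response_text.splitlines():
        stripped = line.strip()
        # Accept the common bullet markers; lines without one are ignored.
        if stripped.startswith(("-", "*", "•")):
            item = stripped[1:].strip()
            if item:
                items.append(item)
    return items


response_text = """- A Google notebook
- A Google pen
- A mug"""
print(parse_bulleted_list(response_text))
# ['A Google notebook', 'A Google pen', 'A mug']
```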

Counting

"Count the number of cats in this picture. Give me only the final number in the response.

Only give me back a number"

Response from Gemini:

3

In this example we constrain the request to a particular type of object and specify that we want it counted in the picture. Furthermore, the prompt asks that the response be restricted to a pure number. However, the quality may vary depending on how many objects in the image need to be counted. As Gemini is a general-purpose multimodal model, its precision beyond small numbers may be limited.
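Even when the prompt asks for a bare number, it is worth validating the reply before using it as an integer. The following sketch assumes the reply is available as a string; `parse_count_response` is a hypothetical helper introduced for illustration.

```python
import re


def parse_count_response(response_text: str) -> int:
    """Parse a reply that should contain only a non-negative whole number."""
    # Allow surrounding whitespace and an optional trailing period, nothing else.
    match = re.fullmatch(r"\s*(\d+)\s*\.?\s*", response_text)
    if match is None:
        raise ValueError(f"Expected a bare number, got: {response_text!r}")
    return int(match.group(1))


cat_count = parse_count_response("3")
print(cat_count)  # 3
```

Rejecting replies such as "There are 3 cats" keeps the check strict; whether to relax it and extract the first number instead is a design decision that depends on how the count is used.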

5 advanced multimodal prompts

Next we'll walk through some more advanced ways that multimodal prompting with text and image input can be used.

Text Recognition, Reasoning & Calculation

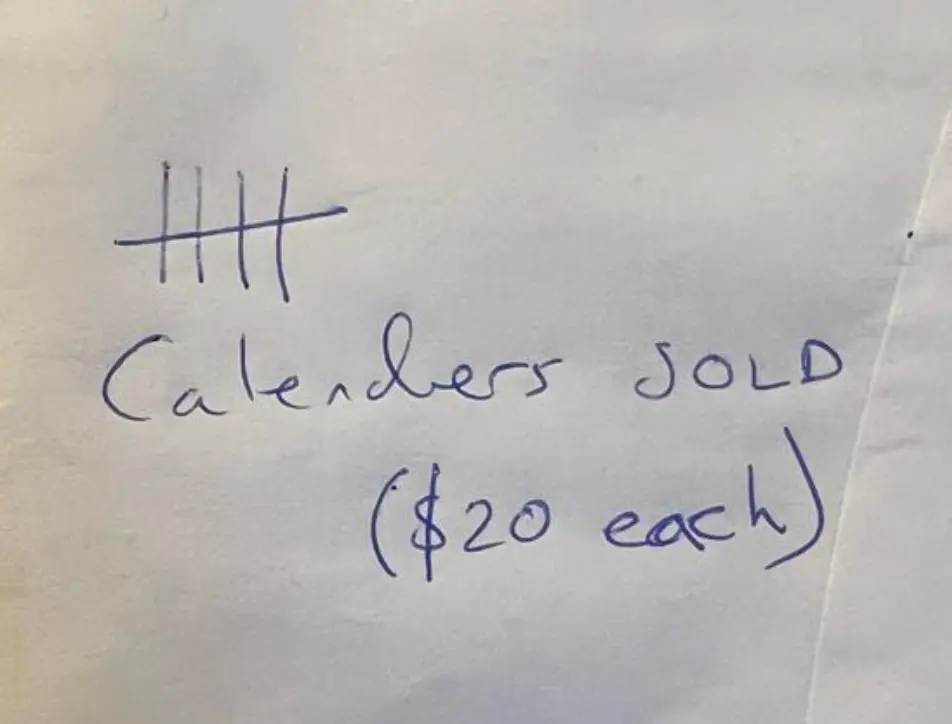

"How much money did we make today total? Explain your reasoning."

Response from Gemini:

The total amount of money made today is $100.

This is because the note says that 5 calendars were sold at $20 each. 5 x $20 = $100.

This more advanced prompt and image demonstrate several features of Gemini at once. First is handwriting recognition: the AI has recognised that the text, written in English, refers to how many calendars were sold and the price of each. Furthermore, with our prompt indicating the concept of tallying numbers, the AI has recognised the notation for a 5-point tally (i.e. 4 vertical lines and a single horizontal strikethrough). Finally, because our prompt asks the AI to explain its reasoning, we receive a walkthrough of how it calculated the revenue.
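Because the model explains its reasoning, the arithmetic can be checked independently. The sketch below reproduces the calculation from the response, assuming each complete tally group stands for five items; `revenue_from_tally` and its parameters are hypothetical names used only for illustration.

```python
def revenue_from_tally(full_tally_groups: int, extra_marks: int, unit_price: int) -> int:
    """Compute revenue from tally marks, where one full group counts as 5 items."""
    items_sold = full_tally_groups * 5 + extra_marks
    return items_sold * unit_price


# One complete 5-point tally of calendars at $20 each, as in the response above.
print(revenue_from_tally(full_tally_groups=1, extra_marks=0, unit_price=20))  # 100
```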

World Context & Reasoning

"What time of day is it in this picture in 24 hour time."

Response from Gemini:

19:30

This example highlights the model's ability to recognise a scene's lighting and infer the approximate time of day from the imagery.

Interpretation & Creativity

"Write a haiku about this photo."

Response from Gemini:

A bench by the lake,

A view of the mountains beyond,

A moment of peace.

Here we see the power of Gemini for storytelling and other creative uses of generative AI. By providing an image as the starting point, we can surprise ourselves with where the AI takes the storyline or which features of the image it decides to integrate into the story. Pure text prompting for story generation is often limited to our own initial idea, but a real-world photo can serve as a powerful starting point for the AI's thought process.

Logical Progression

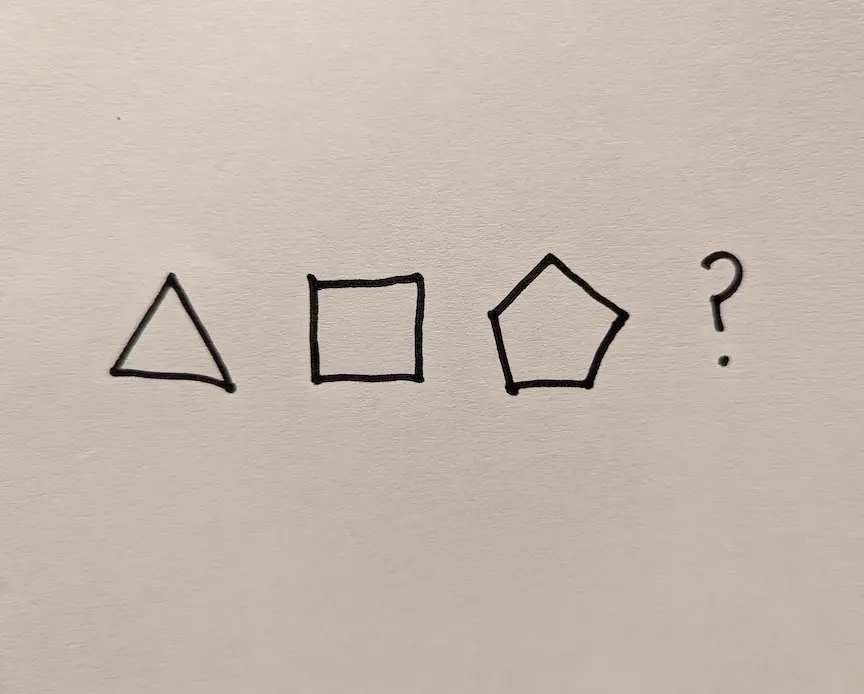

"What comes next? Explain your reasoning"

Response from Gemini:

The triangle has 3 sides, the square has 4 sides, and the pentagon has 5 sides. The number of sides increases by 1 for each shape. Therefore, the next shape should have 6 sides, which is a hexagon.

This example again combines multiple skills of Gemini. Not only does it recognise the shapes, but it also understands that the shapes, while slightly crudely drawn, are intended to be distinct geometric shapes with specific attributes (e.g. 3 sides, 4 sides, 5 sides).

On top of this, the presence of the question mark does not confuse Gemini in its interpretation of the logical progression of the geometric shapes. Instead, Gemini "sees" that this is a mathematical progression from 3 to 4 to 5, concludes that the next shape would be 6-sided, and fittingly proposes a hexagon.
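The progression described in the response can be written out explicitly as a quick check of the reasoning. This is only an illustrative sketch of the arithmetic, not a description of how the model reasons internally; the function name and the lookup table are hypothetical.

```python
POLYGON_NAMES = {3: "triangle", 4: "square", 5: "pentagon", 6: "hexagon"}


def next_in_arithmetic_progression(values: list[int]) -> int:
    """Return the next term, provided the values increase by a constant step."""
    if len(values) < 2:
        raise ValueError("Need at least two values to infer a step")
    step = values[1] - values[0]
    for previous, current in zip(values, values[1:]):
        if current - previous != step:
            raise ValueError("Values do not form an arithmetic progression")
    return values[-1] + step


# Side counts of the triangle, square, and pentagon from the image.
next_sides = next_in_arithmetic_progression([3, 4, 5])
print(next_sides, POLYGON_NAMES[next_sides])  # 6 hexagon
```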

World Interpretation & Understanding

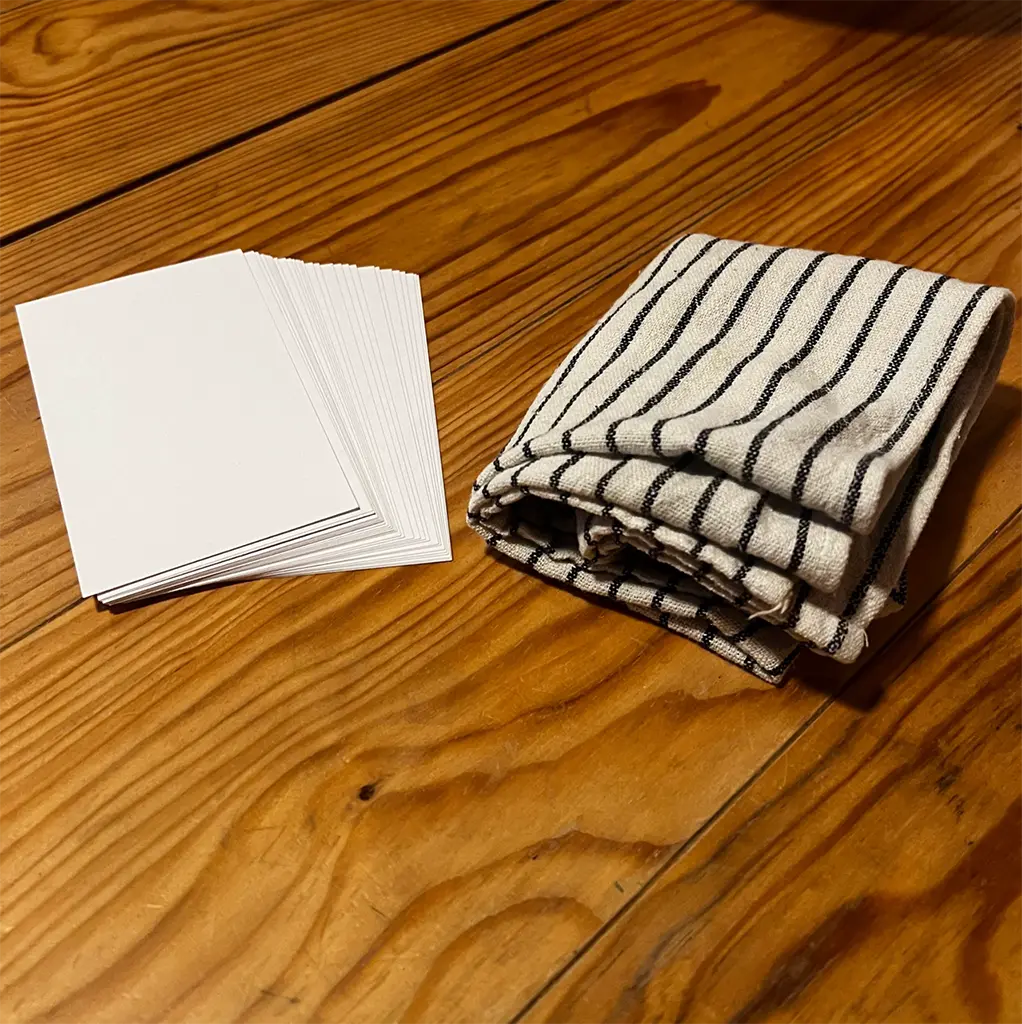

"Which of these would be better for writing on? Which would be better for making into a scarf?"

Response from Gemini:

The paper would be better for writing on because it is a smooth surface and the ink would not bleed through. The fabric would be better for making into a scarf because it is soft and would keep you warm.

Here we are leveraging Gemini in a very inventive way. The AI not only understands the imagery but also infers attributes and real-world physical properties from what it sees. The reasoning is based on a visual understanding of the objects' materials and how well they suit the purposes outlined in the prompt.

Conclusion

These are just a few examples of the ways that multimodal prompts can be used. With LLMs, you can use multimodal prompts not only to understand text and images but also to form new ideas or extract understanding from them. We encourage you to experiment with different types of multimodal prompts and see what you can create.