Page Summary

-

This glossary defines a wide range of machine learning terms, including those specific to TensorFlow and large language models.

-

It provides clear explanations, examples, and applications of each term for a comprehensive understanding.

-

The content covers various aspects of machine learning, such as model types, training processes, fairness, and evaluation metrics.

-

It serves as a valuable resource for anyone seeking to grasp the terminology and fundamental concepts in this field.

-

The glossary also addresses advanced topics like deep learning, reinforcement learning, and natural language processing.

This glossary defines artificial intelligence terms.

A

ablation

A technique for evaluating the importance of a feature or component by temporarily removing it from a model. You then retrain the model without that feature or component, and if the retrained model performs significantly worse, then the removed feature or component was likely important.

For example, suppose you train a classification model on 10 features and achieve 88% precision on the test set. To check the importance of the first feature, you can retrain the model using only the nine other features. If the retrained model performs significantly worse (for instance, 55% precision), then the removed feature was probably important. Conversely, if the retrained model performs equally well, then that feature was probably not that important.

Ablation can also help determine the importance of:

- Larger components, such as an entire subsystem of a larger ML system

- Processes or techniques, such as a data preprocessing step

In both cases, you would observe how the system's performance changes (or doesn't change) after you've removed the component.

A/B testing

A statistical way of comparing two (or more) techniques—the A and the B. Typically, the A is an existing technique, and the B is a new technique. A/B testing not only determines which technique performs better but also whether the difference is statistically significant.

A/B testing usually compares a single metric on two techniques; for example, how does model accuracy compare for two techniques? However, A/B testing can also compare any finite number of metrics.

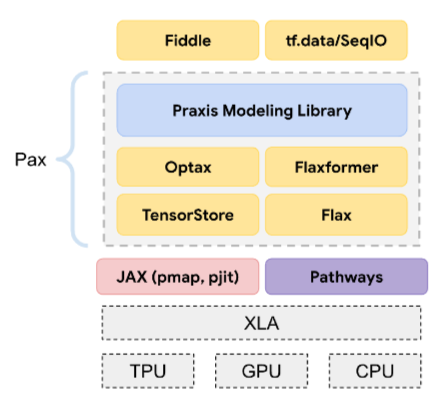

accelerator chip

A category of specialized hardware components designed to perform key computations needed for deep learning algorithms.

Accelerator chips (or just accelerators, for short) can significantly increase the speed and efficiency of training and inference tasks compared to a general-purpose CPU. They are ideal for training neural networks and similar computationally intensive tasks.

Examples of accelerator chips include:

- Google's Tensor Processing Units (TPUs) with dedicated hardware for deep learning.

- NVIDIA's GPUs which, though initially designed for graphics processing, are designed to enable parallel processing, which can significantly increase processing speed.

accuracy

The number of correct classification predictions divided by the total number of predictions. That is:

For example, a model that made 40 correct predictions and 10 incorrect predictions would have an accuracy of:

Binary classification provides specific names for the different categories of correct predictions and incorrect predictions. So, the accuracy formula for binary classification is as follows:

where:

- TP is the number of true positives (correct predictions).

- TN is the number of true negatives (correct predictions).

- FP is the number of false positives (incorrect predictions).

- FN is the number of false negatives (incorrect predictions).

Compare and contrast accuracy with precision and recall.

See Classification: Accuracy, recall, precision and related metrics in Machine Learning Crash Course for more information.

action

In reinforcement learning, the mechanism by which the agent transitions between states of the environment. The agent chooses the action by using a policy.

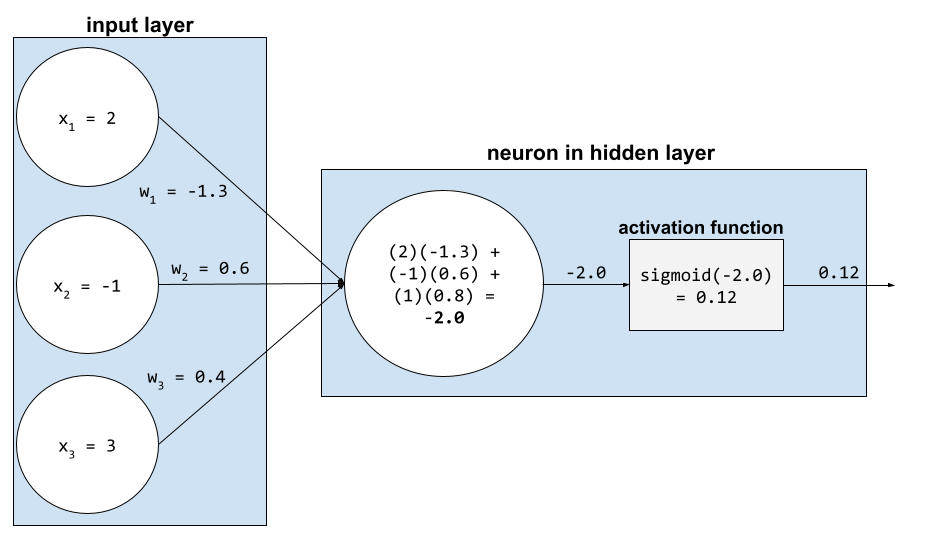

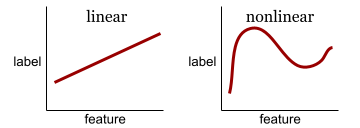

activation function

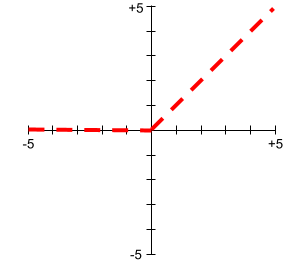

A function that enables neural networks to learn nonlinear (complex) relationships between features and the label.

Popular activation functions include:

The plots of activation functions are never single straight lines. For example, the plot of the ReLU activation function consists of two straight lines:

A plot of the sigmoid activation function looks as follows:

See Neural networks: Activation functions in Machine Learning Crash Course for more information.

active learning

A training approach in which the algorithm chooses some of the data it learns from. Active learning is particularly valuable when labeled examples are scarce or expensive to obtain. Instead of blindly seeking a diverse range of labeled examples, an active learning algorithm selectively seeks the particular range of examples it needs for learning.

AdaGrad

A sophisticated gradient descent algorithm that rescales the gradients of each parameter, effectively giving each parameter an independent learning rate. For a full explanation, see Adaptive Subgradient Methods for Online Learning and Stochastic Optimization.

adaptation

Synonym for tuning or fine-tuning.

agent

Software that can reason about multimodal user inputs in order to plan and execute actions on behalf of the user.

In reinforcement learning, an agent is the entity that uses a policy to maximize the expected return gained from transitioning between states of the environment.

agentic

The adjective form of agent. Agentic refers to the qualities that agents possess (such as autonomy).

agentic workflow

A dynamic process in which an agent autonomously plans and executes actions to achieve a goal. The process may involve reasoning, invoking external tools, and self-correcting its plan.

agglomerative clustering

AI slop

Output from a generative AI system that favors quantity over quality. For example, a web page with AI slop is filled with cheaply produced, AI-generated, low-quality content.

anomaly detection

The process of identifying outliers. For example, if the mean for a certain feature is 100 with a standard deviation of 10, then anomaly detection should flag a value of 200 as suspicious.

AR

Abbreviation for augmented reality.

area under the PR curve

See PR AUC (Area under the PR Curve).

area under the ROC curve

See AUC (Area under the ROC curve).

artificial general intelligence

A non-human mechanism that demonstrates a broad range of problem solving, creativity, and adaptability. For example, a program demonstrating artificial general intelligence could translate text, compose symphonies, and excel at games that have not yet been invented.

artificial intelligence

A non-human program or model that can solve sophisticated tasks. For example, a program or model that translates text or a program or model that identifies diseases from radiologic images both exhibit artificial intelligence.

Formally, machine learning is a sub-field of artificial intelligence. However, in recent years, some organizations have begun using the terms artificial intelligence and machine learning interchangeably.

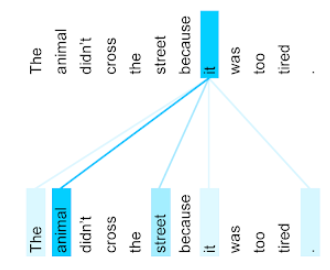

attention

A mechanism used in a neural network that indicates the importance of a particular word or part of a word. Attention compresses the amount of information a model needs to predict the next token/word. A typical attention mechanism might consist of a weighted sum over a set of inputs, where the weight for each input is computed by another part of the neural network.

Refer also to self-attention and multi-head self-attention, which are the building blocks of Transformers.

See LLMs: What's a large language model? in Machine Learning Crash Course for more information about self-attention.

attribute

Synonym for feature.

In machine learning fairness, attributes often refer to characteristics pertaining to individuals.

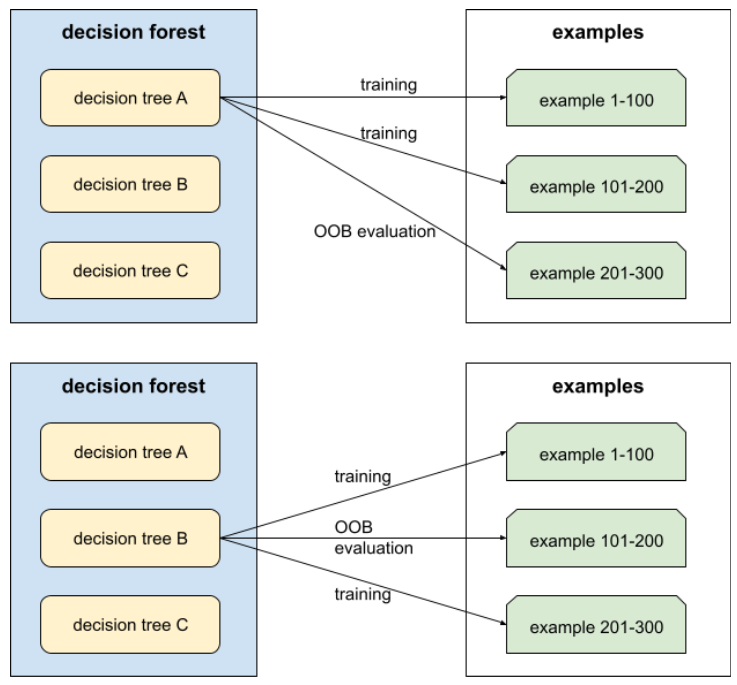

attribute sampling

A tactic for training a decision forest in which each decision tree considers only a random subset of possible features when learning the condition. Generally, a different subset of features is sampled for each node. In contrast, when training a decision tree without attribute sampling, all possible features are considered for each node.

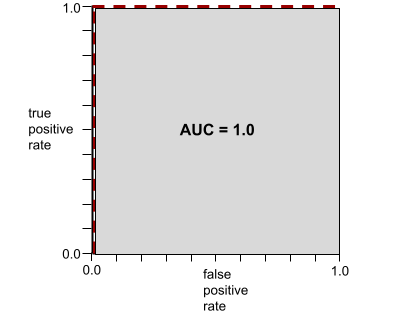

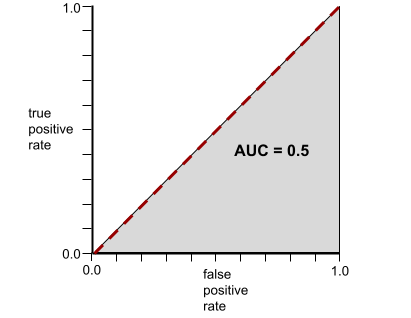

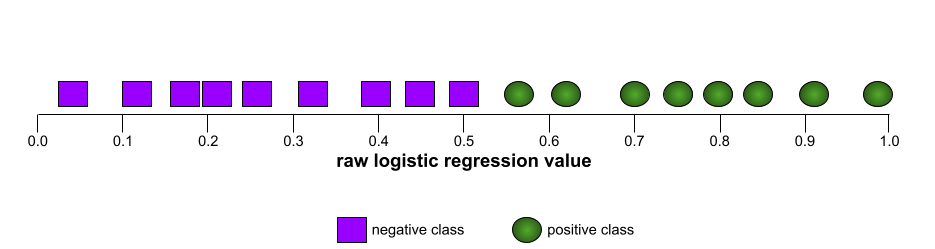

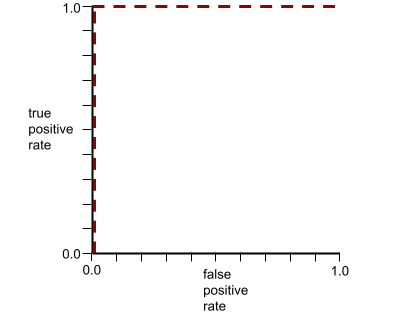

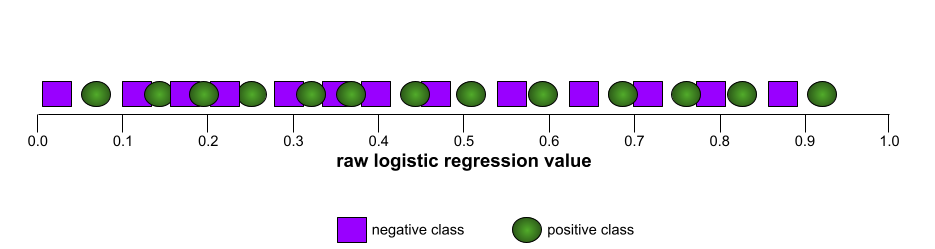

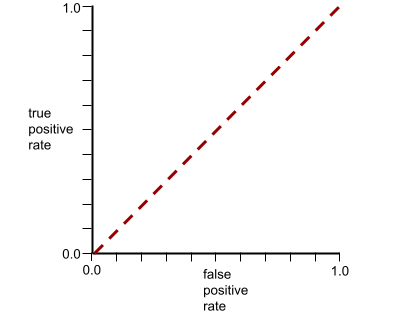

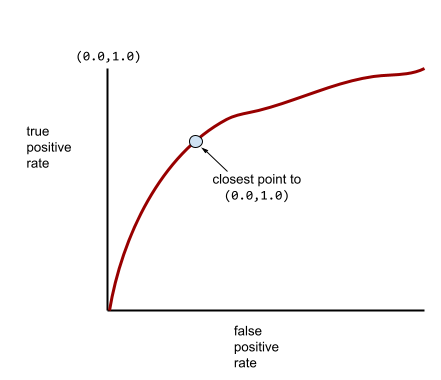

AUC (Area under the ROC curve)

A number between 0.0 and 1.0 representing a binary classification model's ability to separate positive classes from negative classes. The closer the AUC is to 1.0, the better the model's ability to separate classes from each other.

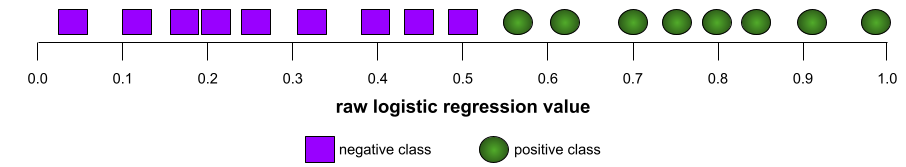

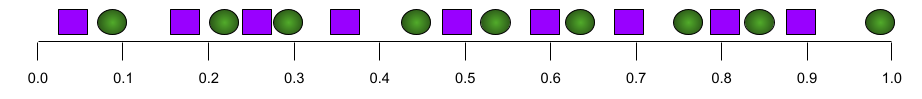

For example, the following illustration shows a classification model that separates positive classes (green ovals) from negative classes (purple rectangles) perfectly. This unrealistically perfect model has an AUC of 1.0:

Conversely, the following illustration shows the results for a classification model that generated random results. This model has an AUC of 0.5:

Yes, the preceding model has an AUC of 0.5, not 0.0.

Most models are somewhere between the two extremes. For instance, the following model separates positives from negatives somewhat, and therefore has an AUC somewhere between 0.5 and 1.0:

AUC ignores any value you set for classification threshold. Instead, AUC considers all possible classification thresholds.

See Classification: ROC and AUC in Machine Learning Crash Course for more information.

augmented reality

A technology that superimposes a computer-generated image on a user's view of the real world, thus providing a composite view.

autoencoder

A system that learns to extract the most important information from the input. Autoencoders are a combination of an encoder and decoder. Autoencoders rely on the following two-step process:

- The encoder maps the input to a (typically) lossy lower-dimensional (intermediate) format.

- The decoder builds a lossy version of the original input by mapping the lower-dimensional format to the original higher-dimensional input format.

Autoencoders are trained end-to-end by having the decoder attempt to reconstruct the original input from the encoder's intermediate format as closely as possible. Because the intermediate format is smaller (lower-dimensional) than the original format, the autoencoder is forced to learn what information in the input is essential, and the output won't be perfectly identical to the input.

For example:

- If the input data is a graphic, the non-exact copy would be similar to the original graphic, but somewhat modified. Perhaps the non-exact copy removes noise from the original graphic or fills in some missing pixels.

- If the input data is text, an autoencoder would generate new text that mimics (but is not identical to) the original text.

See also variational autoencoders.

automatic evaluation

Using software to judge the quality of a model's output.

When model output is relatively straightforward, a script or program can compare the model's output to a golden response. This type of automatic evaluation is sometimes called programmatic evaluation. Metrics such as ROUGE or BLEU are often useful for programmatic evaluation.

When model output is complex or has no one right answer, a separate ML program called an autorater sometimes performs the automatic evaluation.

Contrast with human evaluation.

automation bias

When a human decision maker favors recommendations made by an automated decision-making system over information made without automation, even when the automated decision-making system makes errors.

See Fairness: Types of bias in Machine Learning Crash Course for more information.

AutoML

Any automated process for building machine learning models. AutoML can automatically do tasks such as the following:

- Search for the most appropriate model.

- Tune hyperparameters.

- Prepare data (including performing feature engineering).

- Deploy the resulting model.

AutoML is useful for data scientists because it can save them time and effort in developing machine learning pipelines and improve prediction accuracy. It is also useful to non-experts, by making complicated machine learning tasks more accessible to them.

See Automated Machine Learning (AutoML) in Machine Learning Crash Course for more information.

autorater evaluation

A hybrid mechanism for judging the quality of a generative AI model's output that combines human evaluation with automatic evaluation. An autorater is an ML model trained on data created by human evaluation. Ideally, an autorater learns to mimic a human evaluator.Prebuilt autoraters are available, but the best autoraters are fine-tuned specifically to the task you are evaluating.

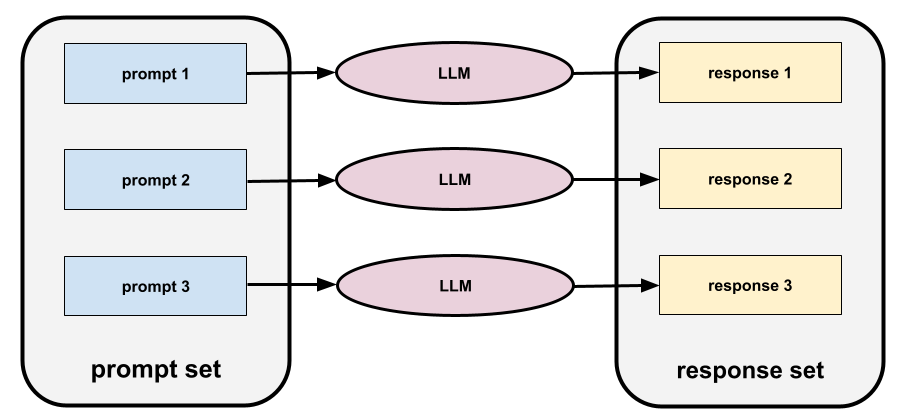

auto-regressive model

A model that infers a prediction based on its own previous predictions. For example, auto-regressive language models predict the next token based on the previously predicted tokens. All Transformer-based large language models are auto-regressive.

In contrast, GAN-based image models are usually not auto-regressive since they generate an image in a single forward-pass and not iteratively in steps. However, certain image generation models are auto-regressive because they generate an image in steps.

auxiliary loss

A loss function—used in conjunction with a neural network model's main loss function—that helps accelerate training during the early iterations when weights are randomly initialized.

Auxiliary loss functions push effective gradients to the earlier layers. This facilitates convergence during training by combating the vanishing gradient problem.

average precision at k

A metric for summarizing a model's performance on a single prompt that generates ranked results, such as a numbered list of book recommendations. Average precision at k is, well, the average of the precision at k values for each relevant result. The formula for average precision at k is therefore:

\[{\text{average precision at k}} = \frac{1}{n} \sum_{i=1}^n {\text{precision at k for each relevant item} } \]

where:

- \(n\) is the number of relevant items in the list.

Contrast with recall at k.

axis-aligned condition

In a decision tree, a condition

that involves only a single feature. For example, if area

is a feature, then the following is an axis-aligned condition:

area > 200

Contrast with oblique condition.

B

backpropagation

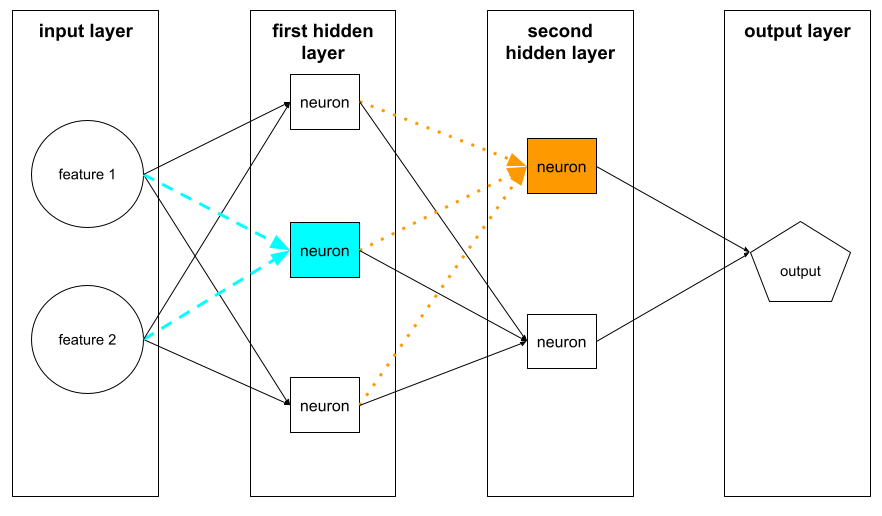

The algorithm that implements gradient descent in neural networks.

Training a neural network involves many iterations of the following two-pass cycle:

- During the forward pass, the system processes a batch of examples to yield prediction(s). The system compares each prediction to each label value. The difference between the prediction and the label value is the loss for that example. The system aggregates the losses for all the examples to compute the total loss for the current batch.

- During the backward pass (backpropagation), the system reduces loss by adjusting the weights of all the neurons in all the hidden layer(s).

Neural networks often contain many neurons across many hidden layers. Each of those neurons contribute to the overall loss in different ways. Backpropagation determines whether to increase or decrease the weights applied to particular neurons.

The learning rate is a multiplier that controls the degree to which each backward pass increases or decreases each weight. A large learning rate will increase or decrease each weight more than a small learning rate.

In calculus terms, backpropagation implements the chain rule. from calculus. That is, backpropagation calculates the partial derivative of the error with respect to each parameter.

Years ago, ML practitioners had to write code to implement backpropagation. Modern ML APIs like Keras now implement backpropagation for you. Phew!

See Neural networks in Machine Learning Crash Course for more information.

bagging

A method to train an ensemble where each constituent model trains on a random subset of training examples sampled with replacement. For example, a random forest is a collection of decision trees trained with bagging.

The term bagging is short for bootstrap aggregating.

See Random forests in the Decision Forests course for more information.

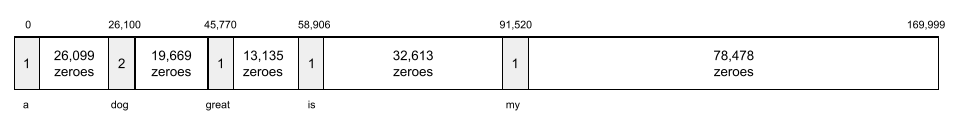

bag of words

A representation of the words in a phrase or passage, irrespective of order. For example, bag of words represents the following three phrases identically:

- the dog jumps

- jumps the dog

- dog jumps the

Each word is mapped to an index in a sparse vector, where the vector has an index for every word in the vocabulary. For example, the phrase the dog jumps is mapped into a feature vector with non-zero values at the three indexes corresponding to the words the, dog, and jumps. The non-zero value can be any of the following:

- A 1 to indicate the presence of a word.

- A count of the number of times a word appears in the bag. For example, if the phrase were the maroon dog is a dog with maroon fur, then both maroon and dog would be represented as 2, while the other words would be represented as 1.

- Some other value, such as the logarithm of the count of the number of times a word appears in the bag.

baseline

A model used as a reference point for comparing how well another model (typically, a more complex one) is performing. For example, a logistic regression model might serve as a good baseline for a deep model.

For a particular problem, the baseline helps model developers quantify the minimal expected performance that a new model must achieve for the new model to be useful.

base model

A pre-trained model that can serve as the starting point for fine-tuning to address specific tasks or applications.

See also pre-trained model and foundation model.

batch

The set of examples used in one training iteration. The batch size determines the number of examples in a batch.

See epoch for an explanation of how a batch relates to an epoch.

See Linear regression: Hyperparameters in Machine Learning Crash Course for more information.

batch inference

The process of inferring predictions on multiple unlabeled examples divided into smaller subsets ("batches").

Batch inference can take advantage of the parallelization features of accelerator chips. That is, multiple accelerators can simultaneously infer predictions on different batches of unlabeled examples, dramatically increasing the number of inferences per second.

See Production ML systems: Static versus dynamic inference in Machine Learning Crash Course for more information.

batch normalization

Normalizing the input or output of the activation functions in a hidden layer. Batch normalization can provide the following benefits:

- Make neural networks more stable by protecting against outlier weights.

- Enable higher learning rates, which can speed training.

- Reduce overfitting.

batch size

The number of examples in a batch. For instance, if the batch size is 100, then the model processes 100 examples per iteration.

The following are popular batch size strategies:

- Stochastic Gradient Descent (SGD), in which the batch size is 1.

- Full batch, in which the batch size is the number of examples in the entire training set. For instance, if the training set contains a million examples, then the batch size would be a million examples. Full batch is usually an inefficient strategy.

- mini-batch in which the batch size is usually between 10 and 1000. Mini-batch is usually the most efficient strategy.

See the following for more information:

- Production ML systems: Static versus dynamic inference in Machine Learning Crash Course.

- Deep Learning Tuning Playbook.

Bayesian neural network

A probabilistic neural network that accounts for uncertainty in weights and outputs. A standard neural network regression model typically predicts a scalar value; for example, a standard model predicts a house price of 853,000. In contrast, a Bayesian neural network predicts a distribution of values; for example, a Bayesian model predicts a house price of 853,000 with a standard deviation of 67,200.

A Bayesian neural network relies on Bayes' Theorem to calculate uncertainties in weights and predictions. A Bayesian neural network can be useful when it is important to quantify uncertainty, such as in models related to pharmaceuticals. Bayesian neural networks can also help prevent overfitting.

Bayesian optimization

A probabilistic regression model technique for optimizing computationally expensive objective functions by instead optimizing a surrogate that quantifies the uncertainty using a Bayesian learning technique. Since Bayesian optimization is itself very expensive, it is usually used to optimize expensive-to-evaluate tasks that have a small number of parameters, such as selecting hyperparameters.

Bellman equation

In reinforcement learning, the following identity satisfied by the optimal Q-function:

\[Q(s, a) = r(s, a) + \gamma \mathbb{E}_{s'|s,a} \max_{a'} Q(s', a')\]

Reinforcement learning algorithms apply this identity to create Q-learning using the following update rule:

\[Q(s,a) \gets Q(s,a) + \alpha \left[r(s,a) + \gamma \displaystyle\max_{\substack{a_1}} Q(s',a') - Q(s,a) \right] \]

Beyond reinforcement learning, the Bellman equation has applications to dynamic programming. See the Wikipedia entry for Bellman equation.

BERT (Bidirectional Encoder Representations from Transformers)

A model architecture for text representation. A trained BERT model can act as part of a larger model for text classification or other ML tasks.

BERT has the following characteristics:

- Uses the Transformer architecture, and therefore relies on self-attention.

- Uses the encoder part of the Transformer. The encoder's job is to produce good text representations, rather than to perform a specific task like classification.

- Is bidirectional.

- Uses masking for unsupervised training.

BERT's variants include:

See Open Sourcing BERT: State-of-the-Art Pre-training for Natural Language Processing for an overview of BERT.

bias (ethics/fairness)

1. Stereotyping, prejudice or favoritism towards some things, people, or groups over others. These biases can affect collection and interpretation of data, the design of a system, and how users interact with a system. Forms of this type of bias include:

- automation bias

- confirmation bias

- experimenter's bias

- group attribution bias

- implicit bias

- in-group bias

- out-group homogeneity bias

2. Systematic error introduced by a sampling or reporting procedure. Forms of this type of bias include:

Not to be confused with the bias term in machine learning models or prediction bias.

See Fairness: Types of bias in Machine Learning Crash Course for more information.

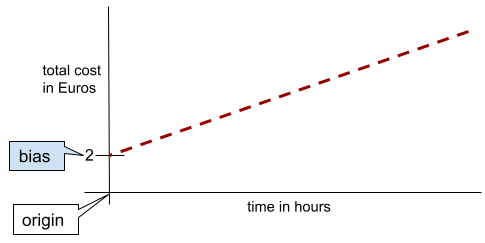

bias (math) or bias term

An intercept or offset from an origin. Bias is a parameter in machine learning models, which is symbolized by either of the following:

- b

- w0

For example, bias is the b in the following formula:

In a simple two-dimensional line, bias just means "y-intercept." For example, the bias of the line in the following illustration is 2.

Bias exists because not all models start from the origin (0,0). For example, suppose an amusement park costs 2 Euros to enter and an additional 0.5 Euro for every hour a customer stays. Therefore, a model mapping the total cost has a bias of 2 because the lowest cost is 2 Euros.

Bias is not to be confused with bias in ethics and fairness or prediction bias.

See Linear Regression in Machine Learning Crash Course for more information.

bidirectional

A term used to describe a system that evaluates the text that both precedes and follows a target section of text. In contrast, a unidirectional system only evaluates the text that precedes a target section of text.

For example, consider a masked language model that must determine probabilities for the word or words representing the underline in the following question:

What is the _____ with you?

A unidirectional language model would have to base its probabilities only on the context provided by the words "What", "is", and "the". In contrast, a bidirectional language model could also gain context from "with" and "you", which might help the model generate better predictions.

bidirectional language model

A language model that determines the probability that a given token is present at a given location in an excerpt of text based on the preceding and following text.

bigram

An N-gram in which N=2.

binary classification

A type of classification task that predicts one of two mutually exclusive classes:

- the positive class

- the negative class

For example, the following two machine learning models each perform binary classification:

- A model that determines whether email messages are spam (the positive class) or not spam (the negative class).

- A model that evaluates medical symptoms to determine whether a person has a particular disease (the positive class) or doesn't have that disease (the negative class).

Contrast with multi-class classification.

See also logistic regression and classification threshold.

See Classification in Machine Learning Crash Course for more information.

binary condition

In a decision tree, a condition that has only two possible outcomes, typically yes or no. For example, the following is a binary condition:

temperature >= 100

Contrast with non-binary condition.

See Types of conditions in the Decision Forests course for more information.

binning

Synonym for bucketing.

black box model

A model whose "reasoning" is impossible or difficult for humans to understand. That is, although humans can see how prompts affect responses, humans can't determine exactly how a black box model determines the response. In other words, a black box model is lacking interpretability.

Most deep models and large language models are black boxes.

BLEU (Bilingual Evaluation Understudy)

A metric between 0.0 and 1.0 for evaluating machine translations, for example, from Spanish to Japanese.

To calculate a score, BLEU typically compares an ML model's translation (generated text) to a human expert's translation (reference text). The degree to which N-grams in the generated text and reference text match determines the BLEU score.

The original paper on this metric is BLEU: a Method for Automatic Evaluation of Machine Translation.

See also BLEURT.

BLEURT (Bilingual Evaluation Understudy from Transformers)

A metric for evaluating machine translations from one language to another, particularly to and from English.

For translations to and from English, BLEURT aligns more closely to human ratings than BLEU. Unlike BLEU, BLEURT emphasizes semantic (meaning) similarities and can accommodate paraphrasing.

BLEURT relies on a pre-trained large language model (BERT to be exact) that is then fine-tuned on text from human translators.

The original paper on this metric is BLEURT: Learning Robust Metrics for Text Generation.

Boolean Questions (BoolQ)

A dataset for evaluating an LLM's proficiency in answering yes-or-no questions. Each of the challenges in the dataset has three components:

- A query

- A passage implying the answer to the query.

- The correct answer, which is either yes or no.

For example:

- Query: Are there any nuclear power plants in Michigan?

- Passage: ...three nuclear power plants supply Michigan with about 30% of its electricity.

- Correct answer: Yes

Researchers gathered the questions from anonymized, aggregated Google Search queries and then used Wikipedia pages to ground the information.

For more information, see BoolQ: Exploring the Surprising Difficulty of Natural Yes/No Questions.

BoolQ is a component of the SuperGLUE ensemble.

BoolQ

Abbreviation for Boolean Questions.

boosting

A machine learning technique that iteratively combines a set of simple and not very accurate classification models (referred to as "weak classifiers") into a classification model with high accuracy (a "strong classifier") by upweighting the examples that the model is currently misclassifying.

See Gradient Boosted Decision Trees? in the Decision Forests course for more information.

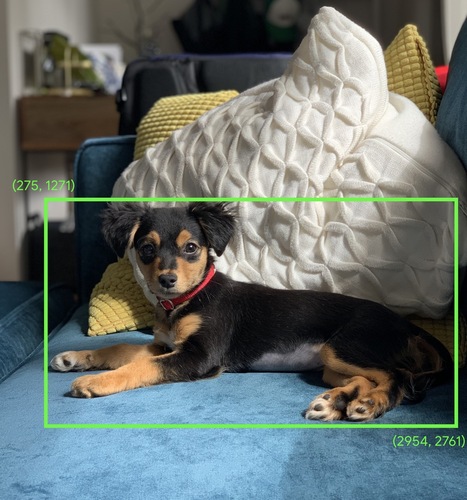

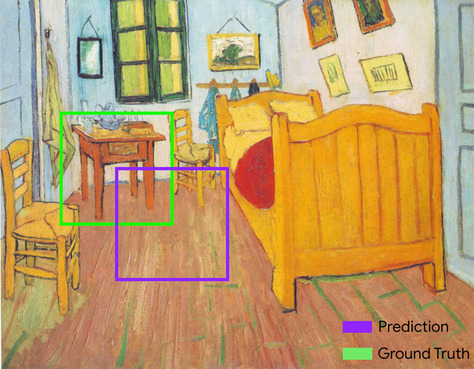

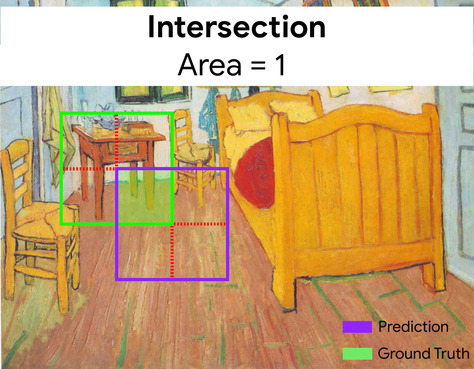

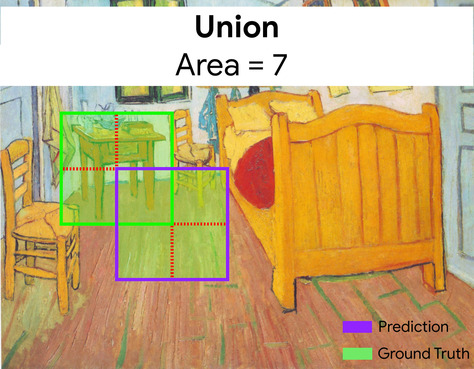

bounding box

In an image, the (x, y) coordinates of a rectangle around an area of interest, such as the dog in the image below.

broadcasting

Expanding the shape of an operand in a matrix math operation to dimensions compatible for that operation. For example, linear algebra requires that the two operands in a matrix addition operation must have the same dimensions. Consequently, you can't add a matrix of shape (m, n) to a vector of length n. Broadcasting enables this operation by virtually expanding the vector of length n to a matrix of shape (m, n) by replicating the same values down each column.

See the following description of broadcasting in NumPy for more details.

bucketing

Converting a single feature into multiple binary features called buckets or bins, typically based on a value range. The chopped feature is typically a continuous feature.

For example, instead of representing temperature as a single continuous floating-point feature, you could chop ranges of temperatures into discrete buckets, such as:

- <= 10 degrees Celsius would be the "cold" bucket.

- 11 - 24 degrees Celsius would be the "temperate" bucket.

- >= 25 degrees Celsius would be the "warm" bucket.

The model will treat every value in the same bucket identically. For

example, the values 13 and 22 are both in the temperate bucket, so the

model treats the two values identically.

See Numerical data: Binning in Machine Learning Crash Course for more information.

C

calibration layer

A post-prediction adjustment, typically to account for prediction bias. The adjusted predictions and probabilities should match the distribution of an observed set of labels.

candidate generation

The initial set of recommendations chosen by a recommendation system. For example, consider a bookstore that offers 100,000 titles. The candidate generation phase creates a much smaller list of suitable books for a particular user, say 500. But even 500 books is way too many to recommend to a user. Subsequent, more expensive, phases of a recommendation system (such as scoring and re-ranking) reduce those 500 to a much smaller, more useful set of recommendations.

See Candidate generation overview in the Recommendation Systems course for more information.

candidate sampling

A training-time optimization that calculates a probability for all the positive labels, using, for example, softmax, but only for a random sample of negative labels. For instance, given an example labeled beagle and dog, candidate sampling computes the predicted probabilities and corresponding loss terms for:

- beagle

- dog

- a random subset of the remaining negative classes (for example, cat, lollipop, fence).

The idea is that the negative classes can learn from less frequent negative reinforcement as long as positive classes always get proper positive reinforcement, and this is indeed observed empirically.

Candidate sampling is more computationally efficient than training algorithms that compute predictions for all negative classes, particularly when the number of negative classes is very large.

categorical data

Features having a specific set of possible values. For example,

consider a categorical feature named traffic-light-state, which can only

have one of the following three possible values:

redyellowgreen

By representing traffic-light-state as a categorical feature,

a model can learn the

differing impacts of red, green, and yellow on driver behavior.

Categorical features are sometimes called discrete features.

Contrast with numerical data.

See Working with categorical data in Machine Learning Crash Course for more information.

causal language model

Synonym for unidirectional language model.

See bidirectional language model to contrast different directional approaches in language modeling.

CB

Abbreviation for CommitmentBank.

centroid

The center of a cluster as determined by a k-means or k-median algorithm. For example, if k is 3, then the k-means or k-median algorithm finds 3 centroids.

See Clustering algorithms in the Clustering course for more information.

centroid-based clustering

A category of clustering algorithms that organizes data into nonhierarchical clusters. k-means is the most widely used centroid-based clustering algorithm.

Contrast with hierarchical clustering algorithms.

See Clustering algorithms in the Clustering course for more information.

chain-of-thought prompting

A prompt engineering technique that encourages a large language model (LLM) to explain its reasoning, step by step. For example, consider the following prompt, paying particular attention to the second sentence:

How many g forces would a driver experience in a car that goes from 0 to 60 miles per hour in 7 seconds? In the answer, show all relevant calculations.

The LLM's response would likely:

- Show a sequence of physics formulas, plugging in the values 0, 60, and 7 in appropriate places.

- Explain why it chose those formulas and what the various variables mean.

Chain-of-thought prompting forces the LLM to perform all the calculations, which might lead to a more correct answer. In addition, chain-of-thought prompting enables the user to examine the LLM's steps to determine whether or not the answer makes sense.

Character N-gram F-score (ChrF)

A metric to evaluate machine translation models. Character N-gram F-score determines the degree to which N-grams in reference text overlap the N-grams in an ML model's generated text.

Character N-gram F-score is similar to metrics in the ROUGE and BLEU families, except that:

- Character N-gram F-score operates on character N-grams.

- ROUGE and BLEU operate on word N-grams or tokens.

chat

The contents of a back-and-forth dialogue with an ML system, typically a large language model. The previous interaction in a chat (what you typed and how the large language model responded) becomes the context for subsequent parts of the chat.

A chatbot is an application of a large language model.

checkpoint

Data that captures the state of a model's parameters either during training or after training is completed. For example, during training, you can:

- Stop training, perhaps intentionally or perhaps as the result of certain errors.

- Capture the checkpoint.

- Later, reload the checkpoint, possibly on different hardware.

- Restart training.

Choice of Plausible Alternatives (COPA)

A dataset for evaluating how well an LLM can identify the better of two alternative answers to a premise. Each of the challenges in the dataset consists of three components:

- A premise, which is typically a statement followed by a question

- Two possible answers to the question posed in the premise, one of which is correct and the other incorrect

- The correct answer

For example:

- Premise: The man broke his toe. What was the CAUSE of this?

- Possible answers:

- He got a hole in his sock.

- He dropped a hammer on his foot.

- Correct answer: 2

COPA is a component of the SuperGLUE ensemble.

class

A category that a label can belong to. For example:

- In a binary classification model that detects spam, the two classes might be spam and not spam.

- In a multi-class classification model that identifies dog breeds, the classes might be poodle, beagle, pug, and so on.

A classification model predicts a class. In contrast, a regression model predicts a number rather than a class.

See Classification in Machine Learning Crash Course for more information.

class-balanced dataset

A dataset containing categorical labels in which the number of instances of each category is approximately equal. For example, consider a botanical dataset whose binary label can be either native plant or nonnative plant:

- A dataset with 515 native plants and 485 nonnative plants is a class-balanced dataset.

- A dataset with 875 native plants and 125 nonnative plants is a class-imbalanced dataset.

A formal dividing line between class-balanced datasets and class-imbalanced datasets doesn't exist. The distinction only becomes important when a model trained on a highly class-imbalanced dataset can't converge. See Datasets: imbalanced datasets in Machine Learning Crash Course for details.

classification model

A model whose prediction is a class. For example, the following are all classification models:

- A model that predicts an input sentence's language (French? Spanish? Italian?).

- A model that predicts tree species (Maple? Oak? Baobab?).

- A model that predicts the positive or negative class for a particular medical condition.

In contrast, regression models predict numbers rather than classes.

Two common types of classification models are:

classification threshold

In a binary classification, a number between 0 and 1 that converts the raw output of a logistic regression model into a prediction of either the positive class or the negative class. Note that the classification threshold is a value that a human chooses, not a value chosen by model training.

A logistic regression model outputs a raw value between 0 and 1. Then:

- If this raw value is greater than the classification threshold, then the positive class is predicted.

- If this raw value is less than the classification threshold, then the negative class is predicted.

For example, suppose the classification threshold is 0.8. If the raw value is 0.9, then the model predicts the positive class. If the raw value is 0.7, then the model predicts the negative class.

The choice of classification threshold strongly influences the number of false positives and false negatives.

See Thresholds and the confusion matrix in Machine Learning Crash Course for more information.

classifier

A casual term for a classification model.

class-imbalanced dataset

A dataset for a classification in which the total number of labels of each class differs significantly. For example, consider a binary classification dataset whose two labels are divided as follows:

- 1,000,000 negative labels

- 10 positive labels

The ratio of negative to positive labels is 100,000 to 1, so this is a class-imbalanced dataset.

In contrast, the following dataset is class-balanced because the ratio of negative labels to positive labels is relatively close to 1:

- 517 negative labels

- 483 positive labels

Multi-class datasets can also be class-imbalanced. For example, the following multi-class classification dataset is also class-imbalanced because one label has far more examples than the other two:

- 1,000,000 labels with class "green"

- 200 labels with class "purple"

- 350 labels with class "orange"

Training class-imbalanced datasets can present special challenges. See Imbalanced datasets in Machine Learning Crash Course for details.

See also entropy, majority class, and minority class.

clipping

A technique for handling outliers by doing either or both of the following:

- Reducing feature values that are greater than a maximum threshold down to that maximum threshold.

- Increasing feature values that are less than a minimum threshold up to that minimum threshold.

For example, suppose that <0.5% of values for a particular feature fall outside the range 40–60. In this case, you could do the following:

- Clip all values over 60 (the maximum threshold) to be exactly 60.

- Clip all values under 40 (the minimum threshold) to be exactly 40.

Outliers can damage models, sometimes causing weights to overflow during training. Some outliers can also dramatically spoil metrics like accuracy. Clipping is a common technique to limit the damage.

Gradient clipping forces gradient values within a designated range during training.

See Numerical data: Normalization in Machine Learning Crash Course for more information.

Cloud TPU

A specialized hardware accelerator designed to speed up machine learning workloads on Google Cloud.

clustering

Grouping related examples, particularly during unsupervised learning. Once all the examples are grouped, a human can optionally supply meaning to each cluster.

Many clustering algorithms exist. For example, the k-means algorithm clusters examples based on their proximity to a centroid, as in the following diagram:

A human researcher could then review the clusters and, for example, label cluster 1 as "dwarf trees" and cluster 2 as "full-size trees."

As another example, consider a clustering algorithm based on an example's distance from a center point, illustrated as follows:

See the Clustering course for more information.

co-adaptation

An undesirable behavior in which neurons predict patterns in training data by relying almost exclusively on outputs of specific other neurons instead of relying on the network's behavior as a whole. When the patterns that cause co-adaptation are not present in validation data, then co-adaptation causes overfitting. Dropout regularization reduces co-adaptation because dropout ensures neurons cannot rely solely on specific other neurons.

collaborative filtering

Making predictions about the interests of one user based on the interests of many other users. Collaborative filtering is often used in recommendation systems.

See Collaborative filtering in the Recommendation Systems course for more information.

CommitmentBank (CB)

A dataset for evaluating an LLM's proficiency in determining whether the author of a passage believes a target clause within that passage. Each entry in the dataset contains:

- A passage

- A target clause within that passage

- A Boolean value indicating whether the passage's author believes the target clause

For example:

- Passage: What fun to hear Artemis laugh. She's such a serious child. I didn't know she had a sense of humor.

- Target clause: she had a sense of humor

- Boolean: True, which means the author believes the target clause

CommitmentBank is a component of the SuperGLUE ensemble.

compact model

Any small model designed to run on small devices with limited computational resources. For example, compact models can run on mobile phones, tablets, or embedded systems.

compute

(Noun) The computational resources used by a model or system, such as processing power, memory, and storage.

See accelerator chips.

concept drift

A shift in the relationship between features and the label. Over time, concept drift reduces a model's quality.

During training, the model learns the relationship between the features and their labels in the training set. If the labels in the training set are good proxies for the real-world, then the model should make good real world predictions. However, due to concept drift, the model's predictions tend to degrade over time.

For example, consider a binary classification model that predicts whether or not a certain car model is "fuel efficient." That is, the features could be:

- car weight

- engine compression

- transmission type

while the label is either:

- fuel efficient

- not fuel efficient

However, the concept of "fuel efficient car" keeps changing. A car model labeled fuel efficient in 1994 would almost certainly be labeled not fuel efficient in 2024. A model suffering from concept drift tends to make less and less useful predictions over time.

Compare and contrast with nonstationarity.

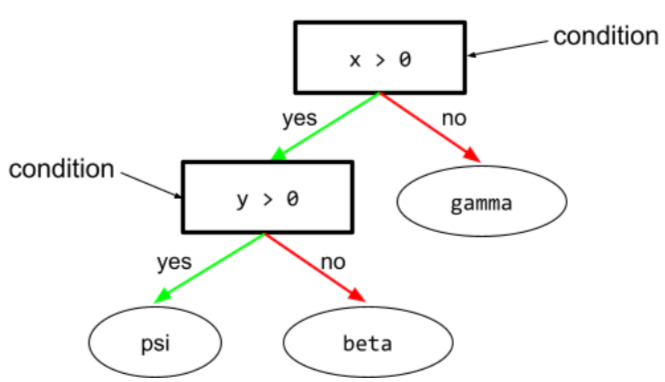

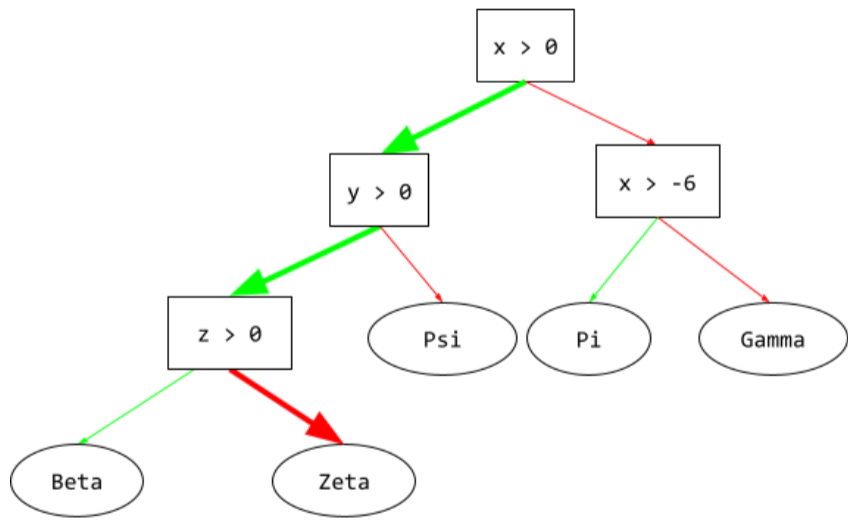

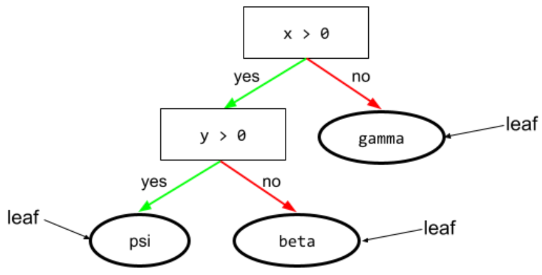

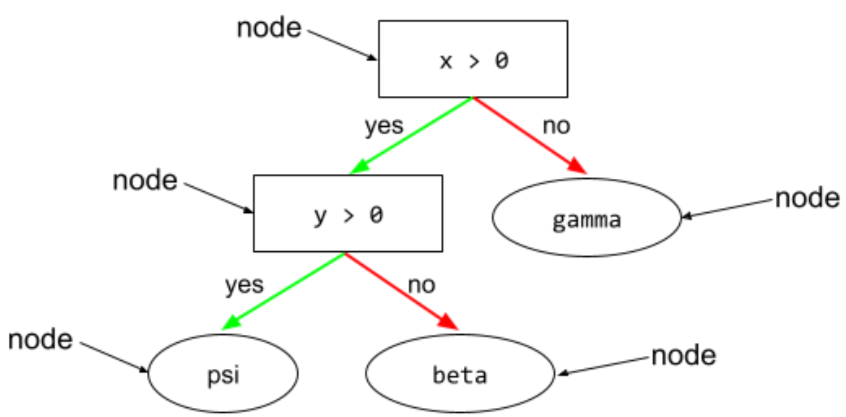

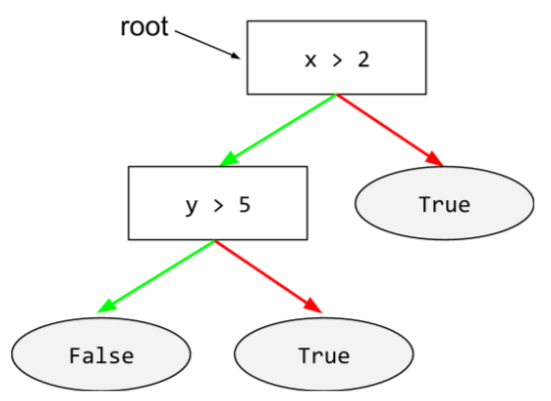

condition

In a decision tree, any node that performs a test. For example, the following decision tree contains two conditions:

A condition is also called a split or a test.

Contrast condition with leaf.

See also:

See Types of conditions in the Decision Forests course for more information.

confabulation

Synonym for hallucination.

Confabulation is probably a more technically accurate term than hallucination. However, hallucination became popular first.

configuration

The process of assigning the initial property values used to train a model, including:

- the model's composing layers

- the location of the data

- hyperparameters such as:

In machine learning projects, configuration can be done through a special configuration file or using configuration libraries such as the following:

confirmation bias

The tendency to search for, interpret, favor, and recall information in a way that confirms one's pre-existing beliefs or hypotheses. Machine learning developers may inadvertently collect or label data in ways that influence an outcome supporting their existing beliefs. Confirmation bias is a form of implicit bias.

Experimenter's bias is a form of confirmation bias in which an experimenter continues training models until a pre-existing hypothesis is confirmed.

confusion matrix

An NxN table that summarizes the number of correct and incorrect predictions that a classification model made. For example, consider the following confusion matrix for a binary classification model:

| Tumor (predicted) | Non-Tumor (predicted) | |

|---|---|---|

| Tumor (ground truth) | 18 (TP) | 1 (FN) |

| Non-Tumor (ground truth) | 6 (FP) | 452 (TN) |

The preceding confusion matrix shows the following:

- Of the 19 predictions in which ground truth was Tumor, the model correctly classified 18 and incorrectly classified 1.

- Of the 458 predictions in which ground truth was Non-Tumor, the model correctly classified 452 and incorrectly classified 6.

The confusion matrix for a multi-class classification problem can help you identify patterns of mistakes. For example, consider the following confusion matrix for a 3-class multi-class classification model that categorizes three different iris types (Virginica, Versicolor, and Setosa). When the ground truth was Virginica, the confusion matrix shows that the model was far more likely to mistakenly predict Versicolor than Setosa:

| Setosa (predicted) | Versicolor (predicted) | Virginica (predicted) | |

|---|---|---|---|

| Setosa (ground truth) | 88 | 12 | 0 |

| Versicolor (ground truth) | 6 | 141 | 7 |

| Virginica (ground truth) | 2 | 27 | 109 |

As yet another example, a confusion matrix could reveal that a model trained to recognize handwritten digits tends to mistakenly predict 9 instead of 4, or mistakenly predict 1 instead of 7.

Confusion matrixes contain sufficient information to calculate a variety of performance metrics, including precision and recall.

constituency parsing

Dividing a sentence into smaller grammatical structures ("constituents"). A later part of the ML system, such as a natural language understanding model, can parse the constituents more easily than the original sentence. For example, consider the following sentence:

My friend adopted two cats.

A constituency parser can divide this sentence into the following two constituents:

- My friend is a noun phrase.

- adopted two cats is a verb phrase.

These constituents can be further subdivided into smaller constituents. For example, the verb phrase

adopted two cats

could be further subdivided into:

- adopted is a verb.

- two cats is another noun phrase.

contextualized language embedding

An embedding that comes close to "understanding" words and phrases in ways that fluent human speakers can. Contextualized language embeddings can understand complex syntax, semantics, and context.

For example, consider embeddings of the English word cow. Older embeddings such as word2vec can represent English words such that the distance in the embedding space from cow to bull is similar to the distance from ewe (female sheep) to ram (male sheep) or from female to male. Contextualized language embeddings can go a step further by recognizing that English speakers sometimes casually use the word cow to mean either cow or bull.

context window

The number of tokens a model can process in a given prompt. The larger the context window, the more information the model can use to provide coherent and consistent responses to the prompt.

continuous feature

A floating-point feature with an infinite range of possible values, such as temperature or weight.

Contrast with discrete feature.

convenience sampling

Using a dataset not gathered scientifically in order to run quick experiments. Later on, it's essential to switch to a scientifically gathered dataset.

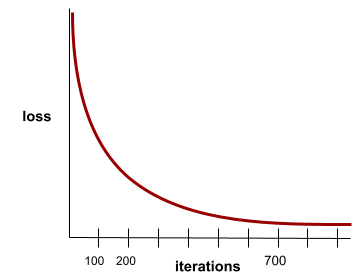

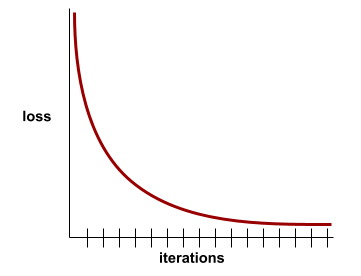

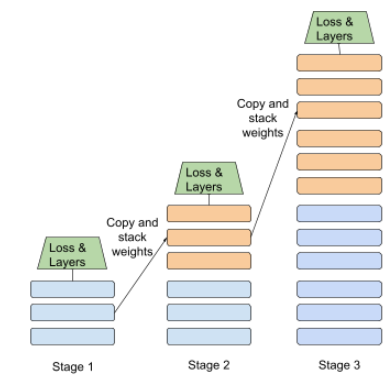

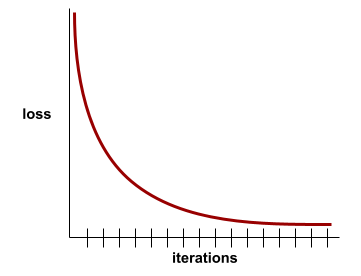

convergence

A state reached when loss values change very little or not at all with each iteration. For example, the following loss curve suggests convergence at around 700 iterations:

A model converges when additional training won't improve the model.

In deep learning, loss values sometimes stay constant or nearly so for many iterations before finally descending. During a long period of constant loss values, you may temporarily get a false sense of convergence.

See also early stopping.

See Model convergence and loss curves in Machine Learning Crash Course for more information.

conversational coding

An iterative dialog between you and a generative AI model for the purpose of creating software. You issue a prompt describing some software. Then, the model uses that description to generate code. Then, you issue a new prompt to address the flaws in the previous prompt or in the generated code, and the model generates updated code. You two keep going back and forth until the generated software is good enough.

Conversation coding is essentially the original meaning of vibe coding.

Contrast with specificational coding.

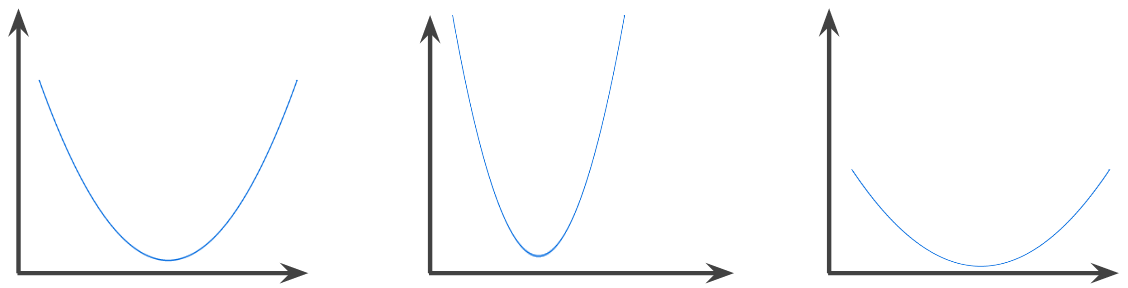

convex function

A function in which the region above the graph of the function is a convex set. The prototypical convex function is shaped something like the letter U. For example, the following are all convex functions:

In contrast, the following function is not convex. Notice how the region above the graph is not a convex set:

A strictly convex function has exactly one local minimum point, which is also the global minimum point. The classic U-shaped functions are strictly convex functions. However, some convex functions (for example, straight lines) are not U-shaped.

See Convergence and convex functions in Machine Learning Crash Course for more information.

convex optimization

The process of using mathematical techniques such as gradient descent to find the minimum of a convex function. A great deal of research in machine learning has focused on formulating various problems as convex optimization problems and in solving those problems more efficiently.

For complete details, see Boyd and Vandenberghe, Convex Optimization.

convex set

A subset of Euclidean space such that a line drawn between any two points in the subset remains completely within the subset. For instance, the following two shapes are convex sets:

In contrast, the following two shapes are not convex sets:

convolution

In mathematics, casually speaking, a mixture of two functions. In machine learning, a convolution mixes the convolutional filter and the input matrix in order to train weights.

The term "convolution" in machine learning is often a shorthand way of referring to either convolutional operation or convolutional layer.

Without convolutions, a machine learning algorithm would have to learn a separate weight for every cell in a large tensor. For example, a machine learning algorithm training on 2K x 2K images would be forced to find 4M separate weights. Thanks to convolutions, a machine learning algorithm only has to find weights for every cell in the convolutional filter, dramatically reducing the memory needed to train the model. When the convolutional filter is applied, it is simply replicated across cells such that each is multiplied by the filter.

convolutional filter

One of the two actors in a convolutional operation. (The other actor is a slice of an input matrix.) A convolutional filter is a matrix having the same rank as the input matrix, but a smaller shape. For example, given a 28x28 input matrix, the filter could be any 2D matrix smaller than 28x28.

In photographic manipulation, all the cells in a convolutional filter are typically set to a constant pattern of ones and zeroes. In machine learning, convolutional filters are typically seeded with random numbers and then the network trains the ideal values.

convolutional layer

A layer of a deep neural network in which a convolutional filter passes along an input matrix. For example, consider the following 3x3 convolutional filter:

![A 3x3 matrix with the following values: [[0,1,0], [1,0,1], [0,1,0]]](/static/machine-learning/glossary/images/ConvolutionalFilter33.svg)

The following animation shows a convolutional layer consisting of 9 convolutional operations involving the 5x5 input matrix. Notice that each convolutional operation works on a different 3x3 slice of the input matrix. The resulting 3x3 matrix (on the right) consists of the results of the 9 convolutional operations:

![An animation showing two matrixes. The first matrix is the 5x5

matrix: [[128,97,53,201,198], [35,22,25,200,195],

[37,24,28,197,182], [33,28,92,195,179], [31,40,100,192,177]].

The second matrix is the 3x3 matrix:

[[181,303,618], [115,338,605], [169,351,560]].

The second matrix is calculated by applying the convolutional

filter [[0, 1, 0], [1, 0, 1], [0, 1, 0]] across

different 3x3 subsets of the 5x5 matrix.](/static/machine-learning/glossary/images/AnimatedConvolution.gif)

convolutional neural network

A neural network in which at least one layer is a convolutional layer. A typical convolutional neural network consists of some combination of the following layers:

Convolutional neural networks have had great success in certain kinds of problems, such as image recognition.

convolutional operation

The following two-step mathematical operation:

- Element-wise multiplication of the convolutional filter and a slice of an input matrix. (The slice of the input matrix has the same rank and size as the convolutional filter.)

- Summation of all the values in the resulting product matrix.

For example, consider the following 5x5 input matrix:

![The 5x5 matrix: [[128,97,53,201,198], [35,22,25,200,195],

[37,24,28,197,182], [33,28,92,195,179], [31,40,100,192,177]].](/static/machine-learning/glossary/images/ConvolutionalLayerInputMatrix.svg)

Now imagine the following 2x2 convolutional filter:

![The 2x2 matrix: [[1, 0], [0, 1]]](/static/machine-learning/glossary/images/ConvolutionalLayerFilter.svg)

Each convolutional operation involves a single 2x2 slice of the input matrix. For example, suppose we use the 2x2 slice at the top-left of the input matrix. So, the convolution operation on this slice looks as follows:

![Applying the convolutional filter [[1, 0], [0, 1]] to the top-left

2x2 section of the input matrix, which is [[128,97], [35,22]].

The convolutional filter leaves the 128 and 22 intact, but zeroes

out the 97 and 35. Consequently, the convolution operation yields

the value 150 (128+22).](/static/machine-learning/glossary/images/ConvolutionalLayerOperation.svg)

A convolutional layer consists of a series of convolutional operations, each acting on a different slice of the input matrix.

COPA

Abbreviation for Choice of Plausible Alternatives.

cost

Synonym for loss.

co-training

A semi-supervised learning approach particularly useful when all of the following conditions are true:

- The ratio of unlabeled examples to labeled examples in the dataset is high.

- This is a classification problem (binary or multi-class).

- The dataset contains two different sets of predictive features that are independent of each other and complementary.

Co-training essentially amplifies independent signals into a stronger signal. For example, consider a classification model that categorizes individual used cars as either Good or Bad. One set of predictive features might focus on aggregate characteristics such as the year, make, and model of the car; another set of predictive features might focus on the previous owner's driving record and the car's maintenance history.

The seminal paper on co-training is Combining Labeled and Unlabeled Data with Co-Training by Blum and Mitchell.

counterfactual fairness

A fairness metric that checks whether a classification model produces the same result for one individual as it does for another individual who is identical to the first, except with respect to one or more sensitive attributes. Evaluating a classification model for counterfactual fairness is one method for surfacing potential sources of bias in a model.

See either of the following for more information:

- Fairness: Counterfactual fairness in Machine Learning Crash Course.

- When Worlds Collide: Integrating Different Counterfactual Assumptions in Fairness

coverage bias

See selection bias.

crash blossom

A sentence or phrase with an ambiguous meaning. Crash blossoms present a significant problem in natural language understanding. For example, the headline Red Tape Holds Up Skyscraper is a crash blossom because an NLU model could interpret the headline literally or figuratively.

critic

Synonym for Deep Q-Network.

cross-entropy

A generalization of Log Loss to multi-class classification problems. Cross-entropy quantifies the difference between two probability distributions. See also perplexity.

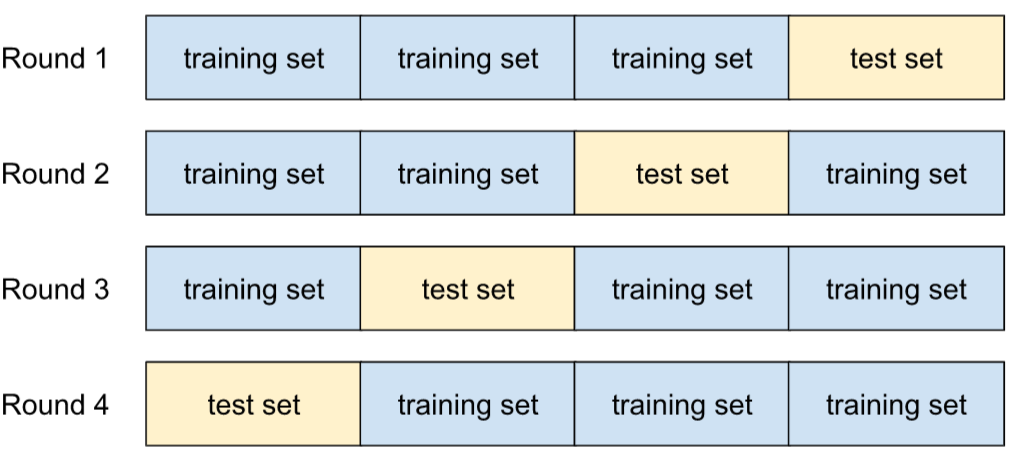

cross-validation

A mechanism for estimating how well a model would generalize to new data by testing the model against one or more non-overlapping data subsets withheld from the training set.

cumulative distribution function (CDF)

A function that defines the frequency of samples less than or equal to a target value. For example, consider a normal distribution of continuous values. A CDF tells you that approximately 50% of samples should be less than or equal to the mean and that approximately 84% of samples should be less than or equal to one standard deviation above the mean.

D

data analysis

Obtaining an understanding of data by considering samples, measurement, and visualization. Data analysis can be particularly useful when a dataset is first received, before one builds the first model. It is also crucial in understanding experiments and debugging problems with the system.

data augmentation

Artificially boosting the range and number of training examples by transforming existing examples to create additional examples. For example, suppose images are one of your features, but your dataset doesn't contain enough image examples for the model to learn useful associations. Ideally, you'd add enough labeled images to your dataset to enable your model to train properly. If that's not possible, data augmentation can rotate, stretch, and reflect each image to produce many variants of the original picture, possibly yielding enough labeled data to enable excellent training.

DataFrame

A popular pandas data type for representing datasets in memory.

A DataFrame is analogous to a table or a spreadsheet. Each column of a DataFrame has a name (a header), and each row is identified by a unique number.

Each column in a DataFrame is structured like a 2D array, except that each column can be assigned its own data type.

See also the official pandas.DataFrame reference page.

data parallelism

A way of scaling training or inference that replicates an entire model onto multiple devices and then passes a subset of the input data to each device. Data parallelism can enable training and inference on very large batch sizes; however, data parallelism requires that the model be small enough to fit on all devices.

Data parallelism typically speeds training and inference.

See also model parallelism.

Dataset API (tf.data)

A high-level TensorFlow API for reading data and

transforming it into a form that a machine learning algorithm requires.

A tf.data.Dataset object represents a sequence of elements, in which

each element contains one or more Tensors. A tf.data.Iterator

object provides access to the elements of a Dataset.

data set or dataset

A collection of raw data, commonly (but not exclusively) organized in one of the following formats:

- a spreadsheet

- a file in CSV (comma-separated values) format

decision boundary

The separator between classes learned by a model in a binary class or multi-class classification problems. For example, in the following image representing a binary classification problem, the decision boundary is the frontier between the orange class and the blue class:

decision forest

A model created from multiple decision trees. A decision forest makes a prediction by aggregating the predictions of its decision trees. Popular types of decision forests include random forests and gradient boosted trees.

See the Decision Forests section in the Decision Forests course for more information.

decision threshold

Synonym for classification threshold.

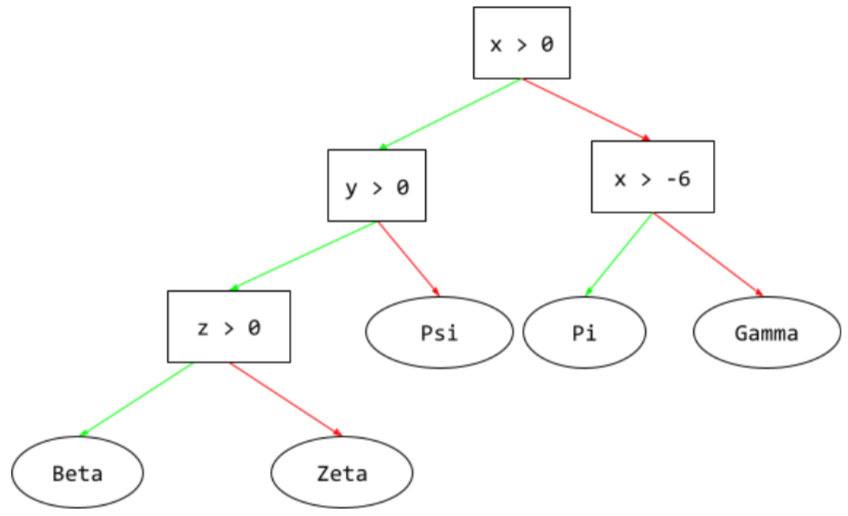

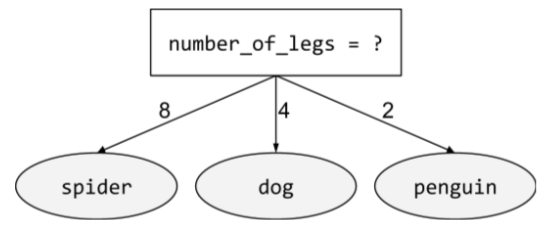

decision tree

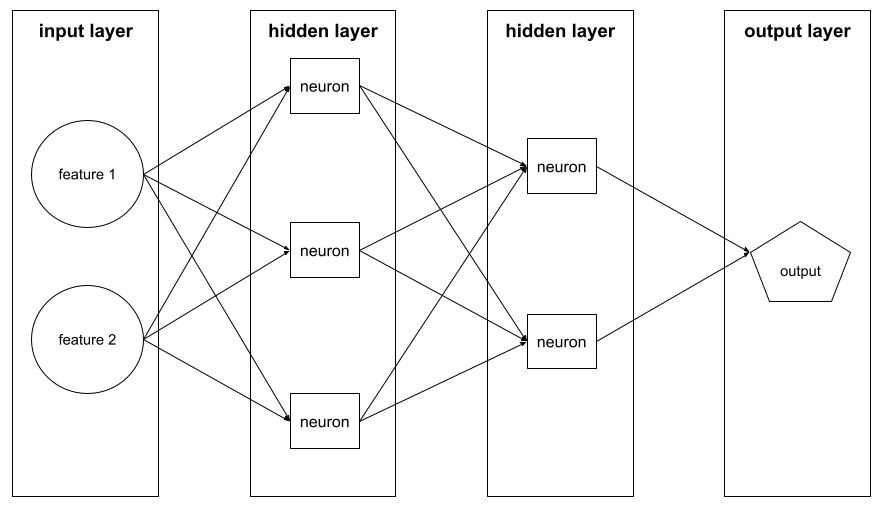

A supervised learning model composed of a set of conditions and leaves organized hierarchically. For example, the following is a decision tree:

decoder

In general, any ML system that converts from a processed, dense, or internal representation to a more raw, sparse, or external representation.

Decoders are often a component of a larger model, where they are frequently paired with an encoder.

In sequence-to-sequence tasks, a decoder starts with the internal state generated by the encoder to predict the next sequence.

Refer to Transformer for the definition of a decoder within the Transformer architecture.

See Large language models in Machine Learning Crash Course for more information.

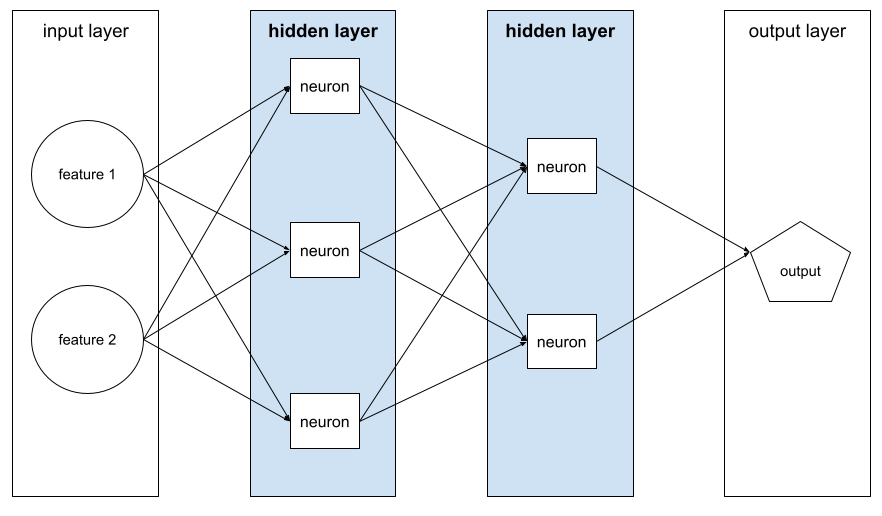

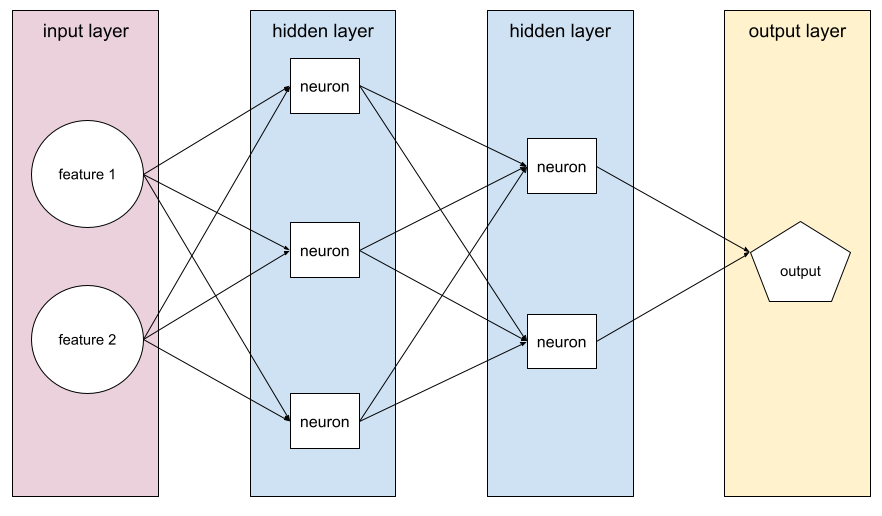

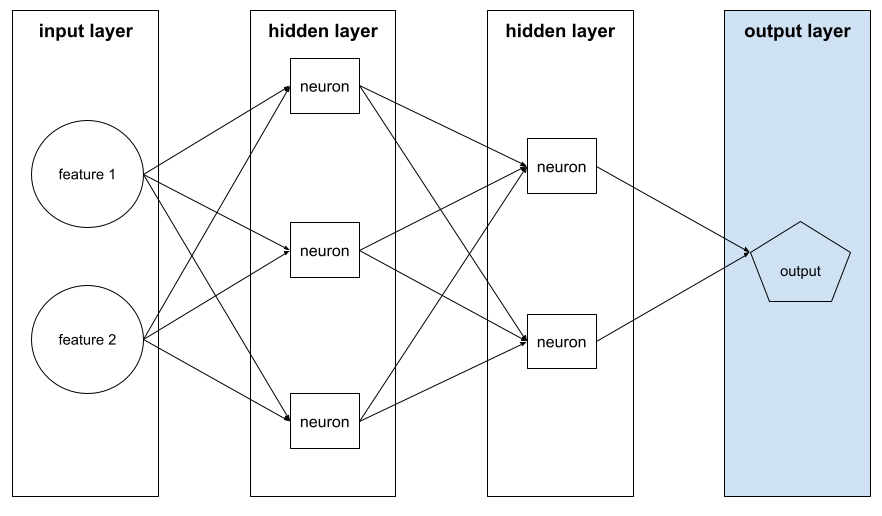

deep model

A neural network containing more than one hidden layer.

A deep model is also called a deep neural network.

Contrast with wide model.

deep neural network

Synonym for deep model.

Deep Q-Network (DQN)

In Q-learning, a deep neural network that predicts Q-functions.

Critic is a synonym for Deep Q-Network.

demographic parity

A fairness metric that is satisfied if the results of a model's classification are not dependent on a given sensitive attribute.

For example, if both Lilliputians and Brobdingnagians apply to Glubbdubdrib University, demographic parity is achieved if the percentage of Lilliputians admitted is the same as the percentage of Brobdingnagians admitted, irrespective of whether one group is on average more qualified than the other.

Contrast with equalized odds and equality of opportunity, which permit classification results in aggregate to depend on sensitive attributes, but don't permit classification results for certain specified ground truth labels to depend on sensitive attributes. See "Attacking discrimination with smarter machine learning" for a visualization exploring the tradeoffs when optimizing for demographic parity.

See Fairness: demographic parity in Machine Learning Crash Course for more information.

denoising

A common approach to self-supervised learning in which:

Denoising enables learning from unlabeled examples. The original dataset serves as the target or label and the noisy data as the input.

Some masked language models use denoising as follows:

- Noise is artificially added to an unlabeled sentence by masking some of the tokens.

- The model tries to predict the original tokens.

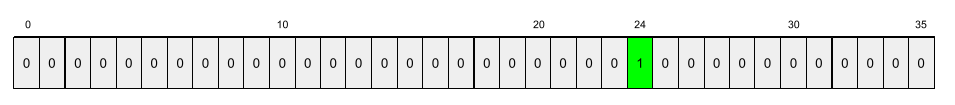

dense feature

A feature in which most or all values are nonzero, typically a Tensor of floating-point values. For example, the following 10-element Tensor is dense because 9 of its values are nonzero:

| 8 | 3 | 7 | 5 | 2 | 4 | 0 | 4 | 9 | 6 |

Contrast with sparse feature.

dense layer

Synonym for fully connected layer.

depth

The sum of the following in a neural network:

- the number of hidden layers

- the number of output layers, which is typically 1

- the number of any embedding layers

For example, a neural network with five hidden layers and one output layer has a depth of 6.

Notice that the input layer doesn't influence depth.

depthwise separable convolutional neural network (sepCNN)

A convolutional neural network architecture based on Inception, but where Inception modules are replaced with depthwise separable convolutions. Also known as Xception.

A depthwise separable convolution (also abbreviated as separable convolution) factors a standard 3D convolution into two separate convolution operations that are more computationally efficient: first, a depthwise convolution, with a depth of 1 (n ✕ n ✕ 1), and then second, a pointwise convolution, with length and width of 1 (1 ✕ 1 ✕ n).

To learn more, see Xception: Deep Learning with Depthwise Separable Convolutions.

derived label

Synonym for proxy label.

device

An overloaded term with the following two possible definitions:

- A category of hardware that can run a TensorFlow session, including CPUs, GPUs, and TPUs.

- When training an ML model on accelerator chips (GPUs or TPUs), the part of the system that actually manipulates tensors and embeddings. The device runs on accelerator chips. In contrast, the host typically runs on a CPU.

differential privacy

In machine learning, an anonymization approach to protect any sensitive data (for example, an individual's personal information) included in a model's training set from being exposed. This approach ensures that the model doesn't learn or remember much about a specific individual. This is accomplished by sampling and adding noise during model training to obscure individual data points, mitigating the risk of exposing sensitive training data.

Differential privacy is also used outside of machine learning. For example, data scientists sometimes use differential privacy to protect individual privacy when computing product usage statistics for different demographics.

dimension reduction

Decreasing the number of dimensions used to represent a particular feature in a feature vector, typically by converting to an embedding vector.

dimensions

Overloaded term having any of the following definitions:

The number of levels of coordinates in a Tensor. For example:

- A scalar has zero dimensions; for example,

["Hello"]. - A vector has one dimension; for example,

[3, 5, 7, 11]. - A matrix has two dimensions; for example,

[[2, 4, 18], [5, 7, 14]]. You can uniquely specify a particular cell in a one-dimensional vector with one coordinate; you need two coordinates to uniquely specify a particular cell in a two-dimensional matrix.

- A scalar has zero dimensions; for example,

The number of entries in a feature vector.

The number of elements in an embedding layer.

direct prompting

Synonym for zero-shot prompting.

discrete feature

A feature with a finite set of possible values. For example, a feature whose values may only be animal, vegetable, or mineral is a discrete (or categorical) feature.

Contrast with continuous feature.

discriminative model

A model that predicts labels from a set of one or more features. More formally, discriminative models define the conditional probability of an output given the features and weights; that is:

p(output | features, weights)

For example, a model that predicts whether an email is spam from features and weights is a discriminative model.

The vast majority of supervised learning models, including classification and regression models, are discriminative models.

Contrast with generative model.

discriminator

A system that determines whether examples are real or fake.

Alternatively, the subsystem within a generative adversarial network that determines whether the examples created by the generator are real or fake.

See The discriminator in the GAN course for more information.

disparate impact

Making decisions about people that impact different population subgroups disproportionately. This usually refers to situations where an algorithmic decision-making process harms or benefits some subgroups more than others.

For example, suppose an algorithm that determines a Lilliputian's eligibility for a miniature-home loan is more likely to classify them as "ineligible" if their mailing address contains a certain postal code. If Big-Endian Lilliputians are more likely to have mailing addresses with this postal code than Little-Endian Lilliputians, then this algorithm may result in disparate impact.

Contrast with disparate treatment, which focuses on disparities that result when subgroup characteristics are explicit inputs to an algorithmic decision-making process.

disparate treatment

Factoring subjects' sensitive attributes into an algorithmic decision-making process such that different subgroups of people are treated differently.

For example, consider an algorithm that determines Lilliputians' eligibility for a miniature-home loan based on the data they provide in their loan application. If the algorithm uses a Lilliputian's affiliation as Big-Endian or Little-Endian as an input, it is enacting disparate treatment along that dimension.

Contrast with disparate impact, which focuses on disparities in the societal impacts of algorithmic decisions on subgroups, irrespective of whether those subgroups are inputs to the model.

distillation

The process of reducing the size of one model (known as the teacher) into a smaller model (known as the student) that emulates the original model's predictions as faithfully as possible. Distillation is useful because the smaller model has two key benefits over the larger model (the teacher):

- Faster inference time

- Reduced memory and energy usage

However, the student's predictions are typically not as good as the teacher's predictions.

Distillation trains the student model to minimize a loss function based on the difference between the outputs of the predictions of the student and teacher models.

Compare and contrast distillation with the following terms:

See LLMs: Fine-tuning, distillation, and prompt engineering in Machine Learning Crash Course for more information.

distribution

The frequency and range of different values for a given feature or label. A distribution captures how likely a particular value is.

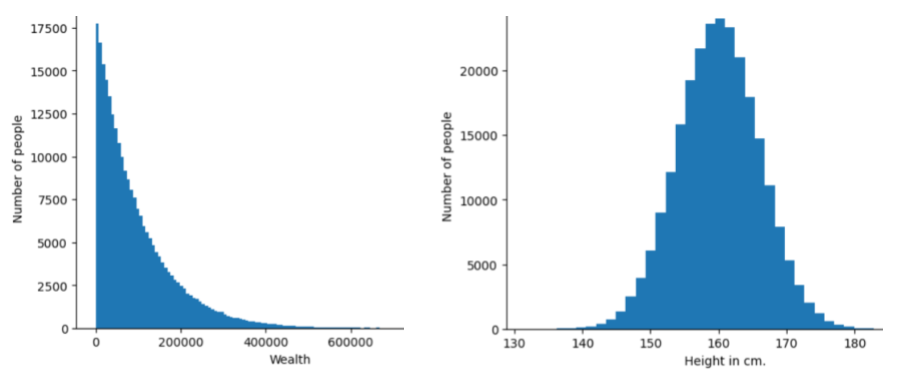

The following image shows histograms of two different distributions:

- On the left, a power law distribution of wealth versus the number of people possessing that wealth.

- On the right, a normal distribution of height versus the number of people possessing that height.

Understanding each feature and label's distribution can help you determine how to normalize values and detect outliers.

The phrase out of distribution refers to a value that doesn't appear in the dataset or is very rare. For example, an image of the planet Saturn would be considered out of distribution for a dataset consisting of cat images.

divisive clustering

downsampling

Overloaded term that can mean either of the following:

- Reducing the amount of information in a feature in order to train a model more efficiently. For example, before training an image recognition model, downsampling high-resolution images to a lower-resolution format.

- Training on a disproportionately low percentage of over-represented class examples in order to improve model training on under-represented classes. For example, in a class-imbalanced dataset, models tend to learn a lot about the majority class and not enough about the minority class. Downsampling helps balance the amount of training on the majority and minority classes.

See Datasets: Imbalanced datasets in Machine Learning Crash Course for more information.

DQN

Abbreviation for Deep Q-Network.

dropout regularization

A form of regularization useful in training neural networks. Dropout regularization removes a random selection of a fixed number of the units in a network layer for a single gradient step. The more units dropped out, the stronger the regularization. This is analogous to training the network to emulate an exponentially large ensemble of smaller networks. For full details, see Dropout: A Simple Way to Prevent Neural Networks from Overfitting.

dynamic

Something done frequently or continuously. The terms dynamic and online are synonyms in machine learning. The following are common uses of dynamic and online in machine learning:

- A dynamic model (or online model) is a model that is retrained frequently or continuously.

- Dynamic training (or online training) is the process of training frequently or continuously.

- Dynamic inference (or online inference) is the process of generating predictions on demand.

dynamic model

A model that is frequently (maybe even continuously) retrained. A dynamic model is a "lifelong learner" that constantly adapts to evolving data. A dynamic model is also known as an online model.

Contrast with static model.

E

eager execution

A TensorFlow programming environment in which operations run immediately. In contrast, operations called in graph execution don't run until they are explicitly evaluated. Eager execution is an imperative interface, much like the code in most programming languages. Eager execution programs are generally far easier to debug than graph execution programs.

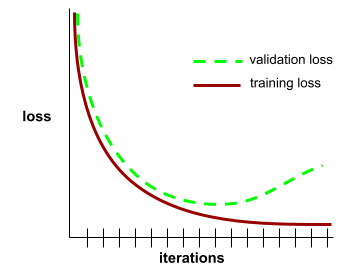

early stopping

A method for regularization that involves ending training before training loss finishes decreasing. In early stopping, you intentionally stop training the model when the loss on a validation dataset starts to increase; that is, when generalization performance worsens.

Contrast with early exit.

earth mover's distance (EMD)

A measure of the relative similarity of two distributions. The lower the earth mover's distance, the more similar the distributions.

edit distance

A measurement of how similar two text strings are to each other. In machine learning, edit distance is useful for the following reasons:

- Edit distance is easy to compute.

- Edit distance can compare two strings known to be similar to each other.

- Edit distance can determine the degree to which different strings are similar to a given string.

Several definitions of edit distance exist, each using different string operations. See Levenshtein distance for an example.

Einsum notation

An efficient notation for describing how two tensors are to be combined. The tensors are combined by multiplying the elements of one tensor by the elements of the other tensor and then summing the products. Einsum notation uses symbols to identify the axes of each tensor, and those same symbols are rearranged to specify the shape of the new resulting tensor.

NumPy provides a common Einsum implementation.

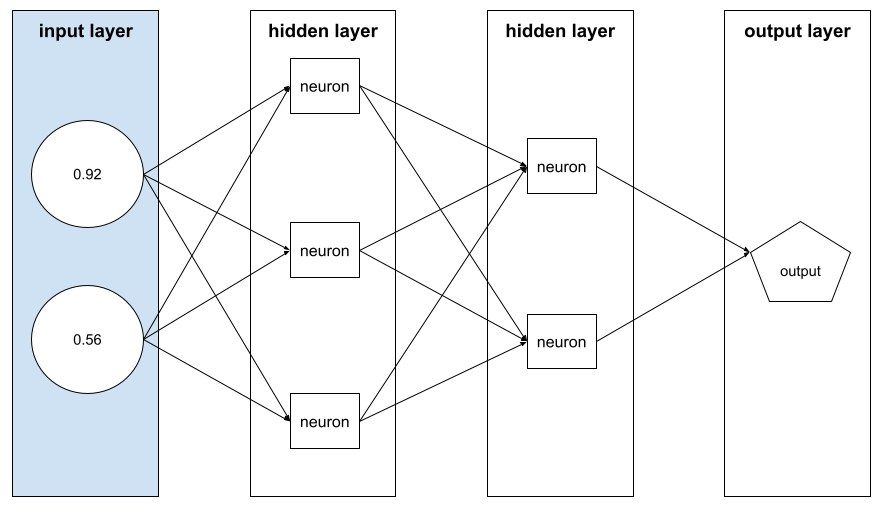

embedding layer

A special hidden layer that trains on a high-dimensional categorical feature to gradually learn a lower dimension embedding vector. An embedding layer enables a neural network to train far more efficiently than training just on the high-dimensional categorical feature.

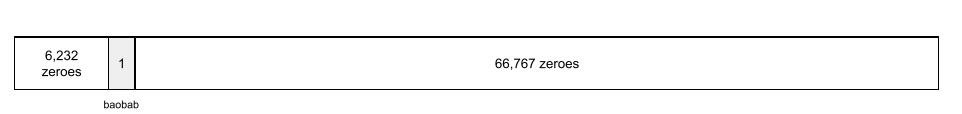

For example, Earth currently supports about 73,000 tree species. Suppose

tree species is a feature in your model, so your model's

input layer includes a one-hot vector 73,000

elements long.

For example, perhaps baobab would be represented something like this:

A 73,000-element array is very long. If you don't add an embedding layer to the model, training is going to be very time consuming due to multiplying 72,999 zeros. Perhaps you pick the embedding layer to consist of 12 dimensions. Consequently, the embedding layer will gradually learn a new embedding vector for each tree species.

In certain situations, hashing is a reasonable alternative to an embedding layer.

See Embeddings in Machine Learning Crash Course for more information.

embedding space

The d-dimensional vector space that features from a higher-dimensional vector space are mapped to. Embedding space is trained to capture structure that is meaningful for the intended application.

The dot product of two embeddings is a measure of their similarity.

embedding vector

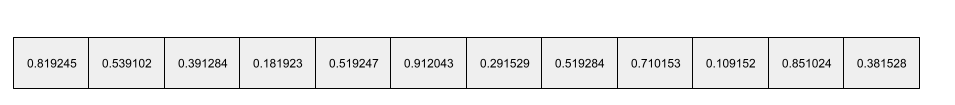

Broadly speaking, an array of floating-point numbers taken from any hidden layer that describe the inputs to that hidden layer. Often, an embedding vector is the array of floating-point numbers trained in an embedding layer. For example, suppose an embedding layer must learn an embedding vector for each of the 73,000 tree species on Earth. Perhaps the following array is the embedding vector for a baobab tree:

An embedding vector is not a bunch of random numbers. An embedding layer determines these values through training, similar to the way a neural network learns other weights during training. Each element of the array is a rating along some characteristic of a tree species. Which element represents which tree species' characteristic? That's very hard for humans to determine.