Page Summary

- The WebP library provides APIs for decoding and encoding WebP images, offering both simple and advanced options for controlling the process.
- Decoding APIs allow for retrieving image information, decoding into various color formats, and advanced features like cropping and rescaling during decoding.
- Encoding APIs support both lossy and lossless compression, with options to customize parameters like quality, speed, and filtering for fine-grained control.
- The advanced encoding API uses the `WebPConfig` and `WebPPicture` structures to manage compression settings and image data, offering flexibility in handling the input and output.
- Developers can leverage these APIs to optimize WebP image compression based on specific quality, size, and speed requirements for their applications.

This section describes the API for the encoder and decoder that are included in the WebP library. This API description pertains to version 1.6.0.

Headers and Libraries

When you install libwebp, a directory named `webp/` will be installed at the typical location for your platform. For example, on Unix platforms, the following header files would be copied to `/usr/local/include/webp/`.

- decode.h
- encode.h
- types.h

The libraries are located in the usual library directories. The static and dynamic libraries are in `/usr/local/lib/` on Unix platforms.

Simple Decoding API

To get started using the decoding API, you must ensure that you have the library and header files installed as described above.

Include the decoding API header in your C/C++ code as follows:

```c
#include "webp/decode.h"
```

```c
int WebPGetInfo(const uint8_t* data, size_t data_size, int* width, int* height);
```

This function validates the WebP image header and retrieves the image width and height. The `width` and `height` pointers can be passed as `NULL` if those values are not needed.

Input Attributes

- `data`: Pointer to WebP image data.
- `data_size`: Size of the memory block pointed to by `data` containing the image data.

Returns

- `false`: Error code returned in the case of formatting errors.
- `true`: On success.

`*width` and `*height` are only valid on successful return.

- `width`: Integer value. The range is limited from 1 to 16383.
- `height`: Integer value. The range is limited from 1 to 16383.

```c
struct WebPBitstreamFeatures {
  int width;          // Width in pixels.
  int height;         // Height in pixels.
  int has_alpha;      // True if the bitstream contains an alpha channel.
  int has_animation;  // True if the bitstream is an animation.
  int format;         // 0 = undefined (/mixed), 1 = lossy, 2 = lossless
};

VP8StatusCode WebPGetFeatures(const uint8_t* data,
                              size_t data_size,
                              WebPBitstreamFeatures* features);
```

This function retrieves features from the bitstream. The `*features` structure is filled with information gathered from the bitstream.

Input Attributes

- `data`: Pointer to WebP image data.
- `data_size`: Size of the memory block pointed to by `data` containing the image data.
- `features`: Pointer to the `WebPBitstreamFeatures` structure to fill.

Returns

- `VP8_STATUS_OK`: When the features are successfully retrieved.
- `VP8_STATUS_NOT_ENOUGH_DATA`: When more data is needed to retrieve the features from the headers.
- Additional `VP8StatusCode` error values in other cases.

```c
uint8_t* WebPDecodeRGBA(const uint8_t* data, size_t data_size, int* width, int* height);
uint8_t* WebPDecodeARGB(const uint8_t* data, size_t data_size, int* width, int* height);
uint8_t* WebPDecodeBGRA(const uint8_t* data, size_t data_size, int* width, int* height);
uint8_t* WebPDecodeRGB(const uint8_t* data, size_t data_size, int* width, int* height);
uint8_t* WebPDecodeBGR(const uint8_t* data, size_t data_size, int* width, int* height);
```

These functions decode a WebP image pointed to by `data`.

- `WebPDecodeRGBA` returns RGBA image samples in `[r0, g0, b0, a0, r1, g1, b1, a1, ...]` order.
- `WebPDecodeARGB` returns ARGB image samples in `[a0, r0, g0, b0, a1, r1, g1, b1, ...]` order.
- `WebPDecodeBGRA` returns BGRA image samples in `[b0, g0, r0, a0, b1, g1, r1, a1, ...]` order.
- `WebPDecodeRGB` returns RGB image samples in `[r0, g0, b0, r1, g1, b1, ...]` order.
- `WebPDecodeBGR` returns BGR image samples in `[b0, g0, r0, b1, g1, r1, ...]` order.

The code that calls any of these functions must free the data buffer (`uint8_t*`) they return with `WebPFree()`.

Input Attributes

- `data`: Pointer to WebP image data.
- `data_size`: Size of the memory block pointed to by `data` containing the image data.
- `width` (output): Integer value. The range is currently limited from 1 to 16383.
- `height` (output): Integer value. The range is currently limited from 1 to 16383.

Returns

- `uint8_t*`: Pointer to the decoded WebP image samples in linear RGBA/ARGB/BGRA/RGB/BGR order respectively, or `NULL` on error.

```c
uint8_t* WebPDecodeRGBAInto(const uint8_t* data, size_t data_size,
                            uint8_t* output_buffer, size_t output_buffer_size, int output_stride);
uint8_t* WebPDecodeARGBInto(const uint8_t* data, size_t data_size,
                            uint8_t* output_buffer, size_t output_buffer_size, int output_stride);
uint8_t* WebPDecodeBGRAInto(const uint8_t* data, size_t data_size,
                            uint8_t* output_buffer, size_t output_buffer_size, int output_stride);
uint8_t* WebPDecodeRGBInto(const uint8_t* data, size_t data_size,
                           uint8_t* output_buffer, size_t output_buffer_size, int output_stride);
uint8_t* WebPDecodeBGRInto(const uint8_t* data, size_t data_size,
                           uint8_t* output_buffer, size_t output_buffer_size, int output_stride);
```

These functions are variants of the above ones and decode the image directly into a pre-allocated buffer `output_buffer`. The maximum storage available in this buffer is indicated by `output_buffer_size`. If this storage is not sufficient (or an error occurred), `NULL` is returned. Otherwise, `output_buffer` is returned, for convenience.

The parameter `output_stride` specifies the distance (in bytes) between scanlines. Hence, `output_buffer_size` is expected to be at least `output_stride * picture_height`.

Input Attributes

- `data`: Pointer to WebP image data.
- `data_size`: Size of the memory block pointed to by `data` containing the image data.
- `output_buffer`: Pointer to the pre-allocated buffer that receives the decoded samples.
- `output_buffer_size`: Size of the allocated buffer, in bytes.
- `output_stride`: Integer value. Specifies the distance, in bytes, between scanlines.

Returns

- `uint8_t*`: `output_buffer` (a pointer to the decoded WebP image) if the function succeeds; `NULL` otherwise.

Advanced Decoding API

WebP decoding supports an advanced API that provides on-the-fly cropping and rescaling, which is very useful in memory-constrained environments such as mobile phones. When one only needs a quick preview or a zoomed-in portion of an otherwise too-large picture, memory usage scales with the size of the output rather than the input. Some CPU time can be saved as well.

WebP decoding comes in two variants, namely full-image decoding and incremental decoding over small input buffers. Users can optionally provide an external memory buffer for decoding the image. The following code samples go through the steps of using the advanced decoding API.

First, we need to initialize a configuration object:

```c
#include "webp/decode.h"

WebPDecoderConfig config;
CHECK(WebPInitDecoderConfig(&config));

// One can adjust some additional decoding options:
config.options.no_fancy_upsampling = 1;
config.options.use_scaling = 1;
config.options.scaled_width = scaledWidth();
config.options.scaled_height = scaledHeight();
// etc.
```

The decoding options are gathered in the `WebPDecoderOptions` structure, which is the `options` field of `WebPDecoderConfig`:

```c
struct WebPDecoderOptions {
  int bypass_filtering;             // if true, skip the in-loop filtering
  int no_fancy_upsampling;          // if true, use faster pointwise upsampler
  int use_cropping;                 // if true, cropping is applied first
  int crop_left, crop_top;          // top-left position for cropping.
                                    // Will be snapped to even values.
  int crop_width, crop_height;      // dimension of the cropping area
  int use_scaling;                  // if true, scaling is applied afterward
  int scaled_width, scaled_height;  // final resolution
  int use_threads;                  // if true, use multi-threaded decoding
  int dithering_strength;           // dithering strength (0=Off, 100=full)
  int flip;                         // if true, flip output vertically
  int alpha_dithering_strength;     // alpha dithering strength in [0..100]
};
```

Optionally, the bitstream features can be read into `config.input`, in case we need to know them in advance. For instance, it can be handy to know whether the picture has any transparency at all. Note that this also parses the bitstream's header, and is therefore a good way of checking whether the bitstream looks like a valid WebP one.

```c
CHECK(WebPGetFeatures(data, data_size, &config.input) == VP8_STATUS_OK);
```

Then we need to set up the decoding memory buffer in case we want to supply it directly instead of relying on the decoder to allocate it. We only need to supply the pointer to the memory, the total size of the buffer, and the line stride (distance in bytes between scanlines).

```c
// Specify the desired output colorspace:
config.output.colorspace = MODE_BGRA;
// Have config.output point to an external buffer:
config.output.u.RGBA.rgba = (uint8_t*)memory_buffer;
config.output.u.RGBA.stride = scanline_stride;
config.output.u.RGBA.size = total_size_of_the_memory_buffer;
config.output.is_external_memory = 1;
```

The image is now ready to be decoded. There are two possible variants for decoding the image. We can decode the image in one go using:

```c
CHECK(WebPDecode(data, data_size, &config) == VP8_STATUS_OK);
```

Alternatively, we can use the incremental method to progressively decode the image as fresh bytes become available:

```c
WebPIDecoder* idec = WebPINewDecoder(&config.output);
CHECK(idec != NULL);
while (additional_data_is_available) {
  // ... (get additional data in some new_data[] buffer)
  VP8StatusCode status = WebPIAppend(idec, new_data, new_data_size);
  if (status != VP8_STATUS_OK && status != VP8_STATUS_SUSPENDED) {
    break;
  }
  // The above call decodes the current available buffer.
  // Part of the image can now be refreshed by calling
  // WebPIDecGetRGB()/WebPIDecGetYUVA() etc.
}
WebPIDelete(idec);  // the object doesn't own the image memory, so it can
                    // now be deleted. config.output memory is preserved.
```

The decoded image is now in config.output (or, rather, in config.output.u.RGBA in this case, since the requested output colorspace was MODE_BGRA). Image can be saved, displayed or otherwise processed. Afterward, we only need to reclaim the memory allocated in config's object. It's safe to call this function even if the memory is external and wasn't allocated by WebPDecode():

```c
WebPFreeDecBuffer(&config.output);
```

Using this API, the image can also be decoded to YUV and YUVA formats, using `MODE_YUV` and `MODE_YUVA` respectively. This format is also called Y'CbCr.

Simple Encoding API

Some very simple functions are supplied for encoding arrays of RGBA samples in the most common layouts. They are declared in the `webp/encode.h` header as:

```c
size_t WebPEncodeRGB(const uint8_t* rgb, int width, int height, int stride, float quality_factor, uint8_t** output);
size_t WebPEncodeBGR(const uint8_t* bgr, int width, int height, int stride, float quality_factor, uint8_t** output);
size_t WebPEncodeRGBA(const uint8_t* rgba, int width, int height, int stride, float quality_factor, uint8_t** output);
size_t WebPEncodeBGRA(const uint8_t* bgra, int width, int height, int stride, float quality_factor, uint8_t** output);
```

The quality factor `quality_factor` ranges from 0 to 100 and controls the loss and quality during compression. The value 0 corresponds to low quality and small output sizes, whereas 100 is the highest quality and largest output size.

Upon success, the compressed bytes are placed in the `*output` pointer, and the size in bytes is returned (otherwise 0 is returned, in case of failure). The caller must call `WebPFree()` on the `*output` pointer to reclaim memory.

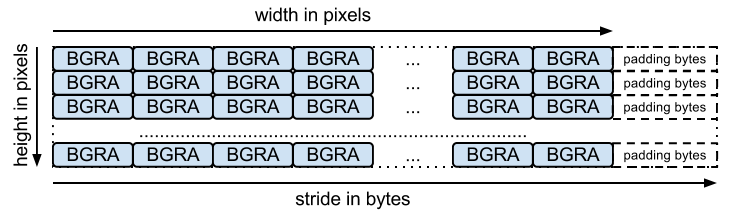

The input array should be a packed array of bytes (one for each channel, as

expected by the name of the function). stride corresponds to the

number of bytes needed to jump from one row to the next. For instance,

BGRA layout is:

There are equivalent functions for lossless encoding, with signatures:

```c
size_t WebPEncodeLosslessRGB(const uint8_t* rgb, int width, int height, int stride, uint8_t** output);
size_t WebPEncodeLosslessBGR(const uint8_t* bgr, int width, int height, int stride, uint8_t** output);
size_t WebPEncodeLosslessRGBA(const uint8_t* rgba, int width, int height, int stride, uint8_t** output);
size_t WebPEncodeLosslessBGRA(const uint8_t* bgra, int width, int height, int stride, uint8_t** output);
```

Note that these functions, like the lossy versions, use the library's default settings. For lossless, this means 'exact' is disabled: RGB values in fully transparent areas (that is, areas with alpha values equal to 0) will be modified to improve compression. To avoid this, use `WebPEncode()` and set `WebPConfig::exact` to 1.

Advanced Encoding API

Under the hood, the encoder comes with numerous advanced encoding parameters. They can be useful to better balance the trade-off between compression efficiency and processing time. These parameters are gathered within the `WebPConfig` structure. The most used fields of this structure are:

```c
struct WebPConfig {
  int lossless;           // Lossless encoding (0=lossy(default), 1=lossless).
  float quality;          // between 0 and 100. For lossy, 0 gives the smallest
                          // size and 100 the largest. For lossless, this
                          // parameter is the amount of effort put into the
                          // compression: 0 is the fastest but gives larger
                          // files compared to the slowest, but best, 100.
  int method;             // quality/speed trade-off (0=fast, 6=slower-better)

  WebPImageHint image_hint;  // Hint for image type (lossless only for now).

  // Parameters related to lossy compression only:
  int target_size;        // if non-zero, set the desired target size in bytes.
                          // Takes precedence over the 'compression' parameter.
  float target_PSNR;      // if non-zero, specifies the minimal distortion to
                          // try to achieve. Takes precedence over target_size.
  int segments;           // maximum number of segments to use, in [1..4]
  int sns_strength;       // Spatial Noise Shaping. 0=off, 100=maximum.
  int filter_strength;    // range: [0 = off .. 100 = strongest]
  int filter_sharpness;   // range: [0 = off .. 7 = least sharp]
  int filter_type;        // filtering type: 0 = simple, 1 = strong (only used
                          // if filter_strength > 0 or autofilter > 0)
  int autofilter;         // Auto adjust filter's strength [0 = off, 1 = on]
  int alpha_compression;  // Algorithm for encoding the alpha plane (0 = none,
                          // 1 = compressed with WebP lossless). Default is 1.
  int alpha_filtering;    // Predictive filtering method for alpha plane.
                          // 0: none, 1: fast, 2: best. Default is 1.
  int alpha_quality;      // Between 0 (smallest size) and 100 (lossless).
                          // Default is 100.
  int pass;               // number of entropy-analysis passes (in [1..10]).

  int show_compressed;    // if true, export the compressed picture back.
                          // In-loop filtering is not applied.
  int preprocessing;      // preprocessing filter (0=none, 1=segment-smooth)
  int partitions;         // log2(number of token partitions) in [0..3]
                          // Default is set to 0 for easier progressive decoding.
  int partition_limit;    // quality degradation allowed to fit the 512k limit on
                          // prediction modes coding (0: no degradation,
                          // 100: maximum possible degradation).
  int use_sharp_yuv;      // if needed, use sharp (and slow) RGB->YUV conversion
};
```

Note that most of these parameters are accessible for experimentation using the cwebp command line tool.

The input samples should be wrapped into a `WebPPicture` structure. This structure can store input samples in either ARGB or YUVA format, depending on the value of the `use_argb` flag. The structure is organized as follows:

```c
struct WebPPicture {
  int use_argb;               // To select between ARGB and YUVA input.

  // YUV input, recommended for lossy compression.
  // Used if use_argb = 0.
  WebPEncCSP colorspace;      // colorspace: should be YUVA420 or YUV420 for now (=Y'CbCr).
  int width, height;          // dimensions (less or equal to WEBP_MAX_DIMENSION)
  uint8_t *y, *u, *v;         // pointers to luma/chroma planes.
  int y_stride, uv_stride;    // luma/chroma strides.
  uint8_t* a;                 // pointer to the alpha plane
  int a_stride;               // stride of the alpha plane

  // Alternate ARGB input, recommended for lossless compression.
  // Used if use_argb = 1.
  uint32_t* argb;             // Pointer to argb (32 bit) plane.
  int argb_stride;            // This is stride in pixels units, not bytes.

  // Byte-emission hook, to store compressed bytes as they are ready.
  WebPWriterFunction writer;  // can be NULL
  void* custom_ptr;           // can be used by the writer.

  // Error code for the latest error encountered during encoding
  WebPEncodingError error_code;
};
```

This structure also has a hook function (`writer`) to emit the compressed bytes as they are made available. See below for an example with an in-memory writer. Other writers can directly store data to a file (see `examples/cwebp.c` for such an example).

The general flow for encoding using the advanced API looks like the following:

First, we need to set up an encoding configuration containing the compression parameters. Note that the same configuration can be used for compressing several different images afterward.

```c
#include "webp/encode.h"

WebPConfig config;
if (!WebPConfigPreset(&config, WEBP_PRESET_PHOTO, quality_factor)) return 0;  // version error

// Add additional tuning:
config.sns_strength = 90;
config.filter_sharpness = 6;
config.alpha_quality = 90;

// Verify parameter ranges (always a good habit); returns 0 if any is invalid.
if (!WebPValidateConfig(&config)) return 0;  // invalid configuration
```

Then, the input samples need to be placed into a `WebPPicture`, either by reference or by copy. Here is an example allocating the buffer for holding the samples. But one can easily set up a "view" to an already-allocated sample array. See the `WebPPictureView()` function.

```c
// Set up the input data, allocating a picture of width x height dimension
WebPPicture pic;
if (!WebPPictureInit(&pic)) return 0;  // version error
pic.width = width;
pic.height = height;
if (!WebPPictureAlloc(&pic)) return 0;  // memory error

// At this point, 'pic' has been initialized as a container, and can receive the YUVA or RGBA samples.
// Alternatively, one could use ready-made import functions like WebPPictureImportRGBA(), which will take
// care of memory allocation. In any case, past this point, one will have to call WebPPictureFree(&pic)
// to reclaim allocated memory.
```

To emit the compressed bytes, a hook is called every time new bytes are available. Here's a simple example with the memory writer declared in `webp/encode.h`. This initialization is likely to be needed for each picture to compress:

```c
// Set up a byte-writing method (write-to-memory, in this case):
WebPMemoryWriter writer;
WebPMemoryWriterInit(&writer);
pic.writer = WebPMemoryWrite;
pic.custom_ptr = &writer;
```

We are now ready to compress the input samples (and free their memory afterward):

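```c
int ok = WebPEncode(&config, &pic);
WebPPictureFree(&pic);  // Always free the memory associated with the input.
if (!ok) {
  printf("Encoding error: %d\n", (int)pic.error_code);
} else {
  printf("Output size: %zu\n", writer.size);  // writer.size is a size_t
}
// ... use writer.mem / writer.size, then release the output memory:
WebPMemoryWriterClear(&writer);
```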

For more advanced use of the API and structure, it is recommended to look at the documentation available in the `webp/encode.h` header. Reading the example code in `examples/cwebp.c` can prove useful for discovering less used parameters.