Looker Studio has its own cache system for reports. When creating your connector, you can implement a custom cache to make reports faster and avoid API rate limits.

For example, suppose you are creating a connector that provides historical weather data for the last 7 days for a specific zip code. Your connector is becoming quite popular, but the external API that you fetch the data from has strict rate limits. The API only updates its data daily, so for a specific zip code, there is no need to fetch the same data multiple times within a day. Using this solution guide, you can implement a daily cache for each zip code.

Requirements

- A Firebase Realtime Database. If you don’t have access to one, create a Google Cloud Platform (GCP) project and follow the Get started guide to create your own Firebase Realtime Database instance.

- A GCP service account to read and write data from Firebase Realtime Database.

- A Community Connector that fetches data from a source.

Limitations

- This solution cannot be used with Looker Studio Advanced Services. When you use Looker Studio Advanced Services, your connector code in Apps Script does not have access to the data. Thus you cannot cache the data using Apps Script.

- Report editors and viewers cannot reset this specific cache.

Solution

Implement a service account

- Create a service account in your Google Cloud project.

- Ensure this service account has access to Firebase Realtime Database in the cloud project.

- Required Identity and Access Management (IAM) role: Firebase Admin

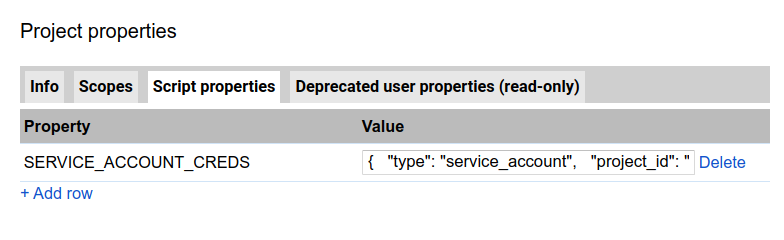

- Download the JSON file to get the service accounts keys. Store the file's

content in your connector project’s script properties. After adding the

keys, should look similar to this in Apps Script UI:

- Include the OAuth2 for Apps Script library in your Apps Script project.

- Implement the required OAuth2 code for the service account:
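The OAuth2 step above can be sketched as follows. This is a minimal sketch, not a definitive implementation: it assumes the whole JSON key file is stored under a script property named `SERVICE_ACCOUNT_CREDS` (an assumed name) and that the OAuth2 for Apps Script library is available under the identifier `OAuth2`. The service name `FirebaseCache` is also an assumption. The two scopes are the ones the Firebase Database REST API expects for Google OAuth2 access tokens.

```js
// Assumed name of the script property that holds the service account JSON.
var SERVICE_ACCOUNT_CREDS = 'SERVICE_ACCOUNT_CREDS';

// Parses the service account JSON stored in the script properties.
function getServiceAccountCreds() {
  var scriptProperties = PropertiesService.getScriptProperties();
  return JSON.parse(scriptProperties.getProperty(SERVICE_ACCOUNT_CREDS));
}

// Builds an OAuth2 service that requests tokens as the service account.
function getOauthService() {
  var creds = getServiceAccountCreds();
  return OAuth2.createService('FirebaseCache')
    .setTokenUrl('https://accounts.google.com/o/oauth2/token')
    .setPrivateKey(creds.private_key)   // "private_key" field of the key file
    .setIssuer(creds.client_email)      // "client_email" field of the key file
    .setPropertyStore(PropertiesService.getScriptProperties())
    .setCache(CacheService.getScriptCache())
    .setScope([
      'https://www.googleapis.com/auth/userinfo.email',
      'https://www.googleapis.com/auth/firebase.database'
    ]);
}
```

Calling `getOauthService().getAccessToken()` then yields a token that can be sent with each Firebase request.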

Implement code to read and write from Firebase

You will use the Firebase Database REST API to read and write to a Firebase Realtime Database. The following code implements the methods needed for accessing this API.
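A minimal sketch of such methods, assuming each cache entry lives at `<database URL>/<key>.json` and that the access token comes from the service account's OAuth2 service. The function names (`buildFirebaseUrl`, `firebaseGet`, `firebasePut`, `firebaseDelete`) are hypothetical. `UrlFetchApp.fetch` is the standard Apps Script HTTP call.

```js
// Builds the REST endpoint for one cache key: <database URL>/<key>.json
function buildFirebaseUrl(databaseUrl, key) {
  return databaseUrl.replace(/\/+$/, '') + '/' + encodeURIComponent(key) + '.json';
}

// Sends one authenticated request and returns the parsed JSON response.
function firebaseRequest(method, url, accessToken, data) {
  var options = {
    method: method,
    headers: { Authorization: 'Bearer ' + accessToken },
    contentType: 'application/json',
    muteHttpExceptions: true // inspect the status code ourselves
  };
  if (data !== undefined) {
    options.payload = JSON.stringify(data);
  }
  var response = UrlFetchApp.fetch(url, options);
  var code = response.getResponseCode();
  if (code < 200 || code >= 300) {
    throw new Error('Firebase request failed with HTTP ' + code);
  }
  return JSON.parse(response.getContentText());
}

// Reads a node; Firebase returns JSON null when the node does not exist.
function firebaseGet(url, accessToken) {
  return firebaseRequest('get', url, accessToken);
}

// Overwrites a node with the given data.
function firebasePut(url, accessToken, data) {
  return firebaseRequest('put', url, accessToken, data);
}

// Removes a node.
function firebaseDelete(url, accessToken) {
  return firebaseRequest('delete', url, accessToken);
}
```

Firebase keys cannot contain `.`, `$`, `#`, `[`, `]`, or `/`, so a key such as a zip code works unchanged, while other identifiers may need hashing or escaping first.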

Implement getData()

The structure for your existing getData() code without caching should look like this:
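As a rough illustration of that uncached structure, the sketch below fetches from the source on every request and shapes the result into the `schema` and `rows` that Looker Studio expects from `getData()`. The schema, the helper names (`fetchWeatherFromApi`, `getRequestedSchema`, `toRows`), and the API URL are all hypothetical placeholders for the weather example.

```js
// Hypothetical schema for the weather example.
var WEATHER_SCHEMA = [
  { name: 'date', dataType: 'STRING' },
  { name: 'temperature', dataType: 'NUMBER' }
];

// Hypothetical helper: calls the external API (placeholder URL) and
// returns an array of records such as {date: ..., temperature: ...}.
function fetchWeatherFromApi(zipcode) {
  var url = 'https://api.example.com/weather?zip=' + encodeURIComponent(zipcode);
  return JSON.parse(UrlFetchApp.fetch(url).getContentText());
}

// Returns the schema entries for the requested fields, in request order.
function getRequestedSchema(requestedNames) {
  return requestedNames.map(function (name) {
    return WEATHER_SCHEMA.filter(function (field) {
      return field.name === name;
    })[0];
  });
}

// Converts records into rows whose values follow the requested field order.
function toRows(requestedNames, records) {
  return records.map(function (record) {
    return {
      values: requestedNames.map(function (name) { return record[name]; })
    };
  });
}

// Uncached getData(): every request goes to the external API.
function getData(request) {
  var requestedNames = request.fields.map(function (field) {
    return field.name;
  });
  var records = fetchWeatherFromApi(request.configParams.zipcode);
  return {
    schema: getRequestedSchema(requestedNames),
    rows: toRows(requestedNames, records)
  };
}
```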

To use caching in your getData() code, follow these steps:

- Determine the 'chunk' or 'unit' of data that should be cached.

- Create a unique key to store the minimum unit of data in the cache.

  For the example implementation, `zipcode` from `configparams` is being used as the key.

  Optional: For per-user cache, create a composite key with the base key and user identity. Example implementation:

  ```js
  var baseKey = getBaseKey(request);
  var userEmail = Session.getEffectiveUser().getEmail();
  var hasheduserEmail = getHashedValue(userEmail);
  var compositeKey = baseKey + hasheduserEmail;
  ```

- If cached data exists, check if cache is fresh.

  In the example, the cached data for a specific zip code is saved with the current date. When data is retrieved from the cache, the date for the cache is checked against the current date.

  ```js
  var cacheForZipcode = {
    data: <data being cached>,
    ymd: <current date in YYYYMMDD format>
  }
  ```

- If cached data does not exist or the cached data is not fresh, fetch data from source and store it in cache.
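The helpers named in the steps above are not defined in this guide, so the following is one possible sketch of them. The bodies of `getBaseKey` and `getHashedValue` are assumptions, as are the names `toYmd`, `wrapForCache`, and `isCacheFresh`. Hashing uses the Apps Script `Utilities.computeDigest` call, which returns signed bytes, and the current date is assumed to be taken in UTC.

```js
// Assumed base key: the zip code from the connector configuration.
function getBaseKey(request) {
  return String(request.configParams.zipcode);
}

// Assumed hashing helper: SHA-256 of the input as lowercase hex, so the
// raw email address is never stored as part of a cache key.
function getHashedValue(value) {
  var bytes = Utilities.computeDigest(Utilities.DigestAlgorithm.SHA_256, value);
  return bytes.map(function (b) {
    // Bytes are signed (-128..127); map them to 0..255 before formatting.
    return ('0' + ((b + 256) % 256).toString(16)).slice(-2);
  }).join('');
}

// Formats a Date as YYYYMMDD in UTC.
function toYmd(date) {
  return date.toISOString().slice(0, 10).replace(/-/g, '');
}

// Wraps data with the date it was cached on.
function wrapForCache(data, now) {
  return { data: data, ymd: toYmd(now) };
}

// A cache entry is fresh only if it was written on the current date.
function isCacheFresh(entry, now) {
  return Boolean(entry) && entry.ymd === toYmd(now);
}
```

A hex digest contains only characters that are valid in Firebase keys, so the composite key can be used as a path segment directly.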

In the following example, main.js includes getData() code with caching implemented.

Example code
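A minimal sketch of the cached flow, under stated assumptions rather than a definitive main.js. `getFreshData` takes the cache and the source fetch as arguments so the logic stays independent of Firebase. In `getData()`, the names `getCache`, `fetchFromSource`, and `buildResponse` are hypothetical and stand for code your connector is assumed to define elsewhere: a cache object with `get(key)` and `put(key, value)` backed by Firebase, the uncached fetch, and the schema and row formatting.

```js
// Formats a Date as YYYYMMDD in UTC (the time zone is an assumption).
function toYmd(date) {
  return date.toISOString().slice(0, 10).replace(/-/g, '');
}

// Returns data for a key, using the cache when it was written today and
// otherwise fetching from the source and refreshing the cache.
function getFreshData(key, cache, fetchFromSource, now) {
  var today = toYmd(now);
  var cached = cache.get(key);
  if (cached && cached.ymd === today) {
    return cached.data; // cache hit: no call to the external API
  }
  var data = fetchFromSource(key);
  cache.put(key, { data: data, ymd: today });
  return data;
}

// getData() with caching: only the way records are obtained changes.
function getData(request) {
  var zipcode = request.configParams.zipcode;
  var records = getFreshData(zipcode, getCache(), fetchFromSource, new Date());
  return buildResponse(request, records);
}
```

With this shape, the external API is called at most once per zip code per day, regardless of how many reports request that zip code.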

Additional resources

The Chrome UX Connector serves a dashboard based on a ~20GB BigQuery table to thousands of users. This connector uses Firebase Realtime Database along with Apps Script Cache Service for a two-layered caching approach. See code for implementation details.
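To illustrate the general idea of a two-layered lookup (this is a hedged sketch, not the Chrome UX Connector's actual code), the function below checks a fast layer first, then a slower persistent layer, and only then the source. Here `scriptCache` is assumed to behave like the object returned by `CacheService.getScriptCache()`, whose `get` returns `null` on a miss and whose `put` accepts an expiry of at most 21600 seconds. `firebaseCache` and `fetchFromSource` are hypothetical.

```js
// Maximum lifetime Apps Script Cache Service allows for an entry (6 hours).
var SCRIPT_CACHE_SECONDS = 21600;

// Looks up a key in the script cache, then Firebase, then the source,
// filling the faster layers on the way back.
function getWithTwoLayers(key, scriptCache, firebaseCache, fetchFromSource) {
  var hit = scriptCache.get(key);
  if (hit !== null) {
    return JSON.parse(hit); // fastest path: no network call
  }
  var data = firebaseCache.get(key);
  if (data === null) {
    data = fetchFromSource(key);
    firebaseCache.put(key, data);
  }
  // Cache Service stores strings only, so serialize before saving.
  scriptCache.put(key, JSON.stringify(data), SCRIPT_CACHE_SECONDS);
  return data;
}
```

Cache Service limits each value to 100 KB, so larger payloads would need to be split or kept in Firebase only. The sketch also omits the daily freshness check for brevity.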