The Checks Guardrails API is now available in alpha as a private preview. To request access to the private preview, fill out the interest form.

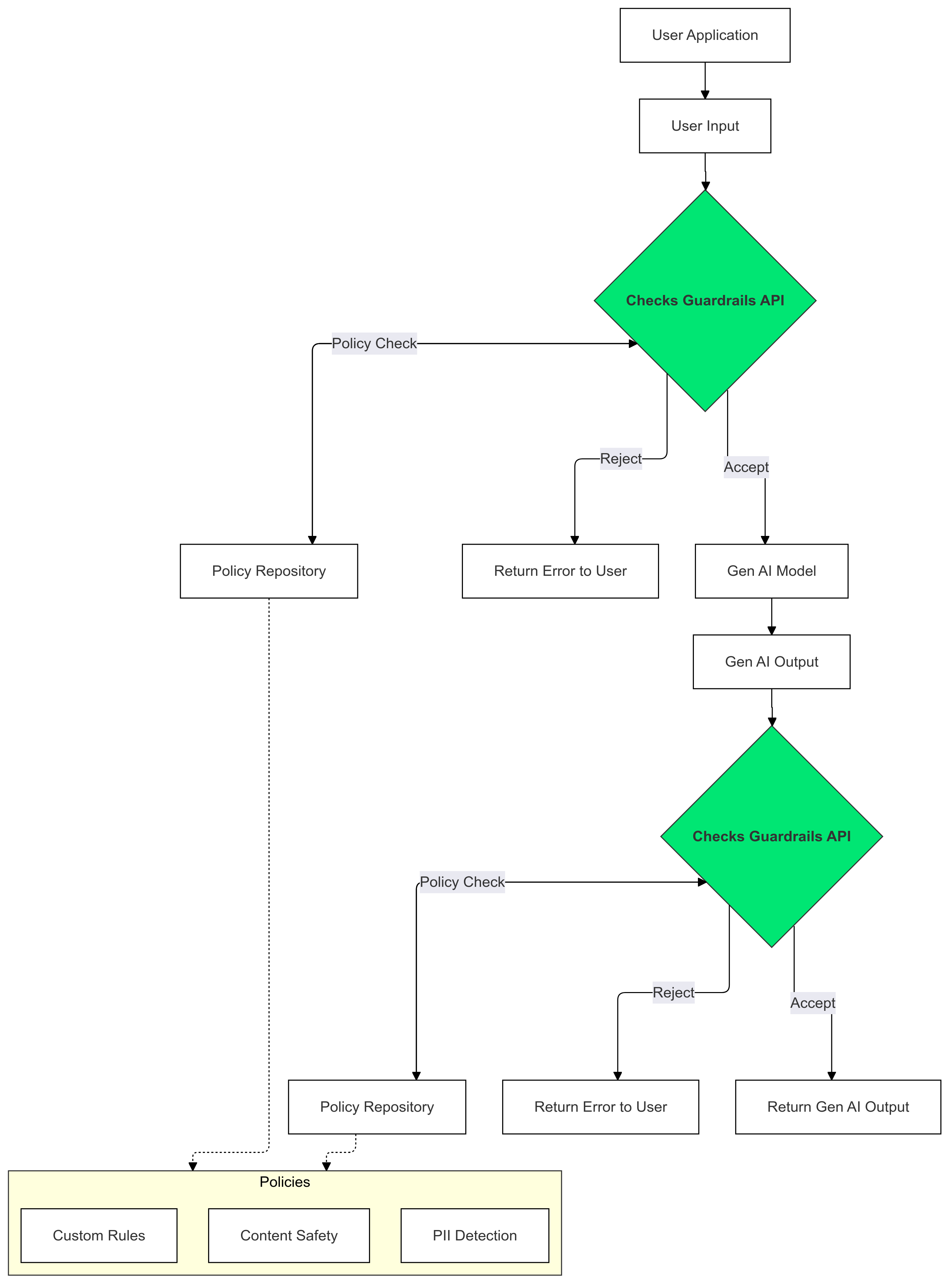

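The Guardrails API checks whether text is potentially harmful or unsafe. You can use it in a generative AI application to keep users from being exposed to potentially harmful content.

How do I use Guardrails?

Use Checks Guardrails on your generative AI inputs and outputs to detect and mitigate text that violates your policies.

Why use Guardrails?

LLMs can sometimes generate harmful or inappropriate content. Integrating the Guardrails API into your generative AI application is key to using large language models (LLMs) responsibly and safely. It helps mitigate the risks of generated content by filtering a wide range of potentially harmful outputs, including inappropriate language, discriminatory remarks, and content that could facilitate harm. This protects your users, and it also safeguards your application's reputation and fosters trust among your audience. By prioritizing safety and responsibility, Guardrails helps you build generative AI applications that are both innovative and safer.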

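Getting started

This guide explains how to use the Guardrails API to detect and filter inappropriate content in your application. The API offers a set of pretrained policies that identify different types of potentially harmful content, such as hate speech, violence, and sexually explicit material. You can also customize the API's behavior by setting a threshold for each policy.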
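As a rough illustration of per-policy thresholds, the sketch below builds a request body in which some policies carry a custom threshold and others fall back to the Checks-defined default. The helper name `build_classify_body` is hypothetical and not part of any client library. The field names (`input`, `textInput`, `policies`, `policyType`, `threshold`) follow the request examples in this guide, and the [0, 1] range check is an assumption based on the scores shown in the sample response.

```python
def build_classify_body(content, thresholds, language_code="en"):
    """Builds a classifyContent request body (hypothetical helper).

    thresholds maps a policy type to a float threshold, or to None to
    keep the Checks-defined default for that policy.
    """
    policies = []
    for policy_type, threshold in thresholds.items():
        policy = {"policyType": policy_type}
        if threshold is not None:
            # Assumption: thresholds live on the same 0..1 scale as scores.
            if not 0.0 <= threshold <= 1.0:
                raise ValueError(f"threshold for {policy_type} must be in [0, 1]")
            policy["threshold"] = threshold
        policies.append(policy)
    return {
        "input": {
            "textInput": {"content": content, "languageCode": language_code}
        },
        "policies": policies,
    }


body = build_classify_body(
    "Mix, bake, cool, frost, and enjoy.",
    {"HARASSMENT": 0.5, "DANGEROUS_CONTENT": None},
)
```

The resulting `body` can be passed as the `body` argument of `classifyContent` in the Python client examples. Keeping the thresholds in one mapping makes it easier to review them alongside your risk tolerance.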

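Prerequisites

- Confirm that your Google Cloud project has been approved for the Checks AI Safety private preview. If you have not requested access yet, fill out the interest form.

- Enable the Checks API.

- Make sure you can send authorized requests by following the authorization guide.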

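Supported policies

| Policy name | Policy description | Policy type API enum value |
|---|---|---|
| Dangerous content | Content that promotes, facilitates, or enables access to harmful goods, services, and activities. | DANGEROUS_CONTENT |
| Soliciting and reciting PII | Content that solicits or reveals an individual's sensitive personal information or data. | PII_SOLICITING_RECITING |
| Harassment | Content that is malicious, intimidating, bullying, or abusive towards another individual. | HARASSMENT |
| Sexually explicit | Content that is sexually explicit in nature. | SEXUALLY_EXPLICIT |
| Hate speech | Content that is generally accepted as being hate speech. | HATE_SPEECH |
| Medical information | Content that facilitates, promotes, or enables access to harmful medical advice or guidance. | MEDICAL_INFO |
| Violence and gore | Content that includes gratuitous descriptions of realistic violence and/or gore. | VIOLENCE_AND_GORE |
| Obscenity and profanity | Content that contains vulgar, profane, or offensive language. | OBSCENITY_AND_PROFANITY |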

Code snippets

Python

Run `pip install google-api-python-client` to install the Google API Python client.

    import logging

    from google.oauth2 import service_account
    from googleapiclient.discovery import build

    SECRET_FILE_PATH = 'path/to/your/secret.json'

    credentials = service_account.Credentials.from_service_account_file(
        SECRET_FILE_PATH, scopes=['https://www.googleapis.com/auth/checks']
    )

    service = build('checks', 'v1alpha', credentials=credentials)

    request = service.aisafety().classifyContent(
        body={
            'input': {
                'textInput': {
                    'content': 'Mix, bake, cool, frost, and enjoy.',
                    'languageCode': 'en',
                }
            },
            'policies': [
                {'policyType': 'DANGEROUS_CONTENT'}
            ],  # Default Checks-defined threshold is used
        }
    )

    response = request.execute()

    for policy_result in response['policyResults']:
        logging.warning(
            'Policy: %s, Score: %s, Violation result: %s',
            policy_result['policyType'],
            policy_result['score'],
            policy_result['violationResult'],
        )

Go

Run `go get google.golang.org/api/checks/v1alpha` to install the Checks API Go client.

    package main

    import (
        "context"
        "log/slog"

        checks "google.golang.org/api/checks/v1alpha"
        option "google.golang.org/api/option"
    )

    const credsFilePath = "path/to/your/secret.json"

    func main() {
        ctx := context.Background()

        checksService, err := checks.NewService(
            ctx,
            option.WithEndpoint("https://checks.googleapis.com"),
            option.WithCredentialsFile(credsFilePath),
            option.WithScopes("https://www.googleapis.com/auth/checks"),
        )
        if err != nil {
            // Handle error. The service is unusable past this point.
            slog.Error("failed to create Checks service", "error", err)
            return
        }

        req := &checks.GoogleChecksAisafetyV1alphaClassifyContentRequest{
            Input: &checks.GoogleChecksAisafetyV1alphaClassifyContentRequestInputContent{
                TextInput: &checks.GoogleChecksAisafetyV1alphaTextInput{
                    Content:      "Mix, bake, cool, frost, and enjoy.",
                    LanguageCode: "en",
                },
            },
            Policies: []*checks.GoogleChecksAisafetyV1alphaClassifyContentRequestPolicyConfig{
                {PolicyType: "DANGEROUS_CONTENT"}, // Default Checks-defined threshold is used
            },
        }

        classificationResults, err := checksService.Aisafety.ClassifyContent(req).Do()
        if err != nil {
            // Handle error. classificationResults is nil past this point.
            slog.Error("failed to classify content", "error", err)
            return
        }

        // Log every policy result, whether or not it is violative.
        for _, policy := range classificationResults.PolicyResults {
            slog.Info("Checks Guardrails result", "Policy", policy.PolicyType, "Score", policy.Score, "Violation Result", policy.ViolationResult)
        }
    }

REST

Note: This example uses the oauth2l CLI tool.

Replace YOUR_GCP_PROJECT_ID with the ID of the Google Cloud project that has been granted access to the Guardrails API.

    curl -X POST https://checks.googleapis.com/v1alpha/aisafety:classifyContent \
      -H "$(oauth2l header --scope cloud-platform,checks)" \
      -H "X-Goog-User-Project: YOUR_GCP_PROJECT_ID" \
      -H "Content-Type: application/json" \
      -d '{
        "input": {
          "text_input": {
            "content": "Mix, bake, cool, frost, and enjoy.",
            "language_code": "en"
          }
        },
        "policies": [
          {
            "policy_type": "HARASSMENT",
            "threshold": "0.5"
          },
          {
            "policy_type": "DANGEROUS_CONTENT"
          }
        ]
      }'

Sample response

    {
      "policyResults": [
        {
          "policyType": "HARASSMENT",
          "score": 0.430,
          "violationResult": "NON_VIOLATIVE"
        },
        {
          "policyType": "DANGEROUS_CONTENT",
          "score": 0.764,
          "violationResult": "VIOLATIVE"
        },
        {
          "policyType": "OBSCENITY_AND_PROFANITY",
          "score": 0.876,
          "violationResult": "VIOLATIVE"
        },
        {
          "policyType": "SEXUALLY_EXPLICIT",
          "score": 0.197,
          "violationResult": "NON_VIOLATIVE"
        },
        {
          "policyType": "HATE_SPEECH",
          "score": 0.45,
          "violationResult": "NON_VIOLATIVE"
        },
        {
          "policyType": "MEDICAL_INFO",
          "score": 0.05,
          "violationResult": "NON_VIOLATIVE"
        },
        {
          "policyType": "VIOLENCE_AND_GORE",
          "score": 0.964,
          "violationResult": "VIOLATIVE"
        },
        {
          "policyType": "PII_SOLICITING_RECITING",
          "score": 0.0009,
          "violationResult": "NON_VIOLATIVE"
        }
      ]
    }
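One way an application might consume a response like this is to collect the policies flagged as VIOLATIVE. The sketch below is only illustrative: `violative_policies` is a hypothetical helper, and the sample data is a trimmed copy of the response above.

```python
def violative_policies(response):
    """Returns (policy type, score) pairs for results marked VIOLATIVE."""
    return [
        (result["policyType"], result["score"])
        for result in response.get("policyResults", [])
        if result.get("violationResult") == "VIOLATIVE"
    ]


# Trimmed copy of the sample response, as a Python dict.
sample_response = {
    "policyResults": [
        {"policyType": "HARASSMENT", "score": 0.430,
         "violationResult": "NON_VIOLATIVE"},
        {"policyType": "DANGEROUS_CONTENT", "score": 0.764,
         "violationResult": "VIOLATIVE"},
        {"policyType": "VIOLENCE_AND_GORE", "score": 0.964,
         "violationResult": "VIOLATIVE"},
    ]
}

flagged = violative_policies(sample_response)
for policy_type, score in flagged:
    print(f"{policy_type}: {score}")
```

Using `.get` with defaults keeps the helper from raising if a response has no `policyResults` key, which is a defensive choice in this sketch and not a statement about what the API returns.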

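Use cases

You can integrate the Guardrails API into your LLM application in a variety of ways, depending on your specific needs and risk tolerance. Here are some common use cases:

No Guardrail intervention - logging

In this scenario, your application calls the Guardrails API but does not change its behavior based on the results. Potential policy violations are logged for monitoring and auditing, and you can use this information to identify potential LLM safety risks.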

Python

    import logging

    from google.oauth2 import service_account
    from googleapiclient.discovery import build


    # Checks API configuration
    class ChecksConfig:

        def __init__(self, scope, creds_file_path):
            self.scope = scope
            self.creds_file_path = creds_file_path


    my_checks_config = ChecksConfig(
        scope='https://www.googleapis.com/auth/checks',
        creds_file_path='path/to/your/secret.json',
    )


    def new_checks_service(config):
        """Creates a new Checks API service."""
        credentials = service_account.Credentials.from_service_account_file(
            config.creds_file_path, scopes=[config.scope]
        )
        service = build('checks', 'v1alpha', credentials=credentials)
        return service


    def fetch_checks_violation_results(content, context=''):
        """Fetches violation results from the Checks API."""
        service = new_checks_service(my_checks_config)
        request = service.aisafety().classifyContent(
            body={
                'context': {'prompt': context},
                'input': {
                    'textInput': {
                        'content': content,
                        'languageCode': 'en',
                    }
                },
                'policies': [
                    {'policyType': 'DANGEROUS_CONTENT'},
                    {'policyType': 'HATE_SPEECH'},
                    # ... add more policies
                ],
            }
        )
        response = request.execute()
        return response


    def fetch_user_prompt():
        """Imitates retrieving the input prompt from the user."""
        return 'How do I bake a cake?'


    def fetch_llm_response(prompt):
        """Imitates the call to an LLM endpoint."""
        return 'Mix, bake, cool, frost, enjoy.'


    def log_violations(content, context=''):
        """Logs every policy that the content violates."""
        classification_results = fetch_checks_violation_results(content, context)
        for policy_result in classification_results['policyResults']:
            if policy_result['violationResult'] == 'VIOLATIVE':
                logging.warning(
                    'Policy: %s, Score: %s, Violation result: %s',
                    policy_result['policyType'],
                    policy_result['score'],
                    policy_result['violationResult'],
                )


    if __name__ == '__main__':
        user_prompt = fetch_user_prompt()
        # Check the user input on its own.
        log_violations(user_prompt)
        llm_response = fetch_llm_response(user_prompt)
        # Check the model output, passing the prompt as context.
        log_violations(llm_response, user_prompt)
        # The response is returned regardless of what was logged.
        print(llm_response)

Go

    package main

    import (
        "context"
        "fmt"
        "log/slog"

        checks "google.golang.org/api/checks/v1alpha"
        option "google.golang.org/api/option"
    )

    type checksConfig struct {
        scope         string
        credsFilePath string
        endpoint      string
    }

    var myChecksConfig = checksConfig{
        scope:         "https://www.googleapis.com/auth/checks",
        credsFilePath: "path/to/your/secret.json",
        endpoint:      "https://checks.googleapis.com",
    }

    func newChecksService(ctx context.Context, cfg checksConfig) (*checks.Service, error) {
        return checks.NewService(
            ctx,
            option.WithEndpoint(cfg.endpoint),
            option.WithCredentialsFile(cfg.credsFilePath),
            option.WithScopes(cfg.scope),
        )
    }

    // The prompt parameter carries the conversational context for the content.
    // It is not named "context" so that it does not shadow the context package.
    func fetchChecksViolationResults(ctx context.Context, content string, prompt string) (*checks.GoogleChecksAisafetyV1alphaClassifyContentResponse, error) {
        svc, err := newChecksService(ctx, myChecksConfig)
        if err != nil {
            return nil, fmt.Errorf("failed to create checks service: %w", err)
        }

        req := &checks.GoogleChecksAisafetyV1alphaClassifyContentRequest{
            Context: &checks.GoogleChecksAisafetyV1alphaClassifyContentRequestContext{
                Prompt: prompt,
            },
            Input: &checks.GoogleChecksAisafetyV1alphaClassifyContentRequestInputContent{
                TextInput: &checks.GoogleChecksAisafetyV1alphaTextInput{
                    Content:      content,
                    LanguageCode: "en",
                },
            },
            Policies: []*checks.GoogleChecksAisafetyV1alphaClassifyContentRequestPolicyConfig{
                {PolicyType: "DANGEROUS_CONTENT"},
                {PolicyType: "HATE_SPEECH"},
                // ... add more policies
            },
        }

        response, err := svc.Aisafety.ClassifyContent(req).Do()
        if err != nil {
            return nil, fmt.Errorf("failed to classify content: %w", err)
        }
        return response, nil
    }

    // Imitates retrieving the input prompt from the user.
    func fetchUserPrompt() string {
        return "How do I bake a cake?"
    }

    // Imitates the call to an LLM endpoint.
    func fetchLLMResponse(prompt string) string {
        return "Mix, bake, cool, frost, enjoy."
    }

    // Logs every policy that the content violates.
    func logViolations(ctx context.Context, content string, prompt string) error {
        classificationResults, err := fetchChecksViolationResults(ctx, content, prompt)
        if err != nil {
            return err
        }
        for _, policyResult := range classificationResults.PolicyResults {
            if policyResult.ViolationResult == "VIOLATIVE" {
                slog.Warn("Checks Guardrails violation", "Policy", policyResult.PolicyType, "Score", policyResult.Score, "Violation Result", policyResult.ViolationResult)
            }
        }
        return nil
    }

    func main() {
        ctx := context.Background()

        userPrompt := fetchUserPrompt()
        err := logViolations(ctx, userPrompt, "")
        if err != nil {
            // Handle error
        }

        llmResponse := fetchLLMResponse(userPrompt)
        err = logViolations(ctx, llmResponse, userPrompt)
        if err != nil {
            // Handle error
        }

        // The response is returned regardless of what was logged.
        fmt.Println(llmResponse)
    }

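Guardrail blocks based on a policy

In this example, the Guardrails API blocks unsafe user inputs and model responses. It checks both against predefined safety policies (for example, hate speech and dangerous content). This prevents the AI from generating potentially harmful output and protects users from encountering inappropriate content.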

Python

    from google.oauth2 import service_account
    from googleapiclient.discovery import build


    # Checks API configuration
    class ChecksConfig:

        def __init__(self, scope, creds_file_path, default_threshold):
            self.scope = scope
            self.creds_file_path = creds_file_path
            self.default_threshold = default_threshold


    my_checks_config = ChecksConfig(
        scope='https://www.googleapis.com/auth/checks',
        creds_file_path='path/to/your/secret.json',
        default_threshold=0.6,
    )


    def new_checks_service(config):
        """Creates a new Checks API service."""
        credentials = service_account.Credentials.from_service_account_file(
            config.creds_file_path, scopes=[config.scope]
        )
        service = build('checks', 'v1alpha', credentials=credentials)
        return service


    def fetch_checks_violation_results(content, context=''):
        """Fetches violation results from the Checks API."""
        service = new_checks_service(my_checks_config)
        request = service.aisafety().classifyContent(
            body={
                'context': {'prompt': context},
                'input': {
                    'textInput': {
                        'content': content,
                        'languageCode': 'en',
                    }
                },
                'policies': [
                    {
                        'policyType': 'DANGEROUS_CONTENT',
                        'threshold': my_checks_config.default_threshold,
                    },
                    {'policyType': 'HATE_SPEECH'},
                    # ... add more policies
                ],
            }
        )
        response = request.execute()
        return response


    def fetch_user_prompt():
        """Imitates retrieving the input prompt from the user."""
        return 'How do I bake a cake?'


    def fetch_llm_response(prompt):
        """Imitates the call to an LLM endpoint."""
        return 'Mix, bake, cool, frost, enjoy.'


    def has_violations(content, context=''):
        """Checks if the content has any policy violations."""
        classification_results = fetch_checks_violation_results(content, context)
        for policy_result in classification_results['policyResults']:
            if policy_result['violationResult'] == 'VIOLATIVE':
                return True
        return False


    if __name__ == '__main__':
        user_prompt = fetch_user_prompt()
        if has_violations(user_prompt):
            print("Sorry, I can't help you with this request.")
        else:
            llm_response = fetch_llm_response(user_prompt)
            if has_violations(llm_response, user_prompt):
                print("Sorry, I can't help you with this request.")
            else:
                print(llm_response)

Go

    package main

    import (
        "context"
        "fmt"

        checks "google.golang.org/api/checks/v1alpha"
        option "google.golang.org/api/option"
    )

    type checksConfig struct {
        scope            string
        credsFilePath    string
        endpoint         string
        defaultThreshold float64
    }

    var myChecksConfig = checksConfig{
        scope:            "https://www.googleapis.com/auth/checks",
        credsFilePath:    "path/to/your/secret.json",
        endpoint:         "https://checks.googleapis.com",
        defaultThreshold: 0.6,
    }

    func newChecksService(ctx context.Context, cfg checksConfig) (*checks.Service, error) {
        return checks.NewService(
            ctx,
            option.WithEndpoint(cfg.endpoint),
            option.WithCredentialsFile(cfg.credsFilePath),
            option.WithScopes(cfg.scope),
        )
    }

    // The prompt parameter carries the conversational context for the content.
    // It is not named "context" so that it does not shadow the context package.
    func fetchChecksViolationResults(ctx context.Context, content string, prompt string) (*checks.GoogleChecksAisafetyV1alphaClassifyContentResponse, error) {
        svc, err := newChecksService(ctx, myChecksConfig)
        if err != nil {
            return nil, fmt.Errorf("failed to create checks service: %w", err)
        }

        req := &checks.GoogleChecksAisafetyV1alphaClassifyContentRequest{
            Context: &checks.GoogleChecksAisafetyV1alphaClassifyContentRequestContext{
                Prompt: prompt,
            },
            Input: &checks.GoogleChecksAisafetyV1alphaClassifyContentRequestInputContent{
                TextInput: &checks.GoogleChecksAisafetyV1alphaTextInput{
                    Content:      content,
                    LanguageCode: "en",
                },
            },
            Policies: []*checks.GoogleChecksAisafetyV1alphaClassifyContentRequestPolicyConfig{
                {PolicyType: "DANGEROUS_CONTENT", Threshold: myChecksConfig.defaultThreshold},
                {PolicyType: "HATE_SPEECH"}, // default Checks-defined threshold is used
                // ... add more policies
            },
        }

        response, err := svc.Aisafety.ClassifyContent(req).Do()
        if err != nil {
            return nil, fmt.Errorf("failed to classify content: %w", err)
        }
        return response, nil
    }

    // Imitates retrieving the input prompt from the user.
    func fetchUserPrompt() string {
        return "How do I bake a cake?"
    }

    // Imitates the call to an LLM endpoint.
    func fetchLLMResponse(prompt string) string {
        return "Mix, bake, cool, frost, enjoy."
    }

    // Reports whether the content violates any of the configured policies.
    func hasViolations(ctx context.Context, content string, prompt string) (bool, error) {
        classificationResults, err := fetchChecksViolationResults(ctx, content, prompt)
        if err != nil {
            // The error is already wrapped by fetchChecksViolationResults.
            return false, err
        }
        for _, policyResult := range classificationResults.PolicyResults {
            if policyResult.ViolationResult == "VIOLATIVE" {
                return true, nil
            }
        }
        return false, nil
    }

    func main() {
        ctx := context.Background()

        userPrompt := fetchUserPrompt()
        hasInputViolations, err := hasViolations(ctx, userPrompt, "")
        // Fail closed: a failed check blocks the request instead of letting
        // unchecked content through. Adjust this to your own risk tolerance.
        if err != nil || hasInputViolations {
            fmt.Println("Sorry, I can't help you with this request.")
            return
        }

        llmResponse := fetchLLMResponse(userPrompt)
        hasOutputViolations, err := hasViolations(ctx, llmResponse, userPrompt)
        if err != nil || hasOutputViolations {
            fmt.Println("Sorry, I can't help you with this request.")
            return
        }

        fmt.Println(llmResponse)
    }
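The blocking flow above can also be expressed as a small wrapper that takes the classifier and the model as plain callables, which makes the blocking logic easy to test without network access. This is a sketch under assumptions: `guarded_reply`, `classify`, and `generate` are hypothetical names, and treating a failed Guardrails call as a block (failing closed) is one possible design choice, not documented API behavior.

```python
REFUSAL = "Sorry, I can't help you with this request."


def guarded_reply(prompt, classify, generate):
    """Returns the model reply, or a refusal if input or output is flagged.

    classify(content, context) must return True when content is violative.
    generate(prompt) must return the model's reply as a string.
    Any exception raised by classify is treated as a violation.
    """

    def is_blocked(content, context=""):
        try:
            return bool(classify(content, context))
        except Exception:
            # Assumption: prefer blocking over showing unchecked content.
            return True

    # Check the user input before spending time on generation.
    if is_blocked(prompt):
        return REFUSAL
    reply = generate(prompt)
    # Check the model output, passing the prompt as context.
    if is_blocked(reply, prompt):
        return REFUSAL
    return reply


# Usage with stand-in callables instead of real API and LLM calls.
reply = guarded_reply(
    "How do I bake a cake?",
    classify=lambda content, context="": False,
    generate=lambda prompt: "Mix, bake, cool, frost, enjoy.",
)
print(reply)
```

In a real application, `classify` could wrap a function such as `has_violations` from the Python example, and `generate` could wrap the LLM call. Whether to fail open or closed on API errors depends on your risk tolerance.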

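Stream LLM output to Guardrails

The following examples stream LLM output to the Guardrails API, which can lower the latency that users perceive. Because the context is incomplete, this approach can produce false positives, so make sure the LLM output contains enough context for Guardrails to assess it accurately before you call the API.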
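To make the accumulate-then-check pattern concrete, here is a self-contained sketch. `MockModel` below is a hypothetical stand-in for a streaming LLM client: the snippets in this section use a class of that name without showing its definition, so the attribute names mirror those snippets but the implementation is a guess. `check` is any callable that receives the text accumulated so far and the user prompt, for example a function that calls the Guardrails API.

```python
class MockModel:
    """Hypothetical streaming model that yields a fixed reply in chunks."""

    def __init__(self, user_prompt, chunks):
        self.userPrompt = user_prompt
        self._chunks = list(chunks)
        self.chunkCounter = 0  # Number of chunks handed out so far.

    @property
    def finished(self):
        return self.chunkCounter >= len(self._chunks)

    def next_chunk(self):
        chunk = self._chunks[self.chunkCounter]
        self.chunkCounter += 1
        return chunk


def stream_with_checks(model, check, context_threshold=2):
    """Accumulates chunks and calls check() once enough context exists."""
    llm_response = ""
    while not model.finished:
        llm_response += model.next_chunk() + " "
        # Skip the first chunks: short fragments lack context and are more
        # likely to be misjudged.
        if model.chunkCounter > context_threshold:
            check(llm_response, model.userPrompt)
    return llm_response.strip()


# Record what would have been sent to Guardrails instead of calling the API.
checked = []
model = MockModel(
    "It's a sunny day and you want to buy ice cream.",
    ["What a lovely day", "to get some ice cream.", "Is the shop", "open?"],
)
full_text = stream_with_checks(
    model, lambda text, prompt: checked.append(text)
)
print(len(checked), "checks for:", full_text)
```

Each check sends the whole accumulated text, not just the newest chunk, so later calls see more context at the cost of larger requests. The value of `context_threshold` is a tuning knob in this sketch and would need to be chosen for your own chunk sizes.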

Synchronous Guardrails call

Python

    # MockModel stands in for a streaming LLM client and is not shown here.
    # fetch_user_prompt, fetch_llm_response, and log_violations are the
    # functions from the logging example.
    if __name__ == '__main__':
        user_prompt = fetch_user_prompt()
        my_llm_model = MockModel(
            user_prompt, fetch_llm_response(user_prompt)
        )
        llm_response = ""
        # Minimum number of LLM chunks needed before we will call Guardrails.
        context_threshold = 2
        while not my_llm_model.finished:
            chunk = my_llm_model.next_chunk()
            llm_response += str(chunk)
            if my_llm_model.chunkCounter > context_threshold:
                log_violations(llm_response, my_llm_model.userPrompt)

Go

    // mockModel stands in for a streaming LLM client and is not shown here.
    // logViolations is the function from the logging example.
    func main() {
        ctx := context.Background()

        model := mockModel{
            userPrompt: "It's a sunny day and you want to buy ice cream.",
            response:   []string{"What a lovely day", "to get some ice cream.", "is the shop open?"},
        }

        // Minimum number of LLM chunks needed before we will call Guardrails.
        const contextThreshold = 2

        var llmResponse string
        for !model.finished {
            chunk := model.nextChunk()
            llmResponse += chunk + " "
            if model.chunkCounter > contextThreshold {
                if err := logViolations(ctx, llmResponse, model.userPrompt); err != nil {
                    // Handle error
                }
            }
        }
    }

Asynchronous Guardrails call

Python

    import asyncio


    # MockModel stands in for a streaming LLM client that supports
    # "async for" and is not shown here. fetch_user_prompt,
    # fetch_llm_response, and log_violations are the functions from the
    # logging example.
    async def main():
        user_prompt = fetch_user_prompt()
        my_llm_model = MockModel(
            user_prompt, fetch_llm_response(user_prompt)
        )
        llm_response = ""
        # Minimum number of LLM chunks needed before we will call Guardrails.
        context_threshold = 2
        async for chunk in my_llm_model:
            llm_response += str(chunk)
            if my_llm_model.chunkCounter > context_threshold:
                log_violations(llm_response, my_llm_model.userPrompt)


    asyncio.run(main())

Go

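    // mockModel stands in for a streaming LLM client and is not shown here.
    // logViolations is the function from the logging example.
    func main() {
        ctx := context.Background()
        textChannel := make(chan string)

        model := mockModel{
            userPrompt: "It's a sunny day and you want to buy ice cream.",
            response:   []string{"What a lovely day", "to get some ice cream.", "is the shop open?"},
        }

        var llmResponse string

        // Minimum number of LLM chunks needed before we will call Guardrails.
        const contextThreshold = 2

        go model.streamToChannel(textChannel)

        // Count received chunks locally. Reading model.chunkCounter here could
        // race with the streaming goroutine if it updates that field.
        chunkCount := 0
        for text := range textChannel {
            chunkCount++
            llmResponse += text + " "
            if chunkCount > contextThreshold {
                if err := logViolations(ctx, llmResponse, model.userPrompt); err != nil {
                    // Handle error
                }
            }
        }
    }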

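FAQ

What should I do if I have reached my quota limit for the Guardrails API?

To request a quota increase, email checks-support@google.com with your request. Include the following information in your email:

- Your Google Cloud project number: This helps us quickly identify your account.

- Details about your use case: Explain how you are using the Guardrails API.

- Desired quota amount: Specify how much additional quota you need.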