PageSpeed Insights (PSI) reports on the user experience of a page on both mobile and desktop devices, and provides suggestions on how that page may be improved.

PSI provides both lab and field data about a page. Lab data is useful for debugging issues because it is collected in a controlled environment, but it may not capture real-world bottlenecks. Field data is useful for capturing true, real-world user experience, but it has a more limited set of metrics. See How To Think About Speed Tools for more information on the two types of data.

Real-user experience data

Real-user experience data in PSI is powered by the Chrome User Experience Report (CrUX) dataset. PSI reports real users' First Contentful Paint (FCP), Interaction to Next Paint (INP), Largest Contentful Paint (LCP), and Cumulative Layout Shift (CLS) experiences over the previous 28-day collection period. PSI also reports experiences for the experimental metric Time to First Byte (TTFB).

To show user experience data for a given page, there must be enough data for the page to be included in the CrUX dataset. A page might not have enough data if it was published recently or has too few samples from real users. When this happens, PSI falls back to origin-level granularity, which covers all user experiences on all pages of the website. Sometimes the origin also has insufficient data, in which case PSI cannot show any real-user experience data.
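The fallback order described above can be sketched as a small selection function. This is only an illustration: `select_field_data` and its arguments are hypothetical names, and `None` is assumed to stand for "not enough data in CrUX". It does not reflect how PSI is implemented internally.

```python
from typing import Optional, Tuple


def select_field_data(
    page_data: Optional[dict], origin_data: Optional[dict]
) -> Tuple[str, Optional[dict]]:
    """Pick which real-user data to show, preferring the page over the origin.

    Each argument is a hypothetical metrics dictionary, or None when that
    level does not have enough samples.
    """
    if page_data is not None:
        return ("page", page_data)
    if origin_data is not None:
        # Page-level data is missing, so fall back to the whole origin.
        return ("origin", origin_data)
    # Neither level has enough data: nothing can be shown.
    return ("none", None)


level, data = select_field_data(None, {"LCP": 2300})
print(level)  # origin
```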

Assessing quality of experiences

PSI classifies the quality of user experiences into three buckets: Good, Needs Improvement, or Poor. PSI sets the following thresholds in alignment with the Web Vitals initiative:

| | Good | Needs Improvement | Poor |
|---|---|---|---|
| FCP | [0, 1800 ms] | (1800 ms, 3000 ms] | over 3000 ms |
| LCP | [0, 2500 ms] | (2500 ms, 4000 ms] | over 4000 ms |
| CLS | [0, 0.1] | (0.1, 0.25] | over 0.25 |
| INP | [0, 200 ms] | (200 ms, 500 ms] | over 500 ms |
| TTFB (experimental) | [0, 800 ms] | (800 ms, 1800 ms] | over 1800 ms |
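As a rough illustration of how the interval notation in the table reads, the following sketch maps a single metric value to its bucket. The `THRESHOLDS` dictionary and the `classify` function are hypothetical names introduced here; only the numeric limits come from the table.

```python
# Inclusive upper bounds of the "good" and "needs improvement" buckets,
# copied from the threshold table. Times are in milliseconds; CLS is unitless.
THRESHOLDS = {
    "FCP": (1800, 3000),
    "LCP": (2500, 4000),
    "CLS": (0.1, 0.25),
    "INP": (200, 500),
    "TTFB": (800, 1800),
}


def classify(metric: str, value: float) -> str:
    """Return the bucket name for one observed metric value."""
    if value < 0:
        raise ValueError("metric values cannot be negative")
    good_max, needs_improvement_max = THRESHOLDS[metric]
    if value <= good_max:
        return "good"
    if value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


print(classify("LCP", 3200))  # needs improvement
```

Both upper bounds are inclusive, so an LCP of exactly 2500 ms still counts as good, while 2501 ms falls into needs improvement.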

Distribution and selected metric values

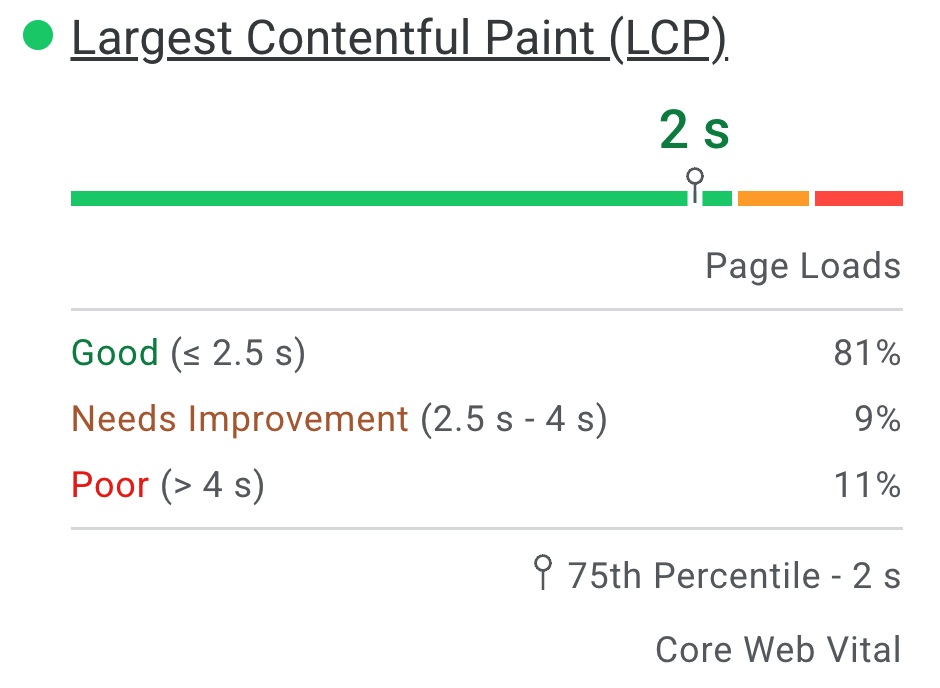

PSI presents a distribution of these metrics so that developers can understand the range of experiences for that page or origin. The distribution is split into three categories: Good, Needs Improvement, and Poor, which are shown as green, amber, and red bars. For example, seeing 11% within the amber bar for LCP indicates that 11% of all observed LCP values fall between 2500 ms and 4000 ms.

Above the distribution bars, PSI reports the 75th percentile for all metrics. The 75th percentile is selected so that developers can understand the most frustrating user experiences on their site. These field metric values are classified as Good, Needs Improvement, or Poor by applying the same thresholds shown above.
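To make the relationship between the distribution bars and the 75th percentile concrete, here is a minimal sketch over a made-up list of LCP samples. The sample values and function names are hypothetical, and the nearest-rank percentile method is an assumption chosen for simplicity; this page does not describe how CrUX aggregates its samples.

```python
import math


def distribution(values, good_max, needs_improvement_max):
    """Return the fraction of samples that fall into each bucket."""
    n = len(values)
    good = sum(1 for v in values if v <= good_max)
    poor = sum(1 for v in values if v > needs_improvement_max)
    return {
        "good": good / n,
        "needs_improvement": (n - good - poor) / n,
        "poor": poor / n,
    }


def percentile_nearest_rank(values, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


# Hypothetical LCP samples in milliseconds.
lcp_samples = [1200, 1800, 2100, 2400, 2600, 3900, 4100, 5000]

print(distribution(lcp_samples, 2500, 4000))
# {'good': 0.5, 'needs_improvement': 0.25, 'poor': 0.25}
print(percentile_nearest_rank(lcp_samples, 75))  # 3900
```

In this made-up data set half of the page loads are good, yet the 75th percentile (3900 ms) lands in the needs improvement range, which is the value that would be classified.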

Core Web Vitals

Core Web Vitals are a common set of performance signals critical to all web experiences. The Core Web Vitals metrics are INP, LCP, and CLS, and they may be aggregated at either the page or origin level. For aggregations with sufficient data in all three metrics, the aggregation passes the Core Web Vitals assessment if the 75th percentiles of all three metrics are Good. Otherwise, the aggregation does not pass the assessment. If the aggregation has insufficient data for INP, it passes the assessment if the 75th percentiles of both LCP and CLS are Good. If either LCP or CLS has insufficient data, the page-level or origin-level aggregation cannot be assessed.
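The assessment rules above can be written out as a short decision function. This is a sketch under stated assumptions: `assess_core_web_vitals` is a hypothetical name, `None` is assumed to mean "insufficient data" for a metric, and the Good limits are the ones from the threshold table.

```python
from typing import Dict, Optional

# Upper bound (inclusive) of the Good bucket for each Core Web Vital.
GOOD_MAX = {"LCP": 2500, "INP": 200, "CLS": 0.1}


def assess_core_web_vitals(p75: Dict[str, Optional[float]]) -> Optional[bool]:
    """Return True (passes), False (does not pass), or None (cannot be assessed).

    p75 maps a metric name to its 75th percentile value, or to None when
    the aggregation has insufficient data for that metric.
    """
    lcp, cls, inp = p75.get("LCP"), p75.get("CLS"), p75.get("INP")
    if lcp is None or cls is None:
        # LCP and CLS are both required for any assessment.
        return None
    metrics = {"LCP": lcp, "CLS": cls}
    if inp is not None:
        # INP only counts when there is enough data for it.
        metrics["INP"] = inp
    return all(value <= GOOD_MAX[name] for name, value in metrics.items())


print(assess_core_web_vitals({"LCP": 2000, "CLS": 0.05, "INP": None}))  # True
```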

Differences between field data in PSI and CrUX

The difference between the field data in PSI and the CrUX dataset on BigQuery is that PSI's data is updated daily, while the BigQuery dataset is updated monthly and limited to origin-level data. Both data sources represent trailing 28-day periods.

Lab diagnostics

PSI uses Lighthouse to analyze the given URL in a simulated environment for the Performance, Accessibility, Best Practices, and SEO categories.

Score

At the top of the section are scores for each category, determined by running Lighthouse to collect and analyze diagnostic information about the page. A score of 90 or above is considered good, a score of 50 to 89 needs improvement, and a score below 50 is considered poor.
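The score ranges above translate directly into a small helper. The function name `classify_score` is hypothetical, and the sketch assumes scores are whole numbers from 0 to 100.

```python
def classify_score(score: int) -> str:
    """Map a 0-100 category score to the rating described above."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score >= 90:
        return "good"
    if score >= 50:
        return "needs improvement"
    return "poor"


print(classify_score(89))  # needs improvement
```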

Metrics

The Performance category also shows the page's performance on different metrics, including First Contentful Paint, Largest Contentful Paint, Speed Index, Cumulative Layout Shift, Time to Interactive, and Total Blocking Time.

Each metric is scored and labeled with one of the following icons:

- Good is indicated with a green circle

- Needs Improvement is indicated with an amber informational square

- Poor is indicated with a red warning triangle

Audits

Within each category are audits that provide information on how to improve the page's user experience. See the Lighthouse documentation for a detailed breakdown of each category's audits.

Frequently asked questions (FAQ)

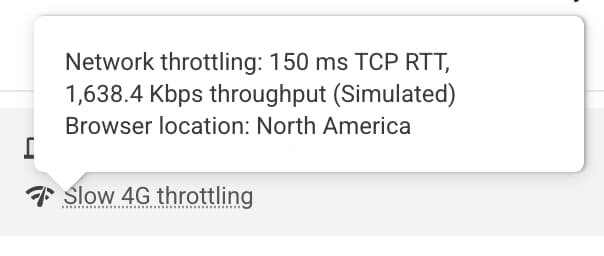

What device and network conditions does Lighthouse use to simulate a page load?

Currently, Lighthouse simulates the page load conditions of a mid-tier device (Moto G4) on a mobile network for mobile, and an emulated desktop with a wired connection for desktop. PageSpeed also runs in a data center, which can vary based on network conditions. You can check the location where the test ran by looking at the environment block of the Lighthouse report.

Note: PageSpeed will report running in one of North America, Europe, or Asia.

Why do the field data and lab data sometimes contradict each other?

The field data is a historical report about how a particular URL has performed, and represents anonymized performance data from real-world users on a variety of devices and network conditions. The lab data is based on a simulated load of a page on a single device and a fixed set of network conditions. As a result, the values may differ. See Why lab and field data can be different (and what to do about it) for more information.

Why is the 75th percentile chosen for all metrics?

Our goal is to make sure that pages work well for the majority of users. Focusing on the 75th percentile values for our metrics ensures that pages provide a good user experience under the most difficult device and network conditions. See Defining the Core Web Vitals metrics thresholds for more information.

What is a good score for the lab data?

Any green score (90+) is considered good, but note that having good lab data does not necessarily mean real-user experiences will also be good.

Why does the performance score change from run to run? I didn't change anything on my page.

Variability in performance measurement is introduced through a number of channels with different levels of impact. Several common sources of metric variability are local network availability, client hardware availability, and client resource contention.

Why is the real-user CrUX data not available for a URL or origin?

The Chrome User Experience Report aggregates real-world speed data from opted-in users. It requires that a URL be public (crawlable and indexable) and have a sufficient number of distinct samples to provide a representative, anonymized view of the performance of the URL or origin.

More questions?

If you have a question about using PageSpeed Insights that is specific and answerable, ask your question in English on Stack Overflow.

If you have general feedback or questions about PageSpeed Insights, or you want to start a general discussion, start a thread in the mailing list.

If you have general questions about the Web Vitals metrics, start a thread in the web-vitals-feedback discussion group.
