Learning Rate and Convergence

This is the first of several Playground exercises. Playground is a program developed especially for this course to teach machine learning principles. Each Playground exercise in this course includes an embedded Playground instance with presets.

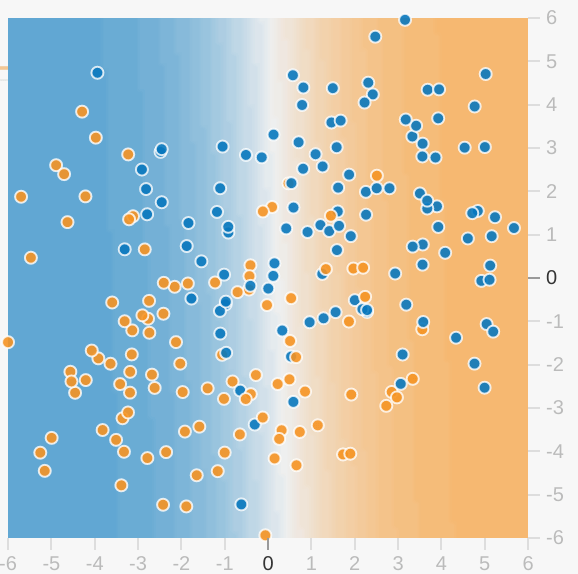

Each Playground exercise generates a dataset. The label for this dataset has two possible values. You could think of those two values as spam vs. not spam, or perhaps healthy trees vs. sick trees. The goal of most exercises is to tweak various hyperparameters to build a model that successfully classifies (separates or distinguishes) one label value from the other. Note that most datasets contain a certain amount of noise, which makes it impossible to classify every example correctly.
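To make the idea of a noisy two-label dataset concrete, here is a minimal sketch. It is not how Playground generates its data: the function name `make_noisy_dataset`, the labeling rule (`x1 + x2 > 0`), and the `noise` parameter are all assumptions chosen for illustration.

```python
import random

def make_noisy_dataset(n=200, noise=0.1, seed=0):
    """Return a list of (x1, x2, label) triples with labels 0 or 1.

    Hypothetical generator: the "true" label is 1 when x1 + x2 > 0.
    Each label is then flipped with probability `noise`, which mimics
    examples that no simple model can classify correctly.
    """
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x1 = rng.uniform(-1, 1)
        x2 = rng.uniform(-1, 1)
        label = 1 if x1 + x2 > 0 else 0
        if rng.random() < noise:
            label = 1 - label  # flip the label to simulate noise
        data.append((x1, x2, label))
    return data

data = make_noisy_dataset()
print(data[:3])
```

Because some labels are flipped at random, even a model that learns the underlying rule perfectly will misclassify those flipped examples.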

The interface for this exercise provides three buttons:

| Name | What it Does |
|---|---|
| Reset | Resets Iterations to 0. Resets any weights that the model had already learned. |
| Step | Advances one iteration. With each iteration, the model changes, sometimes subtly and sometimes dramatically. |
| Regenerate | Generates a new dataset. Does not reset Iterations. |

In this first Playground exercise, you'll experiment with learning rate by performing two tasks.

Task 1: Notice the Learning rate menu at the top-right of Playground. The given Learning rate (3) is very high. Observe how that high Learning rate affects your model by clicking the "Step" button 10 or 20 times. After each early iteration, notice how the model visualization changes dramatically. You might even see some instability after the model appears to have converged. Also notice the lines running from x1 and x2 to the model visualization. The thickness of each line indicates the weight of that feature in the model. That is, a thick line indicates a high weight.
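As a rough analogy for what one click of "Step" does, the sketch below applies one gradient-descent update to a two-feature logistic model with weights for x1 and x2. This is an assumption for illustration only: Playground's actual model and optimizer are not described here, and the dataset `DATA` and the helper names are hypothetical.

```python
import math

# Tiny hand-made dataset (hypothetical): (x1, x2, label), labels are 0 or 1.
DATA = [
    (0.9, 0.8, 1), (0.7, 0.2, 1), (0.3, 0.9, 1), (0.5, 0.5, 1),
    (-0.8, -0.6, 0), (-0.4, -0.9, 0), (-0.7, -0.1, 0), (-0.2, -0.5, 0),
]

def predict(w, x1, x2):
    """Sigmoid of the weighted sum; w = [w1, w2, bias]."""
    z = w[0] * x1 + w[1] * x2 + w[2]
    return 1.0 / (1.0 + math.exp(-z))

def log_loss(w, data):
    """Average log loss of the model over the dataset."""
    eps = 1e-12  # keeps log() away from 0
    total = 0.0
    for x1, x2, y in data:
        p = min(max(predict(w, x1, x2), eps), 1 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)

def step(w, data, learning_rate):
    """One full-batch gradient-descent update of [w1, w2, bias]."""
    grad = [0.0, 0.0, 0.0]
    for x1, x2, y in data:
        err = predict(w, x1, x2) - y
        grad[0] += err * x1
        grad[1] += err * x2
        grad[2] += err
    n = len(data)
    return [wi - learning_rate * gi / n for wi, gi in zip(w, grad)]

# Ten "Step" clicks at the high learning rate used in Task 1.
w = [0.0, 0.0, 0.0]
for i in range(10):
    w = step(w, DATA, learning_rate=3.0)
    print(i + 1, [round(wi, 3) for wi in w], round(log_loss(w, DATA), 4))
```

The size of each update scales directly with `learning_rate`, so at a rate of 3 the weights move a long way in the first few steps. In the Playground visualization, that shows up as large changes in the model and in the thickness of the x1 and x2 lines.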

Task 2: Do the following:

- Press the Reset button.

- Lower the Learning rate.

- Press the Step button several times.

How did the lower learning rate affect convergence? Examine both the number of steps needed for the model to converge and how smoothly and steadily the model converges. Experiment with even lower values of learning rate. Can you find a learning rate too low to be useful? (You'll find a discussion just below the exercise.)
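The relationship between learning rate and the number of steps to converge can also be explored outside Playground. The sketch below is a toy, not Playground's model: it runs gradient descent on the one-parameter loss `loss(w) = w**2`, and the function name `steps_to_converge`, the tolerance, and the step limit are all assumptions. The specific rates at which this toy diverges or stalls apply only to this loss.

```python
def steps_to_converge(learning_rate, start=1.0, tol=1e-3, max_steps=10_000):
    """Run gradient descent on loss(w) = w**2.

    Returns the number of steps until |w| < tol, or None if the run
    diverges or fails to converge within max_steps.
    """
    w = start
    for step in range(1, max_steps + 1):
        grad = 2 * w                  # derivative of w**2
        w -= learning_rate * grad     # gradient-descent update
        if abs(w) < tol:
            return step
        if abs(w) > 1e6:
            return None               # diverged: rate too high for this loss
    return None                       # too slow: step limit reached

for lr in (1.5, 0.9, 0.1, 0.01, 0.0001):
    print(f"learning rate {lr}: steps = {steps_to_converge(lr)}")
```

In this toy, each update multiplies `w` by `1 - 2 * learning_rate`. A rate above 1 makes `w` grow without bound, a rate between 0.5 and 1 makes `w` flip sign on every step while it shrinks, and a very small rate shrinks `w` so slowly that the step limit is reached first.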